1. Open Container Initiative

The Open Container Initiative (OCI) is an open governance structure for the express purpose of creating open industry standards around container formats and runtimes.

Docker donated its container format and runtime, runC, to the OCI in 2015 to serve as the cornerstone of this new effort.

2. runC

runC follows the Unix principle that several simple components are better than a single, complicated one.

runc is a CLI tool for spawning and running containers according to the OCI specification; it only supports Linux.

runhcs is a fork of runc for Windows: a command-line client for running applications packaged according to the OCI format, and a compliant implementation of the OCI specification.

```
$ sudo runc
NAME:
   runc - Open Container Initiative runtime

runc is a command line client for running applications packaged according to
the Open Container Initiative (OCI) format and is a compliant implementation of the
Open Container Initiative specification.

runc integrates well with existing process supervisors to provide a production
container runtime environment for applications. It can be used with your
existing process monitoring tools and the container will be spawned as a
direct child of the process supervisor.

Containers are configured using bundles. A bundle for a container is a directory
that includes a specification file named "config.json" and a root filesystem.
The root filesystem contains the contents of the container.

GLOBAL OPTIONS:
   --root value  root directory for storage of container state (this should be located in tmpfs) (default: "/run/user/1000/runc")
```
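The bundle layout described in the help text can be checked with a small helper before you hand a directory to `runc run`. This is a minimal sketch. `check_bundle` is a hypothetical function name, not part of runc. It assumes the root filesystem lives in the `rootfs` subdirectory, which is the default `root.path` that `runc spec` writes. A bundle whose `config.json` points elsewhere would need a different check.

```bash
#!/usr/bin/env bash
# check_bundle DIR (hypothetical helper, not part of runc)
# Succeeds if DIR looks like an OCI bundle: a config.json plus a root
# filesystem. Assumes the default root.path "rootfs" written by `runc spec`.
check_bundle() {
  local bundle="${1:?usage: check_bundle DIR}"
  if [[ ! -f "$bundle/config.json" ]]; then
    echo "missing $bundle/config.json" >&2
    return 1
  fi
  if [[ ! -d "$bundle/rootfs" ]]; then
    echo "missing $bundle/rootfs directory" >&2
    return 1
  fi
  echo "ok: $bundle"
}
```

A typical flow looks like this:

1. Create `mycontainer/rootfs` and fill it, for example with `docker export $(docker create busybox) | tar -C mycontainer/rootfs -xf -`.
2. Run `runc spec` inside `mycontainer` to generate `config.json`.
3. Call `check_bundle mycontainer`.
4. Start the container with `sudo runc run --bundle mycontainer demo`.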

- List containers started by runc with containerd using Docker, with the given root (default `/var/run/docker/runtime-runc/moby`):

```
$ dockerd --help | grep exec-root
      --exec-root string                        Root directory for execution state files (default "/var/run/docker")
$ sudo runc --root /var/run/docker/runtime-runc/moby list
ID                                                                 PID         STATUS      BUNDLE                                                                                                 CREATED                          OWNER
011d6fd316e09fc8a5ff3b226d35b9394cd93c57604c91aa52573559368a822c   940971      running     /run/containerd/io.containerd.runtime.v2.task/moby/011d6fd316e09fc8a5ff3b226d35b9394cd93c57604c91aa52573559368a822c   2021-11-25T04:10:25.216394136Z   root
. . .
```

- List containers started by runc with containerd using Kubernetes, with the given root (default `/run/containerd/runc/k8s.io`):

```
$ containerd config dump | grep '^state'
state = "/run/containerd"
$ sudo runc --root /run/containerd/runc/k8s.io list
ID                                                                 PID         STATUS      BUNDLE                                                                                                   CREATED                          OWNER
14542e5b60446f87af20e200e019a15d1ad1509eb506a2068266b9f98694c704   1191        running     /run/containerd/io.containerd.runtime.v2.task/k8s.io/14542e5b60446f87af20e200e019a15d1ad1509eb506a2068266b9f98694c704   2021-12-08T12:55:21.616268255Z   root
. . .
$ sudo runc --root /run/containerd/runc/k8s.io state 14542e5b60446f87af20e200e019a15d1ad1509eb506a2068266b9f98694c704
{
  "ociVersion": "1.0.2-dev",
  "id": "14542e5b60446f87af20e200e019a15d1ad1509eb506a2068266b9f98694c704",
  "pid": 1191,
  "status": "running",
  "bundle": "/run/containerd/io.containerd.runtime.v2.task/k8s.io/14542e5b60446f87af20e200e019a15d1ad1509eb506a2068266b9f98694c704",
  "rootfs": "/run/containerd/io.containerd.runtime.v2.task/k8s.io/14542e5b60446f87af20e200e019a15d1ad1509eb506a2068266b9f98694c704/rootfs",
  "created": "2021-12-08T12:55:21.616268255Z",
  "annotations": {
    "io.kubernetes.cri.container-name": "kube-flannel",
    "io.kubernetes.cri.container-type": "container",
    "io.kubernetes.cri.image-name": "quay.io/coreos/flannel:v0.15.0",
    "io.kubernetes.cri.sandbox-id": "f0c7bf11fac17f29a8df40f1d937ec35df81e202e42ea8a604e37806aebbb662",
    "io.kubernetes.cri.sandbox-name": "kube-flannel-ds-6xpbj",
    "io.kubernetes.cri.sandbox-namespace": "kube-system"
  },
  "owner": ""
}
$ sudo runc --root /run/containerd/runc/k8s.io ps 14542e5b60446f87af20e200e019a15d1ad1509eb506a2068266b9f98694c704
UID          PID    PPID  C STIME TTY          TIME CMD
root        1191     806  0 Dec08 ?        00:00:25 /opt/bin/flanneld --ip-masq --kube-subnet-mgr
```
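Both examples follow the same layout: runc's state root is a base directory plus the containerd namespace. Docker uses `/var/run/docker/runtime-runc` plus `moby`, and Kubernetes uses `/run/containerd/runc` plus `k8s.io`. Assuming that layout holds in general, the sketch below runs `runc list` for every namespace found under a base directory. `list_runc_containers` is a hypothetical name. Run it from a root shell, since the state directories are owned by root.

```bash
#!/usr/bin/env bash
# list_runc_containers [BASE] (hypothetical helper; run as root)
# Assumes runc state roots are laid out as BASE/<containerd namespace>, e.g.
#   /run/containerd/runc/k8s.io        (containerd CRI, ctr)
#   /var/run/docker/runtime-runc/moby  (Docker)
list_runc_containers() {
  local base="${1:-/run/containerd/runc}" dir
  for dir in "$base"/*/; do
    [[ -d "$dir" ]] || continue      # no match: the glob stays literal
    dir="${dir%/}"
    echo "== namespace: ${dir##*/} (runc --root $dir)"
    runc --root "$dir" list
  done
}
```

For Docker-managed containers, call it as `list_runc_containers /var/run/docker/runtime-runc`.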

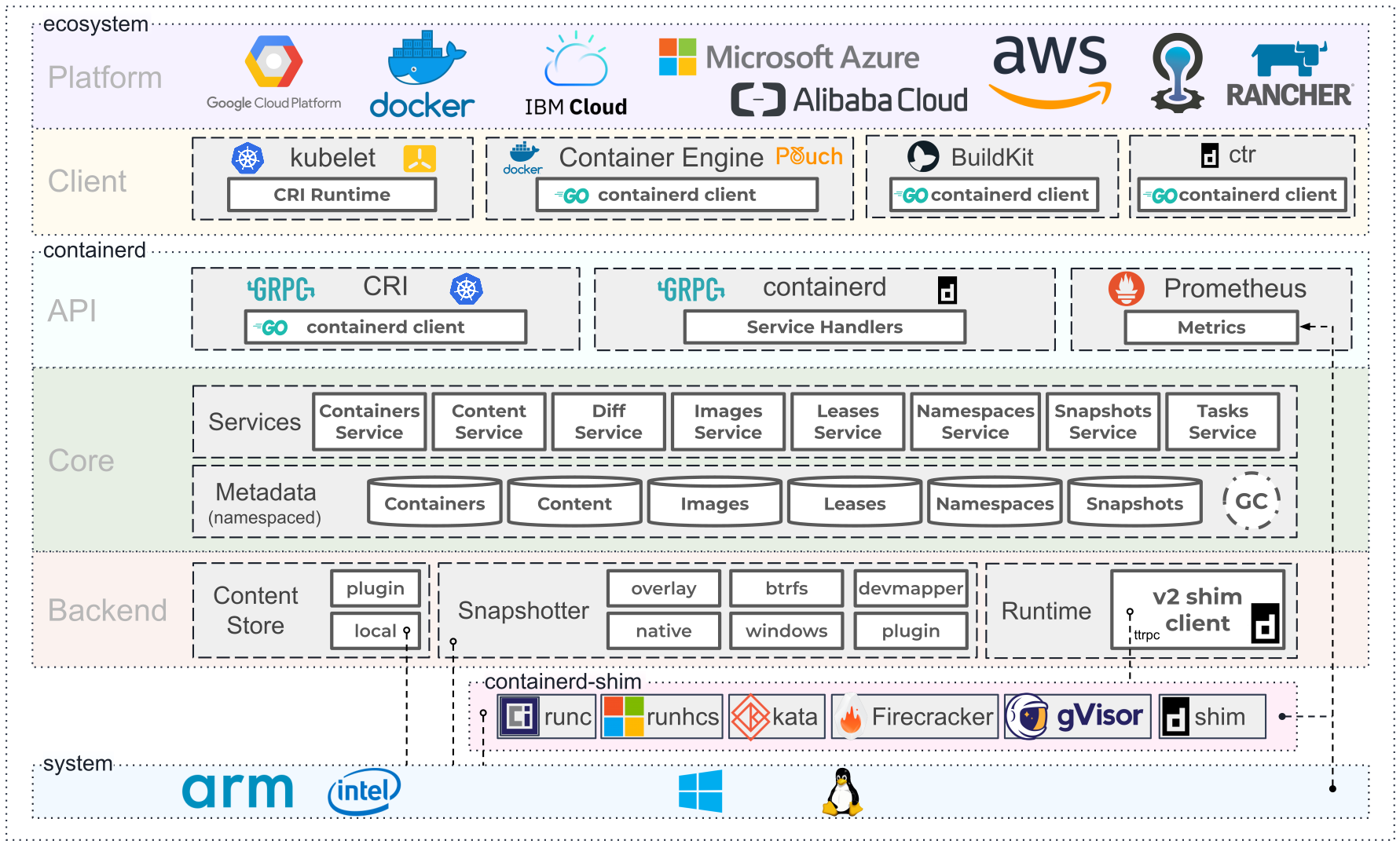

3. containerd

containerd is available as a daemon for Linux and Windows. It manages the complete container lifecycle of its host system, from image transfer and storage to container execution and supervision to low-level storage to network attachments and beyond.

containerd is designed to be embedded into a larger system, rather than being used directly by developers or end-users.

There are many different ways to use containerd:

- If you are a developer working on containerd, you can use the `ctr` tool to quickly test features and functionality without writing extra code.
- If you want to integrate containerd into your project, you can use a simple client package.

```
$ ctr
containerd CLI

USAGE:
   ctr [global options] command [command options] [arguments...]

VERSION:
   1.6.27

DESCRIPTION:

ctr is an unsupported debug and administrative client for interacting
with the containerd daemon. Because it is unsupported, the commands,
options, and operations are not guaranteed to be backward compatible or
stable from release to release of the containerd project.

COMMANDS:
   plugins, plugin            provides information about containerd plugins
   version                    print the client and server versions
   containers, c, container   manage containers
   content                    manage content
   events, event              display containerd events
   images, image, i           manage images
   leases                     manage leases
   namespaces, namespace, ns  manage namespaces
   pprof                      provide golang pprof outputs for containerd
   run                        run a container
   snapshots, snapshot        manage snapshots
   tasks, t, task             manage tasks
   install                    install a new package
   oci                        OCI tools
   deprecations
   shim                       interact with a shim directly
   help, h                    Shows a list of commands or help for one command

GLOBAL OPTIONS:
   --debug                      enable debug output in logs
   --address value, -a value    address for containerd's GRPC server (default: "/run/containerd/containerd.sock") [$CONTAINERD_ADDRESS]
   --timeout value              total timeout for ctr commands (default: 0s)
   --connect-timeout value      timeout for connecting to containerd (default: 0s)
   --namespace value, -n value  namespace to use with commands (default: "default") [$CONTAINERD_NAMESPACE]
   --help, -h                   show help
   --version, -v                print the version
```

- Save an image from Docker and import it into containerd:

```
$ docker save nginx:1.25 | xz -zv -T0 > nginx.1.25.tar.xz
  100 %      41.9 MiB / 182.0 MiB = 0.230   8.0 MiB/s       0:22
# with Docker's containerd image store (moby namespace), the export above is the same as:
# sudo ctr -n moby i export /dev/stdout docker.io/library/nginx:1.25 | xz -zv -T0 > nginx.1.25.tar.xz
$ xz -dk nginx.1.25.tar.xz
$ ls
nginx.1.25.tar  nginx.1.25.tar.xz
$ sudo ctr i import nginx.1.25.tar # import to the default namespace
unpacking docker.io/library/nginx:1.25 (sha256:7477fb7aa691ad976bdd0f12afd00c094e8bef473051e5125591f532efd21022)...done
$ sudo ctr ns ls
NAME    LABELS
default
k8s.io
moby
$ sudo ctr i ls # same as `sudo ctr -n default i ls`
REF                          TYPE                                                 DIGEST                                                                  SIZE      PLATFORMS   LABELS
docker.io/library/nginx:1.25 application/vnd.docker.distribution.manifest.v2+json sha256:7477fb7aa691ad976bdd0f12afd00c094e8bef473051e5125591f532efd21022 182.0 MiB linux/amd64 -
```

- Show information about containerd plugins:

```
$ sudo ctr plugin ls
TYPE                            ID          PLATFORMS      STATUS
io.containerd.content.v1        content     -              ok
. . .
io.containerd.snapshotter.v1    overlayfs   linux/amd64    ok
io.containerd.snapshotter.v1    zfs         linux/amd64    skip
io.containerd.metadata.v1       bolt        -              ok
. . .
io.containerd.grpc.v1           cri         linux/amd64    ok
$ sudo ctr plugin ls -d id==cri
Type:          io.containerd.grpc.v1
ID:            cri
Requires:
               io.containerd.event.v1
               io.containerd.service.v1
               io.containerd.warning.v1
Platforms:     linux/amd64
Exports:
               CRIVersionAlpha    v1alpha2
               CRIVersion         v1
```
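The import example above lands in containerd's `default` namespace, but the kubelet and `crictl` only see images in the `k8s.io` namespace. Below is a minimal sketch for moving an image from Docker into that namespace. `import_docker_image_to_k8s` is a hypothetical helper, and the sketch makes these assumptions:

- It runs as root, with both `docker` and `ctr` on the `PATH`.
- It goes through a temporary tarball, so it needs free space in `$TMPDIR` roughly the size of the image.

```bash
#!/usr/bin/env bash
# import_docker_image_to_k8s IMAGE (hypothetical helper; run as root)
# Exports IMAGE from Docker to a temporary tarball and imports it into the
# containerd namespace used by the CRI plugin (k8s.io).
import_docker_image_to_k8s() {
  local image="${1:?usage: import_docker_image_to_k8s IMAGE}" tarball rc
  tarball="$(mktemp)"
  # `docker save` writes an archive that `ctr images import` can read,
  # as the nginx example above shows
  docker save -o "$tarball" "$image" &&
    ctr -n k8s.io images import "$tarball"
  rc=$?
  rm -f "$tarball"
  return "$rc"
}
```

After running it from a root shell with `nginx:1.25`, `sudo crictl images` should list the image as well.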

3.1. containerd/config

containerd is meant to be a simple daemon to run on any system. It provides a minimal config with knobs to configure the daemon and what plugins are used when necessary.

```
$ containerd help
high performance container runtime

USAGE:
   containerd [global options] command [command options] [arguments...]

VERSION:
   1.6.27

DESCRIPTION:

containerd is a high performance container runtime whose daemon can be started
by using this command. If none of the *config*, *publish*, or *help* commands
are specified, the default action of the **containerd** command is to start the
containerd daemon in the foreground.

A default configuration is used if no TOML configuration is specified or located
at the default file location. The *containerd config* command can be used to
generate the default configuration for containerd. The output of that command
can be used and modified as necessary as a custom configuration.

COMMANDS:
   config    information on the containerd config
   publish   binary to publish events to containerd
   oci-hook  provides a base for OCI runtime hooks to allow arguments to be injected.
   help, h   Shows a list of commands or help for one command

GLOBAL OPTIONS:
   --config value, -c value     path to the configuration file (default: "/etc/containerd/config.toml")
   --log-level value, -l value  set the logging level [trace, debug, info, warn, error, fatal, panic]
   --address value, -a value    address for containerd's GRPC server
   --root value                 containerd root directory
   --state value                containerd state directory
   --help, -h                   show help
   --version, -v                print the version
```

While a few daemon-level options can be set from CLI flags, the majority of containerd's configuration is kept in the configuration file. In the containerd config file you will find settings for persistent and runtime storage locations, as well as gRPC, debug, and metrics addresses for the various APIs.

```
$ sudo containerd config dump # see the output of the final main config
. . .
root = "/var/lib/containerd"
state = "/run/containerd"
. . .
```
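To pull a single top-level value such as `root` or `state` out of that dump in a script, a small awk filter is enough. This is a sketch, not a full TOML parser. `toml_top_key` is a hypothetical name. It assumes the key is a top-level setting written before the first `[table]` header, which is how `containerd config dump` prints `root` and `state`.

```bash
#!/usr/bin/env bash
# toml_top_key KEY < config.toml (hypothetical helper, not a TOML parser)
# Prints the value of a top-level KEY, i.e. one that appears before the
# first [table] header, with surrounding double quotes removed.
toml_top_key() {
  local key="${1:?usage: toml_top_key KEY < config.toml}"
  awk -v key="$key" '
    /^[ \t]*\[/ { exit }                     # first table header: stop
    {
      line = $0
      sub(/^[ \t]+/, "", line)               # drop leading indentation
      if (index(line, key) != 1) next
      rest = substr(line, length(key) + 1)
      if (rest !~ /^[ \t]*=/) next           # e.g. rootless when key is root
      sub(/^[ \t]*=[ \t]*/, "", rest)
      sub(/[ \t]+$/, "", rest)
      gsub(/^"|"$/, "", rest)
      print rest
      exit
    }'
}
```

On the machine above, `sudo containerd config dump | toml_top_key state` would print `/run/containerd`.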

- `root` will be used to store any type of persistent data for containerd. Snapshots, content, metadata for containers and images, as well as any plugin data, will be kept in this location. The root is also namespaced for plugins that containerd loads: each plugin has its own directory where it stores data. containerd itself does not actually have any persistent data that it needs to store; its functionality comes from the plugins that are loaded.

```
/var/lib/containerd/
├── io.containerd.content.v1.content
│   └── ingest
├── io.containerd.metadata.v1.bolt
│   └── meta.db
├── io.containerd.runtime.v1.linux
├── io.containerd.runtime.v2.task
├── io.containerd.snapshotter.v1.btrfs
├── io.containerd.snapshotter.v1.native
│   └── snapshots
├── io.containerd.snapshotter.v1.overlayfs
│   └── snapshots
└── tmpmounts
```

- `state` will be used to store any type of ephemeral data. Sockets, pids, runtime state, mount points, and other plugin data that must not persist between reboots are stored in this location.

```
/run/containerd/
├── containerd.sock
├── containerd.sock.ttrpc
├── io.containerd.runtime.v1.linux
└── io.containerd.runtime.v2.task
```
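Each plugin directory is named after the plugin's type and ID. For example, `io.containerd.snapshotter.v1.overlayfs` corresponds to the `TYPE` and `ID` columns `io.containerd.snapshotter.v1` and `overlayfs` of `ctr plugin ls`. That means the layout can be mapped back to plugins. The sketch below assumes plugin IDs contain no dots, which holds for the directories listed above. `plugin_dirs` is a hypothetical helper name. Run it as root, since the root directory is owned by root.

```bash
#!/usr/bin/env bash
# plugin_dirs [ROOT] (hypothetical helper)
# Splits each io.containerd.* directory name under ROOT into
# "<plugin type><TAB><plugin id>", assuming the ID is the part after the
# last dot. Non-plugin entries such as tmpmounts are skipped.
plugin_dirs() {
  local root="${1:-/var/lib/containerd}" path name
  for path in "$root"/io.containerd.*; do
    [[ -d "$path" ]] || continue
    name="${path##*/}"
    printf '%s\t%s\n' "${name%.*}" "${name##*.}"
  done
}
```

The same helper works on the state directory, for example `plugin_dirs /run/containerd`.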

Both the root and state directories are namespaced for plugins.

You can also run `containerd config default` to print the default configuration. The following sample is the default `/etc/containerd/config.toml` installed with Docker CE:

```toml
disabled_plugins = ["cri"]

#root = "/var/lib/containerd"
#state = "/run/containerd"
#subreaper = true
#oom_score = 0

#[grpc]
#  address = "/run/containerd/containerd.sock"
#  uid = 0
#  gid = 0

#[debug]
#  address = "/run/containerd/debug.sock"
#  uid = 0
#  gid = 0
#  level = "info"
```

You need CRI support enabled to use containerd with Kubernetes. Make sure that cri is not included in the disabled_plugins list.
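The Docker CE default shown above can be detected and undone with two small helpers. This is a sketch with some limits:

- `cri_disabled` and `enable_cri` are hypothetical helper names.
- They only recognize a `disabled_plugins` list written on a single line.
- `enable_cri` only rewrites the exact `["cri"]` value, so any other list needs a manual edit.

Regenerating the file with `containerd config default` is the other common fix, since that output has an empty `disabled_plugins` list. Either way, restart containerd afterwards.

```bash
#!/usr/bin/env bash
# cri_disabled [CONFIG] (hypothetical helper)
# Succeeds if an uncommented disabled_plugins line lists the CRI plugin,
# either as "cri" or by its full ID "io.containerd.grpc.v1.cri".
cri_disabled() {
  local cfg="${1:-/etc/containerd/config.toml}"
  grep -Eq '^[[:space:]]*disabled_plugins[[:space:]]*=[[:space:]]*\[[^]]*"(io\.containerd\.grpc\.v1\.)?cri"' "$cfg"
}

# enable_cri [CONFIG] (hypothetical helper, GNU sed)
# Rewrites the Docker CE default disabled_plugins = ["cri"] to an empty list.
enable_cri() {
  local cfg="${1:-/etc/containerd/config.toml}"
  sed -i -E 's/^([[:space:]]*disabled_plugins[[:space:]]*=[[:space:]]*)\["cri"\]/\1[]/' "$cfg"
}
```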


3.2. containerd/plugins

At the end of the day, containerd's core is very small. The real functionality comes from plugins: snapshotters, runtimes, content stores and more are all plugins that are registered at runtime. Because these plugins are so different, containerd needs a way to provide type-safe configuration to each of them, and the only way to do that is via the config file rather than CLI flags.

3.2.1. Built-in Plugins

containerd uses plugins internally to ensure that internal implementations are decoupled, stable, and treated equally with external plugins. To see all the plugins containerd has, use `ctr plugins ls`.

```
$ sudo ctr plugin ls
TYPE                            ID          PLATFORMS      STATUS
io.containerd.content.v1        content     -              ok
io.containerd.snapshotter.v1    aufs        linux/amd64    error
io.containerd.snapshotter.v1    btrfs       linux/amd64    error
io.containerd.snapshotter.v1    devmapper   linux/amd64    error
io.containerd.snapshotter.v1    native      linux/amd64    ok
io.containerd.snapshotter.v1    overlayfs   linux/amd64    ok
io.containerd.snapshotter.v1    zfs         linux/amd64    error
io.containerd.metadata.v1       bolt        -              ok
io.containerd.differ.v1         walking     linux/amd64    ok
io.containerd.gc.v1             scheduler   -              ok
...
```

The output shows all the plugins, including those that did not load successfully. In this case aufs and zfs are expected not to load, since they are not supported on the machine. The logs will show why a plugin failed, but you can also get more details using the `-d` option.
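With many plugins, filtering the table for anything that is not `ok` makes the failures easier to spot. This sketch assumes the four-column `TYPE ID PLATFORMS STATUS` layout shown above. `failed_plugins` is a hypothetical name.

```bash
#!/usr/bin/env bash
# failed_plugins < "ctr plugin ls" output (hypothetical helper)
# Skips the header row and prints "ID<TAB>STATUS" for every plugin whose
# STATUS column (4th field) is not "ok", e.g. "error" or "skip".
failed_plugins() {
  awk 'NR > 1 && $4 != "ok" { print $2 "\t" $4 }'
}
```

You can combine it with the `-d` filters to get the details for every failed plugin:

```bash
sudo ctr plugin ls | failed_plugins | cut -f1 | sed 's/^/id==/' | xargs -r sudo ctr plugin ls -d
```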

```
$ sudo ctr plugin ls -d id==aufs id==zfs
Type:          io.containerd.snapshotter.v1
ID:            aufs
Platforms:     linux/amd64
Exports:
               root      /var/lib/containerd/io.containerd.snapshotter.v1.aufs
Error:
               Code:        Unknown
               Message:     aufs is not supported (modprobe aufs failed: exit status 1 "modprobe: FATAL: Module aufs not found in directory /lib/modules/5.10.0-9-amd64\n"): skip plugin

Type:          io.containerd.snapshotter.v1
ID:            zfs
Platforms:     linux/amd64
Exports:
               root      /var/lib/containerd/io.containerd.snapshotter.v1.zfs
Error:
               Code:        Unknown
               Message:     path /var/lib/containerd/io.containerd.snapshotter.v1.zfs must be a zfs filesystem to be used with the zfs snapshotter: skip plugin
```

3.2.2. Configuration

Plugins are configured using the `[plugins]` section of containerd's config. Every plugin can have its own section using the pattern `[plugins."<plugin type>.<plugin id>"]`, for example `[plugins."io.containerd.grpc.v1.cri"]`.
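In practice the configuration file is usually generated with `containerd config default` and then adjusted. In containerd 1.6 that output already contains `SystemdCgroup = false` under the runc options section. Switching the runc runtime to the systemd cgroup driver can therefore be a one-line edit. This is a sketch that assumes GNU sed. `enable_systemd_cgroup` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# enable_systemd_cgroup CONFIG (hypothetical helper, GNU sed)
# Flips every "SystemdCgroup = false" to "SystemdCgroup = true" in place,
# keeping the original indentation.
enable_systemd_cgroup() {
  local cfg="${1:?usage: enable_systemd_cgroup CONFIG_TOML}"
  sed -i -E 's/^([[:space:]]*)SystemdCgroup = false/\1SystemdCgroup = true/' "$cfg"
}
```

For example:

1. Generate the file with `containerd config default | sudo tee /etc/containerd/config.toml >/dev/null`.
2. From a root shell, run `enable_systemd_cgroup /etc/containerd/config.toml`.
3. Restart containerd.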

```toml
[plugins]
  # indentation (tabs and/or spaces) is allowed but not required
  [plugins."io.containerd.grpc.v1.cri"]
    sandbox_image = "k8s.gcr.io/pause:3.5"
    # <other parameters>
    [plugins."io.containerd.grpc.v1.cri".cni]
      bin_dir = "/opt/cni/bin"
      conf_dir = "/etc/cni/net.d"
      # <other parameters>
    [plugins."io.containerd.grpc.v1.cri".containerd]
      # <other parameters>
      [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
        # <other parameters>
        [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
          # <other parameters>
          SystemdCgroup = true
```

3.3. containerd/cri

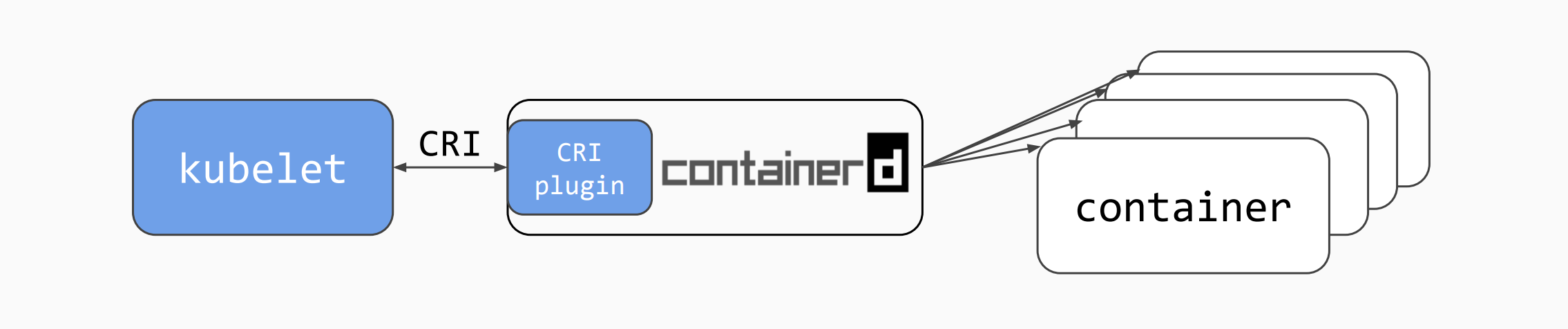

cri is containerd's built-in plugin implementing the Kubernetes Container Runtime Interface (CRI).

While the OCI specs define a single container, CRI describes containers as workload(s) in a shared sandbox environment called a pod. Pods can contain one or more container workloads.

With the cri plugin, you can run Kubernetes using containerd as the container runtime.

crictl is a command-line interface for CRI-compatible container runtimes.

```
$ sudo crictl pods
POD ID          CREATED             STATE   NAME                       NAMESPACE     ATTEMPT   RUNTIME
f69d876947d10   About an hour ago   Ready   coredns-5dd5756b68-6zhnn   kube-system   0         (default)
05b17a7b61b01   About an hour ago   Ready   kube-apiserver-node-1      kube-system   0         (default)
$ sudo crictl inspectp f69d876947d10 | head
{
  "status": {
    "id": "f69d876947d103f23b41ca677e498468aaef6a9d35e287c6dcd999cf62e40dbd",
    "metadata": {
      "attempt": 0,
      "name": "coredns-5dd5756b68-6zhnn",
      "namespace": "kube-system",
      "uid": "f364d6dd-ba20-4ab6-8ebb-0053ac1b43e0"
    },
    "state": "SANDBOX_READY",
```

3.4. containerd/namespaces

containerd offers a fully namespaced API, so multiple consumers can all use a single containerd instance without conflicting with one another. This allows multi-tenancy within a single daemon.

Consumers are able to have containers with the same names but with settings and/or configurations that vary drastically. For example, system or infrastructure level containers can be hidden in one namespace while user level containers are kept in another. Underlying image content is still shared via content addresses but image names and metadata are separate per namespace.

Namespaces allow various features, most notably the ability for one client to create, edit, and delete resources without affecting another client. A resource can be an image, container, task, or snapshot.

When a client queries for a resource, they only see the resources that are part of their namespace.
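Because every `ctr` call is scoped by `-n`, walking all namespaces makes the per-namespace view explicit. This sketch assumes it runs as root with `ctr` talking to the default socket. `images_by_namespace` is a hypothetical name. It relies on the `-q` (quiet) flag of `ctr namespaces ls` and `ctr images ls` to print names only.

```bash
#!/usr/bin/env bash
# images_by_namespace (hypothetical helper; run as root)
# Prints each containerd namespace followed by the image references it holds.
images_by_namespace() {
  local ns
  local -a namespaces
  mapfile -t namespaces < <(ctr namespaces ls -q)
  for ns in "${namespaces[@]}"; do
    echo "== $ns"
    ctr -n "$ns" images ls -q
  done
}
```

With the `alice` and `bob` namespaces from the examples that follow, the same `docker.io/library/nginx:1.25` reference would appear under both, even though the underlying content is stored once.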

- List namespaces:

```
$ sudo ctr ns ls # list namespaces
NAME    LABELS
default
k8s.io
moby
$ dockerd --help | grep containerd-namespace
      --containerd-namespace string             Containerd namespace to use (default "moby")
$ kubelet --help | grep containerd-namespace
      --containerd-namespace string                              containerd namespace (default "k8s.io") (DEPRECATED: This is a cadvisor flag that was mistakenly registered with the Kubelet. Due to legacy concerns, it will follow the standard CLI deprecation timeline before being removed.)
```

- Pull an image into namespace `alice` (the namespace is created if it does not exist):

```
$ sudo ctr -n alice image pull docker.io/library/nginx:1.25
docker.io/library/nginx:1.25:                                                     resolved       |++++++++++++++++++++++++++++++++++++++|
index-sha256:104c7c5c54f2685f0f46f3be607ce60da7085da3eaa5ad22d3d9f01594295e9c:    done           |++++++++++++++++++++++++++++++++++++++|
manifest-sha256:48a84a0728cab8ac558f48796f901f6d31d287101bc8b317683678125e0d2d35: done           |++++++++++++++++++++++++++++++++++++++|
layer-sha256:da761d9a302b21dc50767b67d46f737f5072fb4490c525b4a7ae6f18e1dbbf75:    done           |++++++++++++++++++++++++++++++++++++++|
config-sha256:eea7b3dcba7ee47c0d16a60cc85d2b977d166be3960541991f3e6294d795ed24:   done           |++++++++++++++++++++++++++++++++++++++|
. . .
elapsed: 65.9s                                                                    total:  66.8 M (1.0 MiB/s)
unpacking linux/amd64 sha256:104c7c5c54f2685f0f46f3be607ce60da7085da3eaa5ad22d3d9f01594295e9c...
done: 2.224968944s
```

- Pull the same image into namespace `bob` (the namespace is created if it does not exist):

```
$ sudo ctr -n bob image pull docker.io/library/nginx:1.25
docker.io/library/nginx:1.25:                                                     resolved       |++++++++++++++++++++++++++++++++++++++|
index-sha256:104c7c5c54f2685f0f46f3be607ce60da7085da3eaa5ad22d3d9f01594295e9c:    done           |++++++++++++++++++++++++++++++++++++++|
manifest-sha256:48a84a0728cab8ac558f48796f901f6d31d287101bc8b317683678125e0d2d35: done           |++++++++++++++++++++++++++++++++++++++|
layer-sha256:da761d9a302b21dc50767b67d46f737f5072fb4490c525b4a7ae6f18e1dbbf75:    done           |++++++++++++++++++++++++++++++++++++++|
config-sha256:eea7b3dcba7ee47c0d16a60cc85d2b977d166be3960541991f3e6294d795ed24:   done           |++++++++++++++++++++++++++++++++++++++|
. . .
elapsed: 2.2 s                                                                    total:   0.0 B (0.0 B/s)
unpacking linux/amd64 sha256:104c7c5c54f2685f0f46f3be607ce60da7085da3eaa5ad22d3d9f01594295e9c...
done: 2.453252148s
```

Pulling the same image into the `bob` namespace takes only 2.2 s and transfers 0.0 B. The underlying content is shared across namespaces by content address, so only the image name and metadata are created in `bob`.

- Run a container in namespace `alice`:

```
$ sudo ctr -n alice run --null-io -d docker.io/library/nginx:1.25 nginx # run a container named `nginx`
$ sudo ctr -n alice c ls # list containers
CONTAINER    IMAGE                           RUNTIME
nginx        docker.io/library/nginx:1.25    io.containerd.runc.v2
$ sudo ctr -n alice t ls # list tasks
TASK     PID      STATUS
nginx    43776    RUNNING
```

- Run a container in namespace `bob`:

```
$ sudo ctr -n bob run --null-io -d docker.io/library/nginx:1.25 nginx
$ sudo ctr -n bob c ls
CONTAINER    IMAGE                           RUNTIME
nginx        docker.io/library/nginx:1.25    io.containerd.runc.v2
$ sudo ctr -n bob t ls
TASK     PID       STATUS
nginx    647098    RUNNING
```

The container name (`nginx`) in `bob` is the same as in `alice`.

- Use `nsenter` to test the nginx endpoint in the container:

```
$ sudo ctr -n alice t ls
TASK       PID      STATUS
nginx-a    43776    RUNNING
$ sudo nsenter -t 43776 -a lsns
        NS TYPE   NPROCS PID USER COMMAND
4026531835 cgroup      3   1 root nginx: master process nginx -g daemon off;
4026531837 user        3   1 root nginx: master process nginx -g daemon off;
4026532706 mnt         3   1 root nginx: master process nginx -g daemon off;
4026532707 uts         3   1 root nginx: master process nginx -g daemon off;
4026532708 ipc         3   1 root nginx: master process nginx -g daemon off;
4026532709 pid         3   1 root nginx: master process nginx -g daemon off;
4026532711 net         3   1 root nginx: master process nginx -g daemon off;
$ sudo nsenter -t 43776 -n curl -iI localhost
HTTP/1.1 200 OK
Server: nginx/1.25.2
Date: Tue, 22 Aug 2023 09:44:58 GMT
Content-Type: text/html
Content-Length: 615
Last-Modified: Tue, 15 Aug 2023 17:03:04 GMT
Connection: keep-alive
ETag: "64dbafc8-267"
Accept-Ranges: bytes
```

- Stop a container:

```
$ sudo ctr -n alice c ls
CONTAINER    IMAGE                           RUNTIME
nginx        docker.io/library/nginx:1.25    io.containerd.runc.v2
$ sudo ctr -n alice t ls
TASK     PID       STATUS
nginx    653417    RUNNING

# stop a container
$ sudo ctr -n alice t kill -a nginx
$ sudo ctr -n alice t ls
TASK     PID       STATUS
nginx    653417    STOPPED

# remove a stopped task
$ sudo ctr -n alice t rm nginx
$ sudo ctr -n alice t ls
TASK    PID    STATUS
$ sudo ctr -n alice c ls
CONTAINER    IMAGE                           RUNTIME
nginx        docker.io/library/nginx:1.25    io.containerd.runc.v2

# restart a container
$ sudo ctr -n alice t start --null-io -d nginx
$ sudo ctr -n alice t ls
TASK     PID       STATUS
nginx    655518    RUNNING

# stop and remove a container
$ sudo ctr -n alice t kill -a nginx
$ sudo ctr -n alice t rm nginx
$ sudo ctr -n alice c rm nginx
$ sudo ctr -n alice c ls
CONTAINER    IMAGE    RUNTIME

# stop all containers in the k8s.io namespace (signals: 9 = SIGKILL, 15 = SIGTERM)
$ sudo ctr -n k8s.io tasks list -q | xargs -r -I {} sudo ctr -n k8s.io tasks kill -s 9 -a {}
# or
$ for task in $(sudo ctr -n k8s.io tasks list -q); do sudo ctr -n k8s.io tasks kill -a "$task"; done
# or
$ sudo crictl stop $(sudo crictl ps -q)
```

- Clean a namespace:

```
$ sudo ctr ns rm alice
ERRO[0000] unable to delete alice                        error="namespace \"alice\" must be empty, but it still has images, blobs, containers, snapshots on \"overlayfs\" snapshotter: failed precondition"
ctr: unable to delete alice: namespace "alice" must be empty, but it still has images, blobs, containers, snapshots on "overlayfs" snapshotter: failed precondition
$ sudo ctr -n alice i rm docker.io/library/nginx:1.25
docker.io/library/nginx:1.25
$ sudo ctr ns rm alice
alice
$ sudo ctr ns ls
NAME    LABELS
bob
k8s.io
moby
```

3.5. containerd/proxy

The containerd daemon uses the HTTP_PROXY, HTTPS_PROXY, and NO_PROXY environment variables in its start-up environment to configure HTTP or HTTPS proxy behavior.

- Create a systemd drop-in directory for the containerd service:

```
sudo mkdir -p /etc/systemd/system/containerd.service.d
```

- Create a file called `20-http-proxy.conf` in the above directory that adds the `HTTP_PROXY` environment variable:

```ini
[Service]
Environment="HTTP_PROXY=http://proxy.example.com:80/"
```

Or, if you are behind an HTTPS proxy server, also add the `HTTPS_PROXY` environment variable:

```ini
[Service]
Environment="HTTP_PROXY=http://proxy.example.com:80/"
Environment="HTTPS_PROXY=https://proxy.example.com:443/"
```

If you have internal Docker registries that you need to contact without proxying, you can specify them via the `NO_PROXY` environment variable:

```ini
[Service]
Environment="HTTP_PROXY=http://proxy.example.com:80/"
Environment="HTTPS_PROXY=https://proxy.example.com:443/"
Environment="NO_PROXY=localhost,127.0.0.1,docker-registry.somecorporation.com"
```

The `NO_PROXY` environment variable specifies hosts that should be excluded from proxying, that is, servers that should be contacted directly. It should be a comma-separated list of hostnames, domain names, or a mixture of both. Asterisks can be used as wildcards, but not every client supports them. Domain names may be indicated by a leading dot. For example:

```
NO_PROXY="*.aventail.com,home.com,.seanet.com"
```

This says to contact all machines in the `aventail.com` and `seanet.com` domains directly, as well as the machine named `home.com`. If `NO_PROXY` isn't defined, containerd (like other Go programs) falls back to the lowercase `no_proxy`.

You can also use `systemctl edit containerd` to edit `override.conf` in `/etc/systemd/system/containerd.service.d` for the containerd service.

- Flush changes:

```
sudo systemctl daemon-reload
```

- Verify that the configuration has been loaded:

```
$ systemctl status containerd.service
● containerd.service - containerd container runtime
     Loaded: loaded (/lib/systemd/system/containerd.service; enabled; preset: enabled)
    Drop-In: /etc/systemd/system/containerd.service.d
             └─20-http-proxy.conf
$ systemctl show --property Environment containerd.service --full --no-pager
Environment=HTTP_PROXY=http://proxy.example.com:80/ HTTPS_PROXY=https://proxy.example.com:443/ NO_PROXY=localhost,127.0.0.1,docker-registry.somecorporation.com
```

- Restart containerd:

```
sudo systemctl restart containerd
```
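Matching rules for `NO_PROXY` differ between clients. containerd is written in Go, and Go's proxy handling (`golang.org/x/net/http/httpproxy`, used by `http.ProxyFromEnvironment`) applies these rules:

- A plain entry such as `home.com` matches that host and all of its subdomains.
- A leading dot (`.seanet.com`) matches subdomains only.
- `*.aventail.com` is treated like `.aventail.com`.
- A lone `*` disables the proxy entirely.

Below is a simplified model of those domain rules, useful for checking a list before restarting containerd. `no_proxy_match` is a hypothetical helper. It deliberately ignores IP addresses, CIDR ranges, ports, and Go's built-in bypass for `localhost` and loopback addresses.

```bash
#!/usr/bin/env bash
# no_proxy_match HOST [NO_PROXY_VALUE] (hypothetical helper, bash 4+)
# Succeeds if HOST would bypass the proxy under a simplified model of Go's
# NO_PROXY domain rules. Falls back to $NO_PROXY, then $no_proxy.
# Not modelled: IP/CIDR entries, ports, the implicit localhost bypass.
no_proxy_match() {
  local host="${1,,}" list="${2:-${NO_PROXY:-${no_proxy:-}}}" entry
  local -a entries
  IFS=',' read -ra entries <<< "$list"   # split on commas, no globbing
  for entry in "${entries[@]}"; do
    entry="${entry//[[:space:]]/}"       # strip whitespace
    entry="${entry,,}"                   # case-insensitive
    [[ -z "$entry" ]] && continue
    [[ "$entry" == "*" ]] && return 0    # "*" matches every host
    [[ "$entry" == "*."* ]] && entry="${entry#\*}"  # *.a.com -> .a.com
    if [[ "$entry" == .* ]]; then
      # leading dot: subdomains only
      [[ "$host" == *"$entry" ]] && return 0
    elif [[ "$host" == "$entry" || "$host" == *".$entry" ]]; then
      # plain domain: the domain itself and its subdomains
      return 0
    fi
  done
  return 1
}
```

For example, with the list above, `no_proxy_match ftp.seanet.com "*.aventail.com,home.com,.seanet.com"` succeeds while `no_proxy_match seanet.com ...` does not.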

4. crictl

crictl is a command-line interface for CRI-compatible container runtimes. You can use it to inspect and debug container runtimes and applications on a Kubernetes node. crictl and its source are hosted in the cri-tools repository.

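crictl has to know which CRI endpoint to talk to. Specify it with the `--runtime-endpoint` flag or set `runtime-endpoint` in `/etc/crictl.yaml`; for containerd this is `unix:///run/containerd/containerd.sock`.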

- `crictl image list` shows the images in containerd's `k8s.io` namespace, the same ones that `ctr -n=k8s.io image list` shows:

```
$ sudo ctr -n k8s.io i ls
REF                                                                                            TYPE                                                      DIGEST                                                                  SIZE      PLATFORMS                                                                                                                  LABELS
docker.io/library/busybox:latest                                                               application/vnd.docker.distribution.manifest.list.v2+json sha256:e7157b6d7ebbe2cce5eaa8cfe8aa4fa82d173999b9f90a9ec42e57323546c353 758.9 KiB linux/386,linux/amd64,linux/arm/v5,linux/arm/v6,linux/arm/v7,linux/arm64/v8,linux/mips64le,linux/ppc64le,linux/riscv64,linux/s390x io.cri-containerd.image=managed
docker.io/library/busybox@sha256:e7157b6d7ebbe2cce5eaa8cfe8aa4fa82d173999b9f90a9ec42e57323546c353 application/vnd.docker.distribution.manifest.list.v2+json sha256:e7157b6d7ebbe2cce5eaa8cfe8aa4fa82d173999b9f90a9ec42e57323546c353 758.9 KiB linux/386,linux/amd64,linux/arm/v5,linux/arm/v6,linux/arm/v7,linux/arm64/v8,linux/mips64le,linux/ppc64le,linux/riscv64,linux/s390x io.cri-containerd.image=managed
k8s.gcr.io/pause:3.2                                                                           application/vnd.docker.distribution.manifest.v2+json      sha256:2a7b365f500c323286ac47e9e32af9bd50ee65de7fe2a27355eb5987c8df9ad8 669.7 KiB linux/amd64                                                                                                                io.cri-containerd.image=managed
sha256:7138284460ffa3bb6ee087344f5b051468b3f8697e2d1427bac1a20c8d168b14                        application/vnd.docker.distribution.manifest.list.v2+json sha256:e7157b6d7ebbe2cce5eaa8cfe8aa4fa82d173999b9f90a9ec42e57323546c353 758.9 KiB linux/386,linux/amd64,linux/arm/v5,linux/arm/v6,linux/arm/v7,linux/arm64/v8,linux/mips64le,linux/ppc64le,linux/riscv64,linux/s390x io.cri-containerd.image=managed
sha256:80d28bedfe5dec59da9ebf8e6260224ac9008ab5c11dbbe16ee3ba3e4439ac2c                        application/vnd.docker.distribution.manifest.v2+json      sha256:61e45779fc594fcc1062bb9ed2cf5745b19c7ba70f0c93eceae04ffb5e402269 669.7 KiB linux/amd64                                                                                                                io.cri-containerd.image=managed
$ sudo crictl image ls
IMAGE                       TAG                 IMAGE ID            SIZE
docker.io/library/busybox   latest              7138284460ffa       1.46MB
k8s.gcr.io/pause            3.2                 80d28bedfe5de       686kB
```

- Create a pod sandbox and run a container:

`container-config.json`:

```json
{
  "metadata": {
    "name": "busybox"
  },
  "image": {
    "image": "busybox"
  },
  "command": [
    "top"
  ],
  "log_path": "busybox.0.log",
  "linux": {}
}
```

`pod-config.json`:

```json
{
  "metadata": {
    "name": "nginx-sandbox",
    "namespace": "default",
    "attempt": 1,
    "uid": "hdishd83djaidwnduwk28bcsb"
  },
  "log_directory": "/tmp",
  "linux": {}
}
```

```
$ sudo crictl run container-config.json pod-config.json
b08ad7b8517d0e37853f3a7211fbc7ba283a7b34cff5bd0ae108e9d956034a24
$ sudo crictl pods
POD ID          CREATED          STATE   NAME            NAMESPACE   ATTEMPT   RUNTIME
91ff0a7d5e81a   15 seconds ago   Ready   nginx-sandbox   default     1         (default)
$ sudo crictl ps
CONTAINER       IMAGE     CREATED          STATE     NAME      ATTEMPT   POD ID
b08ad7b8517d0   busybox   15 seconds ago   Running   busybox   0         91ff0a7d5e81a
$ sudo crictl stopp 91ff0a7d5e81a
Stopped sandbox 91ff0a7d5e81a
$ sudo crictl rmp 91ff0a7d5e81a
Removed sandbox 91ff0a7d5e81a
```

- To stop all running containers managed by containerd using `crictl`:

```
$ sudo crictl stop $(sudo crictl ps -q)
0509b1b0646b70ed6e78e370730881d1a500d4a335f7198f0150ae16e22ebb48
9d24fcbb60a5627572e29c115f649d0e72c96bc96f8feb185280269f9179cd23
009a342cb16c1cbc20a4ae99679d11ba7263695d1d820a84ec4d4f8baef79e35
...
```

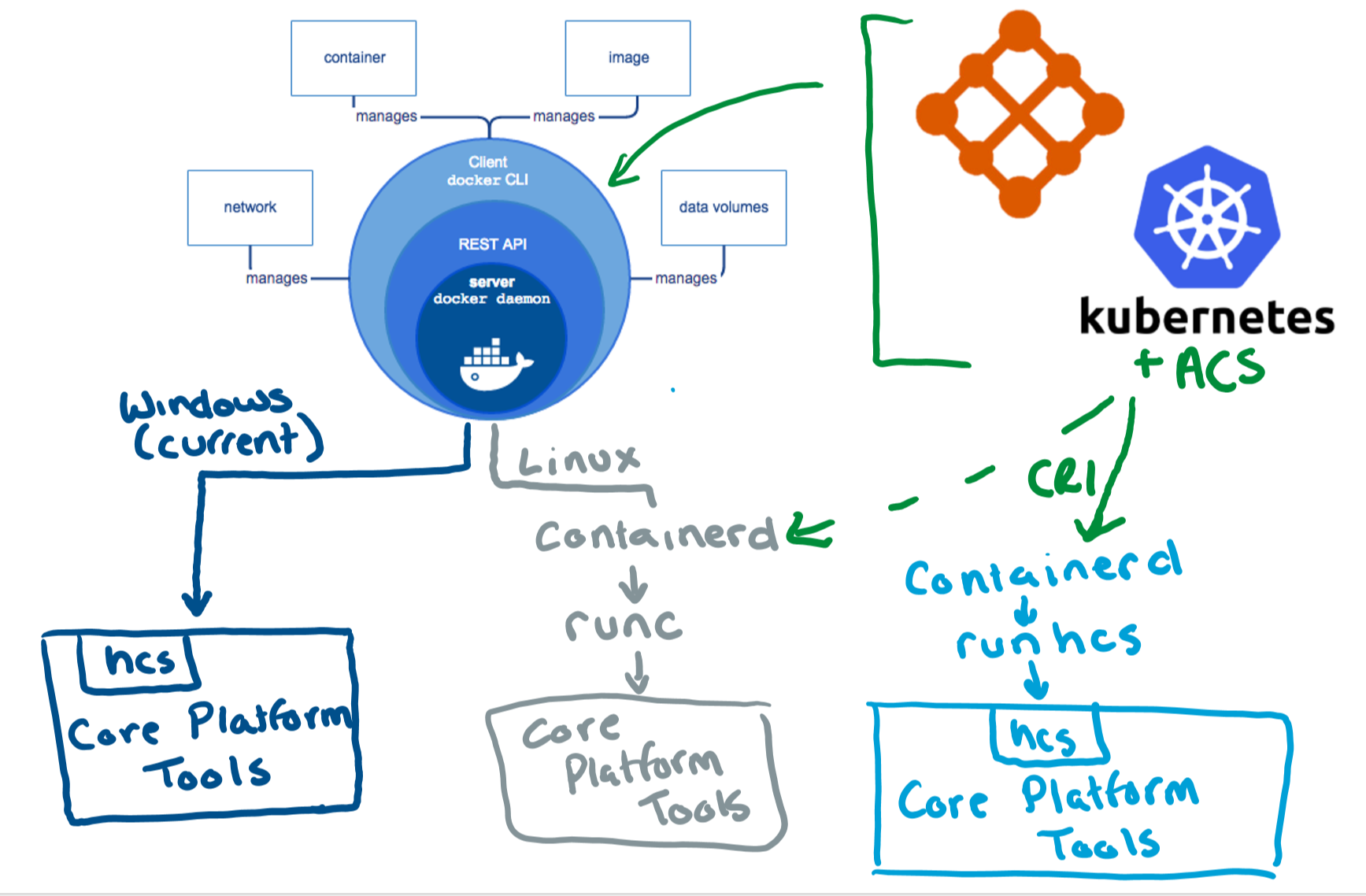

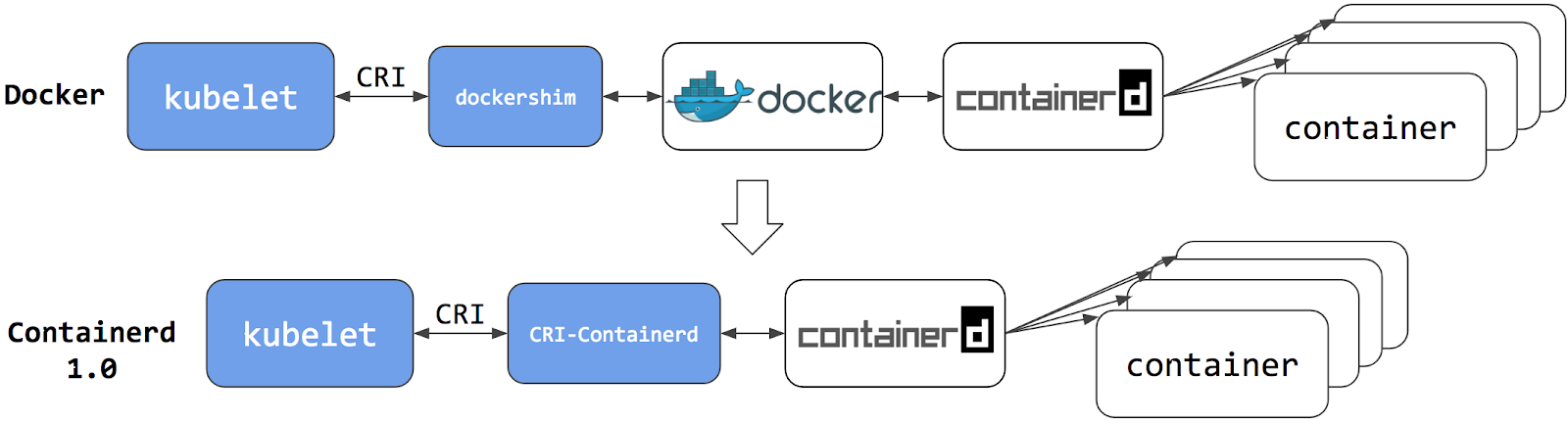

5. docker and dockershim

dockershim was the CRI implementation built into the kubelet that let it interact with dockerd to manage containers.

dockershim's deprecation was announced as part of the Kubernetes v1.20 release, and dockershim was removed from the kubelet in Kubernetes v1.24.

Docker 24.0 introduced experimental support for containerd as the content store, replacing the existing storage drivers.

Developers can still use the Docker platform to build, share, and run containers on Kubernetes!

If you’re using Docker, you’re already using containerd.

```
$ dockerd --help | grep containerd
      --containerd string                       containerd grpc address
      --containerd-namespace string             Containerd namespace to use (default "moby")
      --containerd-plugins-namespace string     Containerd namespace to use for plugins (default "plugins.moby")
      --cri-containerd                          start containerd with cri
$ docker info
 Server Version: 24.0.7
 Storage Driver: overlayfs
  driver-type: io.containerd.snapshotter.v1
 Cgroup Driver: systemd
 Cgroup Version: 2
 Runtimes: io.containerd.runc.v2 runc
 Default Runtime: runc
 containerd version: a1496014c916f9e62104b33d1bb5bd03b0858e59
 runc version: v1.1.11-0-g4bccb38
```

The images Docker builds are compliant with the OCI (Open Container Initiative) specifications, are fully supported on containerd, and will continue to run great on Kubernetes.

Docker’s runtime is built upon containerd while providing a great developer experience around it. For production environments that benefit from a minimal container runtime, such as Kubernetes, and may have no need for Docker’s great developer experience, it’s reasonable to directly use lightweight runtimes like containerd.

containerd uses snapshotters instead of the classic storage drivers for storing image and container data. While the overlay2 driver still remains the default driver for Docker Engine, you can opt in to using containerd snapshotters as an experimental feature.


There are two ways to configure the Docker daemon to use a proxy server.

- Configure the daemon through a configuration file (`/etc/docker/daemon.json`) or CLI flags:

```json
{
  "proxies": {
    "http-proxy": "http://proxy.example.com:3128",
    "https-proxy": "https://proxy.example.com:3129",
    "no-proxy": "*.test.example.com,.example.org,127.0.0.0/8"
  }
}
```

- Set environment variables on the system, for example in a systemd drop-in such as `/etc/systemd/system/docker.service.d/http-proxy.conf`:

```ini
[Service]
Environment="HTTP_PROXY=http://proxy.example.com:3128"
Environment="HTTPS_PROXY=https://proxy.example.com:3129"
Environment="NO_PROXY=localhost,127.0.0.1,docker-registry.example.com,.corp"
```

6. Sandbox image and pause container

It is recommended to keep the sandbox_image of containerd consistent with the pod-infra-container-image (also known as the pause container image) of the kubelet.

containerd uses `sandbox_image` to create the pause container, which serves as the "parent container" for all other containers in a Kubernetes pod. The kubelet uses `pod-infra-container-image` to keep that image from being removed by its image garbage collector. Ensuring that these images match helps maintain consistency and avoid potential issues within your Kubernetes environment.

The pause container holds the network namespace and other shared resources for all containers within a pod.

Having a consistent pause container image ensures that all components of your Kubernetes cluster use the same image, reducing the likelihood of conflicts and maintaining a unified environment.

To make sure both configurations are using the same image, follow these steps:

- Configure the `sandbox_image` in containerd's configuration file, usually located at `/etc/containerd/config.toml`. For example:

```toml
[plugins."io.containerd.grpc.v1.cri"]
  sandbox_image = "registry.k8s.io/pause:3.9"
```

```
$ sudo crictl info -o go-template --template '{{.config.sandboxImage}}'
registry.k8s.io/pause:3.9
```

- Configure the `pod-infra-container-image` in the kubelet's command-line flags. For example, add the following flag to the kubelet's command-line options:

```
--pod-infra-container-image=registry.k8s.io/pause:3.9
```

```
$ sudo cat /var/lib/kubelet/kubeadm-flags.env
KUBELET_KUBEADM_ARGS="--container-runtime-endpoint=unix:///var/run/containerd/containerd.sock --pod-infra-container-image=registry.k8s.io/pause:3.9"
```

The kubelet configuration file (usually `/var/lib/kubelet/config.yaml`) has no equivalent field, so this setting can only be passed as a command-line flag.

After making these changes, restart the containerd and kubelet services to apply the new configurations.
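A quick consistency check between the two settings can be scripted by reading both files. This sketch assumes the containerd 1.6 `sandbox_image` key and a kubeadm-style `/var/lib/kubelet/kubeadm-flags.env`, as in the examples above. `check_pause_image` is a hypothetical helper. On other setups the kubelet flag may live in a systemd unit instead.

```bash
#!/usr/bin/env bash
# check_pause_image [CONTAINERD_TOML] [KUBELET_FLAGS_ENV] (hypothetical helper)
# Prints both pause image settings and succeeds only when they are equal.
check_pause_image() {
  local toml="${1:-/etc/containerd/config.toml}"
  local flags="${2:-/var/lib/kubelet/kubeadm-flags.env}"
  local sandbox kubelet
  # containerd 1.6: sandbox_image = "IMAGE" under [plugins."io.containerd.grpc.v1.cri"]
  sandbox="$(sed -nE 's/^[[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*"([^"]*)".*/\1/p' "$toml" | head -n 1)"
  # kubelet flag: --pod-infra-container-image=IMAGE
  kubelet="$(grep -oE -- '--pod-infra-container-image=[^" ]+' "$flags" | head -n 1 | cut -d= -f2-)"
  echo "containerd sandbox_image:          ${sandbox:-<unset>}"
  echo "kubelet pod-infra-container-image: ${kubelet:-<unset>}"
  [[ -n "$sandbox" && "$sandbox" == "$kubelet" ]]
}
```

Run it with `sudo` so it can read both files. A non-zero exit status means the two images differ or one of them is unset.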

By keeping the sandbox_image and pod-infra-container-image consistent, you can ensure that your Kubernetes cluster operates smoothly and avoids potential issues related to using different pause container images.

Here is a pod with multiple containers:

# multi.yml
apiVersion: v1
kind: Pod
metadata:
  namespace: default
  name: multi-c
spec:
  containers:
  - image: nginx:1.25
    name: nginx
  - image: qqbuby/net-tools:1.0
    name: net-tools
    command:
    - sleep
    - 3650d

$ kubectl apply -f multi.yml

pod/multi-c created

// inter-container communication within the same pod via localhost

$ kubectl exec multi-c -c net-tools -- curl -iIs localhost

HTTP/1.1 200 OK

Server: nginx/1.25.3

Date: Sat, 03 Feb 2024 07:30:40 GMT

Content-Type: text/html

Content-Length: 615

Last-Modified: Tue, 24 Oct 2023 13:46:47 GMT

Connection: keep-alive

ETag: "6537cac7-267"

Accept-Ranges: bytes

// find the node the pod was scheduled on (e.g. node-2)

$ kubectl get po multi-c -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

multi-c 2/2 Running 0 23m 10.244.1.19 node-2 <none> <none>
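The node name can also be read directly with kubectl's JSONPath output instead of scanning the `-o wide` table. `pod_node` below is a hypothetical wrapper around that single kubectl call; the namespace defaults to `default` as in multi.yml.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the node a pod was scheduled on.
pod_node() {
  local pod="$1" ns="${2:-default}"
  # .spec.nodeName is filled in by the scheduler once the pod is bound.
  kubectl get pod "$pod" -n "$ns" -o jsonpath='{.spec.nodeName}'
}

# Usage: pod_node multi-c
```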

// switch to node-2 and find the pod sandbox using `crictl`

$ sudo crictl pods --name multi-c

POD ID CREATED STATE NAME NAMESPACE ATTEMPT RUNTIME

862626e506f99 25 minutes ago Ready multi-c default 0 (default)

// find the pause container

$ sudo ctr -n k8s.io c ls | grep 862626e506f99

862626e506f99825c2afc234ce21a3e561203b2657dd4b5db8c83a858654f7c0 registry.k8s.io/pause:3.9 io.containerd.runc.v2

// find the process/task id of the pause container

$ sudo ctr -n k8s.io t ls | grep 862626e506f99

862626e506f99825c2afc234ce21a3e561203b2657dd4b5db8c83a858654f7c0 14760 RUNNING
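crictl prints truncated 13-character IDs while ctr prints the full 64-character IDs, which is why the grep above matches on a prefix. The hypothetical `task_pid` helper below does the same prefix lookup with awk but prints only the PID column, which is convenient to pass straight to nsenter.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `ctr -n k8s.io tasks ls` output on stdin and
# print the PID of the first task whose ID starts with the given prefix.
task_pid() {
  local prefix="$1"
  # Column 1 is the task ID, column 2 the PID; the header line never matches.
  awk -v p="$prefix" 'index($1, p) == 1 { print $2; exit }'
}

# Usage (on node-2):
#   sudo ctr -n k8s.io t ls | task_pid 862626e506f99
```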

// show the hostname (UTS namespace) and network (network namespace) of the pause container

$ sudo nsenter -t 14760 -n -u hostname

multi-c

$ sudo nsenter -t 14760 -n -u ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether 12:28:a3:72:18:88 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.244.1.19/24 brd 10.244.1.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::1028:a3ff:fe72:1888/64 scope link

valid_lft forever preferred_lft forever

// find the other containers in the sandbox

$ sudo crictl ps -p 862626e506f99

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID POD

637a1a11e00ed 0fb85279f52dc 44 minutes ago Running net-tools 0 862626e506f99 multi-c

6794ce521b789 b690f5f0a2d53 44 minutes ago Running nginx 0 862626e506f99 multi-c

$ sudo ctr -n k8s.io t ls | egrep '637a1a11e00ed|6794ce521b789'

637a1a11e00edc406243154bb8e3636181ae2c4e398d98edb94580cd5a4747e2 15105 RUNNING

6794ce521b789b619885ba91583a5e7a5cf95491fb8719c656cf2f97c01b729a 14949 RUNNING
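The two lookups above can be combined into one loop. The sketch below is hypothetical (`sandbox_pids` is not part of crictl or ctr). It assumes `crictl ps -q -p <pod-id>` prints one container ID per line and matches each ID against the ctr task list by prefix, so it works whether the IDs are truncated or not.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "<container-id> <pid>" for every running
# container in a pod sandbox. Run as root on the pod's node.
sandbox_pids() {
  local pod_id="$1" tasks id
  # Fetch the containerd task list once (full IDs and PIDs).
  tasks=$(ctr -n k8s.io tasks ls)
  # Container IDs of the pod, one per line.
  for id in $(crictl ps -q -p "$pod_id"); do
    awk -v p="$id" 'index($1, p) == 1 { print $1, $2; exit }' <<< "$tasks"
  done
}

# Usage (as root on node-2): sandbox_pids 862626e506f99
```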

// show the hostname and network of the containers

$ sudo nsenter -t 15105 -n -u hostname

multi-c

$ sudo nsenter -t 15105 -n -u ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether 12:28:a3:72:18:88 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.244.1.19/24 brd 10.244.1.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::1028:a3ff:fe72:1888/64 scope link

valid_lft forever preferred_lft forever

$ sudo nsenter -t 14949 -n -u hostname

multi-c

$ sudo nsenter -t 14949 -n -u ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether 12:28:a3:72:18:88 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.244.1.19/24 brd 10.244.1.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::1028:a3ff:fe72:1888/64 scope link

valid_lft forever preferred_lft forever

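// As shown, all three containers (pause, nginx, net-tools) share the same:
// 1. hostname `multi-c`,
// 2. network interface `eth0@if7`,
// 3. and IP address `10.244.1.19/24`,
// because nginx and net-tools join the UTS and network namespaces held by the pause container.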
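Comparing `ip a` output works, but a more direct check is to compare the namespace links under /proc/<pid>/ns: processes in the same namespace have links showing the same inode number, in the form `net:[<inode>]`. The hypothetical `compare_ns` helper below reports this for five namespace types.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report, per namespace type, whether two PIDs share
# the namespace, by comparing their /proc/<pid>/ns/<type> link targets.
# Reading another user's links requires root.
compare_ns() {
  local a="$1" b="$2" t la lb
  for t in net uts ipc pid mnt; do
    la=$(readlink "/proc/$a/ns/$t") || return 1
    lb=$(readlink "/proc/$b/ns/$t") || return 1
    if [[ "$la" == "$lb" ]]; then
      echo "$t shared"
    else
      echo "$t different"
    fi
  done
}

# Usage (in a root shell on node-2): compare_ns 14760 15105
```

With a default pod spec one would expect net, uts and ipc to be reported as shared and pid and mnt as different, since containers in a pod get their own PID namespace unless shareProcessNamespace is set.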

// switch back to the control plane node and delete the pod

$ kubectl delete -f multi.yml

pod "multi-c" deleted

7. References

- https://docs.microsoft.com/en-us/virtualization/windowscontainers/deploy-containers/containerd
- https://stackoverflow.com/questions/61738905/how-to-list-docker-containers-using-runc
- https://github.com/containerd/containerd/blob/main/docs/ops.md
- https://github.com/containerd/containerd/blob/main/docs/PLUGINS.md
- https://github.com/containerd/cri/blob/release/1.4/docs/config.md
- https://kubernetes.io/blog/2018/05/24/kubernetes-containerd-integration-goes-ga/
- https://kubernetes.io/docs/setup/production-environment/container-runtimes/
- https://kubernetes.io/docs/tasks/administer-cluster/kubeadm/configure-cgroup-driver/