AI and Large Language Models

- 1. What is AI?

- 2. Large language models

- 3. What is Azure OpenAI?

- Appendix A: FAQ

- A.1. Large Language Model (LLM) Platforms: A Comparison

- A.2. How does RAG work like GPT, Gemini, ERNIE?

- A.3. What’s vector search and embedding?

- A.3.1. What’s its relationship with LLM, like Gemini or GPT?

- A.3.2. What are the popular tools or databases that support Vector Search?

- A.3.3. I’m new to search engine. show the learn path about vector search.

- A.3.4. Should I also need to learn ML and DL?

- A.3.5. Papers [Gemini]

- A.3.6. How to read a paper? [Gemini]

- References

1. What is AI?

Simply put, AI is software that imitates human behaviors and capabilities. Key workloads include: [1]

-

Machine learning - This is often the foundation for an AI system, and is the way we "teach" a computer model to make predictions and draw conclusions from data.

-

Computer vision - Capabilities within AI to interpret the world visually through cameras, video, and images.

-

Natural language processing - Capabilities within AI for a computer to interpret written or spoken language, and respond in kind.

-

Document intelligence - Capabilities within AI that deal with managing, processing, and using high volumes of data found in forms and documents.

-

Knowledge mining - Capabilities within AI to extract information from large volumes of often unstructured data to create a searchable knowledge store.

-

Generative AI - Capabilities within AI that create original content in a variety of formats including natural language, image, code, and more.

1.1. Machine Learning

Machine Learning is the foundation for most AI solutions.

-

Since the 1950’s, researchers, often known as data scientists, have worked on different approaches to AI.

-

Most modern applications of AI have their origins in machine learning, a branch of AI that combines computer science and mathematics.

How machine learning works?

-

The answer is, from data.

In today’s world, we create huge volumes of data as we go about our everyday lives. From the text messages, emails, and social media posts we send to the photographs and videos we take on our phones, we generate massive amounts of information. More data still is created by millions of sensors in our homes, cars, cities, public transport infrastructure, and factories.

-

Data scientists can use all of that data to train machine learning models that can make predictions and inferences based on the relationships they find in the data.

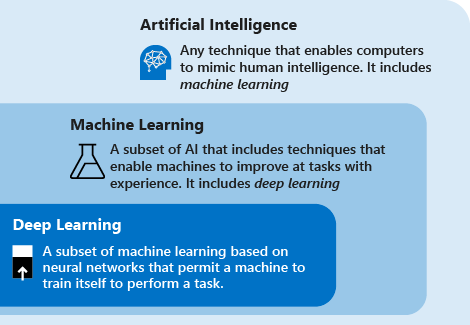

Deep learning, machine learning, and AI

1.2. Computer Vision

Computer Vision is an area of AI that deals with visual processing.

-

Image Analysis: capabilities for analyzing images and video, and extracting descriptions, tags, objects, and text.

-

Face: capabilities that enable you to build face detection and facial recognition solutions.

-

Optical Character Recognition (OCR): capabilities for extracting printed or handwritten text from images, enabling access to a digital version of the scanned text.

1.3. Natural language processing (NLP)

Natural language processing (NLP) is the area of AI that deals with creating software that understands written and spoken language.

-

Analyze and interpret text in documents, email messages, and other sources.

-

Interpret spoken language, and synthesize speech responses.

-

Automatically translate spoken or written phrases between languages.

-

Interpret commands and determine appropriate actions.

1.4. Document Intelligence

Document Intelligence is the area of AI that deals with managing, processing, and using high volumes of a variety of data found in forms and documents.

Document intelligence enables us to create software that can automate processing for contracts, health documents, financial forms and more.

1.5. Knowledge Mining

Knowledge mining is the term used to describe solutions that involve extracting information from large volumes of often unstructured data to create a searchable knowledge store.

1.6. Generative AI?

Generative artificial intelligence (generative AI, GenAI, or GAI) is artificial intelligence capable of generating text, images, videos, or other data using generative models, often in response to prompts.

Improvements in transformer-based deep neural networks, particularly large language models (LLMs), enabled an AI boom of generative AI systems in the early 2020s. These include chatbots such as ChatGPT, Copilot, Gemini and LLaMA, text-to-image artificial intelligence image generation systems such as Stable Diffusion, Midjourney and DALL-E, and text-to-video AI generators such as Sora.

the free encyclopedia

Artificial Intelligence (AI) imitates human behavior by using machine learning to interact with the environment and execute tasks without explicit directions on what to output. [2]

Generative AI describes a category of capabilities within AI that create original content.

-

People typically interact with generative AI that has been built into chat applications. One popular example of such an application is ChatGPT, a chatbot created by OpenAI, an AI research company that partners closely with Microsoft.

-

Generative AI applications take in natural language input, and return appropriate responses in a variety of formats including natural language, image, code, audio, and video.

2. Large language models

A large language model (LLM) is a computational model notable for its ability to achieve general-purpose language generation and other natural language processing tasks such as classification. Based on language models, LLMs acquire these abilities by learning statistical relationships from text documents during a computationally intensive self-supervised and semi-supervised training process. LLMs can be used for text generation, a form of generative AI, by taking an input text and repeatedly predicting the next token or word.

the free encyclopedia

Generative AI applications are powered by large language models (LLMs), which are a specialized type of machine learning model that you can use to perform natural language processing (NLP) tasks, including:

-

Determining sentiment or otherwise classifying natural language text.

-

Summarizing text.

-

Comparing multiple text sources for semantic similarity.

-

Generating new natural language.

2.1. Transformer models

Machine learning models for natural language processing have evolved over many years. Today’s cutting-edge large language models are based on the transformer architecture, which builds on and extends some techniques that have been proven successful in modeling vocabularies to support NLP tasks - and in particular in generating language.

Transformer models are trained with large volumes of text, enabling them to represent the semantic relationships between words and use those relationships to determine probable sequences of text that make sense.

Transformer models with a large enough vocabulary are capable of generating language responses that are tough to distinguish from human responses.

Transformer model architecture consists of two components, or blocks:

-

An encoder block that creates semantic representations of the training vocabulary.

-

A decoder block that generates new language sequences.

In practice, the specific implementations of the architecture vary – for example,

-

the Bidirectional Encoder Representations from Transformers (BERT) model developed by Google to support their search engine uses only the encoder block, while

-

the Generative Pretrained Transformer (GPT) model developed by OpenAI uses only the decoder block.

2.1.1. Tokenization

The first step in training a transformer model is to decompose the training text into tokens - in other words, identify each unique text value. With a sufficiently large set of training text, a vocabulary of many thousands of tokens could be compiled. For the sake of simplicity, we can think of each distinct word in the training text as a token (though in reality, tokens can be generated for partial words, or combinations of words and punctuation).

2.1.2. Embeddings

To create a vocabulary that encapsulates semantic relationships between the tokens, we define contextual vectors, known as embeddings, for them.

-

Vectors are multi-valued numeric representations of information, for example

[10, 3, 1]in which each numeric element represents a particular attribute of the information. -

For language tokens, each element of a token’s vector represents some semantic attribute of the token.

-

The specific categories for the elements of the vectors in a language model are determined during training based on how commonly words are used together or in similar contexts.

It can be useful to think of the elements in a token embedding vector as coordinates in multidimensional space, so that each token occupies a specific "location."

-

The closer tokens are to one another along a particular dimension, the more semantically related they are.

-

In other words, related words are grouped closer together.

2.1.3. Attention

The encoder and decoder blocks in a transformer model include multiple layers that form the neural network for the model. One of the types of layers that is used in both blocks are attention layers.

-

Attention is a technique used to examine a sequence of text tokens and try to quantify the strength of the relationships between them.

-

In particular, self-attention involves considering how other tokens around one particular token influence that token’s meaning.

-

In an encoder block, each token is carefully examined in context, and an appropriate encoding is determined for its vector embedding. The vector values are based on the relationship between the token and other tokens with which it frequently appears.

-

In a decoder block, attention layers are used to predict the next token in a sequence. For each token generated, the model has an attention layer that takes into account the sequence of tokens up to that point. The model considers which of the tokens are the most influential when considering what the next token should be.

Remember that the attention layer is working with numeric vector representations of the tokens, not the actual text.

-

In a decoder, the process starts with a sequence of token embeddings representing the text to be completed.

-

During training, the goal is to predict the vector for the final token in the sequence based on the preceding tokens.

-

The attention layer assigns a numeric weight to each token in the sequence so far. It uses that value to perform a calculation on the weighted vectors that produces an attention score that can be used to calculate a possible vector for the next token.

In practice, a technique called multi-head attention uses different elements of the embeddings to calculate multiple attention scores.

-

A neural network is then used to evaluate all possible tokens to determine the most probable token with which to continue the sequence.

-

The process continues iteratively for each token in the sequence, with the output sequence so far being used regressively as the input for the next iteration – essentially building the output one token at a time.

What all of this means, is that a transformer model such as GPT-4 (the model behind ChatGPT and Bing) is designed to take in a text input (called a prompt) and generate a syntactically correct output (called a completion).

-

In effect, the “magic” of the model is that it has the ability to string a coherent sentence together.

-

This ability doesn’t imply any “knowledge” or “intelligence” on the part of the model; just a large vocabulary and the ability to generate meaningful sequences of words.

-

What makes a large language model like GPT-4 so powerful however, is the sheer volume of data with which it has been trained (public and licensed data from the Internet) and the complexity of the network.

-

This enables the model to generate completions that are based on the relationships between words in the vocabulary on which the model was trained; often generating output that is indistinguishable from a human response to the same prompt.

3. What is Azure OpenAI?

Azure OpenAI Service is Microsoft’s cloud solution for deploying, customizing, and hosting large language models, which is a result of the partnership between Microsoft and OpenAI. The service combines Azure’s enterprise-grade capabilities with OpenAI’s generative AI model capabilities. [3][4]

Azure OpenAI is available for Azure users and consists of four components:

-

Pre-trained generative AI models

-

Customization capabilities; the ability to fine-tune AI models with your own data

-

Built-in tools to detect and mitigate harmful use cases so users can implement AI responsibly

-

Enterprise-grade security with role-based access control (RBAC) and private networks

Azure OpenAI Service provides REST API access to OpenAI’s powerful language models which can be easily adapted to specific task including but not limited to content generation, summarization, image understanding, semantic search, and natural language to code translation. Users can access the service through REST APIs, Python SDK, or web-based interface in the Azure OpenAI Studio. [6]

3.1. Models

Azure OpenAI supports many models that can serve different needs. These models include:

-

GPT-4 models are the latest generation of generative pretrained (GPT) models that can generate natural language and code completions based on natural language prompts.

The latest most capable Azure OpenAI models, GPT-4 Turbo, is a large multimodal model (accepting text or image inputs and generating text) that can solve difficult problems with greater accuracy than any of OpenAI’s previous models. [5]

-

GPT 3.5 models can generate natural language and code completions based on natural language prompts.

In particular, GPT-35-turbo models are optimized for chat-based interactions and work well in most generative AI scenarios.

-

Embeddings models convert text into numeric vectors, and are useful in language analytics scenarios such as comparing text sources for similarities.

-

DALL-E (/ˈdɑːli/) models are used to generate images based on natural language prompts.

-

Whisper models can be used for speech to text. [5]

-

Text to speech models, currently in preview, can be used to synthesize text to speech. [5]

3.2. Prompts & completions

The completions endpoint is the core component of the API service which provides access to the model’s text-in, text-out interface. Users simply need to provide an input prompt containing the English text command, and the model will generate a text completion. [6]

Here’s an example of a simple prompt and completion:

Prompt: """ count to 5 in a for loop """

Completion: for i in range(1, 6): print(i)

3.3. Tokens

-

Text tokens [6]

Azure OpenAI processes text by breaking it down into tokens. Tokens can be words or just chunks of characters. For example, the word “hamburger” gets broken up into the tokens “ham”, “bur” and “ger”, while a short and common word like “pear” is a single token. Many tokens start with a whitespace, for example “ hello” and “ bye”.

The total number of tokens processed in a given request depends on the length of your input, output and request parameters. The quantity of tokens being processed will also affect your response latency and throughput for the models.

-

Image tokens (GPT-4 Turbo with Vision)

The token cost of an input image depends on two main factors: the size of the image and the detail setting (low or high) used for each image.

3.4. Prompt engineering

The GPT-3, GPT-3.5 and GPT-4 models from OpenAI are prompt-based. With prompt-based models, the user interacts with the model by entering a text prompt, to which the model responds with a text completion. This completion is the model’s continuation of the input text. [6]

While these models are extremely powerful, their behavior is also very sensitive to the prompt, that makes prompt engineering an important skill to develop.

Prompt engineering is a technique that is both art and science, which involves designing prompts for generative AI models, that utilizes in-context learning (zero shot and few shot) and, with iteration, improves accuracy and relevancy in responses, optimizing the performance of the model. [7]

| Note that with the Chat Completion API few-shot learning examples are typically added to the messages array in the form of example user/assistant interactions after the initial system message. [8] |

Prompt construction can be difficult. In practice, the prompt acts to configure the model weights to complete the desired task, but it’s more of an art than a science, often requiring experience and intuition to craft a successful prompt.

3.5. RAG (Retrieval Augmented Generation)

RAG (Retrieval Augmented Generation) is a method that integrates external data into a Large Language Model prompt to generate relevant responses. [7]

-

It is particularly beneficial when using a large corpus of unstructured text based on different topics.

-

It allows for answers to be grounded in the organization’s knowledge base (KB), providing a more tailored and accurate response.

RAG is also advantageous when answering questions based on an organization’s private data or when the public data that the model was trained on might have become outdated, that helps ensure that the responses are always up-to-date and relevant, regardless of the changes in the data landscape.

3.6. Fine-tuning

Fine-tuning, specifically supervised fine-tuning in this context, is an iterative process that adapts an existing large language model to a provided training set in order to improve performance, teach the model new skills, or reduce latency. [7]

3.7. Chat Completions vs. Completions

The Chat Completions format was designed specifically for multi-turn conversations, but can be made similar to the completions format for nonchat scenarios by constructing a request using a single user message. For example, one can translate from English to French with the following completions prompt: [9][10]

Translate the following English text to French: "{text}"And an equivalent chat prompt would be:

[{"role": "user", "content": 'Translate the following English text to French: "{text}"'}]Likewise, the completions API can be used to simulate a chat between a user and an assistant by formatting the input accordingly.

The difference between these APIs is the underlying models that are available in each.

| Model families | API endpoint | |

|---|---|---|

Newer models (2023–) |

gpt-4, gpt-4-turbo-preview, gpt-3.5-turbo |

|

Updated LEGACY models (2023) |

gpt-3.5-turbo-instruct, babbage-002, davinci-002 |

|

OpenAI Chat Completions API

Chat models take a list of messages as input and return a model-generated message as output. Although the chat format is designed to make multi-turn conversations easy, it’s just as useful for single-turn tasks without any conversation. An example Chat Completions API call looks like the following: An example Chat Completions API response looks as follows: To learn more, you can view the full API reference documentation for the Chat API. |

|

Azure OpenAI Chat Completions API

An example Chat Completions API in Azure OpenAI call looks like the following: The response from the API will be similar to the following JSON: To learn more, you can view the full Azure OpenAI Service REST API reference for the Chat API. |

3.8. Code generation

GPT models are able to take natural language or code snippets and translate them into code. The OpenAI GPT models are proficient in over a dozen languages, such as C#, JavaScript, Perl, PHP, and is most capable in Python. [11]

GPT models have been trained on both natural language and billions of lines of code from public repositories. The models are able to generate code from natural language instructions such as code comments, and can suggest ways to complete code functions.

Part of the training data for GPT-3 included programming languages, so it’s no surprise that GPT models can answer programming questions if asked. What’s unique about the Codex model family is that it’s more capable across more languages than GPT models.

OpenAI partnered with GitHub to create GitHub Copilot, which they call an AI pair programmer. GitHub Copilot integrates the power of OpenAI Codex into a plugin for developer environments like Visual Studio Code.

Appendix A: FAQ

A.1. Large Language Model (LLM) Platforms: A Comparison

| Platform | Model Families | Representative Products | Key Features | RAG Functionality | Pros | Cons | Documentation Quality | Supported SDKs |

|---|---|---|---|---|---|---|---|---|

OpenAI |

GPT-n (e.g., GPT-3, GPT-4+) |

ChatGPT |

Text generation, translation, writing different creative text formats, code generation |

Limited (integrations in progress) |

Powerful text generation, user-friendly interface (ChatGPT) |

Limited control over factual accuracy, potential for bias in outputs |

Moderate |

Python, Node.js |

Azure OpenAI |

GPT-n (based on OpenAI) |

Azure OpenAI Service |

Similar to OpenAI’s offerings |

Integrated with Azure AI Search for retrieval-augmented generation (RAG) |

Easy integration with Azure services, access to Microsoft’s computing power |

Limited control over model (based on OpenAI’s offerings), potential for bias in outputs |

Moderate |

Python, Java, C#, JavaScript |

Google AI |

LaMDA, PaLM, T5, Gemini (Bard) |

LaMDA, Gemini (Bard) |

Text generation, translation, question answering, chatbot interactions |

Not publicly available for RAG integration |

Powerful for various tasks (PaLM), focus on conversational abilities (LaMDA, Gemini) |

Limited public access to some models (e.g., PaLM), potential for bias in outputs |

High |

Python, Java |

Meta |

BlenderBot 3, Jurassic-1 Jumbo, Llama |

BlenderBot 3, Llama |

Focus on chatbots, strong performance in benchmarks |

Not currently available |

Promising for chatbots, good benchmark performance |

Limited public information on model capabilities, potential for bias in outputs |

Moderate |

Python (PyTorch Hub) |

Anthropic |

Claude 3 (various models) |

N/A |

Focus on safety and responsible use, multiple models for various tasks |

Not publicly available |

Strong focus on safety and ethical considerations |

Limited public access, early development stage |

Low |

Not publicly available yet |

Alibaba DashScope |

Proprietary models + Third-party models (limited info) |

Tongyi Qianwen, Ali NLG |

Text generation, machine translation, NLP tasks (limited public info) |

Not publicly available |

Focus on domestic market, potential for customization, third-party model support |

Limited transparency on models and capabilities, potential for language bias |

Low (limited public info) |

Java, Python (limited information available) |

Baidu Qianfan |

ERNIE (Wénxīn Yīyán) + Third-party models (limited info) |

Baidu Qianfan (text generation, translation, code generation, chatbot interactions) |

Text generation, translation, code generation, chatbot interactions |

Not directly supported (potential internal solutions for information retrieval) |

Powerful models (WuDao 2.0), user-friendly interface (Qianfan), third-party model support |

Limited public information on RAG implementation, potential for bias in outputs |

Moderate |

Python, Java, Go, Node.js |

Huawei Pangu |

Proprietary models |

(no public product yet) |

Focus on three-layer architecture: foundational LLM, industry-specific models, scenario-specific models |

Not applicable (no public product) |

Focus on customization for specific industries and use cases (based on announcements) |

Limited public information on capabilities, early access might be restricted |

Not applicable (no public product) |

Not applicable (no public product yet) |

A.2. How does RAG work like GPT, Gemini, ERNIE?

RAG (Retrieval-Augmented Generation) differs fundamentally from large language models (LLMs) like GPT, Gemini, ERNIE, and others in its approach to generating text. Here’s a breakdown:

LLMs (GPT, Gemini, ERNIE):

-

Function: LLMs are trained on massive amounts of text data. This allows them to learn complex statistical relationships between words and phrases. When given a prompt or query, they use this knowledge to generate text that is statistically similar to the text they were trained on.

-

Process: Here’s a simplified view of how LLMs work:

-

Input: You provide a prompt or question.

-

Internal Representation: The LLM converts the input into an internal representation, like a series of numbers.

-

Prediction: The LLM predicts the next word or phrase in the sequence based on the internal representation and its knowledge of language patterns.

-

Output: The LLM continues predicting words or phrases, building a coherent text response based on the prompt or question.

-

-

Focus: LLMs excel at generating different creative text formats, translating languages, writing different kinds of creative content, and answering your questions in an informative way. They rely solely on their internal knowledge base for generating text.

RAG (Retrieval-Augmented Generation):

-

Function: RAG combines retrieval techniques with LLM capabilities. It retrieves relevant information from an external source (like a search engine or document database) and feeds that information to an LLM for text generation.

-

Process: Here’s a simplified view of how RAG works:

-

Input: You provide a prompt or question.

-

Retrieval System: An information retrieval system searches for relevant documents or information based on the prompt.

-

Information Extraction: Key information from the retrieved documents is extracted.

-

Feeding the LLM: The prompt, along with the extracted information, is fed to an LLM.

-

Text Generation: The LLM uses the prompt and extracted information to generate a text response.

-

-

Focus: RAG aims to improve the factual accuracy and grounding of the generated text by incorporating external information. It’s particularly valuable for tasks where access to relevant information is crucial.

Key Differences:

Here’s a table summarizing the key differences:

| Feature | LLM (GPT, Gemini, ERNIE) | RAG |

|---|---|---|

Data Source |

Massive text corpus |

External source (search engine, document database) + LLM’s internal knowledge |

Information Retrieval |

No |

Yes |

Focus |

Statistical similarity, fluency |

Factual accuracy, grounding |

In essence, LLMs are self-contained text generation machines, while RAG leverages external information to enhance the quality of the generated text.

A.3. What’s vector search and embedding?

Feature |

Full-Text Search |

Keyword Search |

Vector Search |

Search Method |

Scans entire document content |

Matches specific keywords |

Uses vector embeddings for semantic similarity |

Strengths |

More comprehensive, finds documents with similar meaning |

Simple, fast |

Finds similar data points even without exact keywords |

Weaknesses |

Less efficient for large datasets, might return irrelevant results |

Misses relevant documents with different phrasing |

Requires complex infrastructure, computationally expensive (large datasets) |

Ideal Use Cases |

Searching large document collections, finding documents related to a topic |

Finding documents with specific terminology |

Efficient search for similar data points (documents, images) based on meaning |

Vector search and embedding are two techniques that work together to efficiently search through large amounts of data, particularly textual data. Here’s a breakdown of each concept:

-

Vector Embedding:

-

Imagine representing data points (like words, documents, images) as points in a high-dimensional space.

-

Vector embedding is the process of converting these data points into numerical vectors that capture their semantic meaning and relationships.

-

These vectors are like unique fingerprints that encode the essence of the data point.

-

Techniques like word2vec, GloVe, and transformers are used to create these embeddings.

-

-

Vector Search:

-

Once you have data points converted into vectors, you can perform vector search.

-

This involves comparing a query vector (an embedding of your search term) to the document vectors in your collection.

-

The documents whose vectors are closest to the query vector are considered the most relevant results.

-

Vector search algorithms like cosine similarity are used to measure the closeness between vectors.

-

Benefits of using vector search and embedding:

-

Efficiency: Compared to traditional keyword search, vector search can find similar data points much faster, especially for large datasets.

-

Semantic understanding: Vector search goes beyond exact keyword matches and retrieves results based on meaning and context.

-

Handling synonyms and variations: Similar words or phrases with different wording will have close vectors, allowing for broader and more relevant searches.

Applications of vector search and embedding:

-

Search engines: Can improve search results by finding semantically similar documents, even if they don’t contain the exact keywords.

-

Recommendation systems: Recommend products, articles, or music similar to what a user has liked in the past.

-

Chatbots and virtual assistants: Understand the user’s intent better and provide more relevant responses.

-

Anomaly detection: Identify data points that deviate significantly from the norm, potentially indicating fraud or errors.

-

Image retrieval: Find similar images based on their content, not just their filenames or captions.

Here’s an analogy to understand it better:

Imagine a library with books on various topics. Traditional keyword search is like looking for a specific book title. Vector search and embedding are like browsing the library by genre or topic. You can find relevant books even if they don’t have the exact keywords you were looking for.

A.3.1. What’s its relationship with LLM, like Gemini or GPT?

LLMs (Large Language Models) like Gemini and GPT-3 are a powerful tool for generating text, translating languages, writing different kinds of creative content, and answering your questions in an informative way. However, they primarily focus on processing and understanding the meaning of text data itself.

Vector search and embedding, on the other hand, are complementary techniques that help LLMs find and retrieve relevant information more efficiently. Here’s how they relate:

-

Finding the data: LLMs are excellent at understanding and processing textual information. But when it comes to finding specific data points within a vast collection, they can be less efficient. This is where vector search comes in.

-

Embeddings bridge the gap: Vector embeddings act as a bridge between the textual world that LLMs understand and the numerical world of vector search. By converting text data (documents, queries) into numerical vectors, vector search algorithms can efficiently find similar data points based on their semantic meaning.

-

LLMs leverage the results: Once a vector search retrieves the most relevant data points (documents, articles, code) based on the query, the LLM can take over. It can process the retrieved information, analyze it in detail and provide a comprehensive answer or complete the task at hand.

Analogy:

Imagine you’re a researcher and you need to find information for a project. LLM is like a highly skilled assistant who understands your research topic and the kind of information you need. But, if the information is scattered across a massive library, your assistant might get overwhelmed searching through everything. Here’s where vector search comes in. It acts like a sophisticated library catalog system that can quickly point you to the most relevant books (data points) based on your research topic. With this curated list, your assistant (LLM) can then delve deeper into those resources and provide you with the insights you need.

In essence, LLMs and vector search/embedding work together to create a more powerful and efficient system for processing information and retrieving relevant data.

A.3.2. What are the popular tools or databases that support Vector Search?

Here are some popular tools and databases that support Vector Search:

Vector Databases:

-

Pinecone: A managed vector database service with a focus on ease of use and scalability. It offers a user-friendly interface and integrates well with various machine learning frameworks.

-

Milvus: An open-source vector database known for its high performance and flexibility. It supports various similarity search algorithms and offers features like multilingual search and data partitioning.

-

Weaviate: An open-source vector database that allows you to store not only vector embeddings but also the original data objects. This can be helpful for tasks like visualizing search results or managing metadata.

-

Faiss (Facebook AI Similarity Search): A popular open-source library for efficient similarity search on GPUs and CPUs. While not a full-fledged database itself, Faiss is often used as the underlying engine for vector search functionalities within other tools.

-

MongoDB Atlas Vector Search: This is a managed vector search offering built on top of the popular MongoDB database platform. It allows you to leverage MongoDB’s existing functionalities for data storage and management alongside vector search capabilities.

Libraries and Tools:

-

ScaNN (Scalable Nearest Neighbors): An open-source library by Google Research that offers efficient and scalable algorithms for approximate nearest neighbor search. It’s a good option for large-scale datasets where exact similarity might not be crucial.

-

Annoy (Approximate Nearest Neighbors Optimized for Yandex): Another open-source library offering approximate nearest neighbor search functionality. It’s known for its memory efficiency and can be a good choice for resource-constrained environments.

Remember, this is not an exhaustive list, and new tools and databases are emerging all the time. It’s always a good idea to research and compare different options based on your specific requirements.

A.3.3. I’m new to search engine. show the learn path about vector search.

Learning Path for Vector Search Beginners

Welcome to the world of vector search! Here’s a roadmap to guide you through the basics and get you started with this exciting technology.

Step 1: Grasp the Fundamentals

-

Understand Text Search Limitations: Traditional search engines rely on keyword matching, which can be limiting. Start by understanding the challenges of keyword-based search, especially when dealing with synonyms, context, and variations in phrasing.

-

Demystify Vector Embeddings: These are the magic behind vector search! They’re numerical representations of data (text, images) that capture their meaning and relationships. Explore concepts like word2vec, GloVe, and transformers, which are techniques used to create embeddings. Resources like https://jalammar.github.io/illustrated-word2vec/ or https://nlp.stanford.edu/projects/glove/ can provide a good introduction.

Step 2: Deep Dive into Vector Search

-

Explore the Core Concepts: Learn how vector search works! This involves converting queries and data points into vectors, then using algorithms like cosine similarity to find the closest matches. Resources like https://learn.microsoft.com/en-us/azure/search/vector-search-overview or https://learn.microsoft.com/en-us/azure/search/vector-search-overview offer good explanations.

-

Benefits and Applications: Discover the advantages of vector search over traditional methods. See how it’s used in search engines, recommendation systems, chatbots, anomaly detection, and image retrieval. Articles like https://www.algolia.com/blog/ai/what-is-vector-search/ or https://engineering.fb.com/2017/03/29/data-infrastructure/faiss-a-library-for-efficient-similarity-search/ can be helpful.

Step 3: Explore Tools and Techniques

-

Popular Vector Search Tools: Investigate some of the leading tools and databases that support vector search. This includes Pinecone, Milvus, Weaviate, Faiss, and MongoDB Atlas Vector Search. https://www.pinecone.io/ offers a good starting point.

-

Experiment with Libraries: If you’re comfortable with coding, try out open-source libraries like ScaNN (Scalable Nearest Neighbors) or Annoy (Approximate Nearest Neighbors Optimized for Yandex). They provide efficient algorithms for vector search. Tutorials on these libraries can be found online.

Step 4: Practice and Refine

-

Find a Project: Apply your newfound knowledge! Look for a small project where you can experiment with vector search. This could be building a simple search engine for documents, a recommendation system for movies, or a chatbot with improved understanding.

-

Refine your Skills: As you work on your project, keep learning and exploring. There are many online resources, tutorials, and communities dedicated to vector search. Don’t hesitate to seek help and feedback from experienced users.

Additional Resources:

-

Books: "Deep Learning with Python" by Francois Chollet (Chapter 18 covers embeddings)

-

Online Courses: Platforms like Coursera, edX, and Udacity might offer courses related to vector search or information retrieval.

-

Blogs: Many companies and research institutions working on vector search publish informative blog posts. Look for blogs from Pinecone, Milvus, Facebook AI, and Google AI.

Remember, this is a beginner-friendly path, and you can adjust it based on your interests and pace. The key is to start with the basics, explore the practical applications, and don’t be afraid to experiment!

A.3.4. Should I also need to learn ML and DL?

Whether you need to learn Machine Learning (ML) and Deep Learning (DL) to delve into Large Language Models (LLMs) depends on your specific goals:

If you want to use LLMs:

-

Basic understanding is helpful: Familiarizing yourself with core ML concepts like training data, evaluation metrics, and different types of models will be beneficial. You’ll encounter these terms when using or interacting with LLMs.

-

No need to be an expert: You don’t necessarily need to become an ML or DL expert to utilize LLMs for various tasks like text generation, translation, or writing different creative content formats. Many user-friendly LLM APIs and tools are available that don’t require in-depth knowledge of the underlying algorithms.

If you want to build or modify LLMs:

-

ML & DL are crucial: Understanding ML and DL is essential if you want to develop or customize LLMs. These fields provide the foundation for training and fine-tuning LLM models, as well as exploring cutting-edge research areas.

Learning Path:

Here’s a potential learning path based on your goals:

-

Focus on using LLMs: Start with a basic understanding of ML through online resources, tutorials, or introductory courses. This will give you a good foundation for working with LLMs and interpreting their outputs.

-

Aiming to build or modify LLMs: Invest time in learning ML and DL. Numerous online courses, textbooks, and tutorials can equip you with the necessary skills.

Additional Resources:

-

Machine Learning Crash Course by Google: https://developers.google.com/machine-learning/crash-course (Great introduction to ML concepts)

-

Fast.ai Practical Deep Learning for Coders: https://course.fast.ai/ (Practical approach to learning DL with coding exercises)

-

Deep Learning Book by Ian Goodfellow, Yoshua Bengio, and Aaron Courville: https://www.deeplearningbook.org/ (Comprehensive textbook for in-depth DL knowledge)

Remember, the field of AI, ML, and LLMs is constantly evolving. Start with manageable goals and gradually expand your knowledge as you gain experience and confidence!

A.3.5. Papers [Gemini]

There are many research papers that can deepen your understanding of Large Language Models (LLMs). Here’s a breakdown of how to approach learning about LLMs through research papers:

Start with Foundational Papers:

-

Attention Is All You Need (Vaswani et al., 2017): This groundbreaking paper introduced the Transformer architecture, a core building block for many modern LLMs.

-

A Primer on Neural Language Processing (Jurafsky & Martin, 2014): This comprehensive textbook provides a solid foundation in NLP concepts essential for understanding LLMs.

Explore Specific LLM Implementations:

-

GPT-3: Language Models are Few-Shot Learners (Brown et al., 2020): This paper describes the capabilities and training methods behind GPT-3, a highly influential LLM.

-

PaLM: Scaling Language Models with Pathways (Chowdhery et al., 2022): This paper introduces PaLM, a powerful LLM from Google AI, focusing on its training methodology and capabilities.

Delve into Specific LLM Applications:

-

Learning to Code with Large Language Models (Chen et al., 2021): This paper explores how LLMs can be used for code generation and programming tasks.

-

Reasoning with Large Language Models (McCoy et al., 2022): This paper delves into the challenges and potential of LLMs for reasoning and logical tasks.

Finding Resources:

These are just a few examples, and the specific papers you choose will depend on your interests and goals. Here are some resources to help you find relevant research papers:

-

ArXiv: A leading repository for pre-print research papers in various fields, including NLP and AI (arxiv.org)

-

Google Scholar: A powerful search engine for academic publications (scholar.google.com)

-

AI Open Access Papers with Code (The A List): A curated list of influential AI papers with their corresponding code (allenai.org)

Additional Tips:

-

Start with introductory papers: Begin with foundational papers to establish a solid understanding of core NLP concepts.

-

Explore blog posts and summaries: Many blogs and websites provide summaries or explanations of research papers, making them easier to digest.

-

Join online communities: Engage in discussions and get recommendations from other researchers or enthusiasts in online forums or communities.

Remember, the field of LLMs is evolving rapidly, so staying updated with current research is crucial. Enjoy your exploration of the fascinating world of LLMs!

A.3.6. How to read a paper? [Gemini]

Here’s a breakdown of how to effectively approach reading a research paper, particularly in the field of AI and Large Language Models (LLMs):

Preparation:

-

Identify your goal: Before diving in, clarify your purpose for reading the paper. Are you aiming for a general understanding, specific details, or critical evaluation? This will guide your focus while reading.

-

Gather background knowledge: Familiarize yourself with the general area of research. Skim the introduction or abstract to understand the paper’s topic and its contribution to the field. Consider searching for related blog posts or introductory articles for basic concepts.

Active Reading:

-

Start with the abstract and introduction: The abstract provides a concise overview of the paper’s purpose, methodology, key findings, and conclusions. The introduction elaborates on the context, motivation, and research question(s) addressed in the paper.

-

Survey the structure: Most research papers follow a similar format: Introduction, Related Work, Methodology, Results, Discussion, Conclusion, and References. Skimming these sections gives you a roadmap of the paper’s content.

-

Focus on key sections: Depending on your goal, prioritize specific sections. For a general understanding, focus on the Introduction, Results, and Conclusion. For a deeper dive, delve into the Methodology section to understand how the research was conducted.

-

Engage with the content: Don’t just passively read. Take notes, highlight important points, and write down any questions or confusions you have.

Critical Analysis:

-

Evaluate the methodology: Consider the data used, the training approach, and the evaluation metrics. Are they appropriate for the research question? Are there any limitations or biases to be aware of?

-

Analyze the results: Pay close attention to the figures, tables, and data visualizations. Do the results support the conclusions? Are there alternative explanations or interpretations?

-

Consider the broader context: How does this research contribute to the field? Does it align with existing knowledge or challenge current understanding? Are there any ethical implications to consider?

Additional Tips:

-

Use online resources: Many research papers are accompanied by supplementary materials like code, datasets, or presentations. Utilize these resources to gain a deeper understanding.

-

Consult online communities: Engage in discussions about the paper in online forums or communities related to AI or LLMs. This can help clarify concepts and gain different perspectives.

-

Don’t be afraid to re-read: Complex research papers often require multiple readings to fully grasp the content. Don’t hesitate to revisit confusing sections or consult online resources for clarification.

By following these steps and actively engaging with the material, you can effectively read and understand research papers in the field of AI and LLMs, even if you’re new to the subject. Remember, it’s a journey of exploration and learning!

References

-

[1] https://learn.microsoft.com/en-us/training/modules/get-started-ai-fundamentals/

-

[2] https://learn.microsoft.com/en-us/training/modules/fundamentals-generative-ai/

-

[3] https://learn.microsoft.com/en-us/training/modules/explore-azure-openai/

-

[4] https://learn.microsoft.com/en-us/training/modules/fundamentals-generative-ai/4-azure-openai

-

[5] https://learn.microsoft.com/en-us/azure/ai-services/openai/concepts/models

-

[6] https://learn.microsoft.com/en-us/azure/ai-services/openai/overview

-

[7] https://learn.microsoft.com/en-us/azure/ai-services/openai/concepts/customizing-llms

-

[8] https://learn.microsoft.com/en-us/azure/ai-services/openai/concepts/prompt-engineering

-

[9] https://learn.microsoft.com/en-us/azure/ai-services/openai/how-to/chatgpt

-

[10] https://platform.openai.com/docs/guides/text-generation/chat-completions-vs-completions

-

[11] https://learn.microsoft.com/en-us/training/modules/explore-azure-openai/6-understand-openai-code-generation

-

[12] https://en.wikipedia.org/wiki/Generative_artificial_intelligence