SQL (pronounced /ˌɛsˌkjuˈɛl/ S-Q-L; or alternatively as /ˈsiːkwəl/ "sequel") stands for Structured Query Language, which is both an ANSI and ISO standard language that was designed to query and manage data in relational database management systems (RDBMSs).

An RDBMS is a database management system based on the relational model (a semantic model for representing data), which in turn is based on two mathematical branches: set theory and predicate logic.

| "NULL marker" or just "NULL" (/nʌl/) is not a NULL value but rather a marker for a missing value. |

SQL comprises several sub-languages for managing different aspects of a database:

-

Data Definition Language (DDL) defines and manages the structure of database objects with statements such as

CREATE,ALTER, andDROP. -

Data Manipulation Language (DML) retrieves and modifies data using statements like

SELECT,INSERT,UPDATE,DELETE, andMERGE. -

Data Control Language (DCL) manages data access and user permissions through statements such as

GRANTandREVOKE. -

Transaction Control Language (TCL) controls the lifecycle of transactions with statements like

COMMITandROLLBACK.

Microsoft provides T-SQL as a dialect of, or an extension to, SQL in SQL Server—its on-premises RDBMS flavor, and in Azure SQL and Azure Synapse Analytics—its cloud-based RDBMS flavors.

T-SQL is based on standard SQL, but it also provides some nonstandard or proprietary extensions. Moreover, T-SQL does not implement all of standard SQL.

To run T-SQL code against a database, a client application needs to connect to a SQL Server instance and be in the context of, or use, the relevant database.

-

In both SQL Server and Azure SQL Managed Instance, the application can still access objects from other databases by adding the database name as a prefix.

-

Azure SQL Database does not support cross-database/three-part name queries.

SQL Server supports a feature called contained databases that breaks the connection between a database user and an instance-level login.

-

The user (Windows or SQL authenticated) is fully contained within the specific database and is not tied to a login at the instance level.

-

When connecting to SQL Server, the user needs to specify the database to connect, and the user cannot subsequently switch to other user databases.

Unless specified otherwise, all T-SQL references to the name of a database object can be a four-part name in the following form:

-- Machine -> * Servers (instances) -> * Databases -> * Schemas -> * Tables, * Views

server_name.[database_name].[schema_name].object_name

| database_name.[schema_name].object_name

| schema_name.object_name

| object_name- 1. Data Integrity

- 2. Logical Query Processing

- 3. Predicates and Operators

- 4. Query Tuning

- 4.1. SQL Server Internals

- 4.2. Data Retrieval Strategies

- 4.2.1. Unordered Clustered Index Scan or Table Scan

- 4.2.2. Unordered Covering Nonclustered Index Scan

- 4.2.3. Ordered Clustered Index Scan

- 4.2.4. Ordered Covering Nonclustered Index Scan

- 4.2.5. Nonclustered Index Seek + Range Scan + Lookups

- 4.2.6. Unordered Nonclustered Index Scan + Lookups

- 4.2.7. Clustered Index Seek + Range Scan

- 4.2.8. Covering Nonclustered Index Seek + Range Scan

- 4.3. Cardinality Estimates

- 4.4. Join Algorithms

- 4.5. Tied Rows and Sorting

- 4.6. Execution Plans Analysis

- 5. Joins

- 6. Subqueries

- 7. Table Expressions

- 8. UNION, UNION ALL, INTERSECT, and EXCEPT

- 9. Data Analysis

- 10. INSERT, DELETE, TRUNCATE, UPDATE, and MERGE

- 11. System-Versioned Temporal Tables

- 12. Transactions and Concurrency

- 13. Programmable Objects

- 14. JSON

- 15. Vectors and embeddings

- Appendix A: Data Types

- References

1. Data Integrity

SQL provides several mechanisms for enforcing data integrity:

-

PRIMARY KEYconstraint -

FOREIGN KEYconstraint with actions likeCASCADE,SET NULL,RESTRICT -

NOT NULLconstraint -

CHECKconstraint -

UNIQUEconstraint -

DEFAULTconstraint -

Triggers

-

Stored procedures

USE TSQLV6;

DROP TABLE IF EXISTS dbo.Employees;

CREATE TABLE dbo.Employees (

empid INT NOT NULL,

firstname VARCHAR(30) NOT NULL,

lastname VARCHAR(30) NOT NULL,

hiredate DATE NOT NULL,

mgrid INT NULL,

ssn VARCHAR(20) NOT NULL,

salary MONEY NOT NULL

);1.1. PRIMARY KEY

A primary key constraint enforces the uniqueness of rows and also disallows NULLs in the constraint attributes.

-

Each unique combination of values in the constraint attributes can appear only once in the table—in other words, only in one row.

-

An attempt to define a primary key constraint on a column that allows NULLs will be rejected by the RDBMS.

-

Each table can have only one primary key.

ALTER TABLE dbo.Employees

ADD CONSTRAINT PK_Employees

PRIMARY KEY (empid);To enforce the uniqueness of the logical primary key constraint, SQL Server will create a unique index behind the scenes.

-

A unique index is a physical object used by SQL Server to enforce uniqueness.

-

Indexes (not necessarily unique ones) are also used to speed up queries by avoiding sorting and unnecessary full table scans (similar to indexes in books).

1.2. UNIQUE

A unique constraint enforces the uniqueness of rows, allowing to implement the concept of alternate keys from the relational model in a database.

Unlike with primary keys, multiple unique constraints can be defined within the same table.

Also, a unique constraint is not restricted to columns defined as NOT NULL.

ALTER TABLE dbo.Employees

ADD CONSTRAINT UNQ_Employees_ssn

UNIQUE(ssn);For the purpose of enforcing a unique constraint, SQL Server handles NULLs just like non-NULL values.

-

Consequently, for example, a single-column unique constraint allows only one NULL in the constrained column.

However, the SQL standard defines NULL-handling by a unique constraint differently, like so: “A unique constraint on T is satisfied if and only if there do not exist two rows R1 and R2 of T such that R1 and R2 have the same non-NULL values in the unique columns.”

-

In other words, only the non-NULL values are compared to determine whether duplicates exist.

-

Consequently, a standard single-column unique constraint would allow multiple NULLs in the constrained column.

1.3. FOREIGN KEY

A foreign key enforces referential integrity.

-

It is defined on one or more attributes in what’s called the referencing table and points to candidate key (primary key or unique constraint) attributes in what’s called the referenced table.

-

Note that the referencing and referenced tables can be one and the same.

-

The foreign key’s purpose is to restrict the values allowed in the foreign key columns to those that exist in the referenced columns.

DROP TABLE IF EXISTS dbo.Orders;

CREATE TABLE dbo.Orders (

orderid INT NOT NULL,

empid INT NOT NULL,

custid VARCHAR(10) NOT NULL,

orderts DATETIME2 NOT NULL,

qty INT NOT NULL,

CONSTRAINT PK_Orders

PRIMARY KEY (orderid)

);-- enforce an integrity rule that restricts the values supported by the empid column in the Orders table to the values that exist in the empid column in the Employees table.

ALTER TABLE dbo.Orders

ADD CONSTRAINT FK_Orders_Employees

FOREIGN KEY(empid)

REFERENCES dbo.Employees(empid);-- restrict the values supported by the mgrid column in the Employees table to the values that exist in the empid column of the same table.

ALTER TABLE dbo.Employees

ADD CONSTRAINT FK_Employees_Employees

FOREIGN KEY(mgrid)

REFERENCES dbo.Employees(empid);| Note that NULLs are allowed in the foreign key columns (mgrid in the last example) even if there are no NULLs in the referenced candidate key columns. |

1.4. CHECK

A check constraint is used to define a predicate that a row must meet to be entered into the table or to be modified.

ALTER TABLE dbo.Employees

ADD CONSTRAINT CHK_Employees_salary

CHECK(salary > 0.00);| Note that a check constraint rejects an attempt to insert or update a row when the predicate evaluates to FALSE. The modification will be accepted when the predicate evaluates to either TRUE or UNKNOWN. |

1.5. DEFAULT

A default constraint is associated with a particular attribute.

-

It’s an expression that is used as the default value when an explicit value is not specified for the attribute when inserting a row.

ALTER TABLE dbo.Orders

ADD CONSTRAINT DFT_Orders_orderts

DEFAULT(SYSDATETIME()) FOR orderts;When done, run the following code for cleanup:

DROP TABLE IF EXISTS dbo.Orders, dbo.Employees;2. Logical Query Processing

The logical query processing in standard SQL defines how a query should be processed and the final result achieved.

(5) SELECT (5-2) DISTINCT (7) TOP(<top_specification>) (5-1) <select_list>

(1) FROM (1-J) <left_table> <join_type> JOIN <right_table> ON <on_predicate>

| (1-A) <left_table> <apply_type> APPLY <right_input_table> AS <alias>

| (1-P) <left_table> PIVOT(<pivot_specification>) AS <alias>

| (1-U) <left_table> UNPIVOT(<unpivot_specification>) AS <alias>

(2) WHERE <where_predicate>

(3) GROUP BY <group_by_specification>

(4) HAVING <having_predicate>

(6) ORDER BY <order_by_list>

(7) OFFSET <offset_specification> ROWS FETCH NEXT <fetch_specification> ROWS ONLY;-

The database engine is free to physically process a query differently by rearranging processing phases, as long as the final result would be the same as that dictated by logical query processing.

-

The database engine’s query optimizer can—and in fact, often does—apply many transformation rules and shortcuts in the physical processing of a query as part of query optimization.

USE TSQLV6;

SELECT empid, YEAR (orderdate) AS orderyear, COUNT(*) AS numorder

FROM Sales.Orders

WHERE custid = 71

GROUP BY empid, YEAR (orderdate)

HAVING COUNT(*) > 1

ORDER BY empid, orderyear;

If an identifier is irregular—for example, if it has embedded spaces or special characters, starts with a digit, or is a reserved keyword—it must be delimited. There are a couple of ways to delimit identifiers in T-SQL. One is the standard SQL form using double quotes—for example, "Order Details". Another is the T-SQL- specific form using square brackets—for example, [Order Details].

|

In most programming languages, the lines of code are processed in the order that they are written. In SQL, things are different. Even though the SELECT clause appears first in the query, it is logically processed almost last. The clauses are logically processed in the following order:

FROM Sales.Orders

WHERE custid = 71

GROUP BY empid, YEAR(orderdate)

HAVING COUNT(*) > 1

SELECT empid, YEAR(orderdate) AS orderyear, COUNT(*) AS numorders

ORDER BY empid, orderyear

FROM → WHERE → GROUP BY → HAVING → SELECT → Expressions → DISTINCT → ORDER BY → TOP/OFFSET-FETCH

|

2.1. FROM

The FROM clause is the very first query clause that is logically processed, which is used to specify the names of the tables to query and table operators that operate on those tables.

FROM Sales.Orders2.2. WHERE

In the WHERE clause, a predicate, or logical expression is specified to filter the rows returned by the FROM phase.

FROM Sales.Orders

WHERE custid = 71

T-SQL uses three-valued predicate logic, where logical expressions can evaluate to TRUE, FALSE, or UNKNOWN. With three-valued logic, saying “returns TRUE” is not the same as saying “does not return FALSE.” The WHERE phase returns rows for which the logical expression evaluates to TRUE, and it doesn’t return rows for which the logical expression evaluates to FALSE or UNKNOWN.

|

2.3. GROUP BY

The GROUP BY phase is used to arrange the rows returned by the previous logical query processing phase in groups determined by the elements, or expressions.

FROM Sales.Orders

WHERE custid = 71

GROUP BY empid, YEAR(orderdate)-

If the query is a grouped query, all phases subsequent to the

GROUP BYphase— includingHAVING,SELECT, andORDER BY—operate on groups as opposed to operating on individual rows. -

Each group is ultimately represented by a single row in the final result of the query.

-

All expressions specified in clauses that are processed in phases subsequent to the

GROUP BYphase are required to guarantee returning a scalar (single value) per group.SELECT empid, YEAR(orderdate) AS orderyear, freight -- sum(freight) AS totalfreight FROM Sales.Orders WHERE custid = 71 GROUP BY empid, YEAR(orderdate);Msg 8120, Level 16, State 1, Line 1 Column 'Sales.Orders.freight' is invalid in the select list because it is not contained in either an aggregate function or the GROUP BY clause. Total execution time: 00:00:00.016-

Expressions based on elements that participate in the

GROUP BYclause meet the requirement because, by definition, each such element represents a distinct value per group. -

Elements that do not participate in the

GROUP BYclause are allowed only as inputs to an aggregate function such asCOUNT,SUM,AVG,MIN, orMAX.-

Note that all aggregate functions that are applied to an input expression ignore NULLs.

The

COUNT(*)function isn’t applied to any input expression; it just counts rows irrespective of what those rows contain.-

For example, consider a group of five rows with the values

30, 10, NULL, 10, 10in a column calledqty. -

The expression

COUNT(*)returns5because there are five rows in the group, whereasCOUNT(qty)returns4because there are four known (non-NULL) values.

-

-

To handle only distinct (unique) occurrences of known values, specify the

DISTINCTkeyword before the input expression to the aggregate function, likeCOUNT(DISTINCT qty),AVG(DISTINCT qty)and so on.

-

-

2.4. HAVING

Whereas the WHERE clause is a row filter, the HAVING clause is a group filter.

-

Only groups for which the

HAVINGpredicate evaluates toTRUEare returned by theHAVINGphase to the next logical query processing phase. -

Groups for which the predicate evaluates to

FALSEorUNKNOWNare discarded. -

The

HAVINGclause is processed after the rows have been grouped, so aggregate functions can be referred to in theHAVINGfilter predicate.SELECT empid, YEAR(orderdate) AS orderyear, SUM(freight) AS totalfreight FROM Sales.Orders WHERE custid = 71 GROUP BY empid, YEAR(orderdate) -- filters only groups (employee and order year) with more than one row, and total freight with more than 500.0 HAVING COUNT(*) > 1 AND SUM(freight) > 500.0 ORDER BY empid, YEAR(orderdate)1 2021 711.13 2 2022 672.16 4 2022 651.83 6 2021 628.31 7 2022 1231.56

2.5. SELECT

The SELECT clause is where to specify the attributes (columns) to return in the result table of the query.

SELECT empid, YEAR(orderdate) AS orderyear, COUNT(*) AS numorders

FROM Sales.Orders

WHERE custid = 71

GROUP BY empid, YEAR(orderdate)

HAVING COUNT(*) > 1-

The

SELECTclause is processed after theFROM,WHERE,GROUP BY, andHAVINGclauses, which means that aliases assigned to expressions in theSELECTclause do not exist as far as clauses that are processed before theSELECTclause are concerned.It’s a typical mistake to try and refer to expression aliases in clauses that are processed before the SELECT clause, such as in the following example in which the attempt is made in the WHERE clause:

SELECT orderid, YEAR(orderdate) AS orderyear FROM Sales.Orders WHERE orderyear > 2021;Msg 207, Level 16, State 1, Line 3 Invalid column name 'orderyear'.One way around this problem is to repeat the expression

YEAR(orderdate)in both theWHEREandSELECTclauses:SELECT orderid, YEAR(orderdate) AS orderyear FROM Sales.Orders WHERE YEAR(orderdate) > 2021;

In addition to supporting the AS clause, T-SQL supports the form <expression> AS <alias>, and also supports the forms <alias> = <expression> (“alias equals expression”) and <expression> <alias> (“expression space alias”).

|

|

In relational theory, a relational expression is applied to one or more input relations using operators from relational algebra, and returns a relation as output, that is, a relation in SQL is a table, and a relational expression in SQL is a table expression. |

|

Recall that a relation’s body is a set of tuples, and a set has no duplicates. Unlike relational theory, which is based on mathematical set theory, SQL is based on multiset theory.

|

2.6. ORDER BY

In terms of logical query processing, ORDER BY comes after SELECT.

-

With T-SQL, elements can also be specified in the

ORDER BYclause that do not appear in theSELECTclause, meaning to sort by something that don’t necessarily want to be returned.SELECT empid, firstname, lastname, country FROM HR.Employees ORDER BY hiredate;SELECT empid, firstname, lastname, country FROM HR.Employees ORDER BY CASE country WHEN 'USA' THEN 1 WHEN 'CHN' THEN 2 WHEN 'JPN' THEN 3 WHEN 'DEU' THEN 4 WHEN 'CAN' THEN 5 WHEN 'KOR' THEN 6 ELSE 7 END, empid; -- tie-breaker -

However, when the

DISTINCTclause is specified, theORDER BYare restricted to list only elements that appear in theSELECTlist.SELECT DISTINCT empid, firstname, lastname, country FROM HR.Employees ORDER BY hiredate;Msg 145, Level 15, State 1, Line 1 ORDER BY items must appear in the select list if SELECT DISTINCT is specified. -

ASCis the default sort order.NULLvalues are treated as the lowest possible values.

|

One of the most important points to understand about SQL is that a table—be it an existing table in the database or a table result returned by a query—has no guaranteed order. That’s because a table is supposed to represent a set of rows (or multiset, if it has duplicates), and a set has no order.

|

2.7. TOP

The TOP filter is a proprietary T-SQL feature that can be used to limit the number or percentage of rows queried returns. It relies on two elements as part of its specification: one is the number or percent of rows to return, and the other is the ordering.

SELECT TOP (5)

orderid, orderdate, custid, empid

FROM Sales.Orders

ORDER BY orderdate DESC;

Note that the TOP filter is handled after DISTINCT.

|

The TOP can use option with the PERCENT keyword, in which case SQL Server calculates the number of rows to return based on a percentage of the number of qualifying rows, rounded up.

SELECT TOP (1) PERCENT

orderid, orderdate, custid, empid

FROM Sales.Orders

ORDER BY orderdate DESC;The query returns nine rows because the Orders table has 830 rows, and 1 percent of 830, rounded up, is 9.

11074 2022-05-06 73 7

11075 2022-05-06 68 8

11076 2022-05-06 9 4

11077 2022-05-06 65 1

11070 2022-05-05 44 2

11071 2022-05-05 46 1

11072 2022-05-05 20 4

11073 2022-05-05 58 2

11067 2022-05-04 17 1In the above query, notice that the ORDER BY list is not unique (because no primary key or unique constraint is defined on the orderdate column).

-

In other words, the ordering is not strict total ordering. Multiple rows can have the same order date.

-

In such a case, the ordering among rows with the same order date is undefined, which makes the query nondeterministic—more than one result can be considered correct.

-

In case of ties, SQL Server filters rows based on optimization choices and physical access order.

-

Note that when using the TOP filter in a query without an

ORDER BYclause, the ordering is completely undefined—SQL Server returns whichevernrows it happens to physically access first, wherenis the requested number of rows. -

To make the query be deterministic, a strict total ordering is needed; in other words, add a tie-breaker.

SELECT TOP (5) orderid, orderdate, custid, empid FROM Sales.Orders ORDER BY orderdate DESC, orderid DESC; -- the row with the greater order ID value will be preferred.11077 2022-05-06 65 1 11076 2022-05-06 9 4 11075 2022-05-06 68 8 11074 2022-05-06 73 7 11073 2022-05-05 58 2 -

Instead of adding a tie-breaker to the

ORDER BYlist, a request can be made to return all ties by adding theWITH TIESoption.SELECT TOP (5) WITH TIES orderid, orderdate, custid, empid FROM Sales.Orders ORDER BY orderdate DESC;-

SQL Server first returned the

TOP (5)rows based onorderdateDESCordering, and it also returned all other rows from the table that had the same orderdate value as in the last of the five rows that were accessed. -

Using the

WITH TIESoption, the selection of rows is deterministic, but the presentation order among rows with the same order date isn’t.11077 2022-05-06 65 1 11076 2022-05-06 9 4 11075 2022-05-06 68 8 11074 2022-05-06 73 7 11073 2022-05-05 58 2 11072 2022-05-05 20 4 11071 2022-05-05 46 1 11070 2022-05-05 44 2

-

The TOP filter is very useful, but it has two shortcomings—it’s not standard, and it doesn’t support a skipping capability.

|

2.8. OFFSET-FETCH

T-SQL also supports a standard, TOP-like filter, called OFFSET-FETCH, which does support a skipping option, which makes it very useful for paging purposes.

According to the SQL standard, the OFFSET-FETCH filter is considered an extension to the ORDER BY clause. With the OFFSET clause indicates how many rows to skip, and with the FETCH clause indicates how many rows to filter after the skipped rows.

SELECT orderid, orderdate, custid, empid

FROM Sales.Orders

ORDER BY orderdate, orderid

OFFSET 50 ROWS FETCH NEXT 25 ROWS ONLY;

-- OFFSET 50 ROWS;

-- OFFSET 0 ROWS FETCH NEXT 25 ROWS ONLY;

Note that a query that uses OFFSET-FETCH must have an ORDER BY clause. Also, contrary to the SQL standard, T-SQL doesn’t support the FETCH clause without the OFFSET clause. However, OFFSET without FETCH is allowed to skip the indicated number of rows and returns all remaining rows in the result.

|

In the syntax for the OFFSET- FETCH filter, the singular and plural forms ROW and ROWS, and the forms FIRST and NEXT are interchangeable to phrase the filter in an intuitive, English-like manner.

=== OVER

|

A window function is a function that, for each row in the underlying query, operates on a window (set) defined with an OVER clause of rows that is derived from the underlying query result, and computes a scalar (single) result value.

SELECT orderid, custid, freight,

ROW_NUMBER() OVER(PARTITION BY custid

ORDER BY freight) AS rownum

FROM Sales.Orders

ORDER BY custid, freight;-

For each row in the underlying query, the

OVERclause exposes to the function a subset of the rows from the underlying query’s result set. -

The

OVERclause can restrict the rows in the window by using an optional window partition clause (PARTITION BY). -

It can define ordering for the calculation (if relevant) using a window order clause (

ORDER BY)—not to be confused with the query’s presentationORDER BYclause.

| Window functions are defined by the SQL standard, and T-SQL supports a subset of the features from the standard. |

2.9. CASE

A CASE expression, based on the SQL standard, is a scalar expression that returns a value based on conditional logic.

Note that CASE is an (scalar) expression and not a statement; that is, it returns a value and it is allowed wherever scalar expressions are allowed, such as in the SELECT, WHERE, HAVING, and ORDER BY clauses and in CHECK constraints.

|

There are two forms of CASE expressions: simple and searched.

-

The simple CASE expression has a single test value or expression right after the

CASEkeyword that is compared with a list of possible values or expressions, in theWHENclauses.-

If no value in the list is equal to the tested value, the

CASEexpression returns the value that appears in theELSEclause (orNULLif anELSEclause is not present).SELECT supplierid, COUNT(*) AS numproducts, CASE COUNT(*) % 2 WHEN 0 THEN 'Even' WHEN 1 THEN 'Odd' ELSE 'Unknown' END AS countparity FROM Production.Products GROUP BY supplierid;

-

-

The searched CASE expression returns the value in the

THENclause that is associated with the firstWHENpredicate that evaluates toTRUE.-

If none of the

WHENpredicates evaluates toTRUE, theCASEexpression returns the value that appears in theELSEclause (orNULLif anELSEclause is not present).SELECT orderid, custid, freight, CASE WHEN freight < 1000.00 THEN 'Less than 1000' WHEN freight <= 3000.00 THEN 'Between 1000 and 3000' WHEN freight > 3000.00 THEN 'More than 3000' ELSE 'Unknown' END AS valuecategory FROM Sales.Orders;

-

3. Predicates and Operators

T-SQL has language elements in which predicates can be specified—for example, query filters such as WHERE and HAVING, the JOIN operator’s ON clause, CHECK constraints, and others.

T-SQL uses three-valued predicate logic, where logical expressions can evaluate to TRUE, FALSE, or UNKNOWN.

|

3.1. Predicates: IN, BETWEEN, LIKE, EXISTS, and IS NULL

-

The

INpredicate is used to check whether a value, or scalar expression, is equal to at least one of the elements in a set.SELECT orderid, empid, orderdate FROM Sales.Orders WHERE orderid IN(10248, 10249, 10250); -

The

BETWEENpredicate is used to to check whether a value falls within a specified range, INCLUSIVE of the two delimiters of the range.SELECT orderid, empid, orderdate FROM Sales.Orders WHERE orderid BETWEEN 10300 AND 10310; -

The

LIKEpredicate is used to check whether a character string value meets a specified pattern.SELECT empid, firstname, lastname FROM HR.Employees WHERE lastname LIKE N'D%';Notice the use of the letter Nto prefix the string'D%';it stands for National and is used to denote that a character string is of a Unicode data type (NCHARorNVARCHAR), as opposed to a regular character data type (CHARorVARCHAR). -

The

EXISTSorNOT EXISTSpredicate is used to test for the presence or absence of rows in a subquery.SELECT custid, companyname FROM Sales.Customers AS C WHERE EXISTS (SELECT * FROM Sales.Orders AS O WHERE O.custid = C.custid); -

The

IS NULLand its oppositeIS NOT NULLpredicates are used to test forNULLvalues.SELECT empid, firstname, lastname, mgrid FROM HR.Employees WHERE mgrid IS NULL;

3.2. Three-Valued Logic (3VL)

SQL uses three-valued logic (3VL), where expressions can evaluate to one of three states: TRUE, FALSE, or NULL (also called UNKNOWN). It is critical to understand that WHERE and HAVING clauses only accept rows where the condition is TRUE, discarding rows that are FALSE or NULL.

The logical operators AND, OR, and NOT behave as follows:

-

NOTOperator:-

NOT TRUEresults inFALSE. -

NOT FALSEresults inTRUE. -

NOT NULLresults inNULL.

-

-

ANDOperator (Pessimistic): It returnsTRUEonly if both sides areTRUE. It is pessimistic because if one side isFALSE, the result isFALSE, even if the other side isNULL.-

TRUE AND NULLresults inNULL. -

FALSE AND NULLresults inFALSE. -

NULL AND NULLresults inNULL.

-

-

OROperator (Optimistic): It returnsTRUEif either side isTRUE. It is optimistic because if one side isTRUE, the result isTRUE, even if the other side isNULL.-

TRUE OR NULLresults inTRUE. -

FALSE OR NULLresults inNULL. -

NULL OR NULLresults inNULL.

-

3.3. Equality and Distinctness

In SQL, the way NULL values are compared depends on the context, leading to two different types of comparison logic:

-

Equality-based Comparison is the standard comparison used in predicates like

WHEREandJOIN ON. It treatsNULLas an unknown value. Because one unknown cannot be said to be equal to another, the expressionNULL = NULLevaluates toUNKNOWN, notTRUE. -

Distinctness-based Comparison is used by operators that need to group rows or find duplicates, such as

GROUP BY,UNION,INTERSECT, andEXCEPT. For these operations, twoNULLvalues are treated as not distinct from each other (i.e., they are considered identical) which ensures that rows withNULLvalues in the same columns are correctly identified as duplicates.The formal SQL standard predicate for this logic is IS [NOT] DISTINCT FROM. It provides a way to compare values while treating twoNULLvalues as equivalent. For example,NULL IS NOT DISTINCT FROM NULLevaluates toTRUE. It is important to note that T-SQL does not support this predicate, even though its set operators use the underlying distinctness logic.

3.4. Comparison Operators: =, >, <, >=, <=, <>, and ALL, SOME, ANY

-

T-SQL supports the comparison operators:

=,>,<,>=,<=,<>,!=,!>, and!<, of which the last three are not standard and should be avoided using.SELECT orderid, empid, orderdate FROM Sales.Orders WHERE orderdate >= '20220101'; -

The

<>(not equal) operator is used to check whether a value is not equal to another value.SELECT orderid, empid, orderdate FROM Sales.Orders WHERE orderdate <> '20220101'; -

The

ALLkeyword is used with a comparison operator to compare a scalar value with every value in a list or result set returned by a subquery. The condition isTRUEif the comparison isTRUEfor all values in the list.-- Example: Find products whose list price is greater than ALL list prices in the 'Road Bikes' category. SELECT Name, ListPrice FROM Production.Product WHERE ListPrice > ALL (SELECT ListPrice FROM Production.Product WHERE ProductSubcategoryID = 1); -

The

SOMEorANYkeyword (they are synonyms) is used with a comparison operator to compare a scalar value with any value in a list or result set returned by a subquery. The condition isTRUEif the comparison isTRUEfor at least one value in the list.-- Example: Find products whose list price is greater than SOME list prices in the 'Mountain Bikes' category. SELECT Name, ListPrice FROM Production.Product WHERE ListPrice > SOME (SELECT ListPrice FROM Production.Product WHERE ProductSubcategoryID = 2);It’s important to distinguish between

NOT INand<> ANY:-

NOT INmeans "not equal to value A AND not equal to value B AND not equal to value C…" -

<> ANYmeans "not equal to value A OR not equal to value B OR not equal to value C…"

For example, if a subquery returns

(1, 2, 3):-

value NOT IN (1, 2, 3)is true ifvalueis not 1 AND not 2 AND not 3. -

value <> ANY (1, 2, 3)is true ifvalueis not 1 OR not 2 OR not 3.

-

3.5. Logical Operators: OR, AND, and NOT

-

The logical operators

OR,AND, andNOTare used to combine logical expressions.SELECT orderid, empid, orderdate FROM Sales.Orders WHERE orderdate >= '20220101' AND empid NOT IN(1, 3, 5);

3.6. Arithmetic Operators: +, -, *, /, and %

-

T-SQL supports the four obvious arithmetic operators:

+,-,*, and/, and also supports the%operator (modulo), which returns the remainder of integer division.SELECT orderid, productid, qty, unitprice, discount, qty * unitprice * (1 - discount) AS val FROM Sales.OrderDetails;Note that the data type of a scalar expression involving two operands is determined in T-SQL by the operand with the higher data-type precedence.

-

If both operands are of the same data type, the result of the expression is of the same data type as well.

-

If the two operands are of different types, the one with the lower precedence is promoted to the one that is higher.

WITH Numbers AS ( SELECT 5 AS IntValue, 2 AS IntDivisor, 5.0 AS FloatValue ) SELECT IntValue / IntDivisor AS IntegerDivisionResult, -- Integer division CAST(IntValue AS NUMERIC(12, 2)) / CAST(IntDivisor AS NUMERIC(12, 2)) AS DecimalDivisionResult, -- Decimal division with casting FloatValue / IntDivisor AS DecimalDivisionFromFloatResult -- Division with a float FROM Numbers;

-

|

The

|

4. Query Tuning

-

To simulate a cold cache scenario for query performance measurement, run a manual checkpoint to write dirty buffers to disk and then drop all clean buffers from cache.

CHECKPOINT; DBCC DROPCLEANBUFFERS;DBCC DROPCLEANBUFFERSshould only be used isolated test environments as it can severely impact server performance. -

To see the estimated plan in SSMS/ADS, highlight the query and clicking the

Display Estimated Execution Plan(Ctrl+L) button on the SQL Editor toolbar. -

To see the actual plan, enable the

Include Actual Execution Plan(Ctrl+M) button and click theExecutebutton (Alt+X) to execute the query with runtime details like actual rows and executions per operator. -

To get execution plans in XML format, use

SET STATISTICS XML ON(actual plan with execution) orSET SHOWPLAN_XML ON(estimated plan without execution). -

To get execution plans in text/tabular format, use

SET STATISTICS PROFILE ON(actual plan with execution) orSET SHOWPLAN_ALL ON(estimated plan without execution).Rows Executes StmtText StmtId NodeId Parent PhysicalOp LogicalOp 1 1 select count(*) from TableName 1 1 0 NULL NULL 0 0 |--Compute Scalar(DEFINE:([Expr1003]=CONVERT_IMPLICIT(int,[Expr1004],0))) 1 2 1 Compute Scalar Compute Scalar 1 1 |--Stream Aggregate(DEFINE:([Expr1004]=Count(*))) 1 3 2 Stream Aggregate Aggregate 12366 1 |--Index Scan(OBJECT:([DatabaseName].[dbo].[TableName].[IX_TableName_Id])) 1 4 3 Index Scan Index Scan -

To get query performance statistics, use

SET STATISTICS IO, TIME ONfor I/O and time information.(1 row affected) Table 'Table1'. Scan count 1, logical reads 81, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0. (4 rows affected) SQL Server Execution Times: CPU time = 0 ms, elapsed time = 2 ms.

4.1. SQL Server Internals

A page is an 8-KB unit where SQL Server stores data. With disk-based tables, the page is the smallest I/O unit that SQL Server can read or write. An extent is a unit that contains eight contiguous pages.

-

A table can be organized in one of two ways—either as a heap or as a B- tree (HOBT), technically as a B-tree when it has a clustered index defined on it and as a heap when it doesn’t.

-

A heap is a table that has no clustered index, which means that the data is laid out as a bunch of pages and extents without any order.

-

SQL Server maps the data that belongs to a heap using one or more bitmap pages called index allocation maps (IAMs).

-

An allocation order scan is a heap scan that uses IAM pages to determine which pages and extents belong to the heap and reads them in physical file order, typically resulting in sequential reads when data is not cached.

-

4.1.1. Indexes

-

Indexes on SQL Server disk-based tables are B-trees (a special case of balanced trees), and their leaf level has a doubly linked list, enabling forward and backward scans of the leaf-level index records (entries).

-

A clustered index is structured as a B-tree, and it maintains the entire table’s data, not a copy, in its leaf level.

-

At the leaf level of the clustered index, the physical order in which data pages are stored on disk may not correspond to the sorted logical order of the index keys due to page splits.

-

If page

xpoints to next pagey, and pageyappears before pagexin the file, pageyis considered an out-of-order page.

-

-

A nonclustered index is also structured as a B-tree, in contrast to a clustered index, a leaf row in a nonclustered index contains only the index key columns and a row locator value representing a particular data row.

-

With the nonclustered index seek or range scan, it is more efficient because because leaf rows store only the key columns and a row locator, so more rows fit per page and fewer pages are read.

If the nonclustered index is covering (e.g., via INCLUDE), leaf rows are wider, but avoiding lookups can still reduce total I/O. -

When using multiple predicates, the order of key columns in a nonclustered index is crucial for performance, as it determines whether qualifying rows are stored contiguously in the index leaf, maximizing seeks and minimizing scans.

-

When have multiple equality predicates, place the columns from the predicates in any order in the index key list.

-

When have at most one range predicate, place the columns from the equality predicates first in the key list and the column from the range predicate last.

-

When have multiple range predicates, place the column from the most selective range predicate before the columns from the remaining range predicates.

-

-

-

An index scan is a scan performed on the leaf level of a B-tree index in the sorted order of the index key, using a doubly linked list for inter-page navigation and a row-offset array for intra-page order, supporting both full ordered scans and range scans.

-

An index scan is necessary when the query filters on a non-leading column of the index key to scan a larger portion of the index (or even the entire index) to find the matching entries.

-

-

An index seek is performed when SQL Server needs to find a certain key or range of keys at the leaf level of the index, then scanning forward or backward within that range.

-

An index seek is possible when the query filters on the leading column (or a prefix of the leading columns) of the index key to navigate the B-tree from the root node down to the specific leaf page(s) containing the matching values.

-

-

In SQL Server, the direction of key columns can be indicated in an index definition (ascending by default).

CREATE UNIQUE NONCLUSTERED INDEX [idx] ON [schema1].[Table1] ( [col1], -- same as [col1] ASC [col2] DESC )-

The storage engine currently processes parallel scans only in the forward direction; backward scans are processed serially.

-

If parallelism is a critical factor in the performance of the query, arrange a descending index.

-

-

A filtered index is an index on a subset of rows from the underlying table defined based on a predicate.

CREATE NONCLUSTERED INDEX idx_USA_orderdate ON Sales.Orders(orderdate) INCLUDE(orderid, custid, requireddate) WHERE shipcountry = N'USA'; -

A covering index is an index that contains all the columns required by the query, avoiding lookups to the base table.

-

A clustered index is a covering index because the leaf row is the complete data row.

-

A nonclustered index can be a covering index with an

INCLUDEclause listing all non-key columns required by the query.CREATE INDEX idx_nc_cid_i_oid_eid_sid_od_flr ON dbo.Orders(custid) INCLUDE(orderid, empid, shipperid, orderdate, filler);

-

-

A columnstore index stores data by columns rather than by rows, which leads to substantial performance advantages for analytical queries.

-

A nonclustered columnstore index is a secondary index created on an existing table that is stored in the traditional rowstore format.

CREATE NONCLUSTERED COLUMNSTORE INDEX idx_nc_cs ON dbo.Fact(key1, key2, key3, measure1, measure2, measure3, measure4); -

A clustered columnstore index is the primary storage for the table, with data physically stored in columnstore format.

CREATE CLUSTERED COLUMNSTORE INDEX idx_cl_cs ON dbo.FactCS;

-

|

A general and highly recommended common naming convention format is: For indexes on multiple columns, separate the column names with underscores, and avoid listing

|

4.1.2. Execution Plans

In SQL Server, the relational engine, like a brain including the optimizer, produces execution plans for queries, while the storage engine, like muscles, carries out these instructions, sometimes choosing the best of several options based on performance and consistency.

-

When the plan shows a table scan operator, the storage engine has only one option: to use an allocation order scan.

-

When the plan shows an ordered index scan operator (clustered or nonclustered), the storage engine can use only an index order scan.

-

When the plan shows an unordered index scan operator, the storage engine has two options to scan the data: allocation order scan and index order scan.

-

An allocation order scan can return multiple occurrences of rows and skip rows resulting from splits that take place during the scan.

-

The storage engine opts for this option when the index size is greater than 64 pages and the request is running under the Read Uncommitted isolation level.

-

When the query is running under the default Read Committed isolation level or higher, the storage engine will opt for an index order scan to prevent such phenomena from happening because of splits.

-

-

An index order scan is safer in the sense that it won’t read multiple occurrences of the same row or skip rows because of splits.

-

If an index key is modified after the row was read by an index order scan and the row is moved to a point in the leaf that the scan hasn’t reached yet, the scan will read the row a second time or never reach that row.

-

It can happen in Read Uncommitted, Read Committed, and even Repeatable Read because the update was done to a row that was not yet read, but cannot happen under the isolation levels Serializable, Read Committed Snapshot, and Snapshot.

-

-

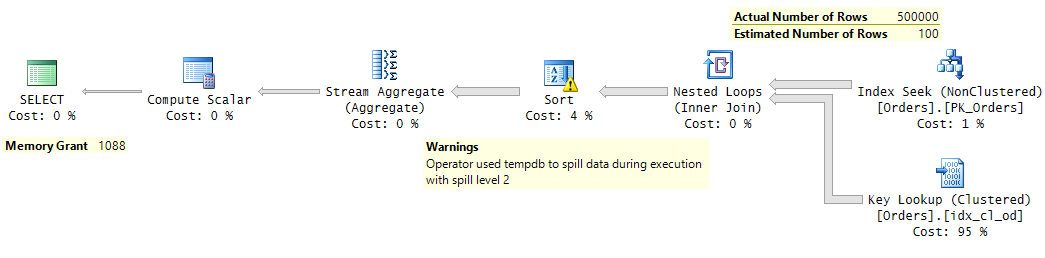

4.1.3. Cardinality Estimates

A query optimizer, the main component in the relational engine (also known as the query processor), is responsible for generating physical execution plans for the queries.

A cardinality estimator, that makes cardinality estimates of the number of rows returned by each operator, is employed by the optimizer to make decisions about access methods, join and aggregation algorithms, and memory allocation for sort and hash operations.

-

It is not a simple task to make accurate cardinality estimations without actually running the query and without a time machine.

-

Underestimations will tend to result in the following (not an exhaustive list):

-

For filters, preferring an index seek and lookups to a scan.

-

For aggregates, joins, and distinct, preferring order-based algorithms to hash-based ones.

-

For sort and hash operations, there might be spills to tempdb as a result of an insufficient memory grant.

-

Preferring a serial plan over a parallel one.

Figure 1. Plan with underestimated cardinality

Figure 1. Plan with underestimated cardinality

-

-

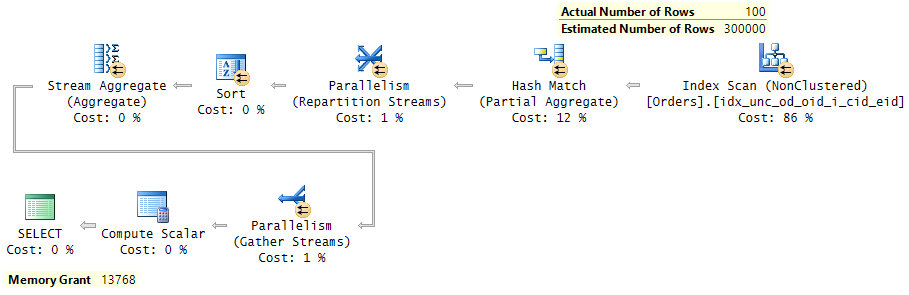

Overestimations will tend to result in pretty much the inverse of underestimations (again, not an exhaustive list):

-

For filters, preferring a scan to an index seek and lookups.

-

For aggregates, joins, and distinct, preferring hash-based algorithms to order-based ones.

-

For sort and hash operations, there won’t be spills, but very likely there will be a larger memory grant than needed, resulting in wasting memory.

-

Preferring a parallel plan over a serial one.

Figure 2. Plan with overestimated cardinality

Figure 2. Plan with overestimated cardinality

-

-

-

SQL Server relies on statistics about the data in its cardinality estimates.

-

Whenever creating an index, SQL Server creates statistics using a full scan of the data.

-

When additional statistics are needed, SQL Server might create them automatically using a sampled percentage of the data.

-

SQL Server creates three main types of statistics: header, density vectors, and a histogram.

-

4.1.4. Parallel Query Execution

Parallel query execution (intraquery parallelism or parallelism) uses multiple processor cores to simultaneously process smaller chunks of data, leveraging modern hardware’s increased computing power for efficient large-data processing.

Parallel processing, splitting work across multiple processor cores, can be implemented using two main models:

-

a factory-line model (where each core performs a single action on data passed between cores) and

-

a stream-based model (where each core processes a subset of data through all required operations).

While the factory-line model might seem intuitively better for human tasks, database systems like SQL Server use stream-based models.

-

Processors can efficiently switch between tasks as long as data is in local cache, and minimizing data movement between memory and storage is crucial for performance.

-

Stream-based models can scale much better than factory-line models with large datasets, distributing rows across cores as evenly as possible using various algorithms for parallel execution of all operations on each subset of data.

A query plan will be either entirely serial—processed using a single worker thread—or it will include one or more parallel branches, which are areas of the plan that are processed using multiple threads.

-

The query processor can merge parallel streams into a single stream or create parallel streams from a single stream, resulting in plans with interleaved serial and parallel zones.

-

All parallel zones in a plan use the same number of threads, known as the degree of parallelism (DOP), determined by server settings, hints, and runtime conditions.

-

A given set of threads might be reused by multiple zones over the course of the plan.

-

Parallel operators in the execution plan are marked with a circle icon with two arrows.

-

Within a parallel zone, each thread processes a unique stream of rows before passing them to the next zone (serial or parallel).

Parallel query plans rely on the Exchange (displayed as Parallelism) operator, which manages worker threads and data streams.

-

Each SQL Server query plan operator has, internally, two logical interfaces: a consumer interface, which takes rows from upstream, and a producer interface, which passes rows downstream.

-

While most operators handle their consumer and producer interfaces on the same thread and process single row streams, Exchange operators involve multiple threads and handle multiple streams, keeping other operators unaware of the parallelism.

-

The number of threads on each side of the exchange depends on the type of exchange:

A query plan can be read right-to-left (data flow) or left-to-right (operator logic). -

Gather Streams operators will have DOP threads on the consumer side and one thread on the producer side.

-

From a data-flow perspective, it merges multiple parallel streams into a single serial stream, marking the end of a parallel zone.

-

From an operator-logic perspective, it starts a parallel zone by invoking parallel worker threads.

-

-

Distribute Streams operators will have one thread on the consumer side and DOP threads on the producer side.

-

From a data-flow perspective, it splits a serial stream into multiple parallel streams, marking the start of a parallel zone.

-

From an operator-logic perspective, it marks the end of a parallel zone.

-

-

Repartition Streams operators will have DOP threads on each side of the exchange.

-

From both data-flow and operator-logic perspectives, it redistributes rows from multiple parallel streams onto different threads based on a new scheme, effectively joining two adjacent parallel zones.

-

-

Parallel query plans use five row distribution strategies across threads on the producer side of Distribute or Repartition exchanges:

-

Hash: Assigns rows to threads based on a hash function, grouping rows with the same hashed value on the same thread (e.g., grouping by ProductID for aggregation).

-

Round Robin: Distributes rows sequentially to each thread in a rotating fashion, often used outside Nested Loops where each row represents independent work.

-

Broadcast: Sends all rows to all threads, used for small row counts when all threads need the complete dataset (e.g., building a hash table).

-

Demand: Producer-side threads receive rows on request, currently used only with aligned partitioned tables.

-

Range: Assigns unique, non-overlapping key ranges to each thread, used only for index building.

4.2. Data Retrieval Strategies

SQL Server query optimizer uses various strategies to determine how the storage engine physically retrieves data from tables and indexes. Understanding these strategies, such as table scans, index seeks, and lookups, is crucial for diagnosing query performance and optimizing data access paths.

4.2.1. Unordered Clustered Index Scan or Table Scan

A table scan or an unordered clustered index scan involves a scan of all data pages that belong to the table.

-

Full table scans occur primarily in two cases: when all rows are required or when need only a subset of the rows but don’t have a good index to support the filter.

-

When the underlying table is a heap, the plan will show an operator called Table Scan.

SELECT * INTO dbo.Orders2 FROM dbo.Orders; ALTER TABLE dbo.Orders2 ADD CONSTRAINT PK_Orders2 PRIMARY KEY NONCLUSTERED (orderid); GO -- table scan SELECT orderid, custid, empid, shipperid, orderdate, filler FROM dbo.Orderss; -

When the underlying table is a B- tree, the plan will show an operator called Clustered Index Scan with an Ordered: False property.

-

The fact that the

Orderedproperty of the Clustered Index Scan operator indicatesFalsemeans that as far as the relational engine is concerned, the data does not need to be returned from the operator in key order. -

It is up to the storage engine to determine to employ allocation order scan or index order scan.

-- clustered index scan SELECT orderid, custid, empid, shipperid, orderdate, filler FROM dbo.Orders;

-

4.2.2. Unordered Covering Nonclustered Index Scan

An unordered covering nonclustered index scan is a query access method to retrieve all necessary data for a query solely from the leaf level of a nonclustered index, without accessing the base table’s data rows.

-

An unordered covering nonclustered index scan is similar to an unordered clustered index scan.

-- unordered covering nonclustered index scan SELECT orderid -- PRIMARY KEY NONCLUSTERED (orderid) FROM dbo.Orders;

4.2.3. Ordered Clustered Index Scan

An ordered clustered index scan is a full scan of the leaf level of the clustered index that guarantees that the data will be returned to the next operator in index order.

-- ordered clustered index scan

SELECT orderid, custid, empid, shipperid, orderdate, filler

FROM dbo.Orders

ORDER BY orderdate; -- CLUSTERED INDEX (orderdate)4.2.4. Ordered Covering Nonclustered Index Scan

An ordered covering nonclustered index scan is similar to an unordered covering nonclustered index scan, but retrieves data in the order of the index keys.

-- ordered covering nonclustered index scan

SELECT orderid, orderdate

FROM dbo.Orders

ORDER BY orderid; -- PRIMARY KEY NONCLUSTERED (orderid)4.2.5. Nonclustered Index Seek + Range Scan + Lookups

A nonclustered index seek + range scan + lookups access method is typically used for small-range queries or point queries using a nonclustered index that doesn’t cover the query.

-

A point query uses equality conditions (

=) to target specific values, potentially retrieving zero, one, or multiple rows, while a range query uses range operators (<,>,<=,>=,BETWEEN) to retrieve rows within a specified interval. -

While the index is capable of supporting the filter, lookups will be required to obtain the remaining columns from the respective data rows due to the index’s non-covering nature.

-

If the target table is a heap, the lookups will be RID Lookups, each costing one page read.

-- nonclustered index seek + range scan + lookups against a heap SELECT orderid, custid, empid, shipperid, orderdate, filler FROM dbo.Orders2 -- heap WHERE orderid <= 25; -- PRIMARY KEY NONCLUSTERED (orderid) -

If the underlying table is a B-tree, the lookups will be Key Lookups, each costing as many reads as the number of levels in the clustered index.

-- nonclustered index seek + range scan + lookups against a B-tree SELECT orderid, custid, empid, shipperid, orderdate, filler FROM dbo.Orders -- B-tree WHERE orderid <= 25; -- PRIMARY KEY NONCLUSTERED (orderid)

-

4.2.6. Unordered Nonclustered Index Scan + Lookups

An unordered nonclustered index scan + lookups access method is typically used by the optimizer when the following conditions are in place:

-

The query has a selective filter.

-

There’s a nonclustered index that contains the filtered column (or columns), but the index isn’t a covering one.

-

The filtered columns are not leading columns in the index key list.

-- unordered nonclustered index scan + lookups -- missing index SELECT orderid, custid, empid, shipperid, orderdate, filler FROM dbo.Orders WHERE custid = 'C0000000001'; -- NONCLUSTERED INDEX (shipperid, orderdate, custid); -

It performs a full unordered scan of the leaf level of the index, followed by lookups for qualifying keys, a strategy that becomes less efficient than a full table scan for less selective queries due to the lookup overhead.

4.2.7. Clustered Index Seek + Range Scan

A clustered index seek + range scan access method is typically used by the optimizer for range queries where the filter based on the first key column (or columns) of the clustered index.

-- clustered index seek + range scan

SELECT orderid, custid, empid, shipperid, orderdate

FROM dbo.Orders

WHERE orderdate = '20140212'; -- CLUSTERED INDEX (orderdate);4.2.8. Covering Nonclustered Index Seek + Range Scan

A covering nonclustered index seek + range scan access method is similar to the access method clustered index seek + range scan, only it uses a nonclustered covering index.

-- nonclustered index seek + range scan

SELECT orderid, shipperid, orderdate, custid

FROM dbo.Orders

WHERE shipperid = 'C'

AND orderdate >= '20140101'

AND orderdate < '20150101'; -- NONCLUSTERED INDEX (shipperid, orderdate, custid);4.3. Cardinality Estimates

In graphical execution plans, cardinality estimates are visible on arrows between operators where estimated plans display predictions only while actual plans show both estimated and actual row counts side-by-side, enabling identification of underestimation or overestimation that causes suboptimal operator choices.

Cost percentages indicate each operator’s relative expense within the query based on estimated costs where high percentages identify predicted bottlenecks, but severe underestimation can cause actual execution costs to vastly exceed estimated total query cost (such as Sort 43597 of 16 showing 272481% cost due to 2,725x underestimation causing tempdb spills), making actual row counts and execution time more reliable indicators than estimated cost percentages.

Arrow thickness is proportional to actual row count where thicker arrows indicate more rows flowing (e.g., millions) and thinner arrows indicate fewer rows (e.g., hundreds or thousands), providing a visual indicator to quickly identify high-volume data flows and validate estimated versus actual row counts.

Estimation errors exceeding 10x ratio (actual ÷ estimated) indicate critical cardinality problems requiring investigation, with underestimation causing insufficient memory grants and poor join algorithm choices (e.g., Nested Loops instead of Hash Match for large datasets), while overestimation wastes resources through excessive parallelism and oversized memory allocations.

For Nested Loops operators, estimated rows represent one execution while actual rows sum all executions, meaning Est=10 rows with Executions=100 and Actual=1000 rows indicates accuracy (10×100=1000).

4.3.1. Statistics Architecture

SQL Server relies on statistics to estimate cardinality and generate optimal execution plans, creating statistics automatically when indexes are built (via full scan) or when additional statistics are needed (via sampling), with each statistics object containing three components: header, density vector, and histogram.

The header stores metadata including total row count, sampled row count, last update timestamp, and histogram steps, providing the optimizer with high-level information about data volume and statistics freshness.

CREATE INDEX idx_nc_cid_eid ON dbo.Orders(custid, empid);

DBCC SHOW_STATISTICS(N'dbo.Orders', N'idx_nc_cid_eid');-- Header

Name Updated Rows Rows Sampled Steps Density Average key length

-------------- ------------------- -------- ------------ ----- ----------- ------------------

idx_nc_cid_eid Oct 21 2014 7:17PM 1000000 1000000 187 0.02002646 18

-- Filter Information

String Index Filter Expression Unfiltered Rows

------------ ----------------- ---------------

YES NULL 1000000

-- Density Vector

All density Average Length Columns

------------ -------------- ---------------------------

5E-05 11 custid

1.050216E-06 15 custid, empid

1.000042E-06 18 custid, empid, orderdate

-- Histogram (187 steps total, showing first 5 and last 5)

RANGE_HI_KEY RANGE_ROWS EQ_ROWS DISTINCT_RANGE_ROWS AVG_RANGE_ROWS

-------------- ---------- ------- ------------------- --------------

C0000000001 0 46 0 1

C0000000130 6437 37 128 50.28906

C0000000252 6089 37 121 50.32232

C0000000310 2918 67 57 51.19298

C0000000539 11538 62 228 50.60526

...

C0000019486 6307 66 126 50.05556

C0000019704 10934 30 217 50.3871

C0000019783 4045 66 78 51.85897

C0000019904 5906 68 120 49.21667

C0000020000 4733 46 95 49.82106The density vector provides selectivity estimates for column prefixes where each entry represents the average uniqueness (inverse of distinct count) for left-based column combinations, with lower density indicating higher selectivity and better filtering capability.

In the example above, custid has density 5E-05 (0.00005), indicating approximately 20,000 distinct customer IDs (1/0.00005), while the compound key (custid, empid) has density 1.050216E-06, indicating approximately 952,584 distinct combinations, demonstrating increasing selectivity with additional columns.

The histogram contains up to 200 steps (187 in this example) representing the distribution of values in the leading index column only, with each step defining a range boundary where RANGE_HI_KEY is the upper bound value, EQ_ROWS is the count of rows equal to that value, RANGE_ROWS is the count of rows between the previous boundary and current boundary (exclusive), DISTINCT_RANGE_ROWS is the count of distinct values in that range, and AVG_RANGE_ROWS equals RANGE_ROWS/DISTINCT_RANGE_ROWS.

The optimizer uses histogram data to estimate cardinality for predicates on the leading column where equality predicates like custid = 'C0000000539' directly use EQ_ROWS (62 rows), range predicates like custid > 'C0000000310' sum RANGE_ROWS and EQ_ROWS from qualifying steps, and predicates on non-leading columns fall back to density vector estimates, often resulting in less accurate estimations.

4.3.2. Common Estimation Problems

-

Statistics staleness occurs when modifications exceed the auto-update threshold (typically 20% of rows), causing the histogram to become outdated and produce estimates based on obsolete data distributions.

-- Table has 1,000,000 rows, auto-update threshold = 200,000 modifications -- Statistics show: ProductID 100 has 1,000 rows (0.1%) -- After 150,000 deletions of ProductID 100 (below threshold, no auto-update) SELECT * FROM Orders WHERE ProductID = 100; -- Optimizer estimates: 1,000 rows (based on stale statistics) -- Actual: 0 rows (all deleted, but statistics not updated) -

Ascending or descending key patterns introduce estimation errors when new values fall outside the histogram’s range boundaries, as the optimizer cannot accurately predict row counts for values it has never observed.

-- Statistics were last updated on 2024-12-31 -- Histogram's maximum value (last RANGE_HI_KEY) is '2024-12-31' -- Now it's 2025-01-15, and you've inserted 10,000 new orders INSERT INTO Orders (OrderDate, ...) VALUES ('2025-01-01', ...), ('2025-01-02', ...), ... ('2025-01-15', ...) -- Query for recent data SELECT * FROM Orders WHERE OrderDate >= '2025-01-10'; -- Result: Optimizer guesses ~30% of total rows (300,000 estimated) -- Actual: 5,000 rows (60x estimation error) -

Multi-column predicates without corresponding multi-column statistics force the optimizer to assume column independence, producing inaccurate estimates when columns are correlated (e.g.,

WHERE City = 'Seattle' AND State = 'WA'assumes independence when cities strongly correlate with states).-- Statistics exist only on City and State separately, not as a pair -- Reality: All Seattle rows have State='WA' (1,000 rows) SELECT * FROM Customers WHERE City = 'Seattle' AND State = 'WA'; -- Optimizer assumes independence: selectivity(City) × selectivity(State) -- Estimates: 0.01 × 0.02 × 1M = 200 rows | Actual: 1,000 rows (5x error) -

Parameter sniffing causes plan reuse problems where the first execution’s parameters produce a cached plan that performs poorly for subsequent executions with different parameter distributions, particularly when different parameter values have drastically different selectivity.

CREATE PROC GetOrders @Status VARCHAR(20) AS SELECT * FROM Orders WHERE Status = @Status; -- First execution: @Status = 'Pending' (100 rows) → generates Index Seek plan EXEC GetOrders 'Pending'; -- Fast (uses cached seek plan) -- Later execution: @Status = 'Shipped' (900,000 rows) → reuses same seek plan EXEC GetOrders 'Shipped'; -- Slow (seek+lookups instead of scan for 90% of table) -

Complex predicates involving

ORconditions, functions applied to columns (WHERE YEAR(OrderDate) = 2024), or non-SARGable expressions prevent histogram usage, forcing the optimizer to rely on less accurate density-based estimates.-- Non-SARGable: function applied to column prevents histogram lookup SELECT * FROM Orders WHERE YEAR(OrderDate) = 2024; -- Optimizer cannot use histogram on OrderDate, uses density estimate (~30%) -- SARGable alternative: uses histogram effectively SELECT * FROM Orders WHERE OrderDate >= '2024-01-01' AND OrderDate < '2025-01-01';

4.3.3. Troubleshooting and Resolution

Statistics maintenance requires proactive monitoring, timely updates with FULLSCAN, multi-column statistics for correlated columns, and dynamic thresholds via trace flag 2371.

-

Monitor statistics staleness using tiered thresholds (10%, 15%, 20%) to prioritize updates proactively, catching problems before they impact production rather than reacting after SQL Server’s 20% auto-update threshold is crossed.

-- Find statistics with tiered priority based on modification thresholds SELECT OBJECT_NAME(s.object_id) AS TableName, s.name AS StatsName, sp.last_updated, sp.rows, sp.rows_sampled, CAST(100.0 * sp.rows_sampled / NULLIF(sp.rows, 0) AS DECIMAL(10,2)) AS SamplePct, sp.modification_counter, CAST(100.0 * sp.modification_counter / NULLIF(sp.rows, 0) AS DECIMAL(10,2)) AS ModPct, CASE WHEN sp.modification_counter > sp.rows * 0.20 THEN 'Critical' WHEN sp.modification_counter > sp.rows * 0.15 THEN 'High' WHEN sp.modification_counter > sp.rows * 0.10 THEN 'Medium' ELSE 'Low' END AS Priority FROM sys.stats s CROSS APPLY sys.dm_db_stats_properties(s.object_id, s.stats_id) sp WHERE sp.modification_counter > sp.rows * 0.10 -- Proactive 10% threshold ORDER BY CASE WHEN sp.modification_counter > sp.rows * 0.20 THEN 1 WHEN sp.modification_counter > sp.rows * 0.15 THEN 2 WHEN sp.modification_counter > sp.rows * 0.10 THEN 3 ELSE 4 END, sp.modification_counter DESC;SQL Server triggers statistics updates at 20% modifications (default), but proactive monitoring at 10% provides early warning:

-

Critical (>20%) requires immediate attention as auto-update hasn’t triggered yet

-

High (15-20%) should be updated during next maintenance window

-

Medium (10-15%) can be scheduled for routine maintenance

-

Trace flag 2371 makes thresholds dynamic using

SQRT(1000 * row_count)(~5% at 1M rows, ~2% at 100M+ rows)

Auto-updates are query-triggered and synchronous by default (AUTO_UPDATE_STATISTICS_ASYNC OFF):

-

When a query hits stale statistics, SQL Server updates them before query execution, potentially blocking for seconds to minutes

-

With AUTO_UPDATE_STATISTICS_ASYNC ON, the first query uses stale statistics and returns immediately while updates run in background, avoiding blocking but risking suboptimal plans

-

Schedule manual updates during maintenance windows to avoid both blocking (sync mode) and stale-plan issues (async mode)

-- Check database-level statistics settings SELECT name AS DatabaseName, is_auto_create_stats_on AS AutoCreateStats, is_auto_update_stats_on AS AutoUpdateStats, is_auto_update_stats_async_on AS AutoUpdateStatsAsync FROM sys.databases WHERE name = DB_NAME(); -- Check if trace flag 2371 is enabled DBCC TRACESTATUS(2371);

-

-

Update statistics with

FULLSCANfor accurate distribution sampling and usePERSIST_SAMPLE_PERCENT = ONto preserve the sampling rate for future auto-updates, preventing SQL Server from downgrading to lower sampling percentages (typically 20-30%) on subsequent automatic updates.-- Full scan update with persistent sample rate UPDATE STATISTICS dbo.Orders idx_OrderDate WITH FULLSCAN, PERSIST_SAMPLE_PERCENT = ON; -- Update all statistics on a table UPDATE STATISTICS dbo.Orders WITH FULLSCAN; -

Create multi-column statistics for correlated predicates where the optimizer’s independence assumption produces inaccurate estimates, particularly for columns with strong correlations like City and State.

-- Create multi-column statistics for correlated columns CREATE STATISTICS Stats_City_State ON dbo.Customers(City, State) WITH FULLSCAN; -

Enable trace flag 2371 to use dynamic auto-update thresholds that scale with table size, replacing the fixed 20% threshold with a percentage that decreases as table size increases (e.g., 2% for tables with 100M+ rows).

-- Enable at instance level (requires restart or DBCC command) DBCC TRACEON(2371, -1); -- Add to startup parameters for persistence: -T2371

Query hints and plan guides provide tactical solutions for cardinality estimation problems.

-

Force recompilation to address parameter sniffing where cached plans become suboptimal for different parameter values.

-- Recompile on every execution SELECT * FROM Orders WHERE Status = @Status OPTION (RECOMPILE); -- Or at procedure level CREATE PROC GetOrders @Status VARCHAR(20) WITH RECOMPILE AS SELECT * FROM Orders WHERE Status = @Status; -

Optimize for specific parameter values when typical values are known and differ from the first execution’s parameters.

-- Optimize assuming 'Pending' is the most common value SELECT * FROM Orders WHERE Status = @Status OPTION (OPTIMIZE FOR (@Status = 'Pending')); -- Optimize for worst-case scenario (all possible values) OPTION (OPTIMIZE FOR (@Status UNKNOWN)); -

Switch cardinality estimation models when one produces consistently poor estimates for specific query patterns.

-- Force legacy CE (pre-SQL Server 2014) SELECT * FROM Orders WHERE ComplexFilter = @Value OPTION (USE HINT('FORCE_LEGACY_CARDINALITY_ESTIMATION')); -- Alternative using trace flag OPTION (QUERYTRACEON 9481); -

Override memory grants and parallelism when CE causes insufficient memory grants (tempdb spills) or excessive parallelism.

-- Prevent tempdb spills from underestimated memory grants SELECT * FROM LargeTable WHERE ComplexFilter = @Value OPTION (MIN_GRANT_PERCENT = 15); -- Limit parallelism when CE causes excessive core usage OPTION (MAXDOP 4); -- Combine multiple hints OPTION (RECOMPILE, MIN_GRANT_PERCENT = 10, MAXDOP 4); -

Apply hints via plan guides to avoid modifying application code in production scenarios where source code changes require lengthy deployment processes.

Query hints are tactical workarounds, not long-term solutions:

-

Use for production emergencies when immediate relief is needed without code deployment

-

Apply as temporary fixes while investigating root causes like stale statistics, missing indexes, or non-SARGable predicates

-

Consider plan guides for applying hints to unchanged application code

Trade-offs and cautions to consider:

-

Hints mask root causes rather than fixing underlying CE problems

-

OPTION (RECOMPILE)adds CPU overhead for recompilation on every execution -

Hints can become obsolete as data patterns change, requiring ongoing maintenance

-

Overrides optimizer decisions that may actually be better as data volume grows

-

Makes queries harder to maintain and understand for other developers

Best practice is using hints as temporary workarounds while fixing the underlying problems (update statistics, create proper indexes, rewrite non-SARGable predicates).

-

4.3.4. Cardinality Estimation Models

SQL Server’s CE evolved across versions with different optimization strategies:

-

Legacy CE (compatibility level 70-110) uses simpler assumptions

-

Modern CE (120+) employs improved correlation detection and exponential backoff for multiple predicates

-

CE 130 adds further refinements

The choice between models depends on workload characteristics where legacy CE may perform better for some queries while modern CE generally handles complex predicates more accurately.

4.3.5. Intelligent Query Processing

IQP features (SQL Server 2017+, compatibility level 140+) address CE limitations through runtime adaptation:

-

Adaptive joins switch join algorithms based on actual row counts during execution

-

Memory grant feedback adjusts grants between executions to prevent tempdb spills or memory waste

-

Table variable deferred compilation fixes the notorious 1-row estimate problem by deferring compilation until actual row counts are known

-

Scalar UDF inlining eliminates row-by-row function execution overhead

-

Parameter sensitivity plan optimization (SQL 2022, compatibility level 160) creates multiple cached plans for different parameter value ranges to eliminate parameter sniffing issues without requiring

OPTION (RECOMPILE)hints

4.4. Join Algorithms

The SQL Server query optimizer employs several physical join algorithms to combine rows from two input tables. The choice of algorithm is cost-based, meaning the optimizer selects the one with the lowest estimated execution cost, factoring in input size, index availability, and data sortedness.

4.4.1. Nested Loops Join

A Nested Loops join is a physical join operator that reads one row from the first (outer) input and, for that row, finds all matching rows in the second (inner) input. This process is repeated for every row in the outer input.

It is the most efficient algorithm under the following conditions:

-

The outer input table is small.

-

The inner input table is large but has a covering index on the join column.

This combination allows the optimizer to perform an efficient index seek on the inner table for each row from the outer table. While its low overhead makes it ideal for joining small data sets and for queries with highly selective predicates, its performance degrades rapidly as the number of rows in the outer input increases.

4.4.2. Hash Match Join (Hash Join)

A Hash Match join (or hash join) is highly effective for joining large, unsorted datasets and is primarily used for equi-joins. It processes data in two distinct phases:

-

Build Phase: The optimizer designates the smaller of the two inputs as the

build input. It reads these rows and constructs an in-memory hash table based on the join key. -

Probe Phase: It then reads the larger input, known as the

probe input, one row at a time. For each probe row, it computes a hash on the join key and looks for matches in the hash table created during the build phase.

The optimizer typically chooses a hash join when the inputs are large and not sorted, and when no useful indexes are available. The algorithm’s performance is heavily dependent on memory; if the build input is too large to fit in the available memory, the hash table will spill to tempdb, causing significant performance degradation.

4.4.3. Merge Join

A Merge join provides a highly efficient method for joining two inputs, provided both are already sorted on the join key. The algorithm simultaneously reads from both sorted inputs and merges the matching rows, similar to merging two sorted lists.

A merge join is optimal under these circumstances:

-

Both inputs are large.

-

Both inputs are sorted on the join column, often the result of a clustered index scan or a prior sort operation in the execution plan.

If one or both inputs are not sorted, the optimizer can add an explicit Sort operator, but this adds to the overall query cost. Consequently, a merge join is most effective when existing indexes or data structures provide pre-sorted data, making it a low-cost alternative to a hash join for large datasets. It is primarily used for equi-joins but can handle some non-equi-join scenarios.

4.4.4. Algorithm Selection

The query optimizer selects a join algorithm by estimating the I/O, CPU, and memory costs of each available strategy. This cost-based decision is influenced by several key factors, which combine to produce predictable performance patterns.

Selection Criteria:

-

The estimated number of rows (cardinality) in each input is the primary driver, with small outer inputs favoring Nested Loops while large inputs make Hash and Merge joins more attractive.

-

A useful index on the join key of the inner table makes Nested Loops highly efficient through index seeks, while clustered indexes that provide sorted data make Merge joins a strong candidate.

-

Pre-sorted data on the join key makes Merge joins the cheapest option by avoiding explicit sort operations.

-

Hash and Merge joins are designed for equi-joins (

=), whereas Nested Loops are more versatile and can handle non-equi-joins (e.g.,>,<,BETWEEN). -

Hash joins require sufficient memory for the build phase, as insufficient memory causes spills to

tempdbthat severely degrade performance.

Common Performance Patterns:

-

When the outer table is small and the inner table has a useful index, Nested Loops performs fast index seeks with minimal overhead.

-

For large, unsorted datasets, Hash joins are typically most efficient, assuming adequate memory is available to avoid spills.

-

When both inputs are large and already sorted, Merge joins provide optimal performance by avoiding both hashing overhead and repeated seeks.

-

When the optimizer’s cardinality estimates are inaccurate, it may choose a suboptimal plan (e.g., Nested Loops for large inputs), but modern SQL Server versions use Adaptive Joins to dynamically switch between Nested Loops and Hash joins during execution, mitigating this risk.

4.5. Tied Rows and Sorting

When an ORDER BY clause is used, SQL Server guarantees the result set is sorted according to the specified columns. However, this guarantee does not extend to rows with the same value in the ordering columns—known as tied rows. The order in which tied rows are returned is not guaranteed and can vary between query executions, leading to an unstable sort.

This instability occurs because the execution plan only guarantees the explicitly requested order. For tied rows, the database returns them in whatever order is most convenient for that specific execution, which can lead to unexpected behavior, particularly in pagination scenarios.

For instance, if a user is paging through a customer’s order history, an unstable sort could cause the same order to appear on multiple pages or for some orders to be skipped entirely, because the order of that customer’s orders shifted between page loads.

To ensure a consistent and predictable sort, the ORDER BY clause must uniquely identify every row, which can be achieved by adding a tie-breaker—a column or set of columns guaranteed to be unique, such as the table’s primary key.

For example, consider sorting orders by customer. A single customer can have multiple orders, creating tied rows.

-- Unstable sort: Order of rows for the same `custid` is not guaranteed.

SELECT custid, orderid, orderdate

FROM Sales.Orders

ORDER BY custid;By adding the unique orderid column as a tie-breaker, the sort becomes deterministic. A secondary sort by orderdate is also a good practice.

-- Stable sort: orderdate and orderid act as tie-breakers.

SELECT custid, orderid, orderdate

FROM Sales.Orders

ORDER BY custid, orderdate DESC, orderid DESC;This forces the optimizer to perform a secondary sort on orderdate and then orderid for any tied rows, resulting in a deterministic, or stable, sort that is consistent with every execution.

4.6. Execution Plans Analysis

In practice, the actual plan is preferred when the issue can be reproduced, Live Query Statistics are preferred for long-running queries, and estimated plans are preferred when execution is risky or not possible.

-

Actual execution plans show what happened during execution, including actual row counts, warnings, and runtime behavior.

-