- 1. Language AI

- 2. Tokens and Embeddings

- 3. Large Language Models

- 4. Text Classification

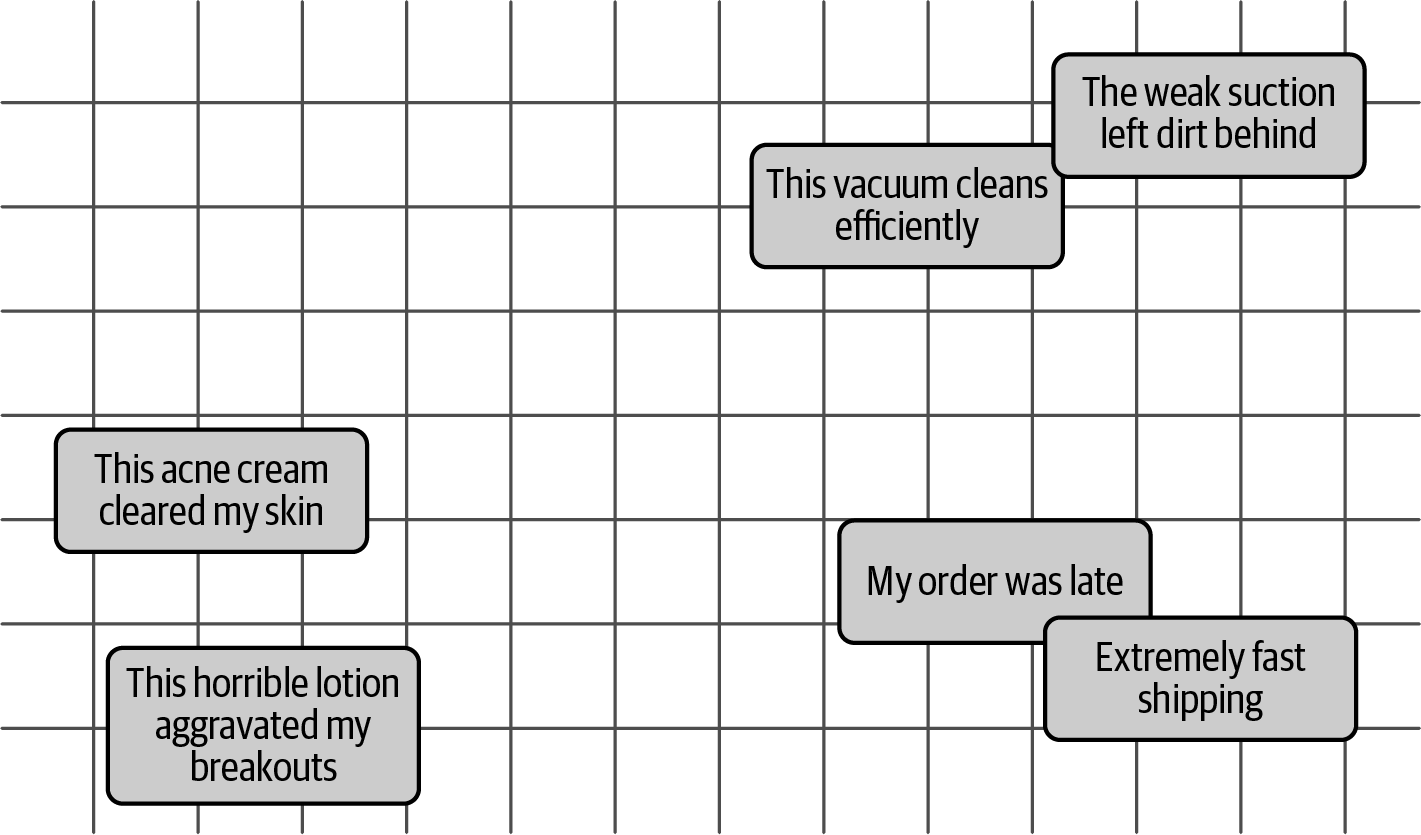

- 5. Text Clustering and Topic Modeling

- 6. Prompt Engineering

- 7. Advanced Text Generation Techniques and Tools

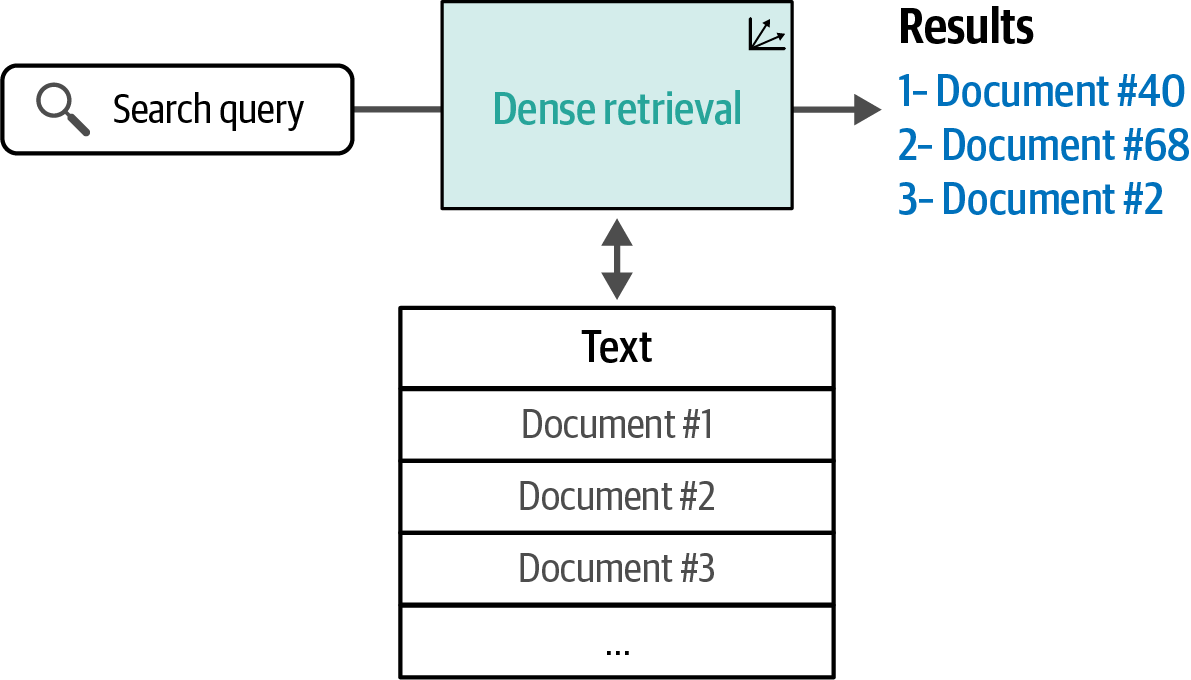

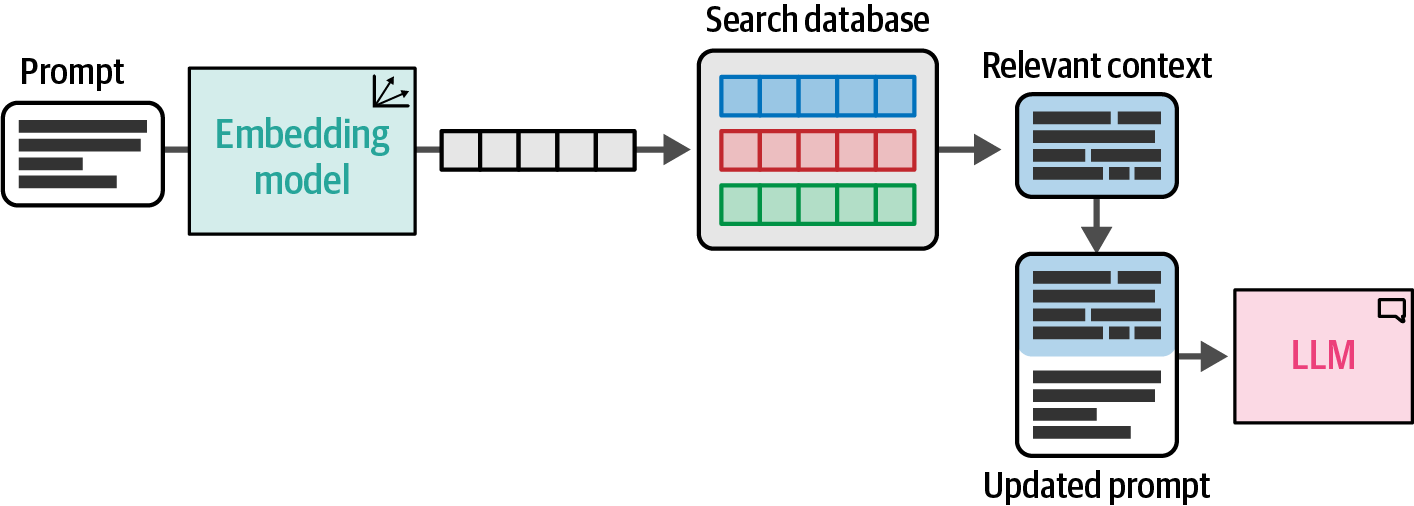

- 8. Semantic Search and Retrieval-Augmented Generation

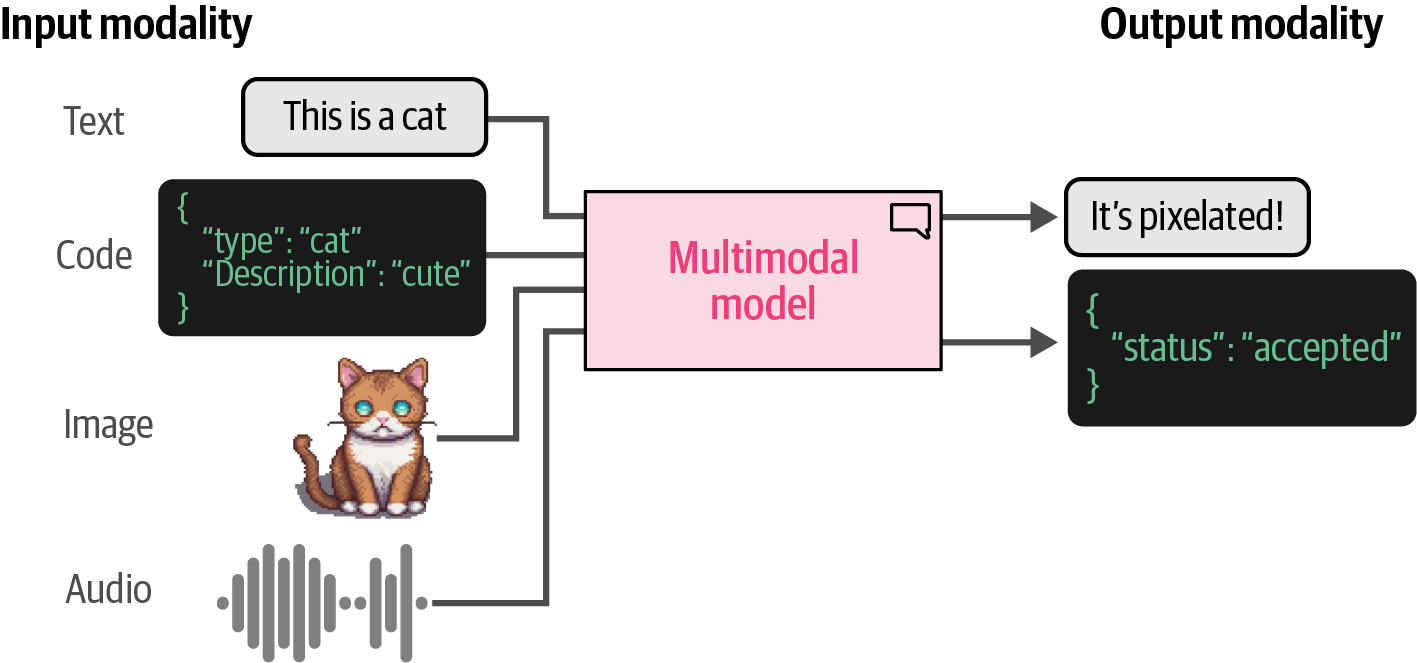

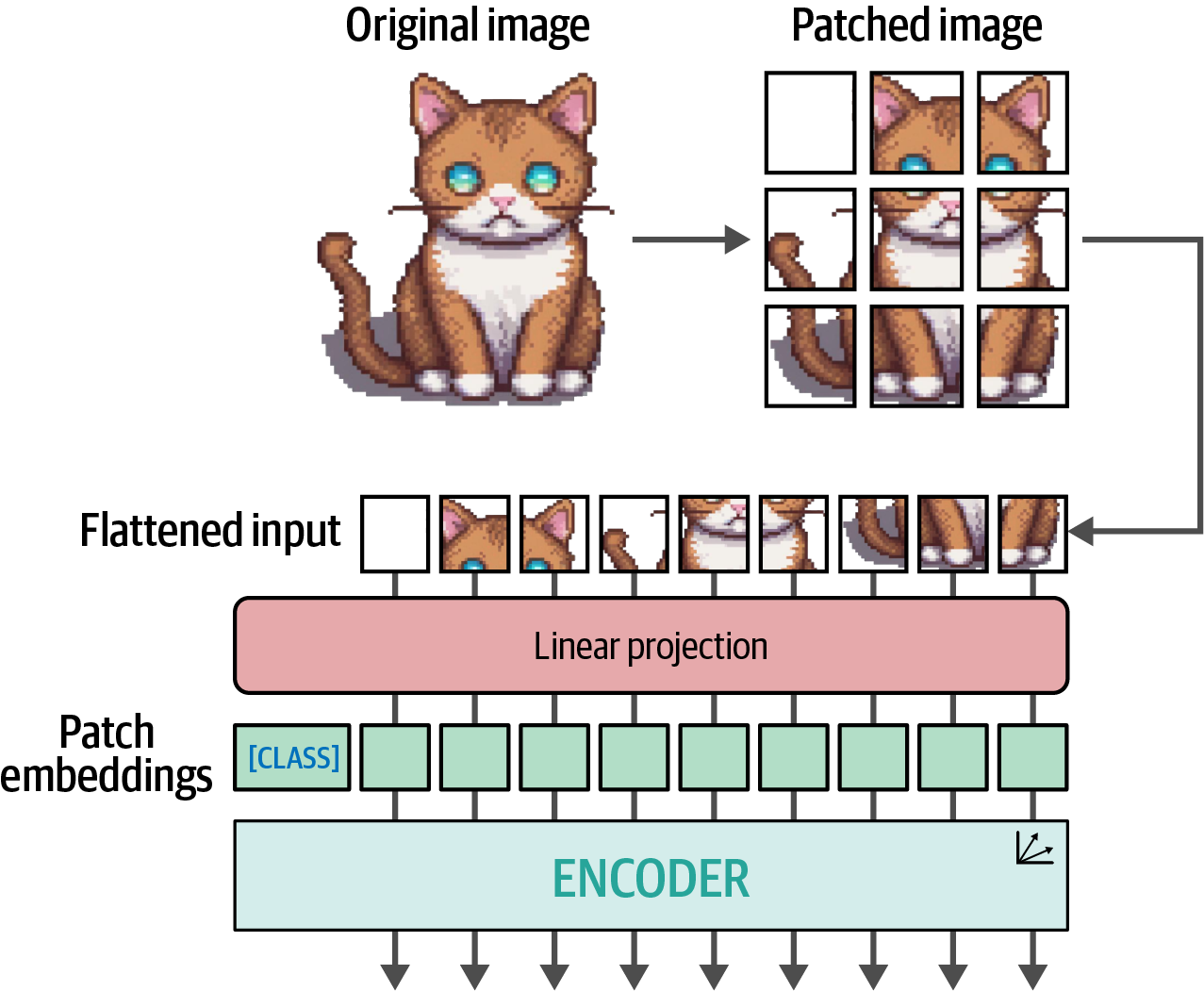

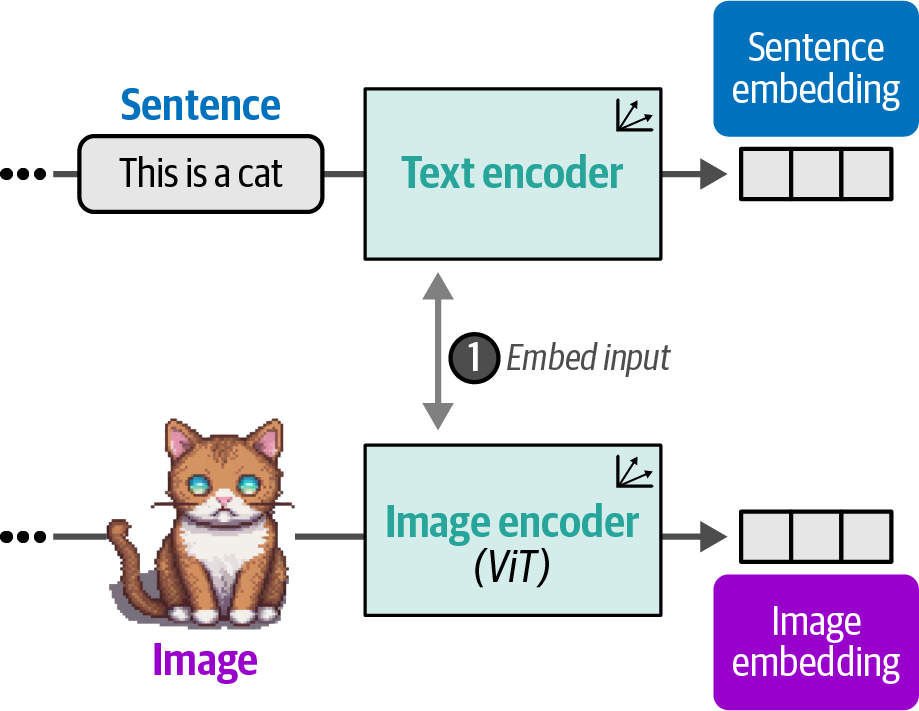

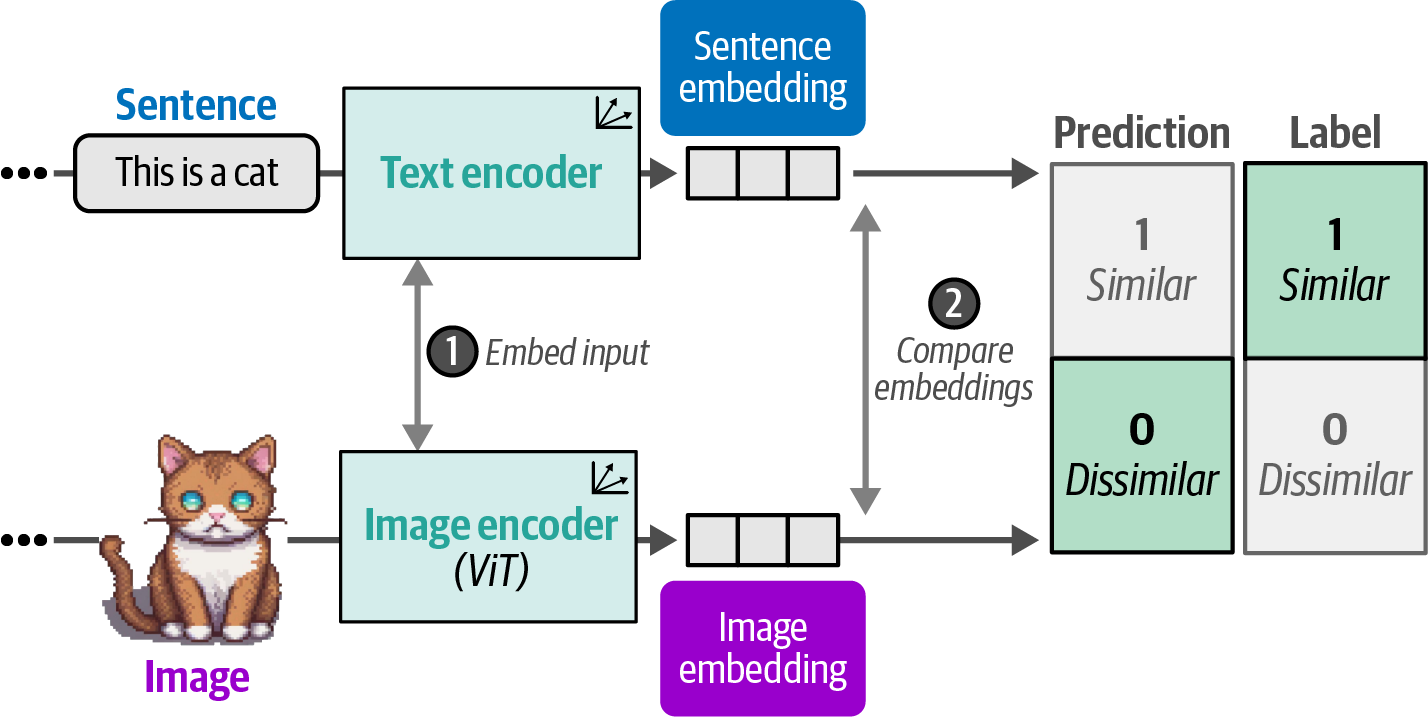

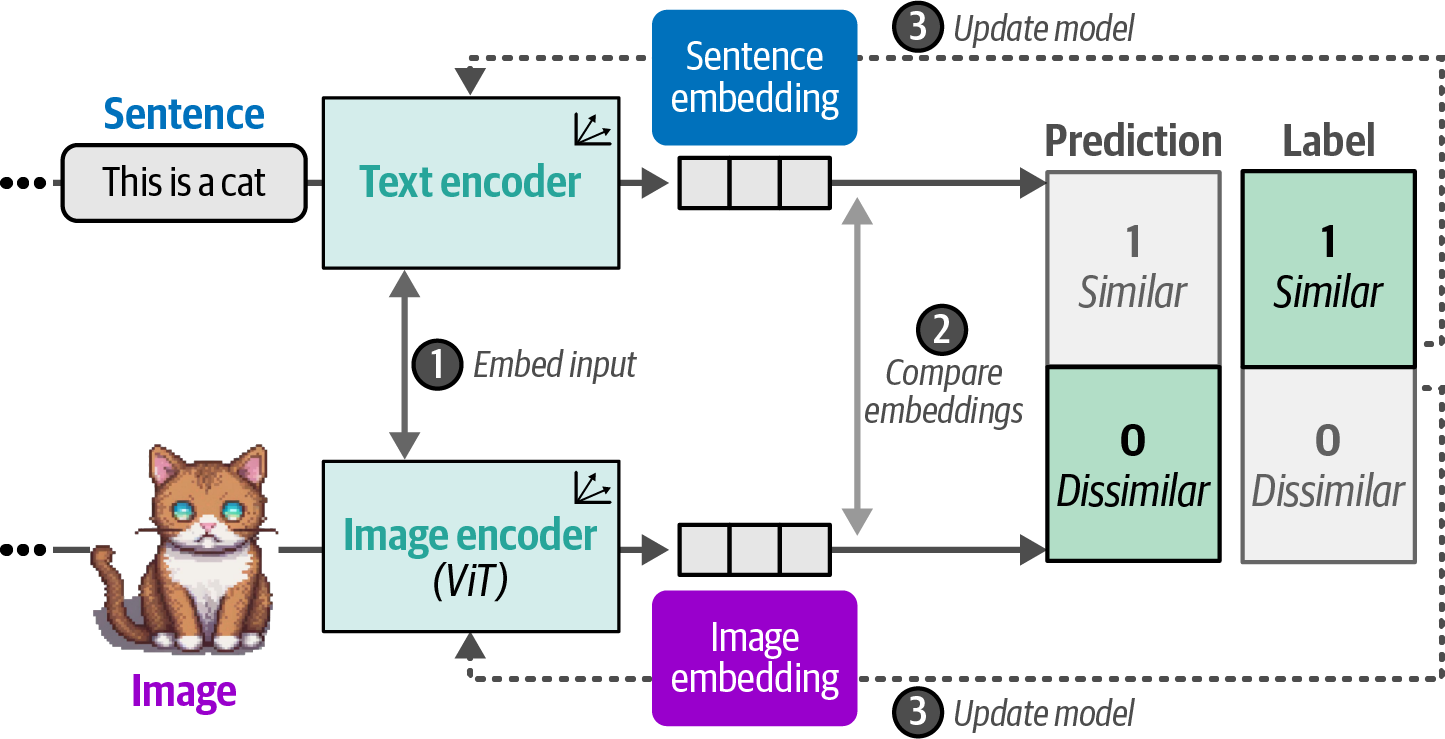

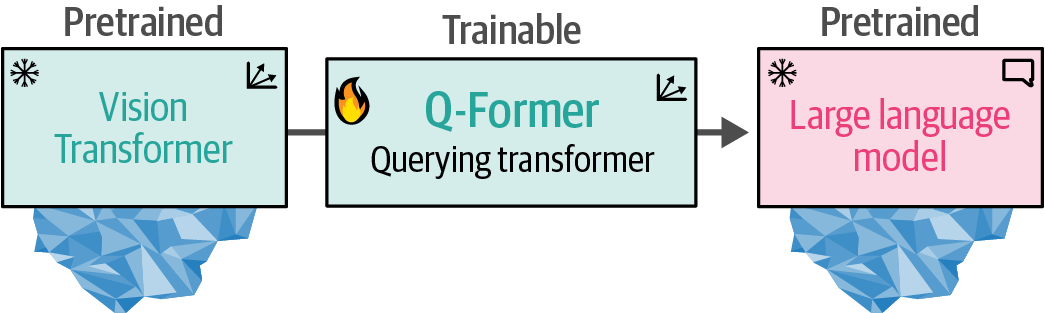

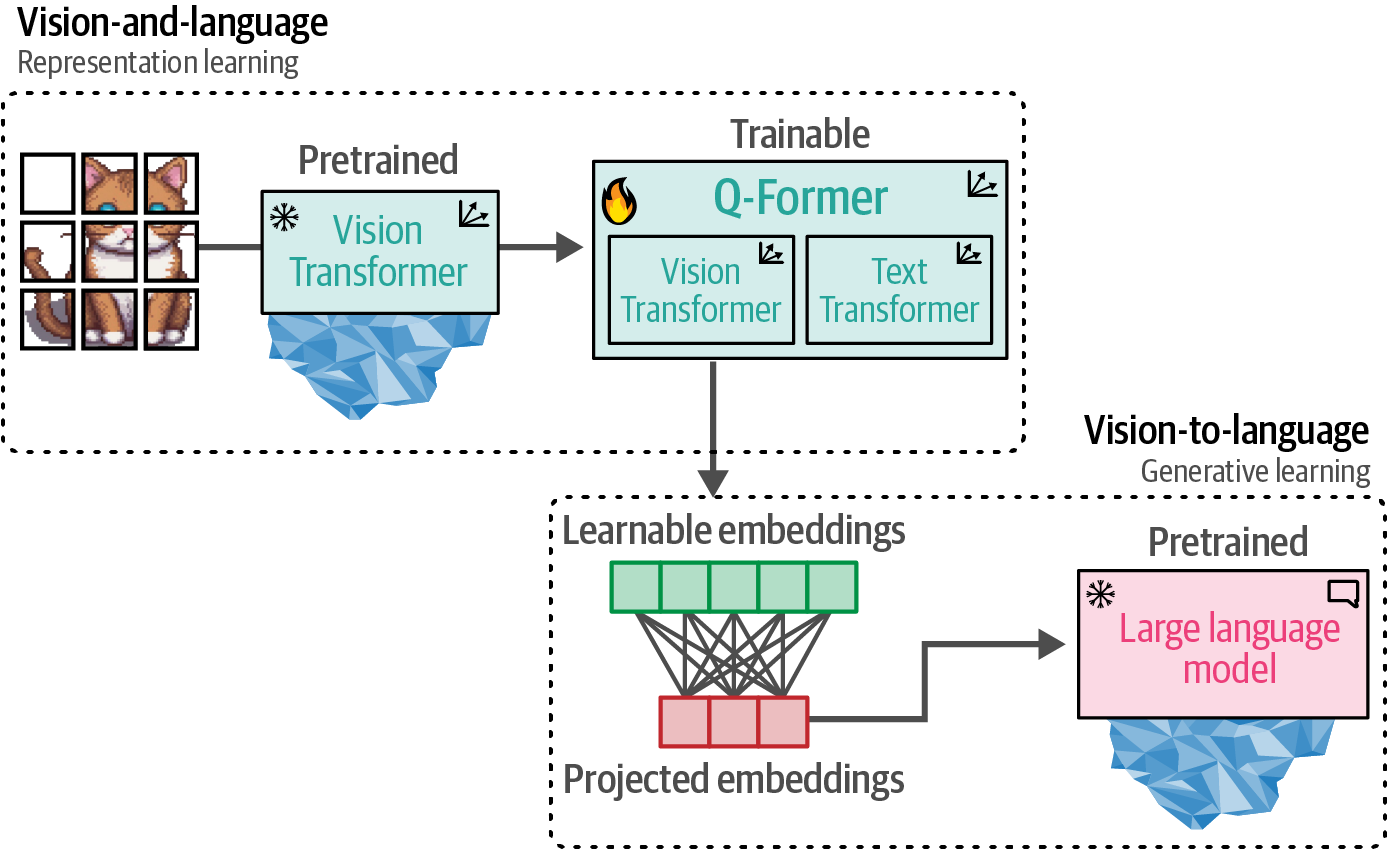

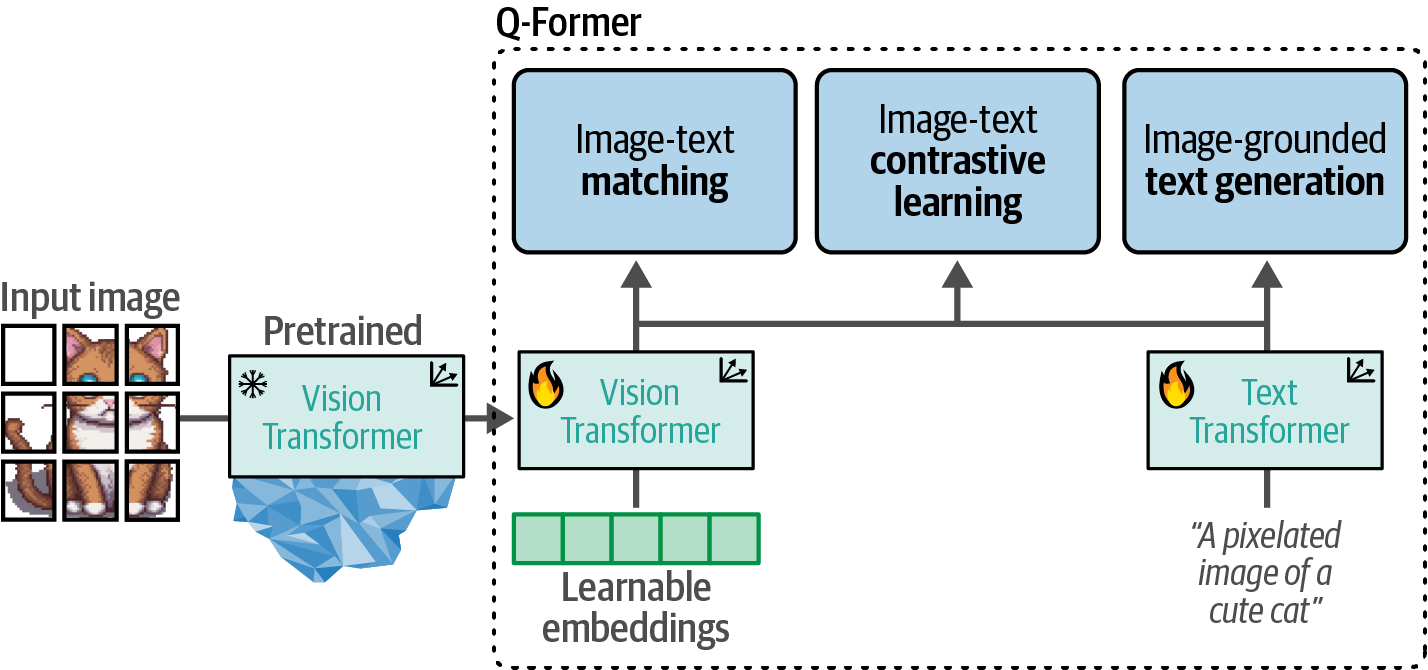

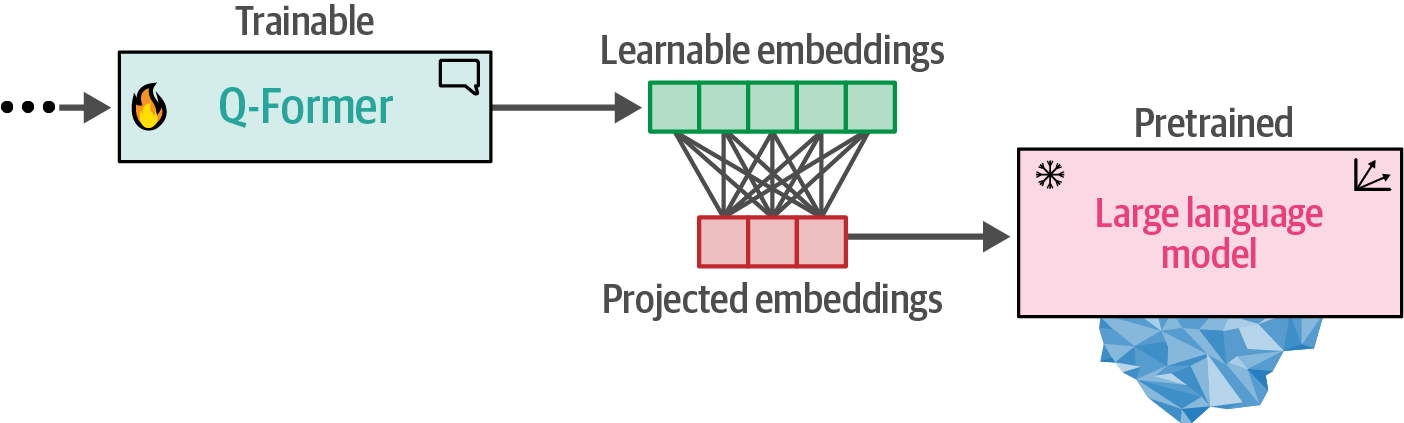

- 9. Multimodal Large Language Models

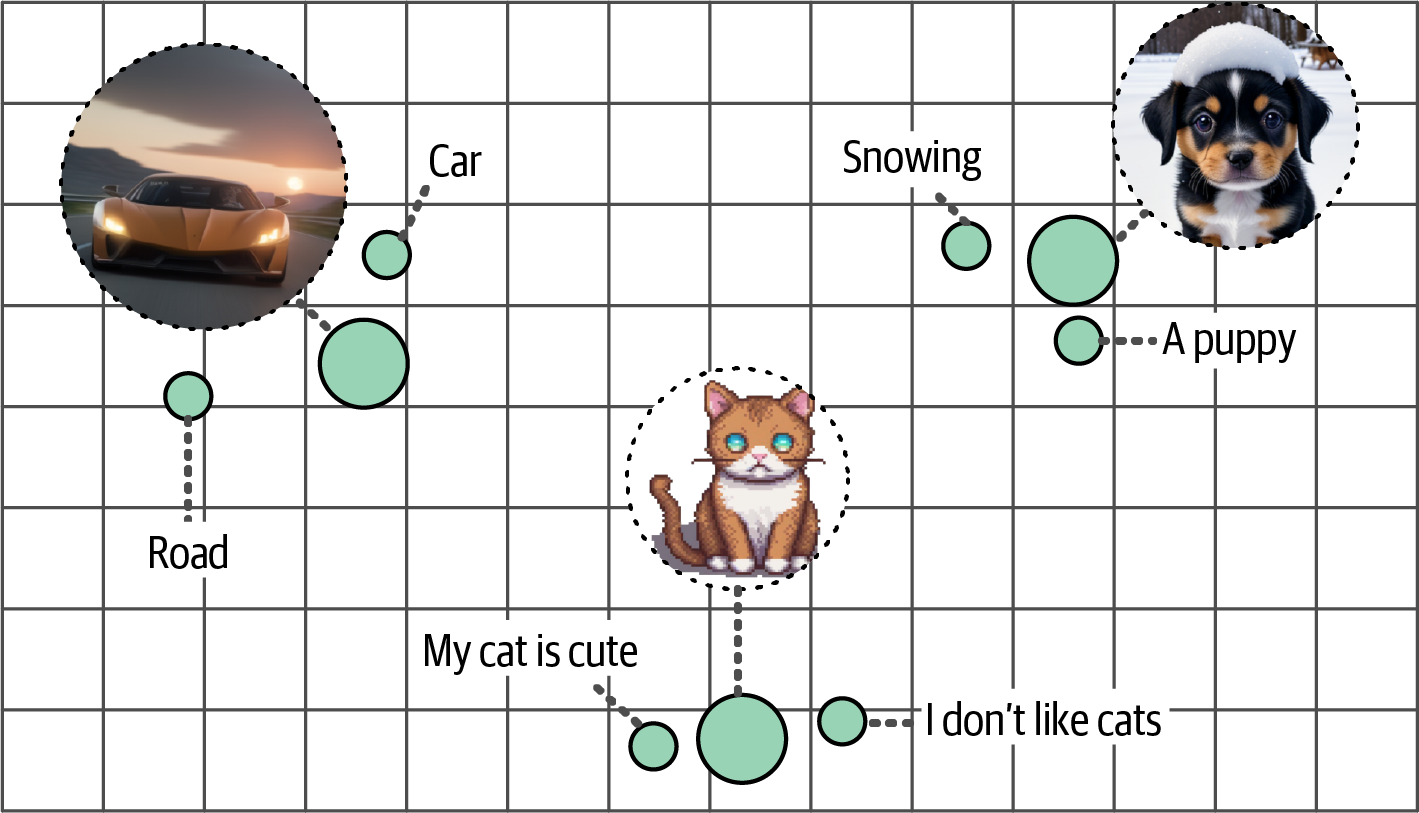

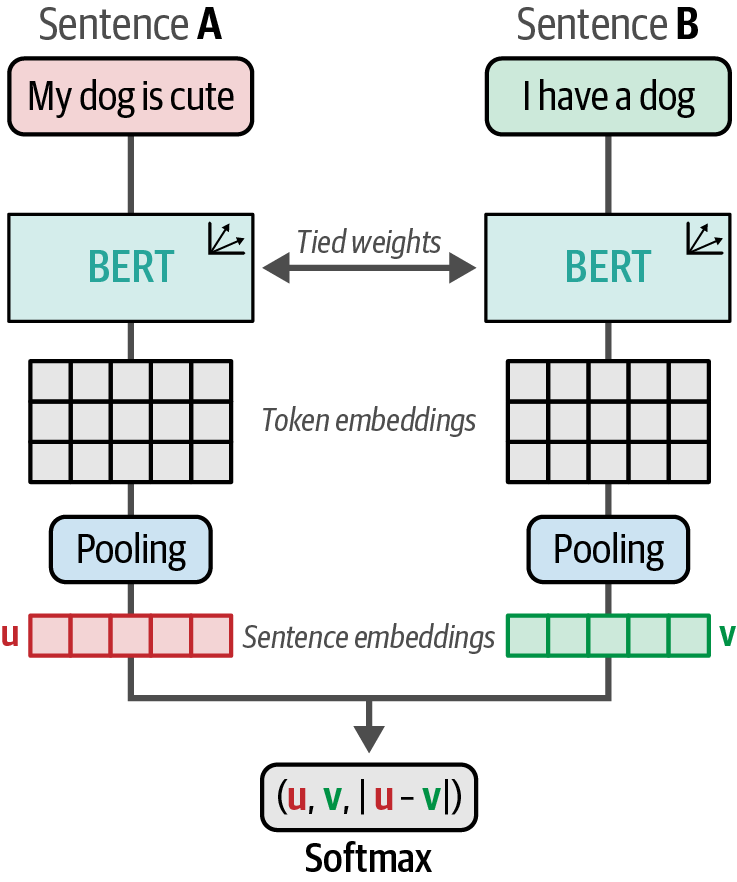

- 10. Creating and Fine-Tuning Text Embedding Models

- References

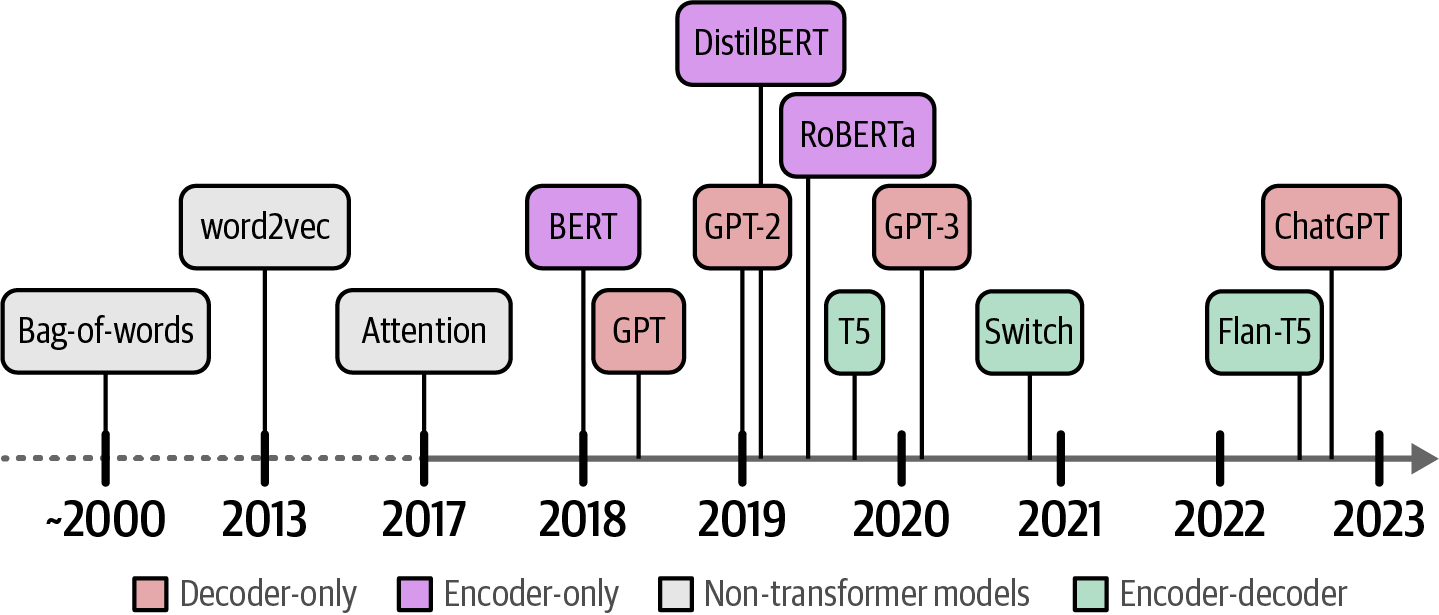

1. Language AI

|

Google Colab offers free, cloud-based GPU and TPU access for accelerated computation, subject to usage limits, and requires changing the runtime type to GPU to enable it. |

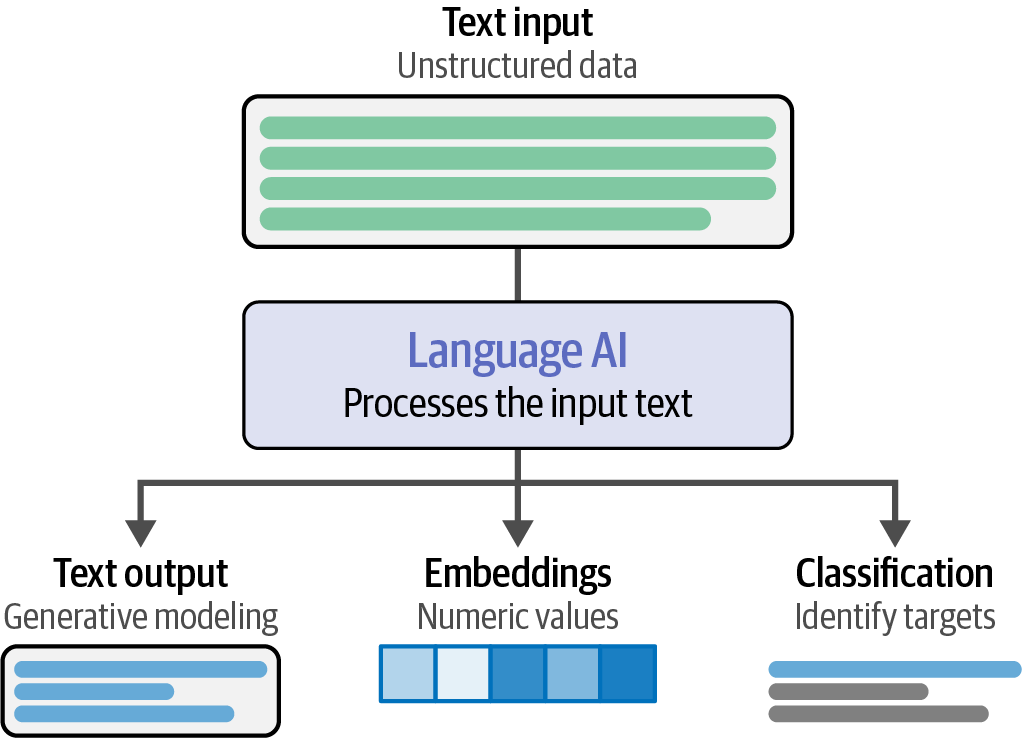

Artificial Intelligence (AI) is the science and engineering of creating intelligent machines, particularly intelligent computer programs, that can perform tasks similar to human intelligence.

Language AI is a subfield of AI focused on developing technologies that can understand, process, and generate human language, which is often used interchangeably with Natural Language Processing (NLP).

-

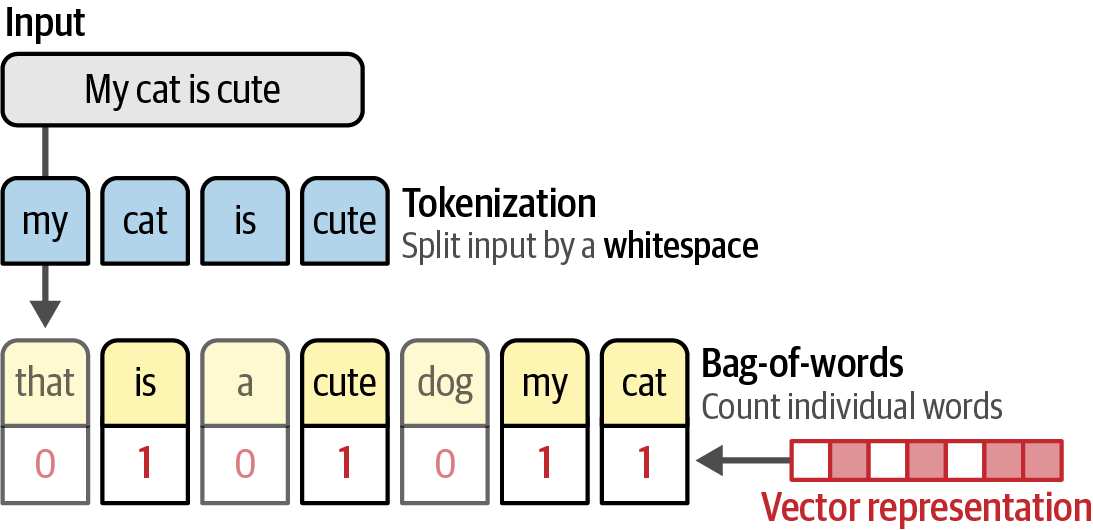

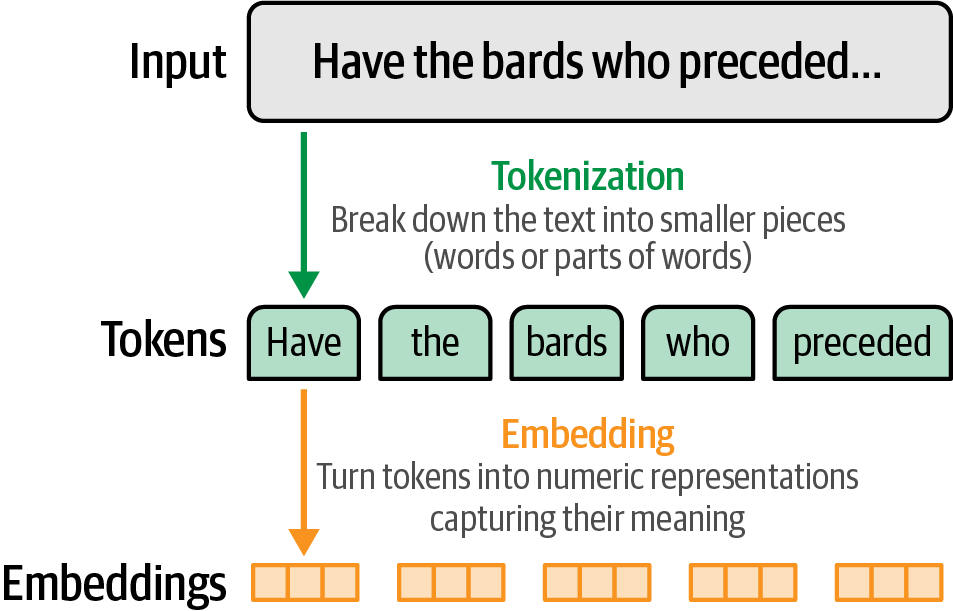

The Bag-of-Words, a representation model, converts text to numerical vectors by tokenizing it—splitting sentences into individual words or subwords (tokens)—creating a vocabulary, and counting token occurrences to form a vector representation (the 'bag of words').

Figure 3. A bag-of-words is created by counting individual words. These values are referred to as vector representations.

Figure 3. A bag-of-words is created by counting individual words. These values are referred to as vector representations. -

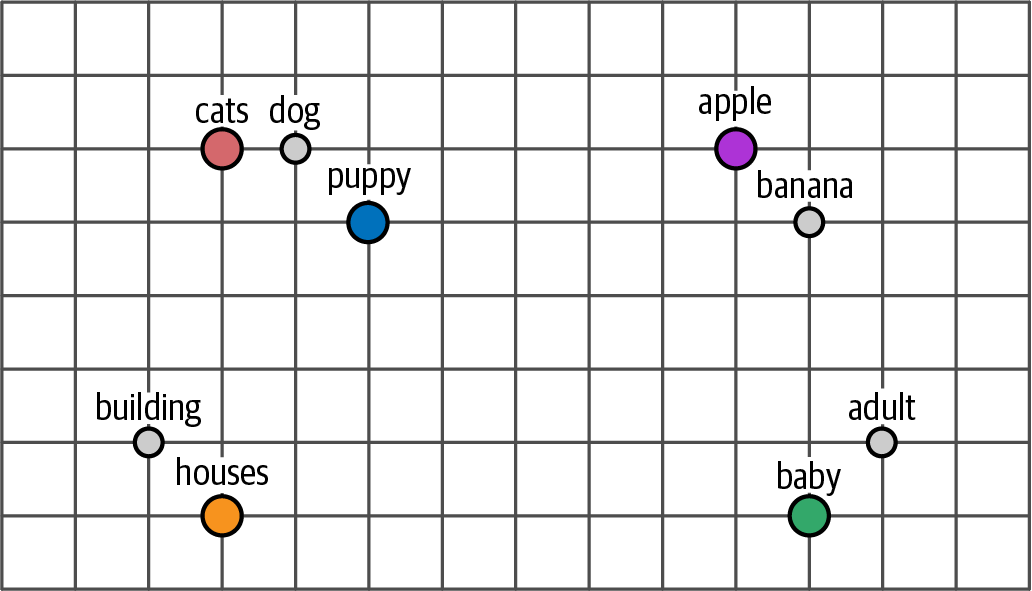

Word2vec introduced dense vector embeddings, a significant improvement over Bag-of-Words, by using neural networks to capture the semantic meaning of words based on their context within large datasets, allowing for the measurement of semantic similarity.

Figure 4. Embeddings of words that are similar will be close to each other in dimensional space.

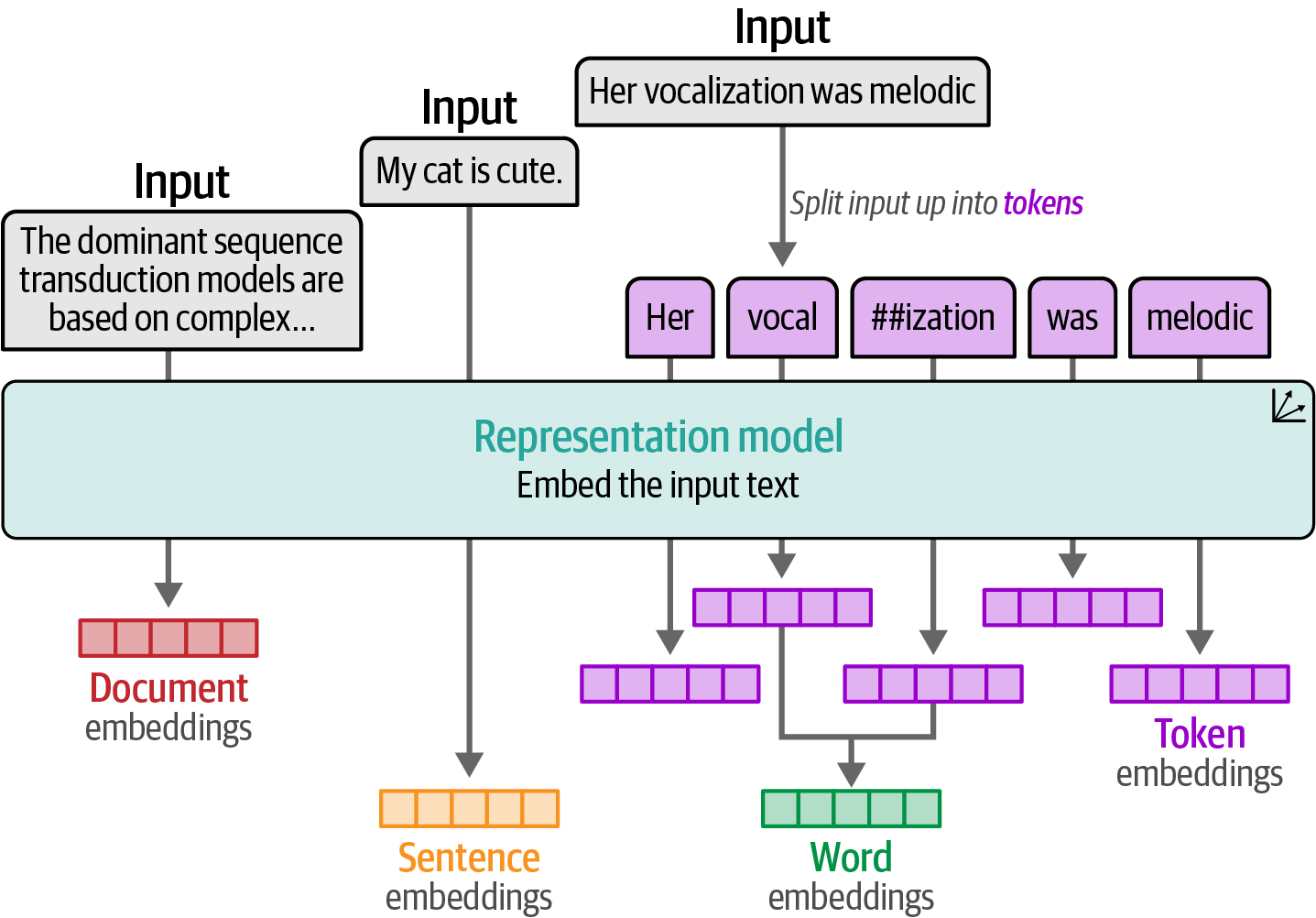

Figure 4. Embeddings of words that are similar will be close to each other in dimensional space. Figure 5. Embeddings can be created for different types of input.

Figure 5. Embeddings can be created for different types of input. -

Attention-based Transformer models, replacing RNNs which struggled with long sentences, enabled parallel processing and context-aware language representation by using stacked encoders and decoders to focus on relevant input, revolutionizing language AI.

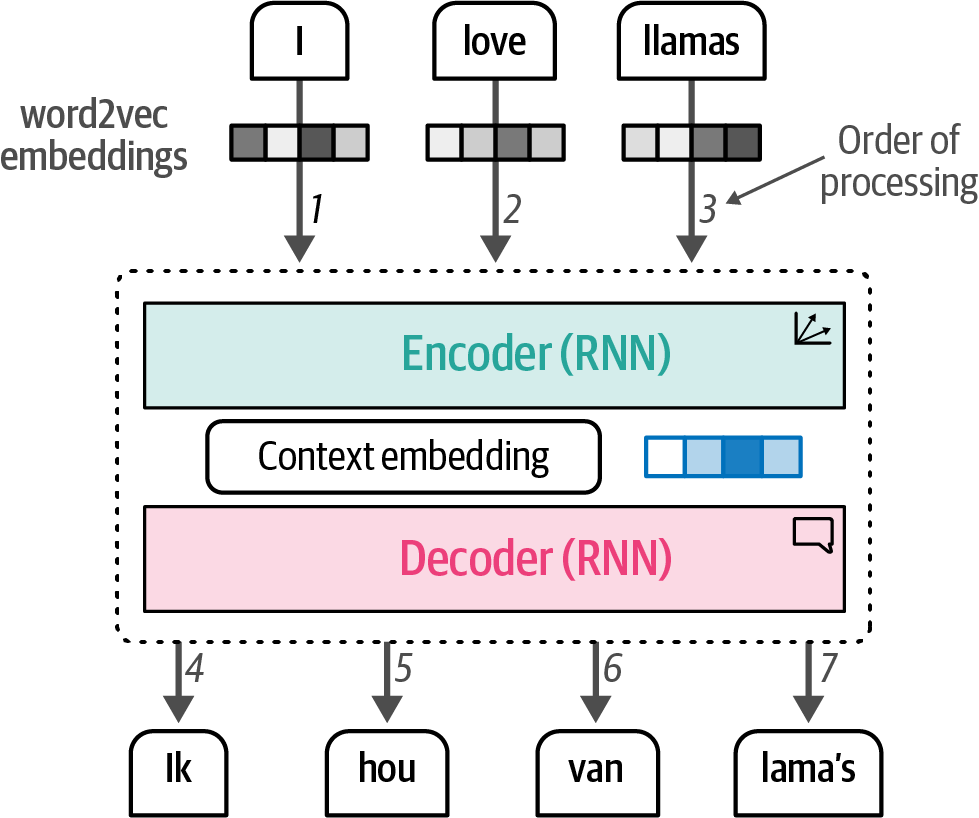

Figure 6. Using word2vec embeddings, a context embedding is generated that represents the entire sequence.

Figure 6. Using word2vec embeddings, a context embedding is generated that represents the entire sequence. -

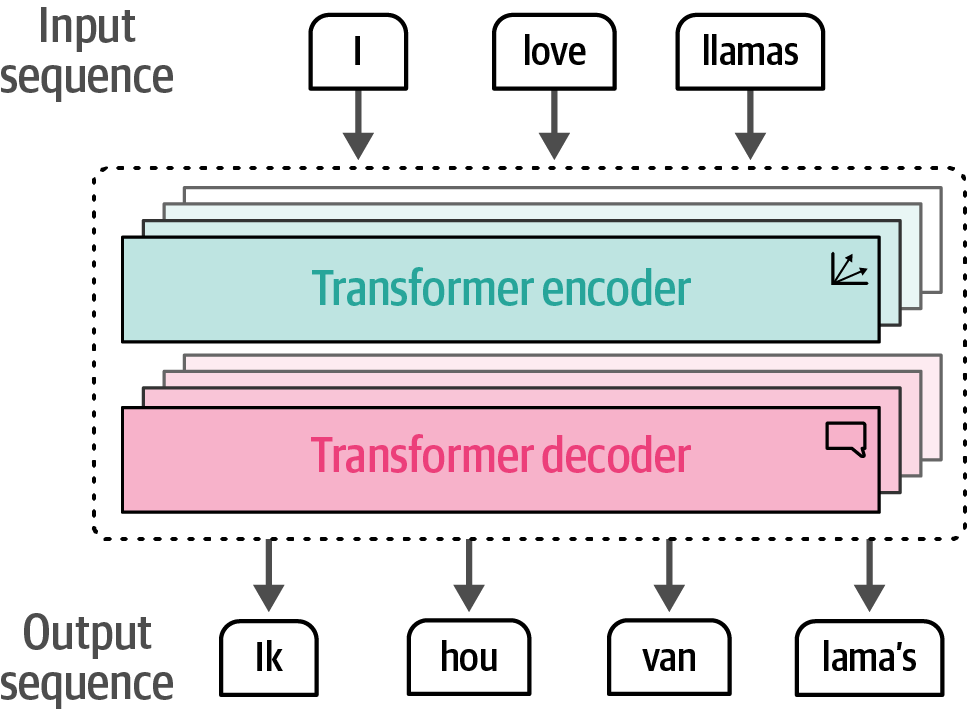

The Transformer is a combination of stacked encoder and decoder blocks where the input flows through each encoder and decoder.

Figure 7. The Transformer is a combination of stacked encoder and decoder blocks where the input flows through each encoder and decoder.

Figure 7. The Transformer is a combination of stacked encoder and decoder blocks where the input flows through each encoder and decoder. Figure 8. The encoder block revolves around self-attention to generate intermediate representations.

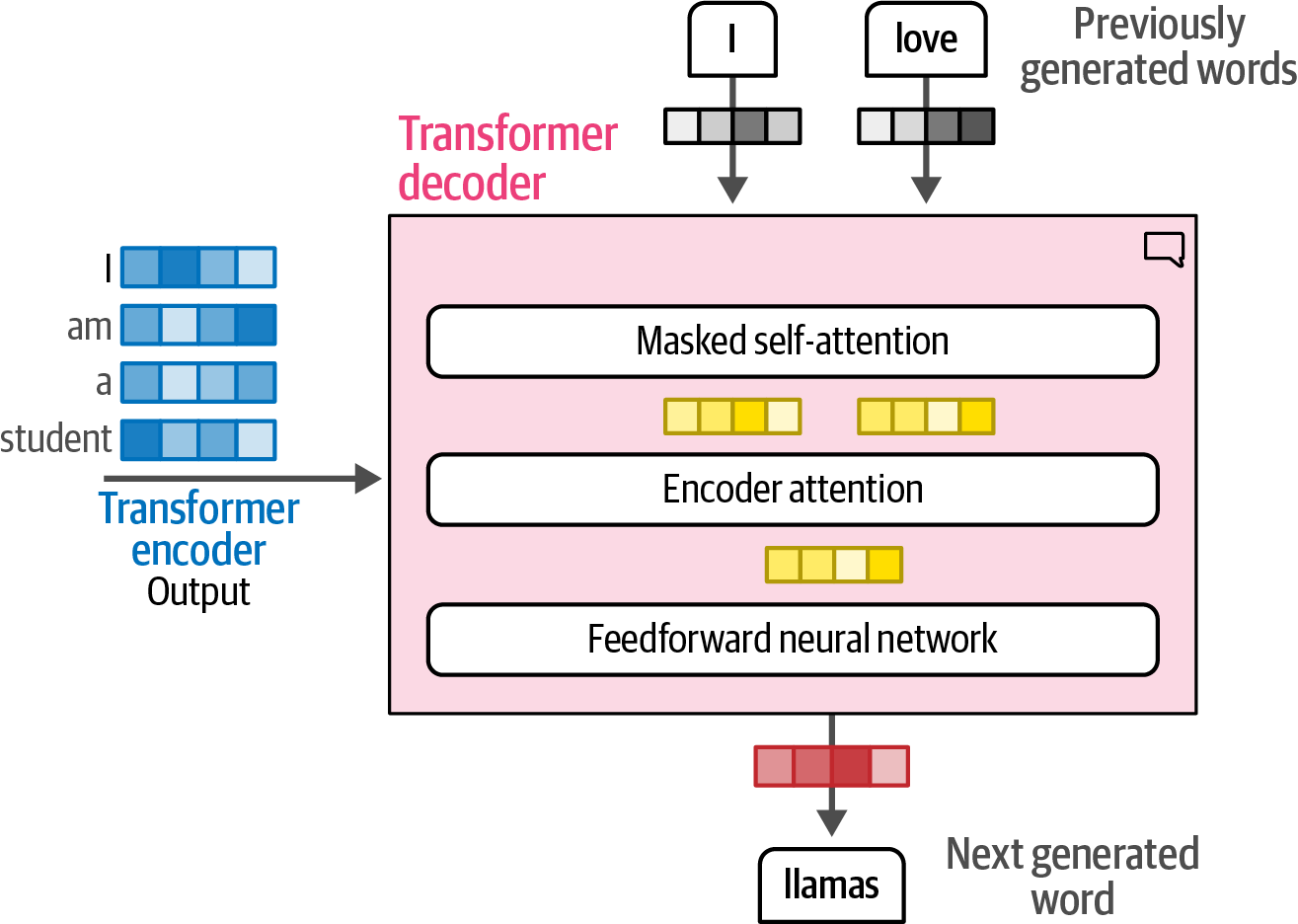

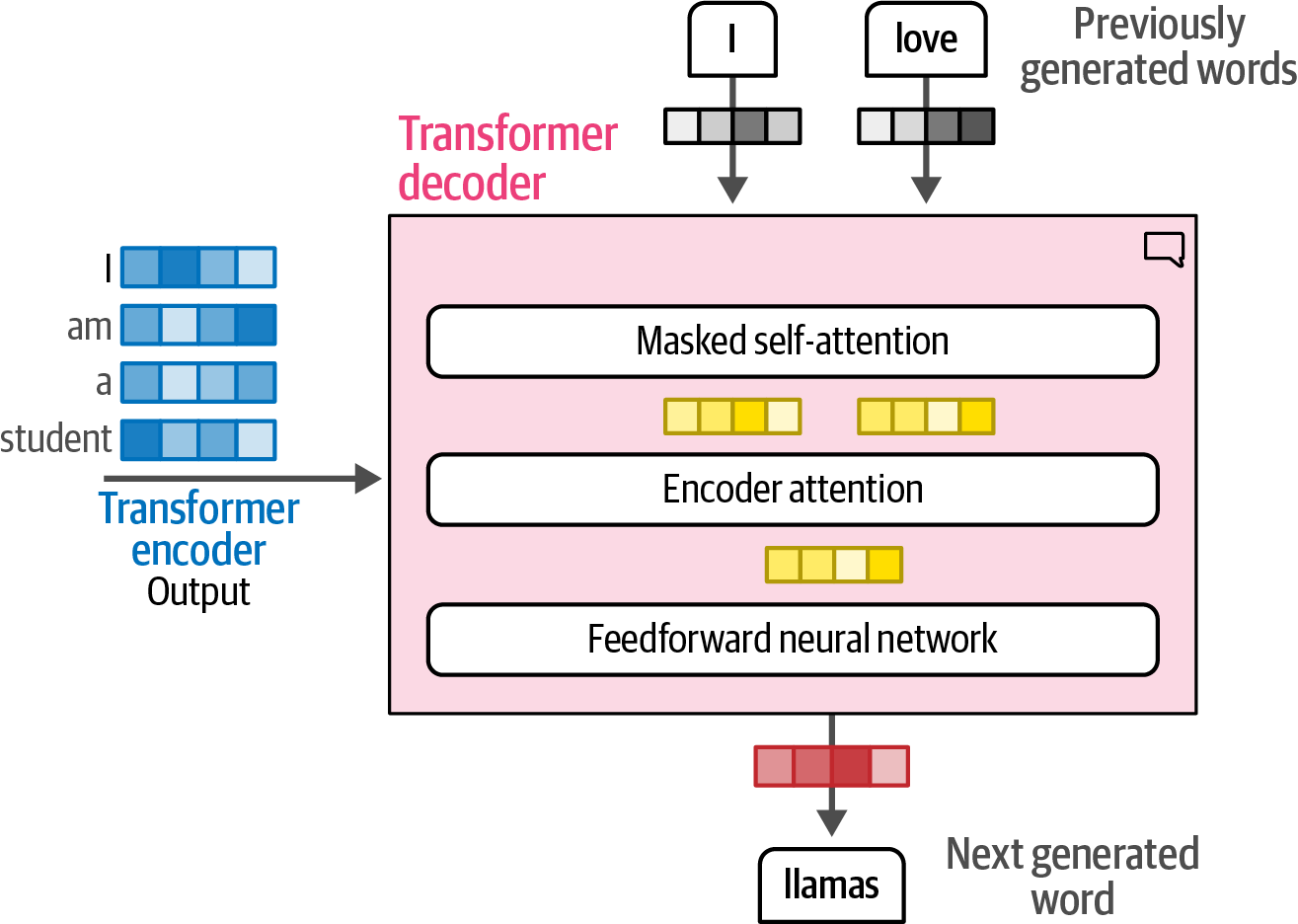

Figure 8. The encoder block revolves around self-attention to generate intermediate representations. Figure 9. The decoder has an additional attention layer that attends to the output of the encoder.

Figure 9. The decoder has an additional attention layer that attends to the output of the encoder. -

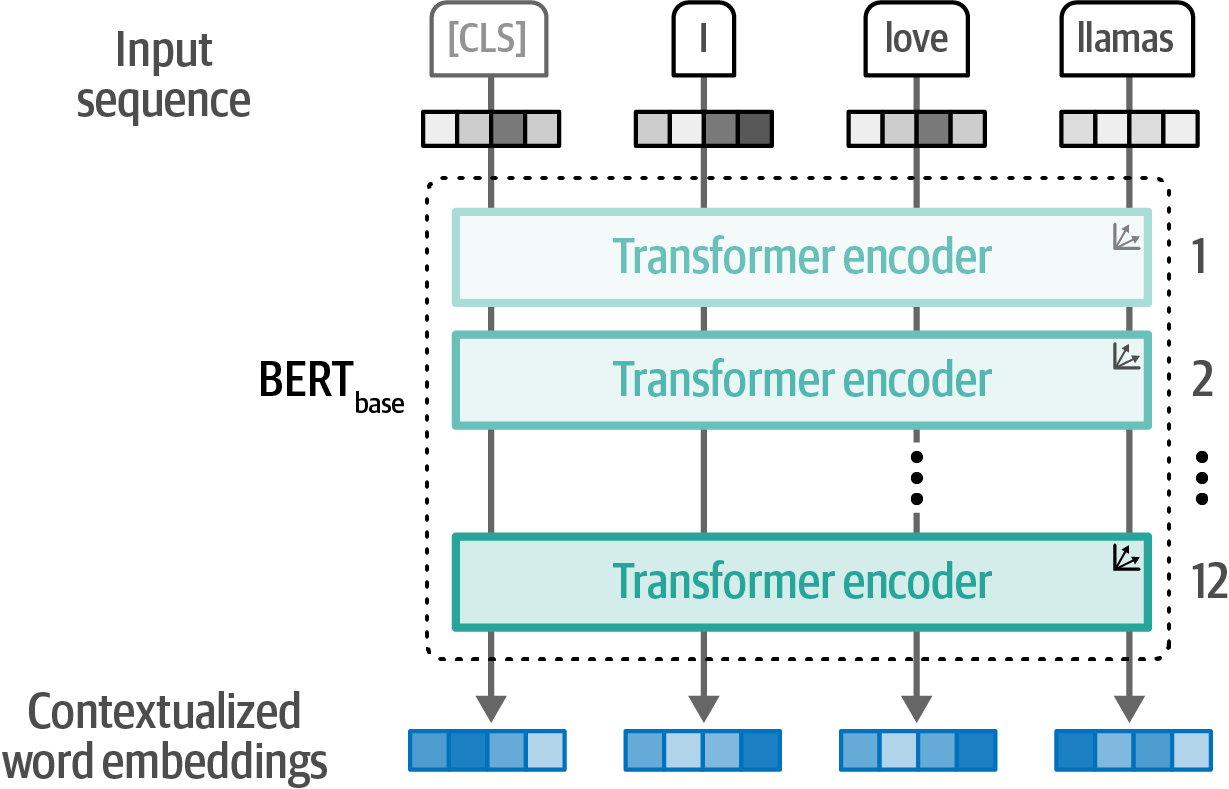

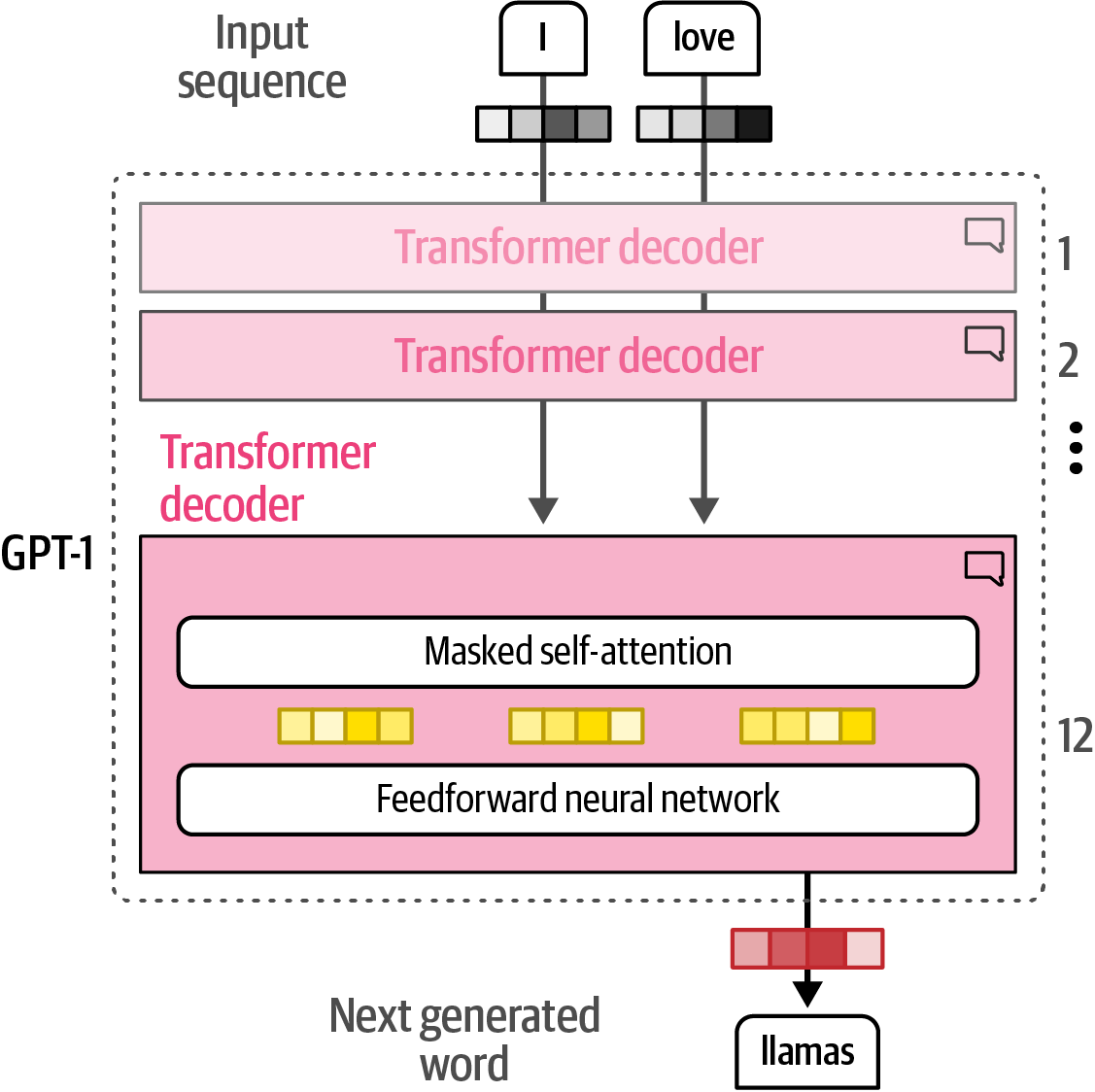

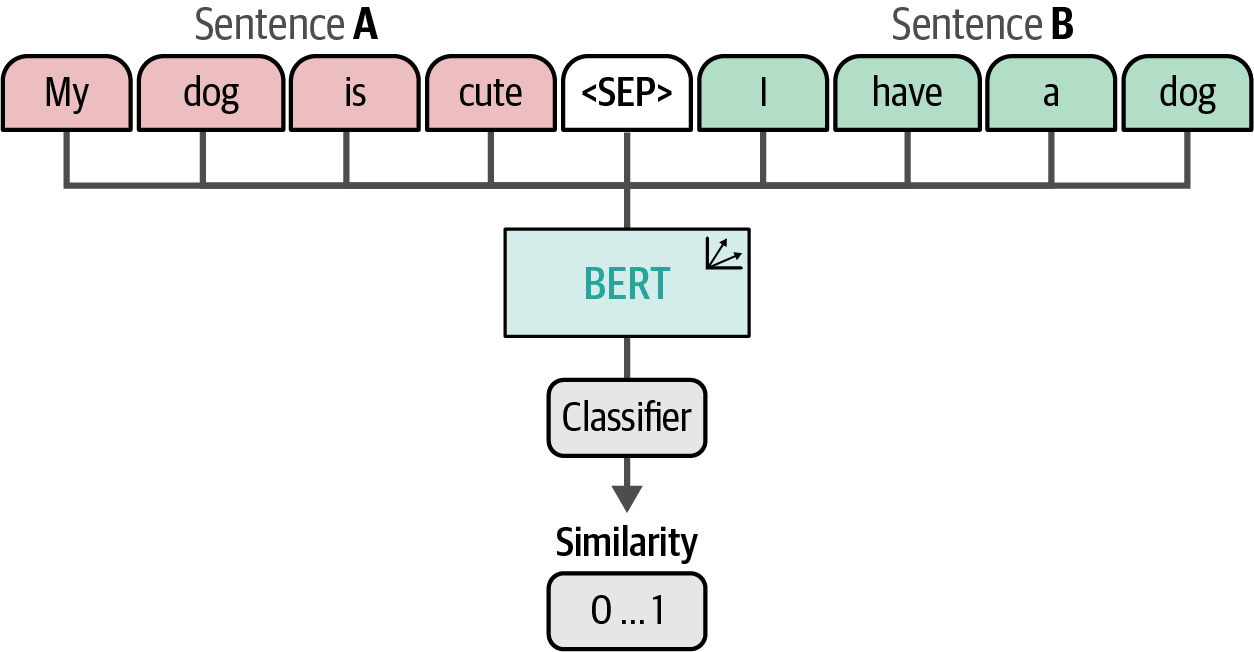

Encoder-only models (a.k.a., representation models) like Bidirectional Encoder Representations from Transformers(BERT) excel at language representation through masked language modeling, while decoder-only models (a.k.a., generative models) like Generative Pre-trained Transformer (GPT) focus on text generation and are the foundation for large language models.

Figure 10. The architecture of a BERT base model with 12 encoders.

Figure 10. The architecture of a BERT base model with 12 encoders. Figure 11. The architecture of a GPT-1. It uses a decoder-only architecture and removes the encoder-attention block.

Figure 11. The architecture of a GPT-1. It uses a decoder-only architecture and removes the encoder-attention block. -

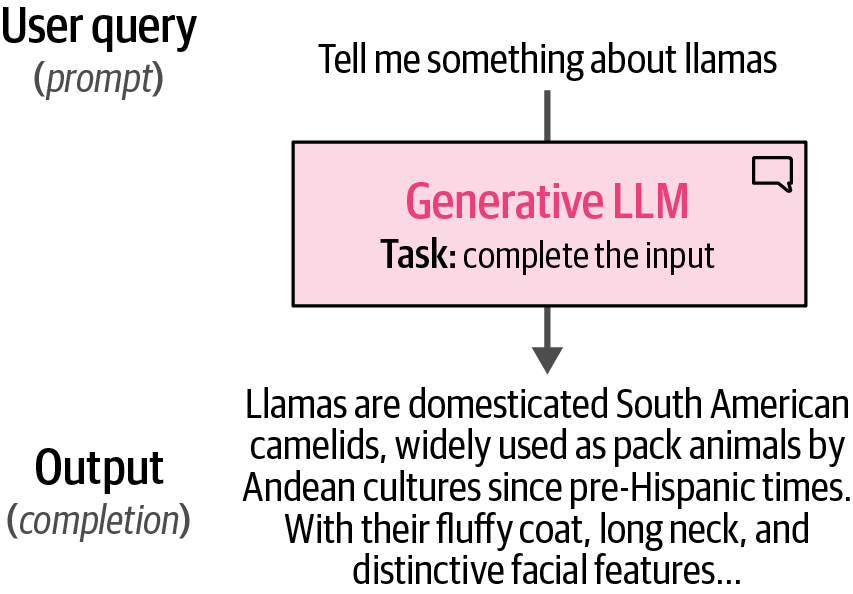

Generative LLMs function as sequence-to-sequence machines, initially designed for text completion, but their capability to be fine-tuned into chatbots or instruct models that can follow user prompts revealed their true potential.

Figure 12. Generative LLMs take in some input and try to complete it. With instruct models, this is more than just autocomplete and attempts to answer the question.

Figure 12. Generative LLMs take in some input and try to complete it. With instruct models, this is more than just autocomplete and attempts to answer the question. -

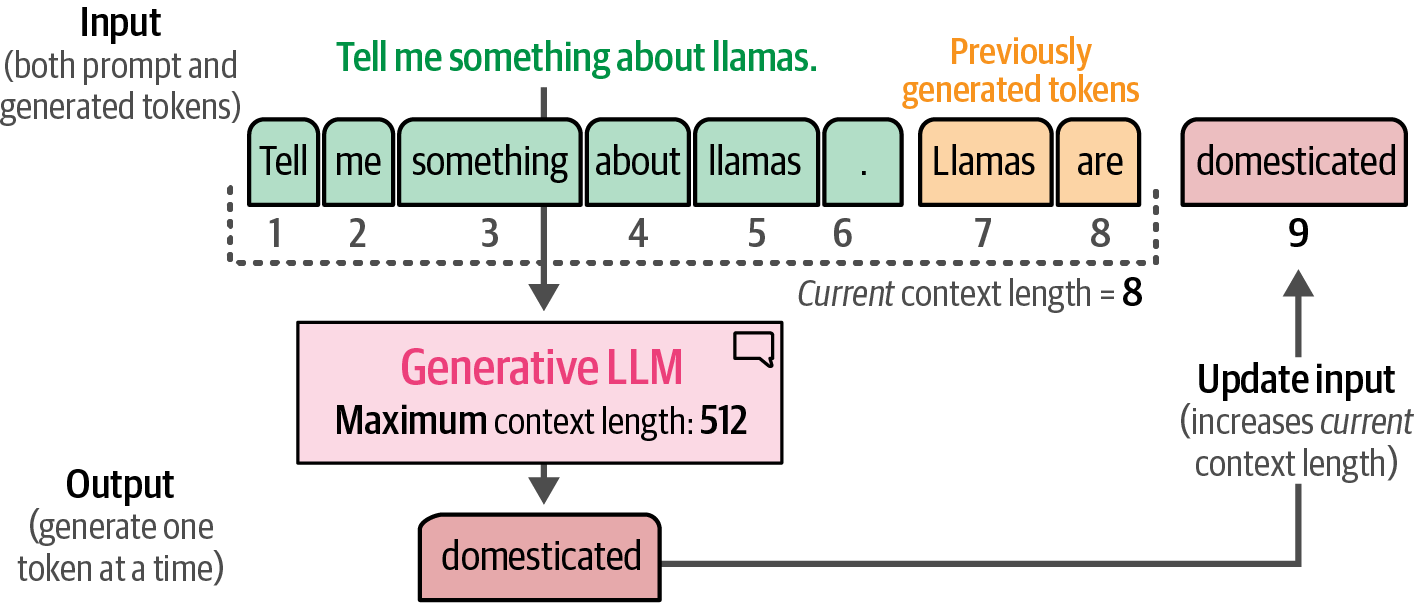

The context length, or window, represents the maximum number of tokens the model can process, enabling the generative LLM to handle larger documents, and the current length expands as the model generates new tokens due to its autoregressive nature.

Figure 13. The context length is the maximum context an LLM can handle.

Figure 13. The context length is the maximum context an LLM can handle. -

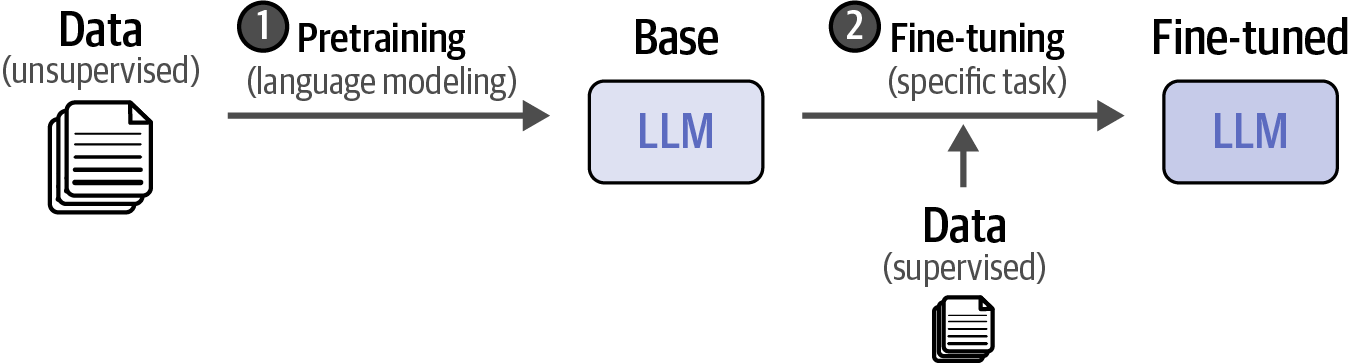

LLMs differ from traditional machine learning by using a two-step training process: pretraining, for general language learning, and fine-tuning (or post-training), to adapt the pretrained (foundation/base) model for specific tasks.

Figure 14. Compared to traditional machine learning, LLM training takes a multistep approach.

Figure 14. Compared to traditional machine learning, LLM training takes a multistep approach. -

Closed-source LLMs, like GPT-4 and Claude, are models that do not have their weights and architecture shared with the public, which are accessed via APIs, and offer high performance with managed hosting, but are costly and limit user control; open LLMs, such as Llama, share their architecture, enabling local use, fine-tuning, and privacy, but require powerful hardware and expertise.

-

The main source for finding and downloading LLMs is the Hugging Face Hub. Hugging Face is the organization behind the well-known Transformers package, which for years has driven the development of language models in general.

# If a connection to the Hugging Face URL (https://huggingface.co/) fails, try to set the HF_ENDPOINT environment variable to the mirror URL. import os os.environ["HF_ENDPOINT"] = "https://hf-mirror.com" -

Hugging Face, the organization behind the Transformers package, is the primary source for finding and downloading LLMs, built upon the Transformer framework.

import os from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline # HF_ENDPOINT controls the base URL used by the transformers library # to download models and other resources from the Hugging Face Hub. os.environ['HF_ENDPOINT'] = 'https://hf-mirror.com' # determine the device dev = 'cuda' if torch.cuda.is_available() else 'cpu' # load model and tokenizer MODEL_NAME = 'microsoft/Phi-4-mini-instruct' model = AutoModelForCausalLM.from_pretrained( MODEL_NAME, torch_dtype='auto', device_map=dev, trust_remote_code=True, ) tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME) # create a pipeline pipe = pipeline( "text-generation", model=model, tokenizer=tokenizer, return_full_text=False, max_new_tokens=500, do_sample=True, ) # the prompt (user input / query) messages = [{"role": "user", "content": "Create a funny joke about chickens."}] # generate output output = pipe(messages) print(output[0]["generated_text"])Why did the chicken join the band? Because he heard they had the "cluck-loudest" performers around!# clear memory and empty the VRAM import gc import torch # attempt to delete the model, tokenizer, and pipeline objects from memory del model, tokenizer, pipe # flush memory gc.collect() if torch.cuda.is_available(): # if a GPU is available, empty the CUDA cache to free up GPU memory torch.cuda.empty_cache()

2. Tokens and Embeddings

Tokens and embeddings are two of the central concepts of using large language models (LLMs).

2.1. LLM Tokenization

import os

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

# HF_ENDPOINT controls the base URL used by the transformers library

# to download models and other resources from the Hugging Face Hub.

os.environ['HF_ENDPOINT'] = 'https://hf-mirror.com'

# determine the device

dev = 'cuda' if torch.cuda.is_available() else 'cpu'

# load model and tokenizer

MODEL_NAME = 'microsoft/Phi-4-mini-instruct'

model = AutoModelForCausalLM.from_pretrained(

MODEL_NAME,

torch_dtype='auto',

device_map=dev,

trust_remote_code=True,

)

tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)

prompt = '<s> Write an email apologizing to Sarah for the tragic gardening mishap. Explain how it happened.<|assistant|>'

# tokenize the input prompt

input_ids = tokenizer(prompt, return_tensors='pt').input_ids.to(dev)

print(f'input_ids: {input_ids}')

# generate the text

output_ids = model.generate(input_ids=input_ids, max_new_tokens=20)

print(f'output_ids: {output_ids}')

# print the output

print(tokenizer.decode(output_ids[0]))input_ids: tensor([[101950, 29, 16465, 448, 3719, 39950, 6396, 316, 32145,

395, 290, 62374, 66241, 80785, 403, 13, 115474, 1495,

480, 12570, 13, 200019]])

output_ids: tensor([[101950, 29, 16465, 448, 3719, 39950, 6396, 316, 32145,

395, 290, 62374, 66241, 80785, 403, 13, 115474, 1495,

480, 12570, 13, 200019, 18174, 25, 336, 2768, 512,

6537, 10384, 395, 290, 193145, 147276, 403, 279, 36210,

32145, 4464, 40, 5498, 495, 3719]])

<s> Write an email apologizing to Sarah for the tragic gardening mishap. Explain how it happened.<|assistant|>Subject: Sincere Apologies for the Gardening Mishap

Dear Sarah,

I hope this email-

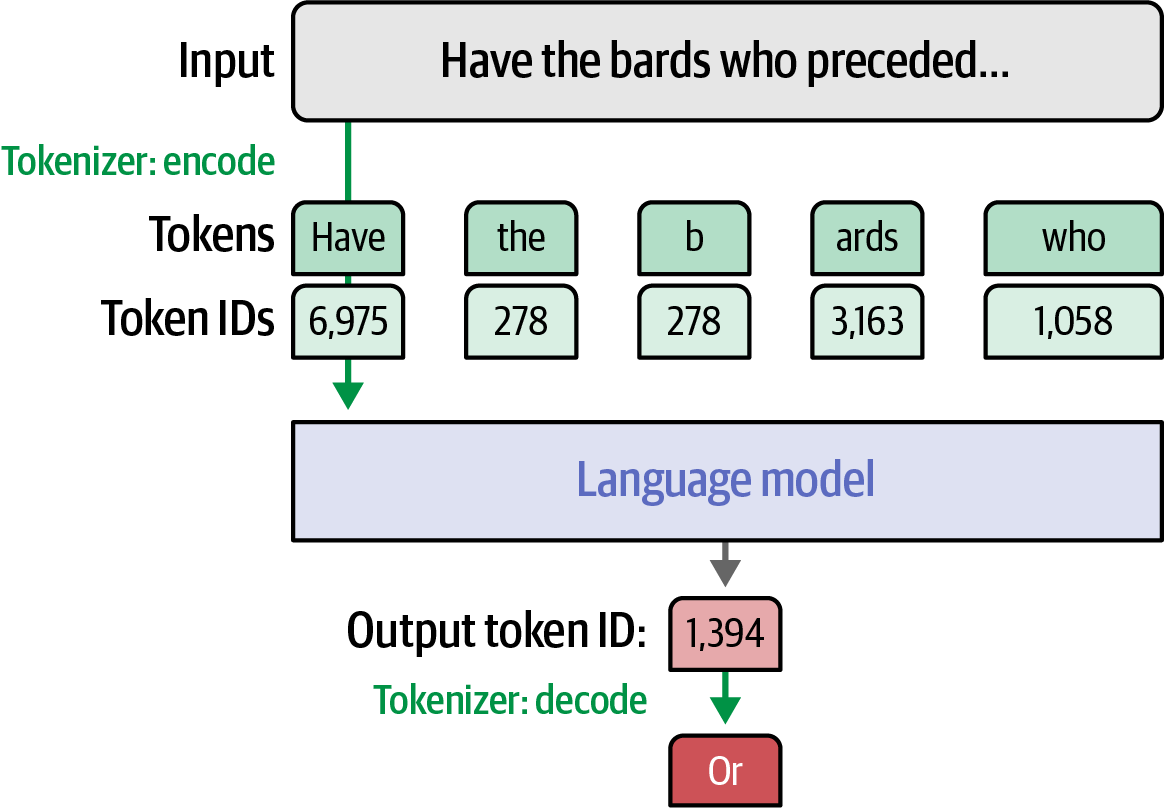

Tokens, the units into which text prompts are broken for model input, also form the model’s output.

Figure 16. A tokenizer encodes input prompts into token ID lists for the language model and decodes the model’s output token IDs back into words or tokens.

Figure 16. A tokenizer encodes input prompts into token ID lists for the language model and decodes the model’s output token IDs back into words or tokens.-

Each ID corresponds to a specific token (character, word, or subword) in the tokenizer’s vocabulary.

-

The tokenizer’s vocabulary acts as a lookup table, allowing the model to convert between text and these integer representations.

for id in [101950, 29, 16465, 448, 3719, 39950]: print(tokenizer.decode(id)) # <s # > # Write # an # email # apolog for id in [18174, 25, 336, 2768, 512]: print(tokenizer.decode(id) # Subject # : # S # inc # ere

-

-

Tokenization is determined by three major design decisions: the tokenizer algorithm (e.g., BPE, WordPiece, SentencePiece), tokenization parameters (including vocabulary size, special tokens, capitalization, treatment of capitalization and different languages), and the dataset the tokenizer is trained on (a tokenizer trained on an English text dataset will be different from another trained on a code dataset or a multilingual text dataset).

-

Tokenization methods vary in granularity, from word-level to byte-level, with subword tokenization offering a balance of vocabulary expressiveness and efficiency, making it the most common approach in modern language models.

2.2. Token Embeddings

Text --> Tokens --> Token IDs --> Embeddings (Vectors)-

A tokenizer, once trained, becomes intrinsically linked to its language model during the model’s training; consequently, a pretrained language model cannot function with a different tokenizer without retraining, as their vocabularies and tokenization schemes are aligned.

-

An embedding is a dense, numerical vector representation of a token (like a word or subword) that captures its semantic meaning within a high-dimensional space, enabling language models to understand and process relationships between words.

-

A language model stores static embedding vectors for each token in its vocabulary, but also generates contextualized word embeddings, dynamically representing a token based on its context instead of a single, fixed vector.

-

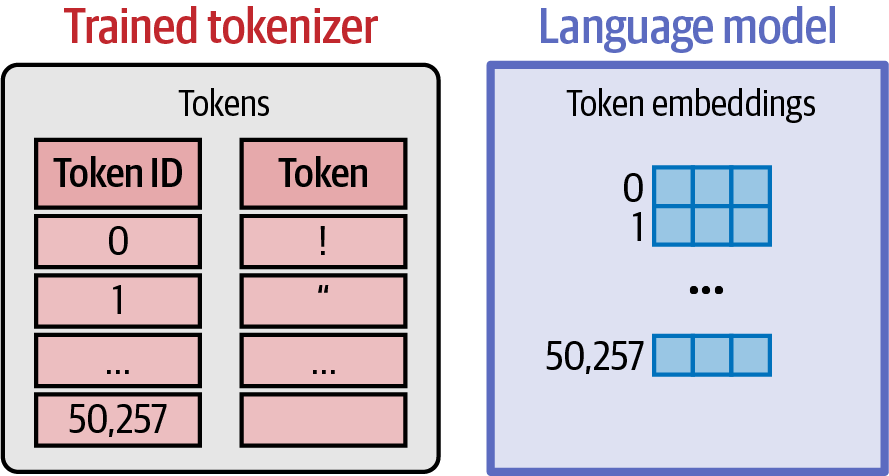

A language model holds an embedding vector associated with each token in its tokenizer.

Figure 17. A language model holds an embedding vector associated with each token in its tokenizer.

Figure 17. A language model holds an embedding vector associated with each token in its tokenizer. -

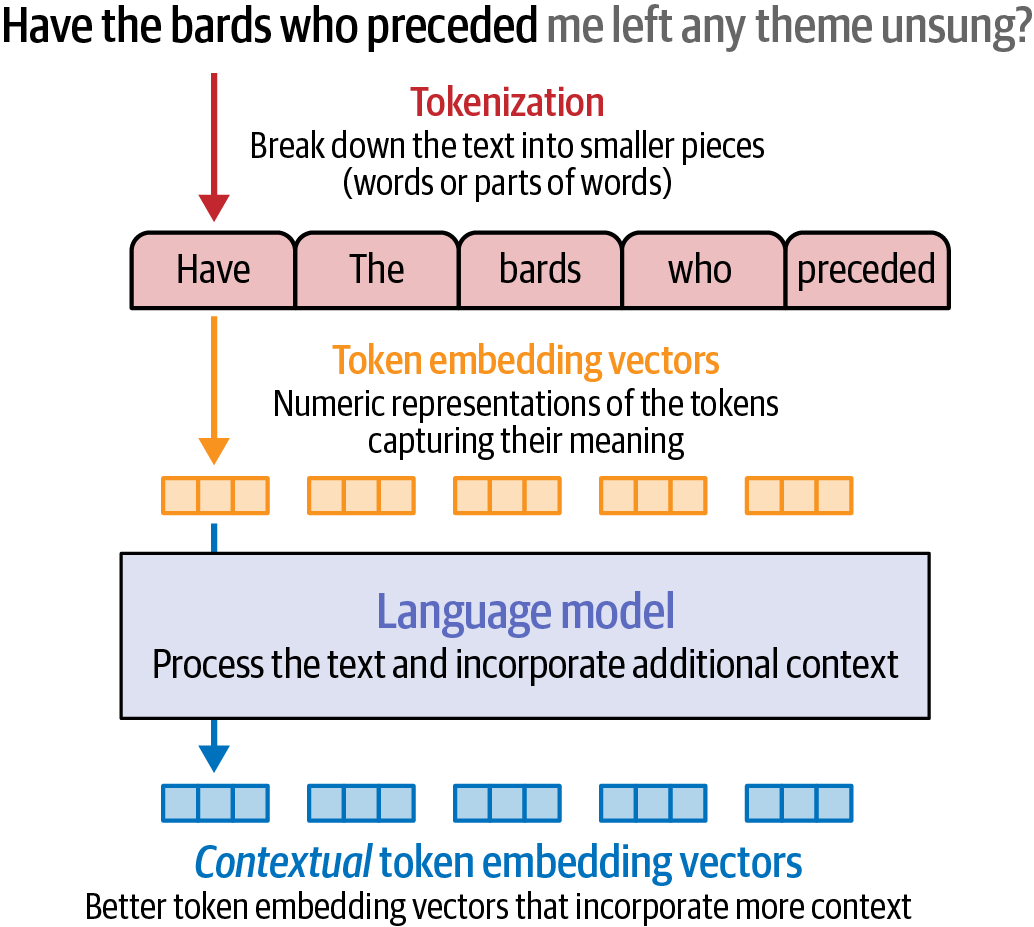

A language model operates on raw, static embeddings as its input and produces contextual text embeddings.

Figure 18. A language model operates on raw, static embeddings as its input and produces contextual text embeddings.

Figure 18. A language model operates on raw, static embeddings as its input and produces contextual text embeddings.from transformers import AutoModel, AutoTokenizer # load a tokenizer tokenizer = AutoTokenizer.from_pretrained('microsoft/deberta-base') # load a language model model = AutoModel.from_pretrained('microsoft/deberta-v3-xsmall') # tokenize the sentence: convert text to token IDs tokens = tokenizer('Hello world', return_tensors='pt') # print the decoded tokens to show tokenization for token_id in tokens['input_ids'][0]: print(tokenizer.decode(token_id)) print('\n') # process the token IDs through the model to get contextualized embeddings output = model(**tokens)[0] # show the shape of the embedding result print(f'{output.shape}\n') # output contains the contextualized embedding vectors print(output)[CLS] Hello world [SEP] torch.Size([1, 4, 384]) tensor([[[-3.4816, 0.0861, -0.1819, ..., -0.0612, -0.3911, 0.3017], [ 0.1898, 0.3208, -0.2315, ..., 0.3714, 0.2478, 0.8048], [ 0.2071, 0.5036, -0.0485, ..., 1.2175, -0.2292, 0.8582], [-3.4278, 0.0645, -0.1427, ..., 0.0658, -0.4367, 0.3834]]], grad_fn=<NativeLayerNormBackward0>)

-

2.3. Text Embeddings

Text embeddings are single, dense vectors that represent the semantic meaning of entire sentences, paragraphs, or documents, in contrast to token embeddings, which represent individual words or subwords.

from sentence_transformers import SentenceTransformer

# load model

model = SentenceTransformer('sentence-transformers/all-MiniLM-L6-v2')

# convert text to text embeddings

embeddings = model.encode("Best movie ever!")

print(embeddings.shape) # (384,)|

Input Sequence Length: https://www.sbert.net/

For transformer models like BERT, RoBERTa, DistilBERT etc., the runtime and memory requirement grows quadratic with the input length. This limits transformers to inputs of certain lengths. A common value for BERT-based models are 512 tokens, which corresponds to about 300-400 words (for English). Each model has a maximum sequence length under |

3. Large Language Models

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

# determine the device

dev = 'cuda' if torch.cuda.is_available() else 'cpu'

# load model and tokenizer

MODEL_NAME = 'microsoft/Phi-4-mini-instruct'

model = AutoModelForCausalLM.from_pretrained(

MODEL_NAME,

torch_dtype='auto',

device_map=dev,

trust_remote_code=True,

)

tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)

# create a pipeline

generator = pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

return_full_text=False,

max_new_tokens=50,

do_sample=False,

)3.1. Inputs and Outputs

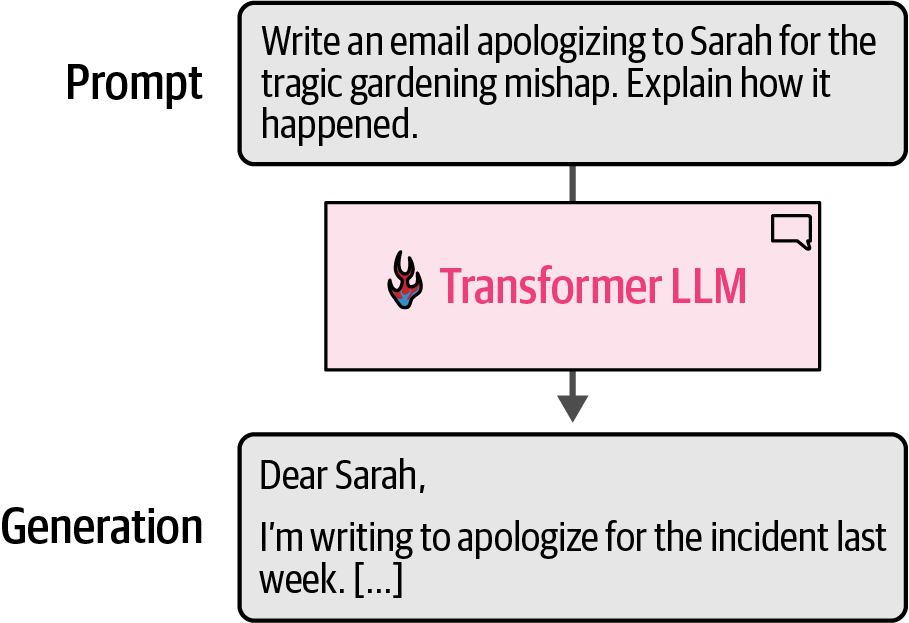

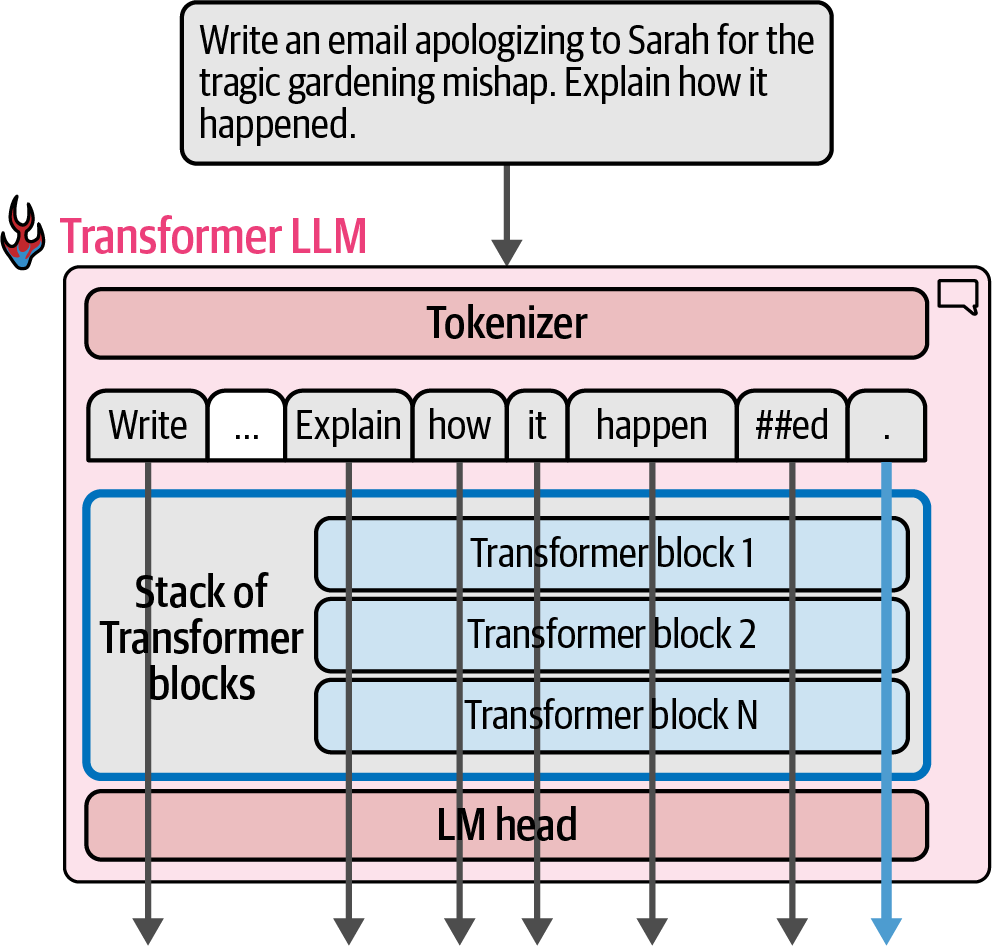

The most common picture of understanding the behavior of a Transformer LLM is to think of it as a software system that takes in text and generates text in response.

-

Once a large enough text-in-text-out model is trained on a large enough high-quality dataset, it becomes able to generate impressive and useful outputs.

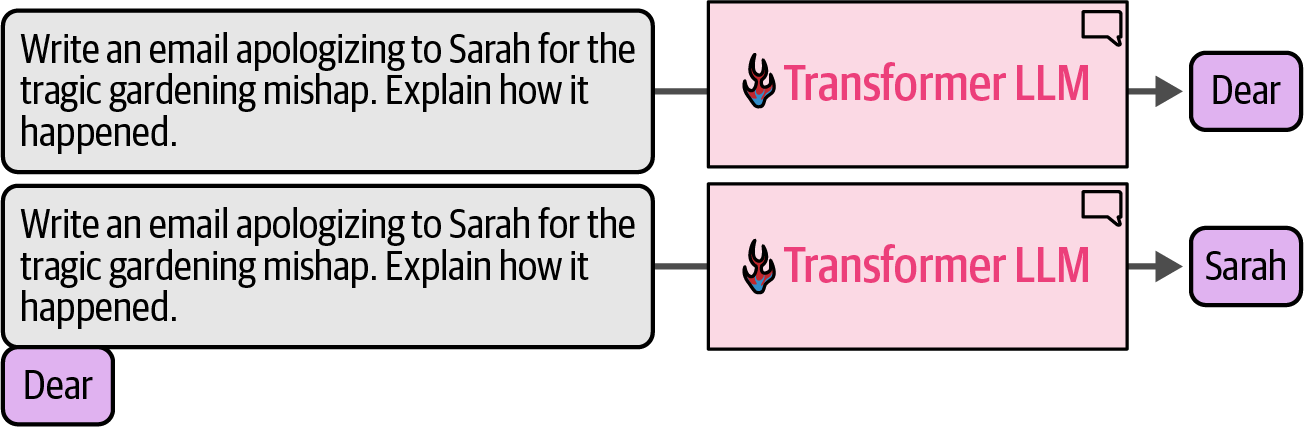

Figure 19. At a high level of abstraction, Transformer LLMs take a text prompt and output generated text.

Figure 19. At a high level of abstraction, Transformer LLMs take a text prompt and output generated text. -

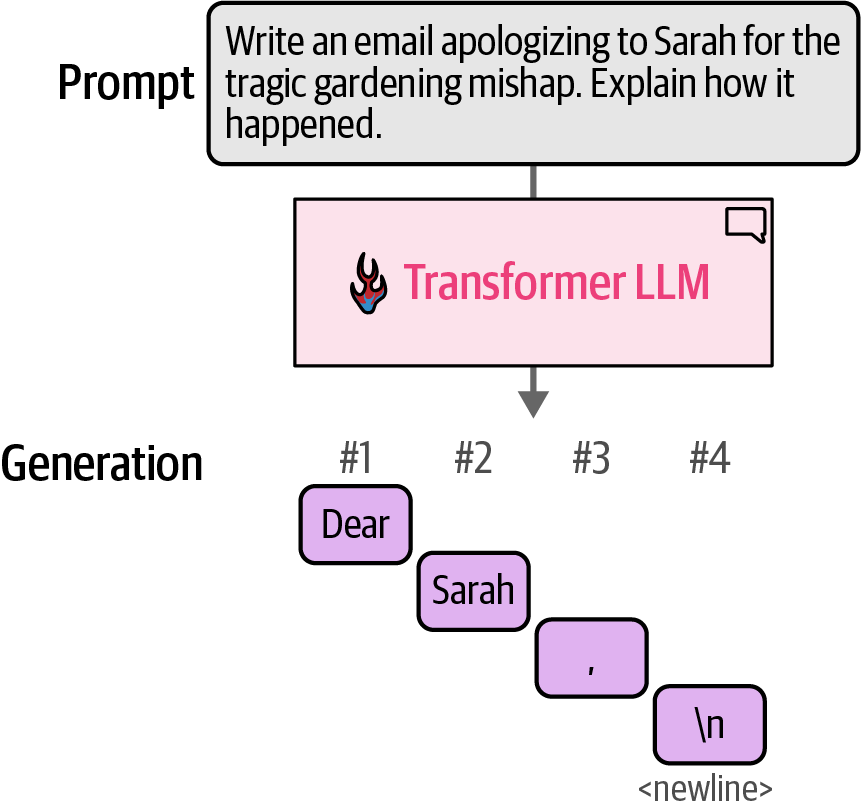

The model does not generate the text all in one operation; it actually generates one token at a time.

Figure 20. Transformer LLMs generate one token at a time, not the entire text at once.

Figure 20. Transformer LLMs generate one token at a time, not the entire text at once. -

Each token generation step is one forward pass through the model (that’s machine-learning speak for the inputs going into the neural network and flowing through the computations it needs to produce an output on the other end of the computation graph).

-

After each token generation, the input prompt for the next generation step is tweaked by appending the output token to the end of the input prompt.

Figure 21. An output token is appended to the prompt, then this new text is presented to the model again for another forward pass to generate the next token.

Figure 21. An output token is appended to the prompt, then this new text is presented to the model again for another forward pass to generate the next token. -

Text generation LLMs are called autoregressive models because they generate text sequentially, using prior outputs as input, unlike text representation models like BERT, which process the entire input at once.

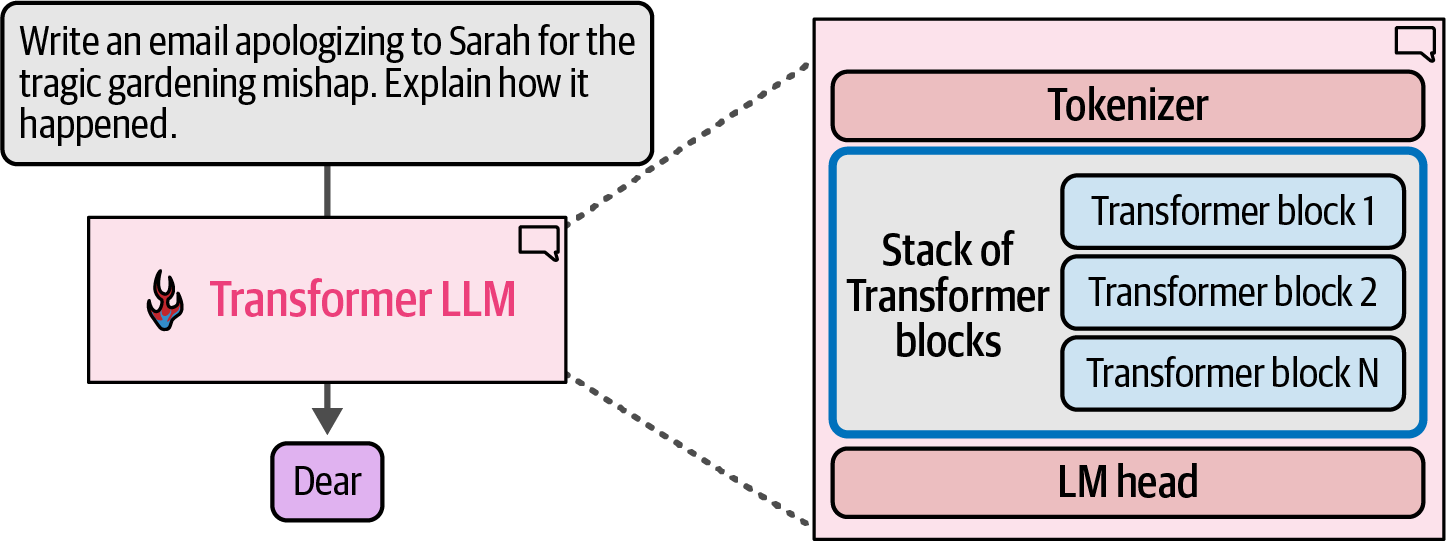

3.2. Components

-

A language model consists of a tokenizer, a stack of Transformer blocks for processing, and an LM head that converts the processed information into probability scores for the next token.

Figure 22. A Transformer LLM is made up of a tokenizer, a stack of Transformer blocks, and a language modeling head.

Figure 22. A Transformer LLM is made up of a tokenizer, a stack of Transformer blocks, and a language modeling head. -

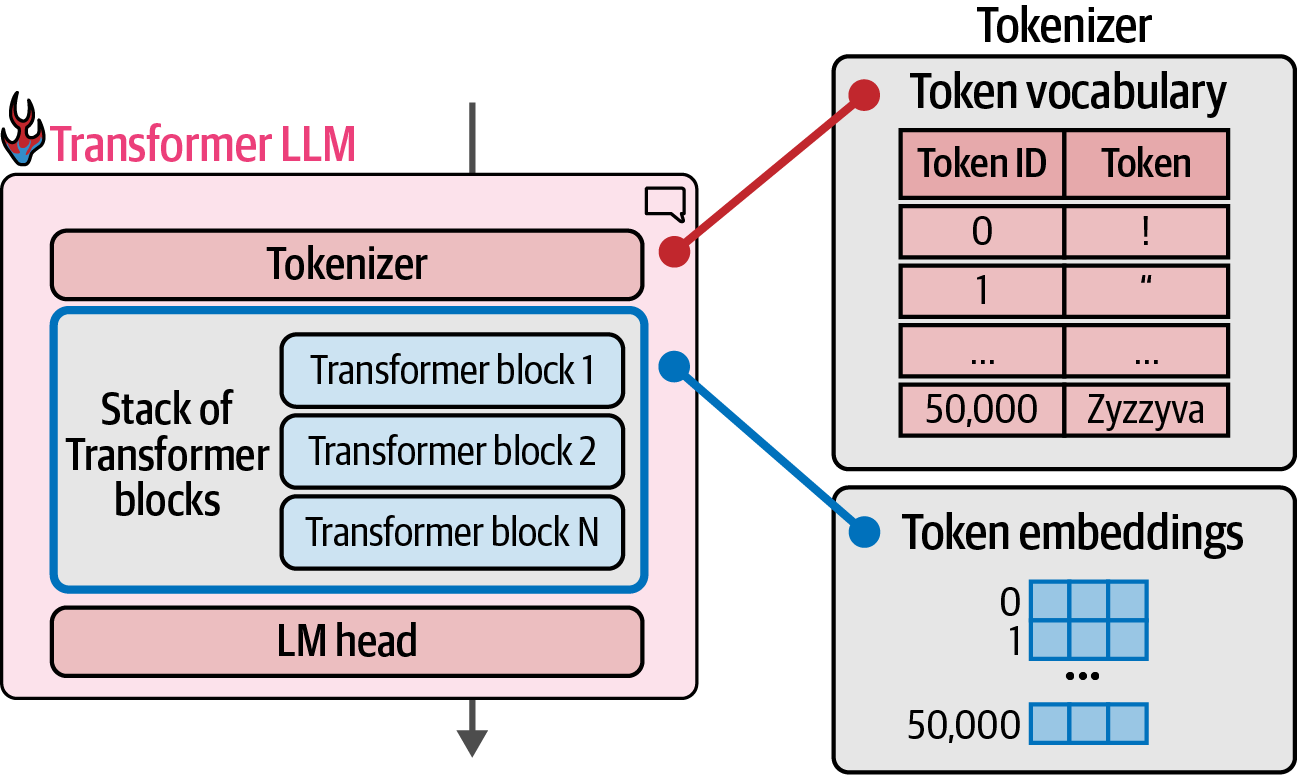

The model has a vector representation associated with each of these tokens in the vocabulary (token embeddings).

Figure 23. The tokenizer has a vocabulary of 50,000 tokens. The model has token embeddings associated with those embeddings.

Figure 23. The tokenizer has a vocabulary of 50,000 tokens. The model has token embeddings associated with those embeddings. -

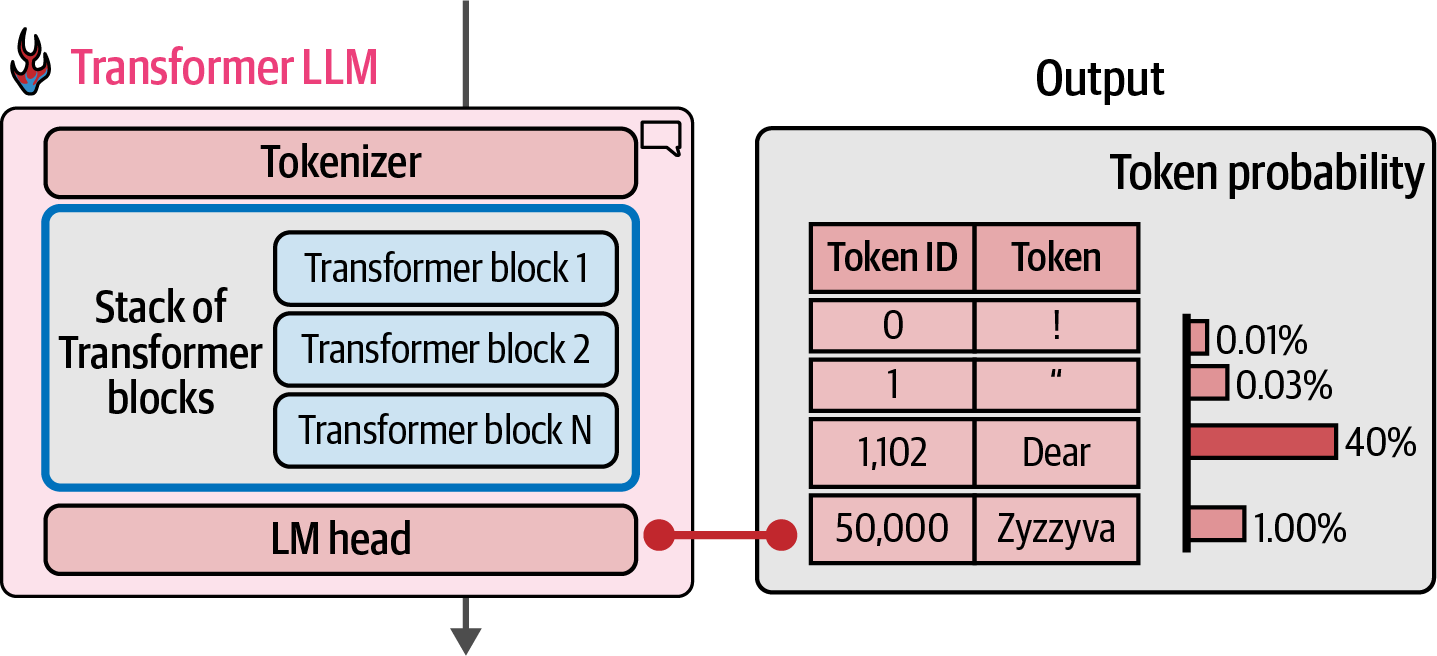

For each generated token, the process flows once through each of the Transformer blocks in the stack in order, then to the LM head, which finally outputs the probability distribution for the next token.

Figure 24. At the end of the forward pass, the model predicts a probability score for each token in the vocabulary.

Figure 24. At the end of the forward pass, the model predicts a probability score for each token in the vocabulary.import torch from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline # determine the device dev = 'cuda' if torch.cuda.is_available() else 'cpu' # load model and tokenizer MODEL_NAME = 'microsoft/Phi-4-mini-instruct' model = AutoModelForCausalLM.from_pretrained( MODEL_NAME, torch_dtype='auto', device_map=dev, trust_remote_code=True, ) print(model)Phi3ForCausalLM( (model): Phi3Model( (embed_tokens): Embedding(200064, 3072, padding_idx=199999) (layers): ModuleList( (0-31): 32 x Phi3DecoderLayer( (self_attn): Phi3Attention( (o_proj): Linear(in_features=3072, out_features=3072, bias=False) (qkv_proj): Linear(in_features=3072, out_features=5120, bias=False) ) (mlp): Phi3MLP( (gate_up_proj): Linear(in_features=3072, out_features=16384, bias=False) (down_proj): Linear(in_features=8192, out_features=3072, bias=False) (activation_fn): SiLU() ) (input_layernorm): Phi3RMSNorm((3072,), eps=1e-05) (post_attention_layernorm): Phi3RMSNorm((3072,), eps=1e-05) (resid_attn_dropout): Dropout(p=0.0, inplace=False) (resid_mlp_dropout): Dropout(p=0.0, inplace=False) ) ) (norm): Phi3RMSNorm((3072,), eps=1e-05) (rotary_emb): Phi3RotaryEmbedding() ) (lm_head): Linear(in_features=3072, out_features=200064, bias=False) )

3.3. Probability Distribution (Sampling/Decoding)

Language models use a probability distribution to determine the next token, which is called the decoding strategy.

-

The easiest strategy would be to always pick the token with the highest probability score, which is called greedy decoding (equivalent to setting the temperature to zero in an LLM).

In practice, this doesn’t tend to lead to the best outputs for most use cases.

-

A better approach is to introduce randomness by sampling from the probability distribution, sometimes choosing the second or third highest probability token.

3.4. Parallel Token Processing and Context Size

-

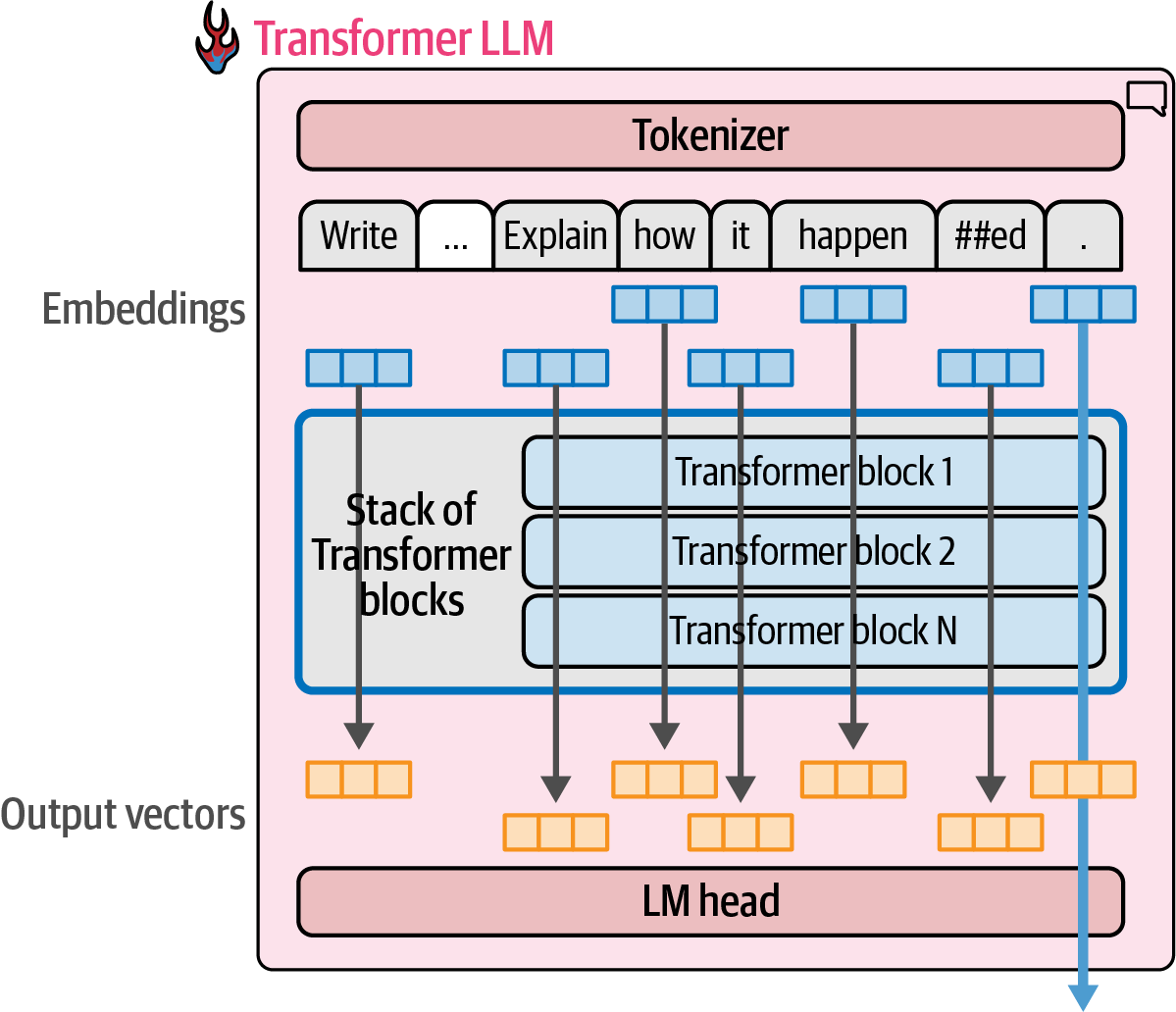

Transformers excel at parallel processing, unlike earlier architectures, which is evident in how they handle token generation.

-

Each input token is processed simultaneously through its own computation path or stream.

Figure 25. Each token is processed through its own stream of computation (with some interaction between them in attention steps).

Figure 25. Each token is processed through its own stream of computation (with some interaction between them in attention steps). -

A model with 4K context length or context size can only process 4K tokens and would only have 4K of these streams.

-

-

Each of the token streams starts with an input vector (the embedding vector and some positional information).

Figure 26. Each processing stream takes a vector as input and produces a final resulting vector of the same size (often referred to as the model dimension).

Figure 26. Each processing stream takes a vector as input and produces a final resulting vector of the same size (often referred to as the model dimension).-

At the end of the stream, another vector emerges as the result of the model’s processing.

-

For text generation, only the output result of the last stream is used to predict the next token.

-

That output vector is the only input into the LM head as it calculates the probabilities of the next token.

-

-

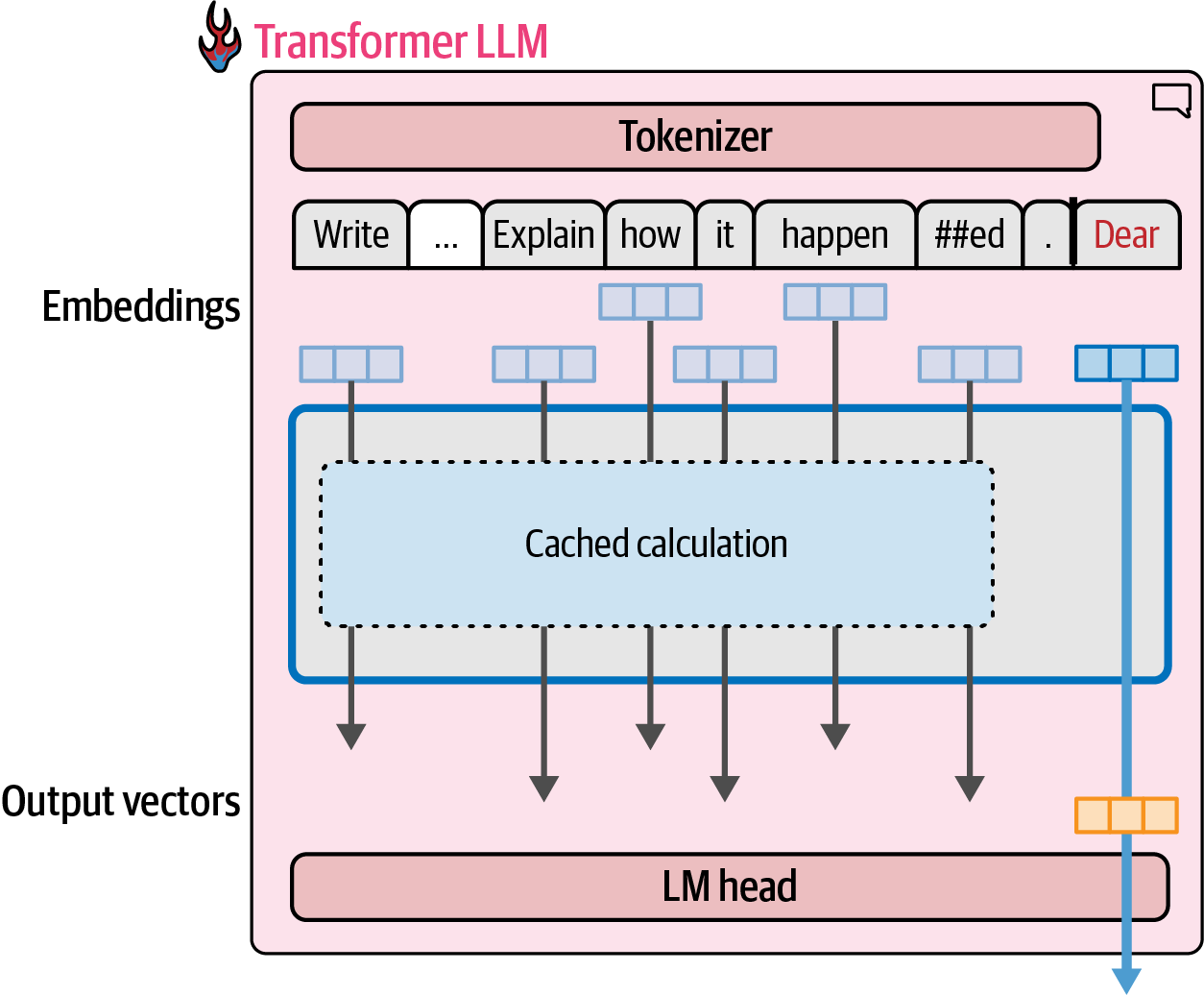

3.5. Keys and Values Caching

Transformer models use a key/value (KV) cache to cache the results of the previous calculation (especially some of the specific vectors in the attention mechanism), speeding up text generation by avoiding redundant calculations.

-

In Hugging Face Transformers, cache is enabled by default, and can be disabled it by setting

use_cachetoFalse.prompt = 'Write a very long email apologizing to Sarah for the tragic gardening mishap. Explain how it happened.' input_ids = tokenizer(prompt, return_tensors='pt').input_ids.to(dev) generation_output = model.generate( input_ids=input_ids, max_new_tokens=100, use_cache=False, )

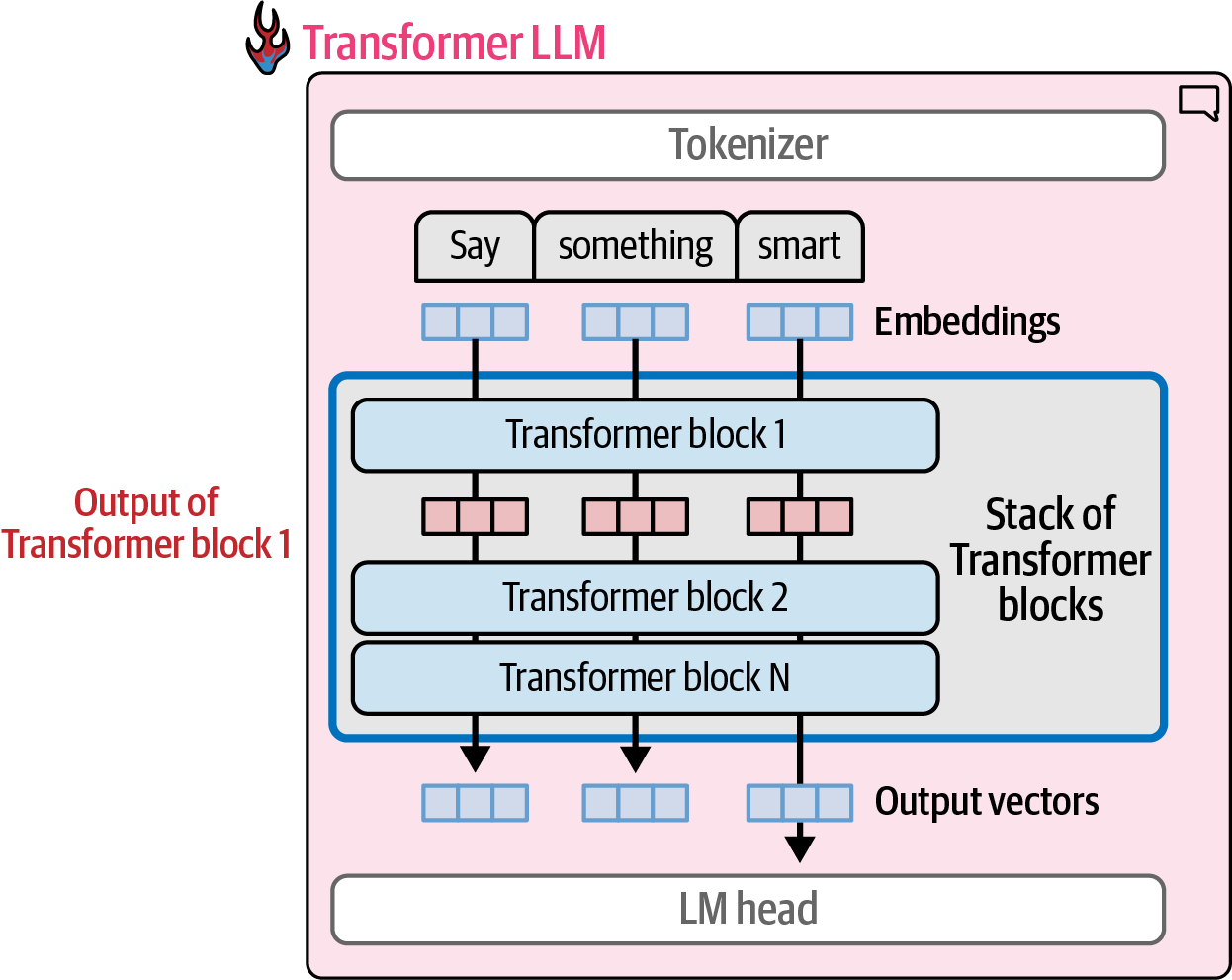

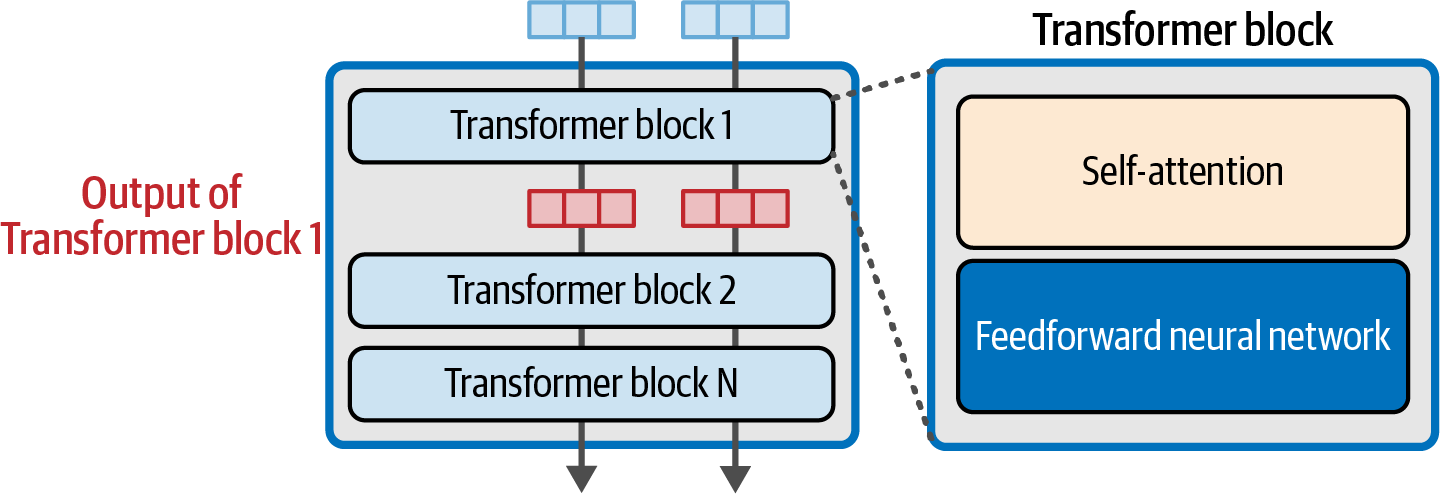

3.6. Transformer Block

Transformer LLMs are composed of a series Transformer blocks (often in the range of six in the original Transformer paper, to over a hundred in many large LLMs) and each block processes its inputs, then passes the results of its processing to the next block.

-

A Transformer block is made up of two successive components:

Figure 29. A Transformer block is made up of a self-attention layer and a feedforward neural network.

Figure 29. A Transformer block is made up of a self-attention layer and a feedforward neural network.-

The attention layer is mainly concerned with incorporating relevant information from other input tokens and positions

-

The feedforward layer houses the majority of the model’s processing capacity

-

-

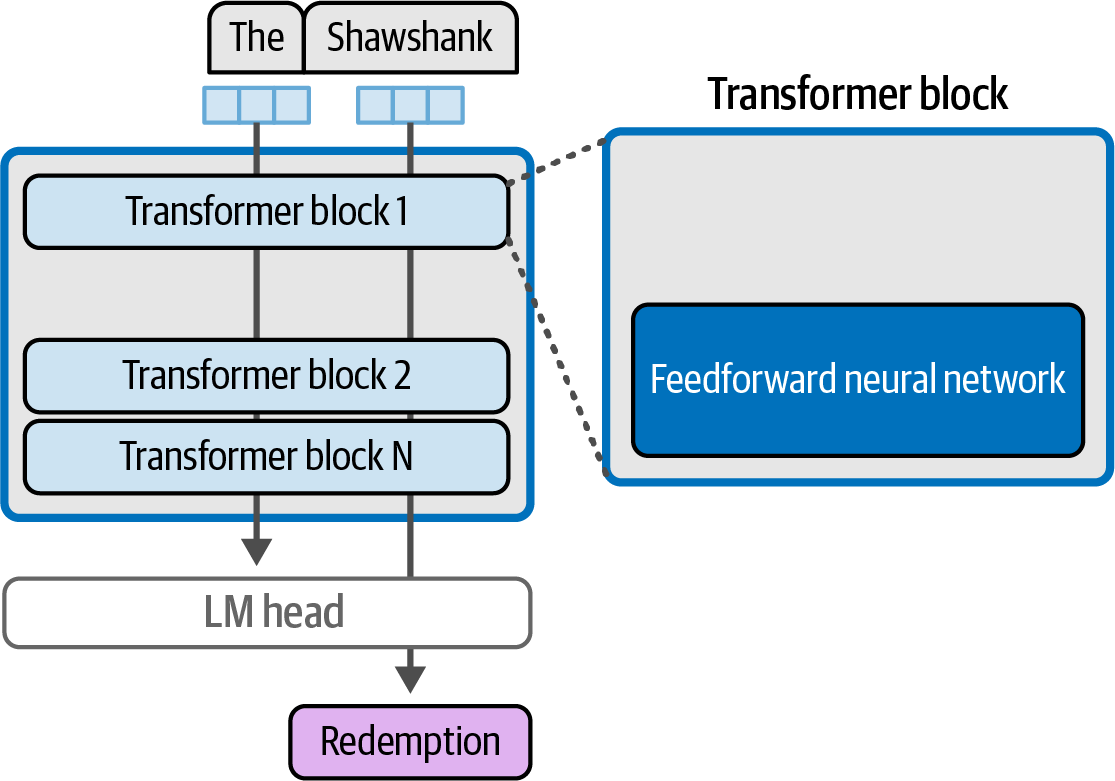

The feedforward network in a Transformer model stores learned information, such as 'The Shawshank' and 'Redemption,' and enables interpolation and generalization for generating text on unseen inputs.

Figure 30. The feedforward neural network component of a Transformer block likely does the majority of the model’s memorization and interpolation.

Figure 30. The feedforward neural network component of a Transformer block likely does the majority of the model’s memorization and interpolation. -

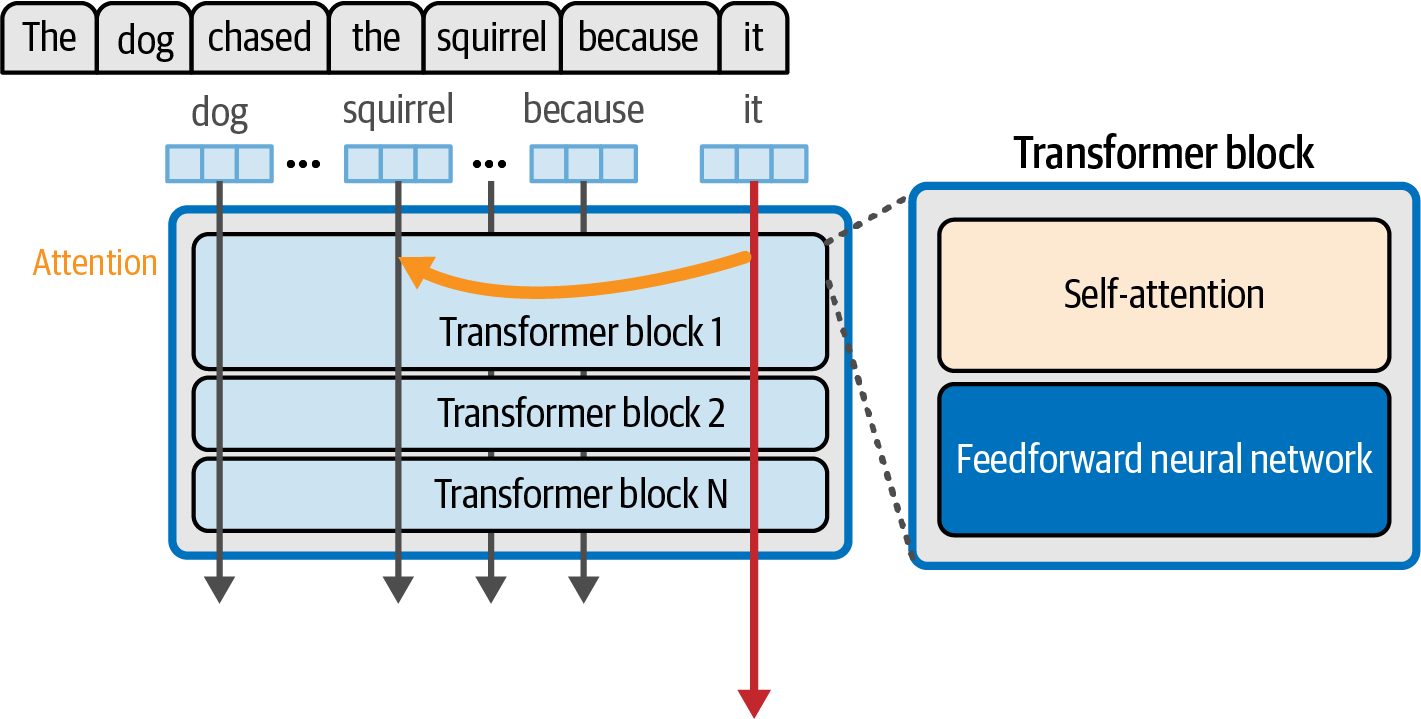

The attention layer in a Transformer model enables context awareness, crucial for language understanding beyond simple memorization.

Figure 31. The self-attention layer incorporates relevant information from previous positions that help process the current token.

Figure 31. The self-attention layer incorporates relevant information from previous positions that help process the current token.

4. Text Classification

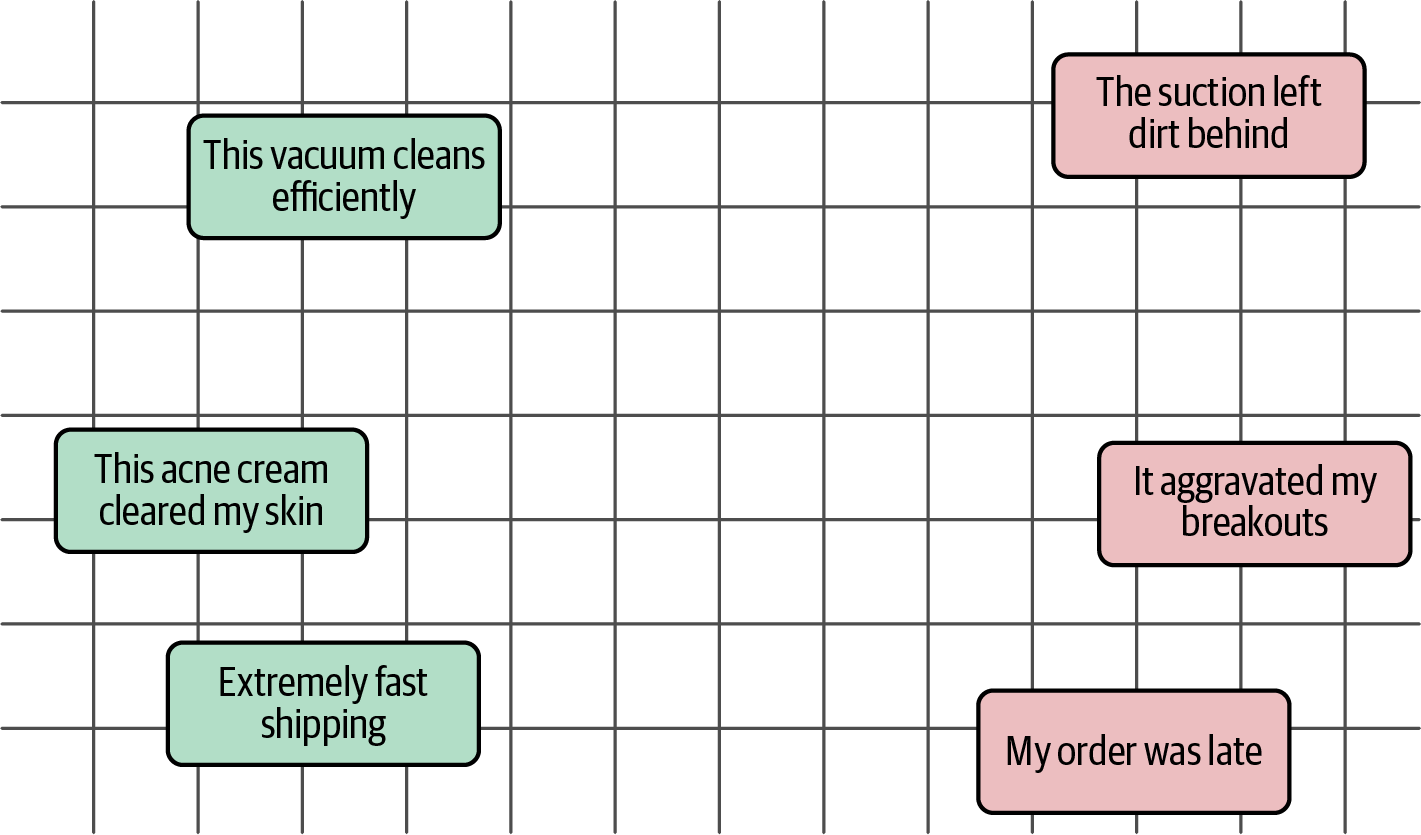

A common task in natural language processing is classification, where the goal is to train a model to assign a label or class to input text, a technique widely used in applications like sentiment analysis and intent detection, significantly impacted by both representative and generative language models.

The Hugging Face Hub is a collaborative platform for machine learning resources (models, datasets, applications), and the datasets package can be used to load datasets.

The dataset is split into train (for training), test (for final evaluation), and validation (for intermediate generalization checks, especially during hyperparameter tuning).

from datasets import load_dataset

# load data

data = load_dataset("rotten_tomatoes") # the well-known 'rotten_tomatoes' dataset

dataDatasetDict({

train: Dataset({

features: ['text', 'label'],

num_rows: 8530

})

validation: Dataset({

features: ['text', 'label'],

num_rows: 1066

})

test: Dataset({

features: ['text', 'label'],

num_rows: 1066

})

})4.1. Representation Models

-

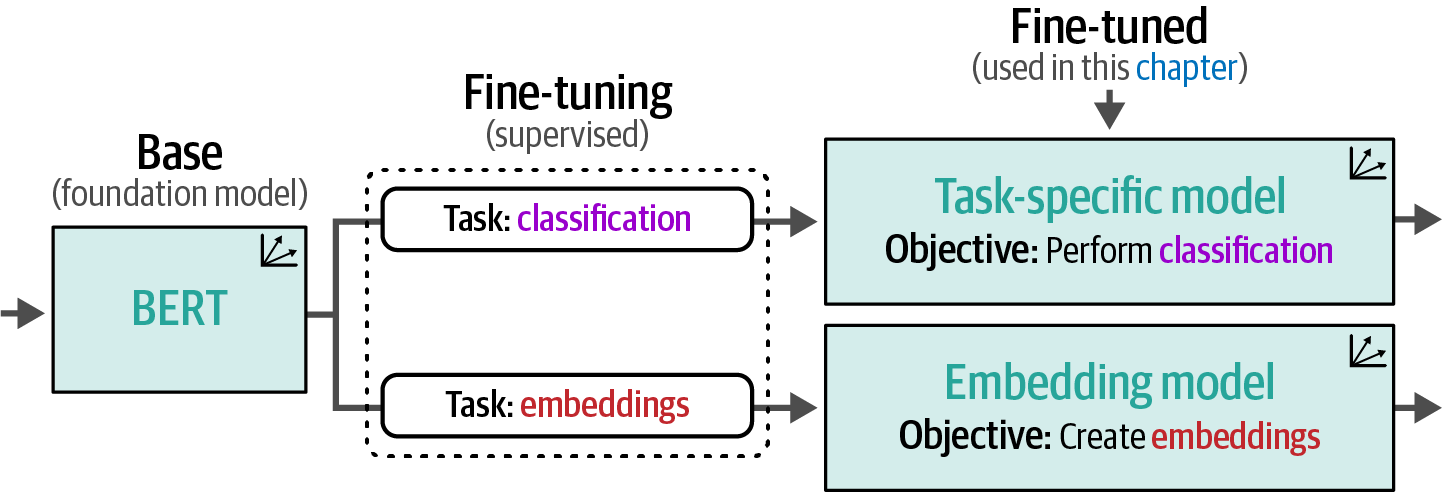

Classification with pretrained representation models generally comes in two flavors, either using a task-specific model or an embedding model.

Figure 33. A foundation model is fine-tuned for specific tasks; for instance, to perform classification or generate general-purpose embeddings.

Figure 33. A foundation model is fine-tuned for specific tasks; for instance, to perform classification or generate general-purpose embeddings. -

A task-specific model is a representation model, such as BERT, trained for a specific task, like sentiment analysis.

-

An embedding model generates general-purpose embeddings that can be used for a variety of tasks not limited to classification, like semantic search.

Figure 34. Perform classification directly with a task-specific model or indirectly with general-purpose embeddings.

Figure 34. Perform classification directly with a task-specific model or indirectly with general-purpose embeddings.

4.1.1. Task-Specific Model

from datasets import load_dataset

# load the well-known 'rotten_tomatoes' dataset for sentiment analysis

data = load_dataset("rotten_tomatoes")

# determine the device to use for computation (GPU if available, otherwise CPU)

import torch

dev = 'cuda' if torch.cuda.is_available() else 'cpu'

from transformers import pipeline

# specify the path to the pre-trained Twitter-RoBERTa-base for Sentiment Analysis model

model_path = "cardiffnlp/twitter-roberta-base-sentiment-latest"

# load the pre-trained sentiment analysis model into a pipeline for easy inference

pipe = pipeline(

model=model_path,

tokenizer=model_path,

return_all_scores=True, # return the scores for all sentiment labels

device=dev, # specify the device to run the pipeline on

)

import numpy as np

from tqdm import tqdm # for progress bar during inference

from transformers.pipelines.pt_utils import KeyDataset # utility to feed data to the pipeline

# run inference on the test dataset

y_pred = [] # list to store the predicted sentiment labels

for output in tqdm(

# iterate through the 'text' column of the test dataset

pipe(KeyDataset(data["test"], "text")), total=len(data["test"])

):

# extract the negative sentiment score

negative_score = output[0]["score"]

# extract the positive sentiment score (assuming labels are ordered: negative, neutral, positive)

positive_score = output[2]["score"]

# predict the sentiment based on the highest score (0 for negative, 1 for positive)

assignment = np.argmax([negative_score, positive_score])

# add the predicted label to the list

y_pred.append(assignment)

from sklearn.metrics import classification_report

def evaluate_performance(y_true, y_pred):

'''Create and print the classification report comparing true and predicted labels'''

performance = classification_report(

y_true, y_pred, target_names=["Negative Review", "Positive Review"]

)

print(performance)

# evaluate the performance of the sentiment analysis model on the test set

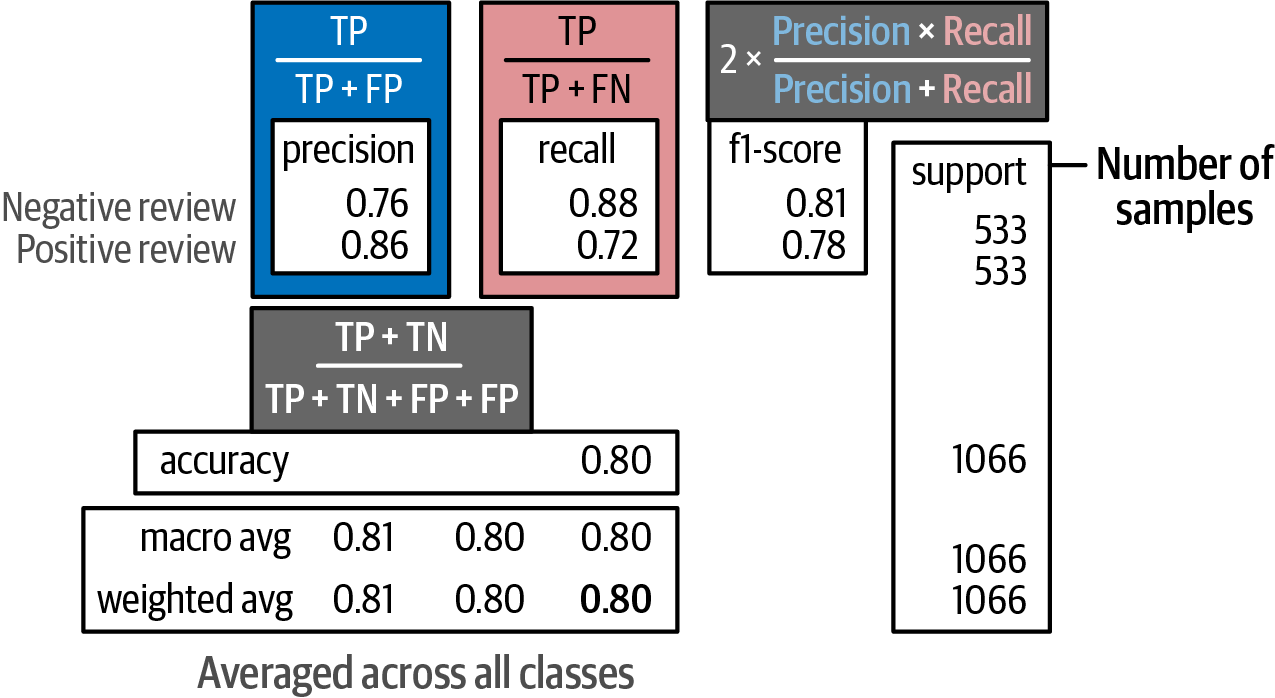

evaluate_performance(data["test"]["label"], y_pred) # compare the true labels with the predicted labels precision recall f1-score support

Negative Review 0.76 0.88 0.81 533

Positive Review 0.86 0.72 0.78 533

accuracy 0.80 1066

macro avg 0.81 0.80 0.80 1066

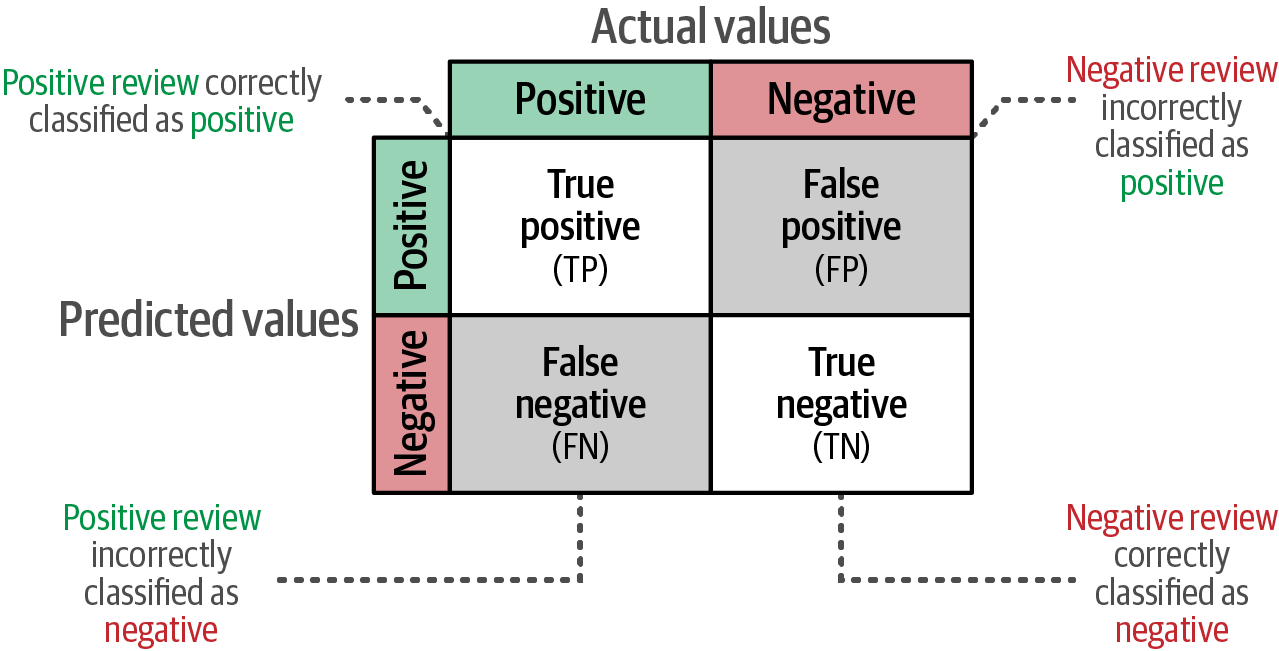

weighted avg 0.81 0.80 0.80 1066The above generated classification report shows four such methods: precision, recall, accuracy, and the F1 score.

-

Precision measures how many of the items found are relevant, which indicates the accuracy of the relevant results.

-

Recall refers to how many relevant classes were found, which indicates its ability to find all relevant results.

-

Accuracy refers to how many correct predictions the model makes out of all predictions, which indicates the overall correctness of the model.

-

The F1 score balances both precision and recall to create a model’s overall performance.

A confusion matrix visualizes the performance of a classification model by showing the counts of four prediction outcomes: True Positives, True Negatives, False Positives, and False Negatives, which serves as the basis for calculating various metrics to evaluate the model’s quality.

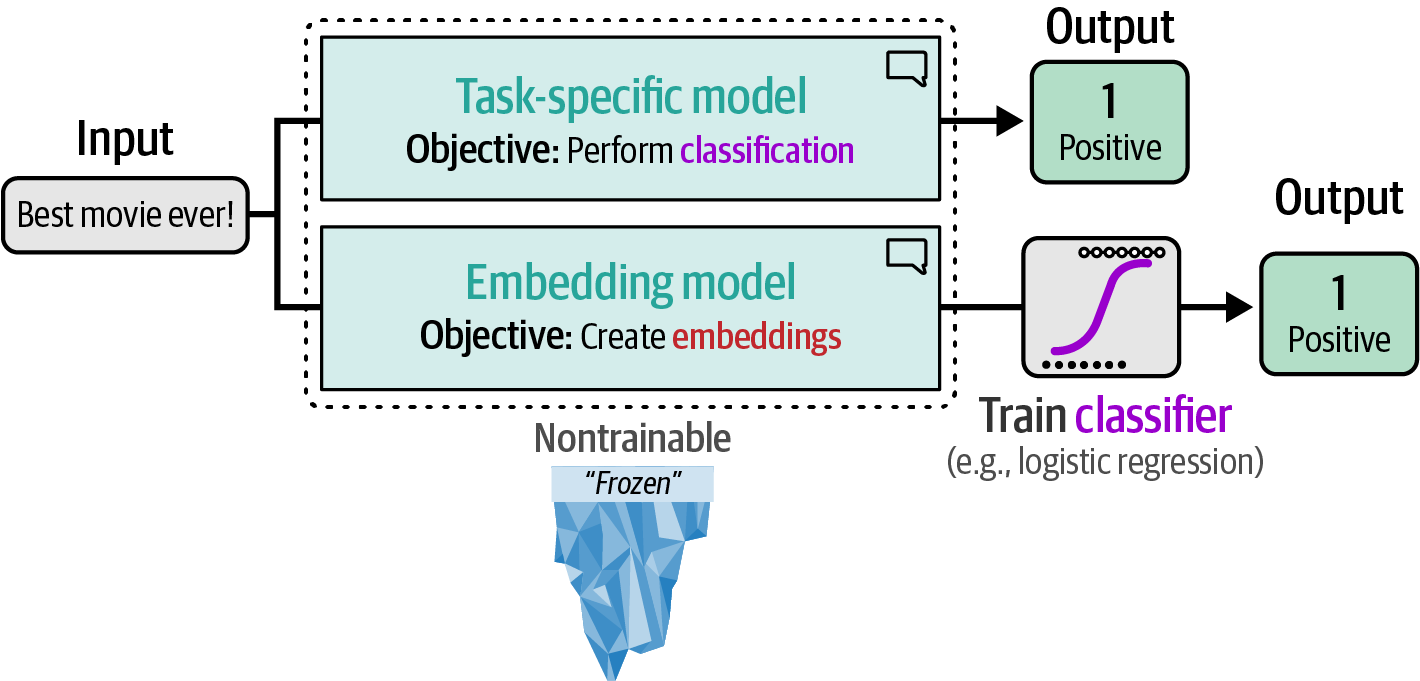

4.1.2. Embedding model

-

Without fine-tuning a representation model, a general-purpose embedding model can generate features that are then fed into a separate, trainable classifier (like logistic regression, which can be trained efficiently on a CPU), creating a two-step classification approach.

-

A major benefit of this separation is avoiding the costly fine-tuning of the embedding model, instead, a classifier, such as logistic regression, can be trained efficiently on the CPU.

from datasets import load_dataset # load the well-known 'rotten_tomatoes' dataset for sentiment analysis data = load_dataset("rotten_tomatoes") # load the SentenceTransformer model for generating text embeddings from sentence_transformers import SentenceTransformer model = SentenceTransformer("sentence-transformers/all-mpnet-base-v2") # convert the text data from the train and test splits into embeddings train_embeddings = model.encode(data["train"]["text"], show_progress_bar=True) test_embeddings = model.encode(data["test"]["text"], show_progress_bar=True) from sklearn.linear_model import LogisticRegression # train a logistic regression classifier on the generated training embeddings # initialize the logistic regression model with a random state for reproducibility clf = LogisticRegression(random_state=42) # train the classifier using the training embeddings and their corresponding labels clf.fit(train_embeddings, data["train"]["label"]) from sklearn.metrics import classification_report def evaluate_performance(y_true, y_pred): '''Create and print the classification report comparing true and predicted labels''' performance = classification_report( y_true, y_pred, target_names=["Negative Review", "Positive Review"] ) print(performance) # predict the sentiment labels for the test embeddings using the trained classifier y_pred = clf.predict(test_embeddings) # evaluate the performance of the classifier on the test set evaluate_performance(data["test"]["label"], y_pred)precision recall f1-score support Negative Review 0.85 0.86 0.85 533 Positive Review 0.86 0.85 0.85 533 accuracy 0.85 1066 macro avg 0.85 0.85 0.85 1066 weighted avg 0.85 0.85 0.85 1066 -

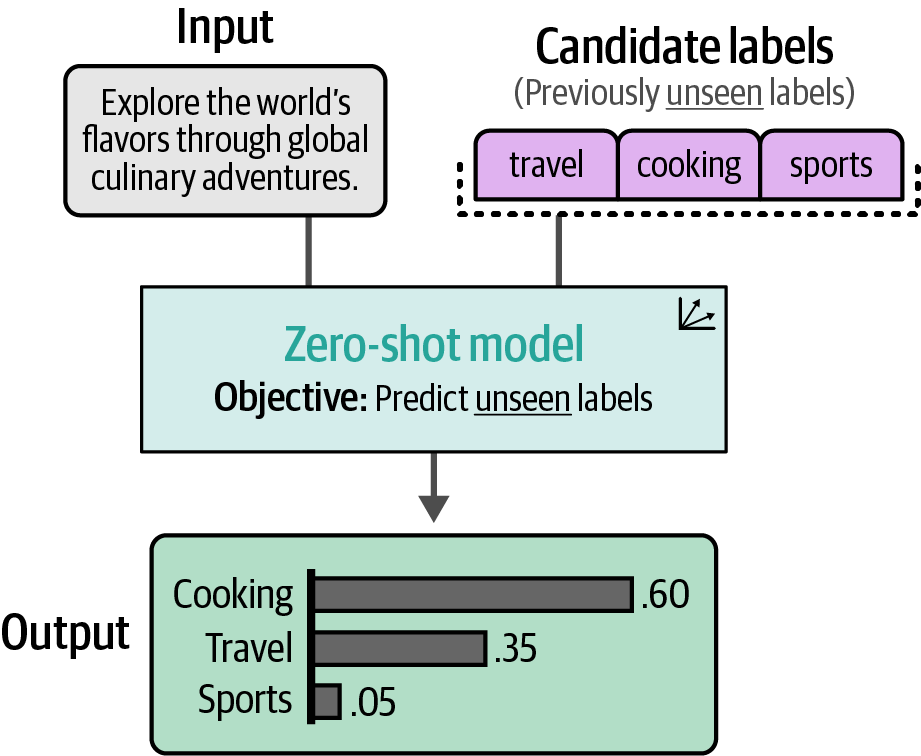

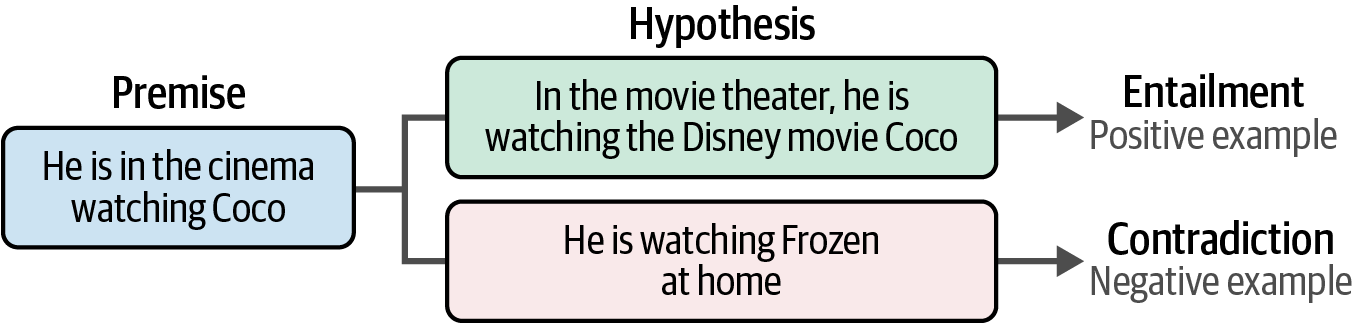

Zero-shot classification can be used on unlabeled data by leveraging the model’s pre-existing knowledge to predict labels based solely on their definitions.

-

In zero-shot classification, without any labeled examples, the model determines the relationship between input text and predefined candidate labels.

Figure 37. In zero-shot classification, we have no labeled data, only the labels them‐ selves. The zero-shot model decides how the input is related to the candidate labels.

Figure 37. In zero-shot classification, we have no labeled data, only the labels them‐ selves. The zero-shot model decides how the input is related to the candidate labels. -

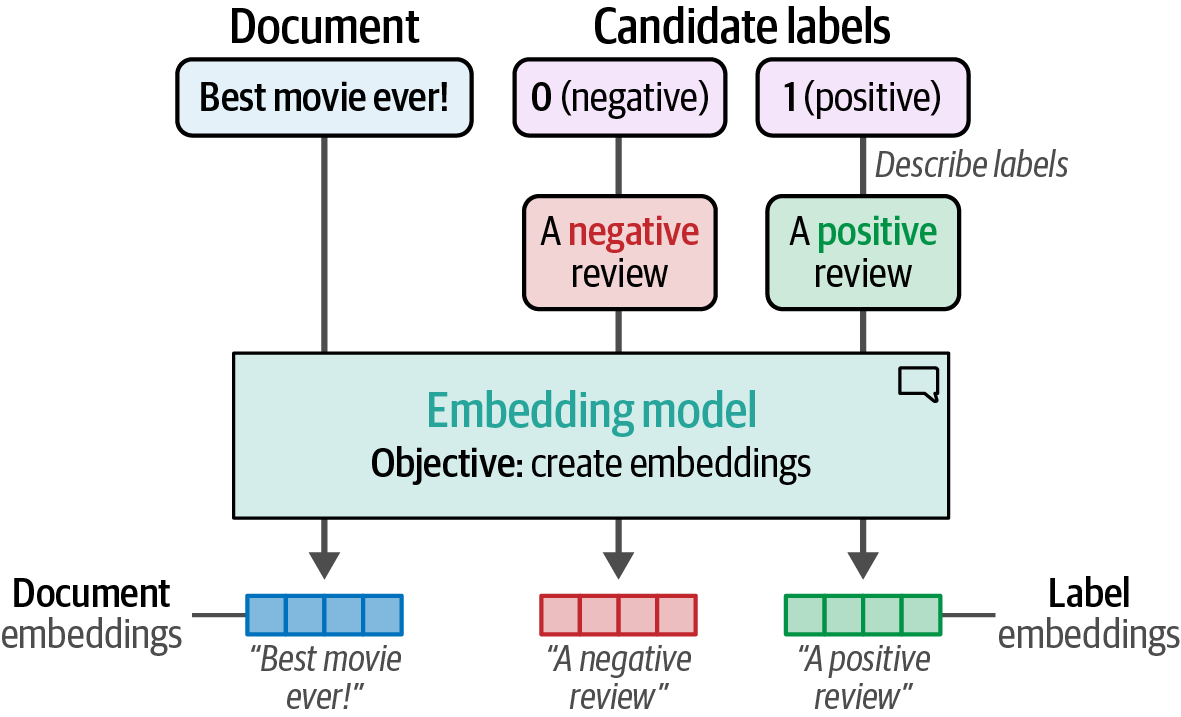

Zero-shot classification generates target labels without labeled data by describing and embedding labels (e.g., "negative movie review") and documents.

Figure 38. To embed the labels, we first need to give them a description, such as “a negative movie review.” This can then be embedded through sentence-transformers.

Figure 38. To embed the labels, we first need to give them a description, such as “a negative movie review.” This can then be embedded through sentence-transformers. -

To assign labels to documents in zero-shot classification, cosine similarity, representing the cosine of the angle between the embedding vectors, can be applied to document-label embedding pairs.

from datasets import load_dataset # load the well-known 'rotten_tomatoes' dataset for sentiment analysis data = load_dataset('rotten_tomatoes') from sentence_transformers import SentenceTransformer # load model model = SentenceTransformer('sentence-transformers/all-mpnet-base-v2') # convert text to embeddings train_embeddings = model.encode(data['train']['text'], show_progress_bar=True) test_embeddings = model.encode(data['test']['text'], show_progress_bar=True) # create embeddings for our labels label_embeddings = model.encode(['A negative review', 'A positive review']) import numpy as np from sklearn.metrics.pairwise import cosine_similarity # find the best matching label for each document using cosine similarity sim_matrix = cosine_similarity(test_embeddings, label_embeddings) # get the index of the label with the highest similarity score for each test embedding y_pred = np.argmax(sim_matrix, axis=1) from sklearn.metrics import classification_report def evaluate_performance(y_true, y_pred): '''Create and print the classification report comparing true and predicted labels''' performance = classification_report( y_true, y_pred, target_names=['Negative Review', 'Positive Review'] ) print(performance) evaluate_performance(data['test']['label'], y_pred)precision recall f1-score support Negative Review 0.78 0.77 0.78 533 Positive Review 0.77 0.79 0.78 533 accuracy 0.78 1066 macro avg 0.78 0.78 0.78 1066 weighted avg 0.78 0.78 0.78 1066From Wikipedia, the free encyclopedia

In data analysis, cosine similarity is a measure of similarity between two non-zero vectors defined in an inner product space. Cosine similarity is the cosine of the angle between the vectors; that is, it is the dot product of the vectors divided by the product of their lengths. It follows that the cosine similarity does not depend on the magnitudes of the vectors, but only on their angle. The cosine similarity always belongs to the interval

[−1, 1].import numpy as np # import the NumPy library for numerical operations A = np.array([1, 2, 3]) # create a NumPy array named A B = np.array([4, 5, 6]) # create a NumPy array named B # calculate the cosine similarity using the formula: (A dot B) / (||A|| * ||B||) dot_product = np.dot(A, B) # calculate the dot product of A and B norm_A = np.linalg.norm(A) # calculate the Euclidean norm (magnitude) of A norm_B = np.linalg.norm(B) # calculate the Euclidean norm (magnitude) of B cosine_similarity = dot_product / (norm_A * norm_B) # calculate the cosine similarity print(cosine_similarity) # 0.9746318461970762

-

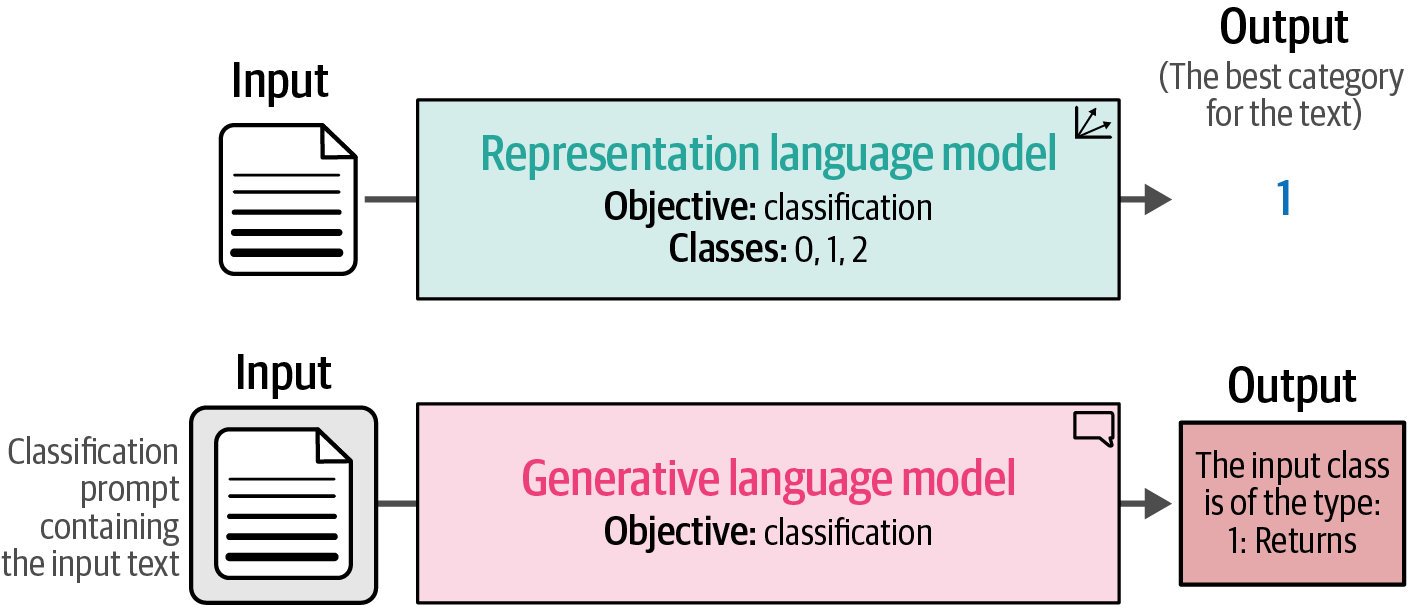

4.2. Generative Models

-

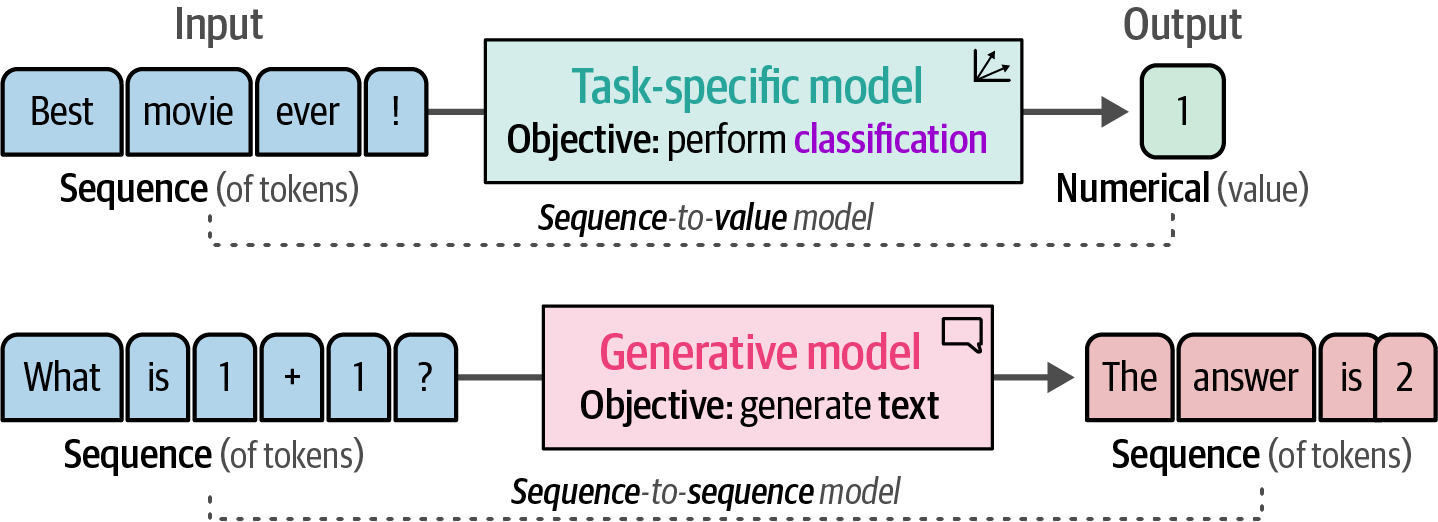

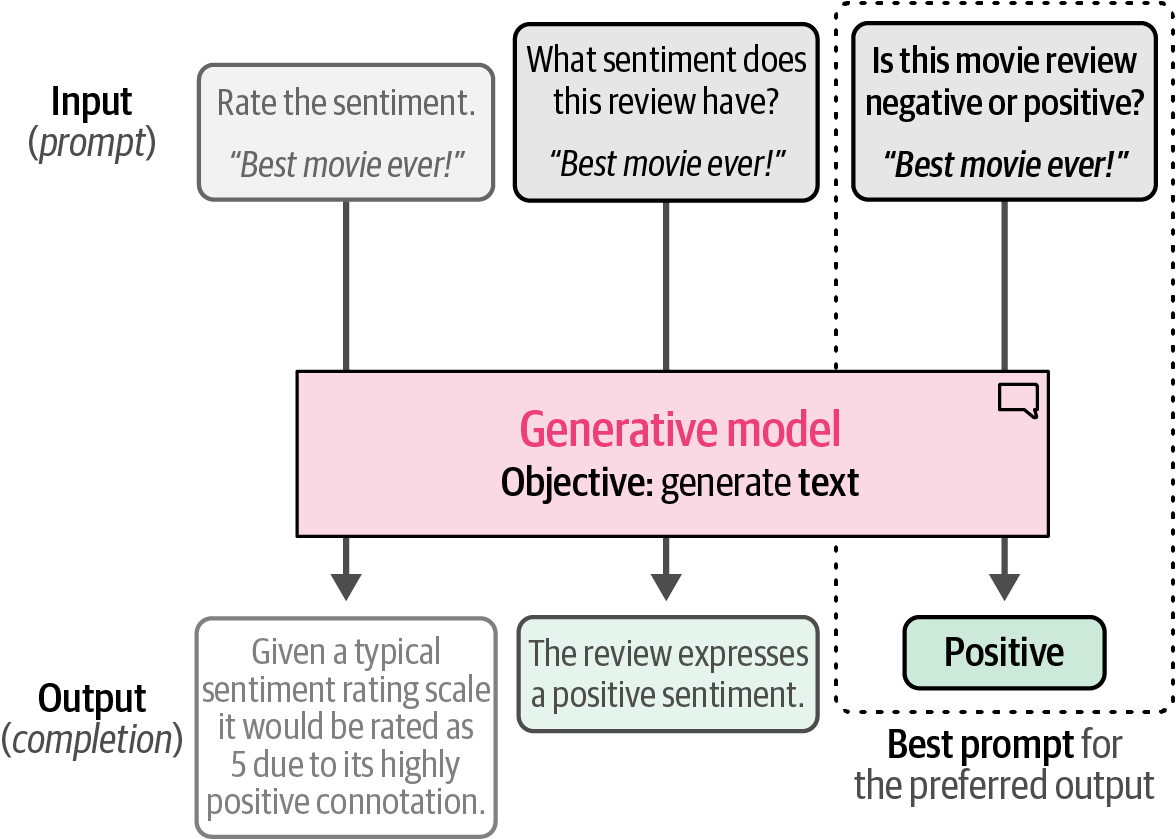

Text classification with generative language models (like GPT) involves feeding input text to the model and having it generate text as output, in contrast to task-specific models that directly output a class label.

Figure 39. A task-specific model generates numerical values from sequences of tokens while a generative model generates sequences of tokens from sequences of tokens.

Figure 39. A task-specific model generates numerical values from sequences of tokens while a generative model generates sequences of tokens from sequences of tokens. -

Generative models are generally trained on a wide variety of tasks and usually don’t inherently know how to handle specific tasks like classifying a movie review without explicit instructions.

-

Prompt engineering is the skill of crafting effective instructions, or prompts, to guide generative AI models towards producing desired and high-quality outputs for specific tasks, like text classification, which often involves iterative refinement of these prompts based on the model’s responses.

Figure 40. Prompt engineering allows prompts to be updated to improve the output generated by the model.

Figure 40. Prompt engineering allows prompts to be updated to improve the output generated by the model.

4.2.1. Text-to-Text Transfer Transformer

-

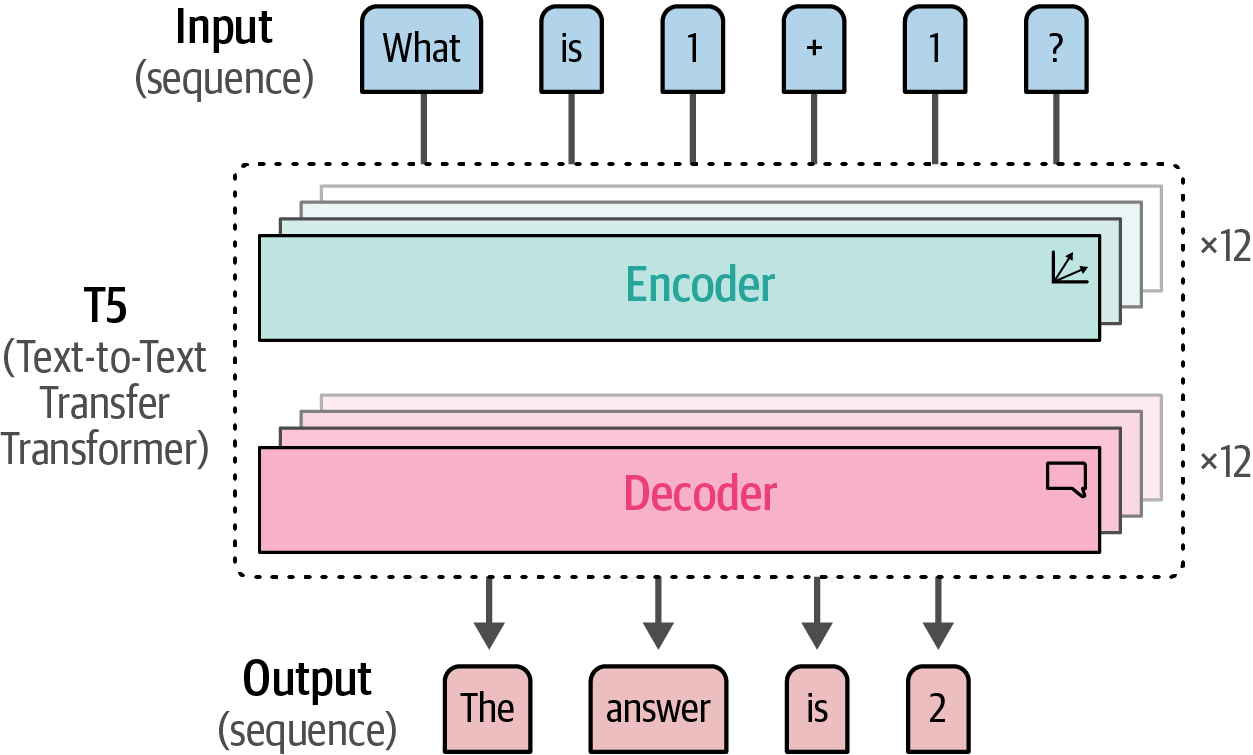

Text-to-Text Transfer Transformer or T5, like the original Transformer, is a generative encoder-decoder sequence-to-sequence model, contrasting with encoder-only BERT and decoder-only GPT.

Figure 41. The T5 architecture is similar to the original Transformer model, a decoder- encoder architecture.

Figure 41. The T5 architecture is similar to the original Transformer model, a decoder- encoder architecture.-

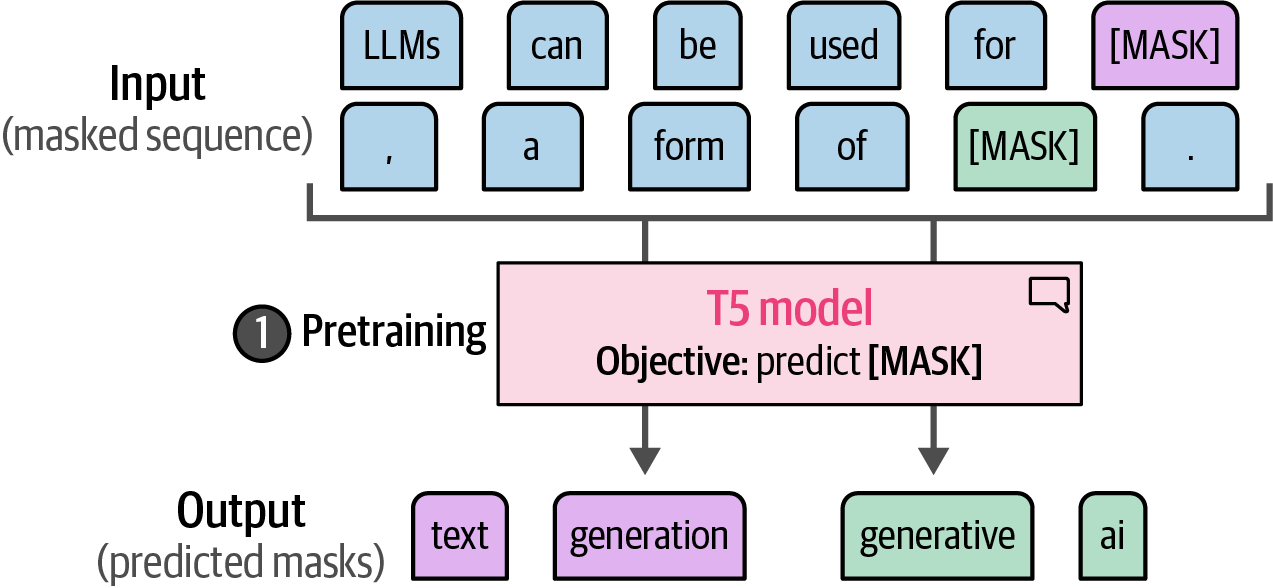

In the first step of training, namely pretraining, encoder-decoder models like T5 are initially trained with a masked language modeling objective that masks sets of tokens (or token spans), differing from BERT’s individual token masking approach.

Figure 42. In the first step of training, namely pretraining, the T5 model needs to predict masks that could contain multiple tokens.

Figure 42. In the first step of training, namely pretraining, the T5 model needs to predict masks that could contain multiple tokens. -

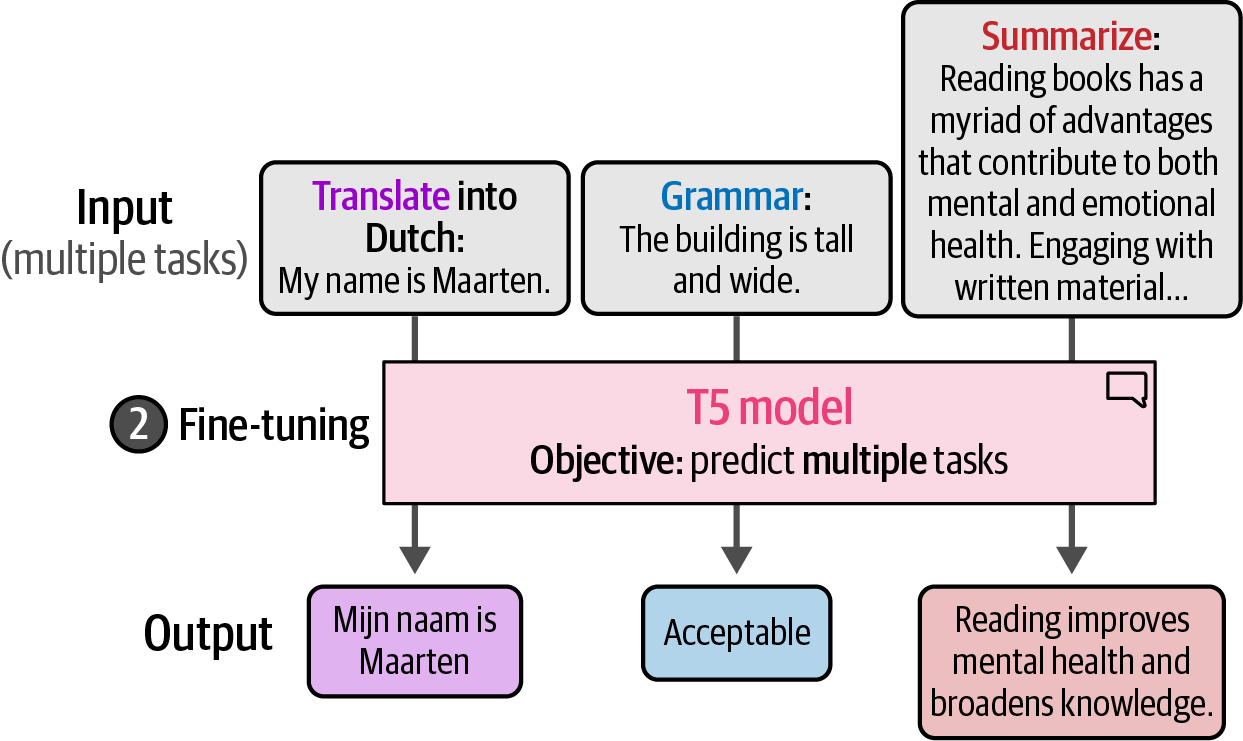

In the second step of training, namely fine-tuning the base model, instead of fine-tuning the model for one specific task, each task is converted to a sequence-to-sequence task and trained simultaneously.

Figure 43. By converting specific tasks to textual instructions, the T5 model can be trained on a variety of tasks during fine-tuning.

Figure 43. By converting specific tasks to textual instructions, the T5 model can be trained on a variety of tasks during fine-tuning.

-

from datasets import load_dataset

# load the well-known 'rotten_tomatoes' dataset for sentiment analysis

data = load_dataset('rotten_tomatoes')

import torch

# determine the device to use for computation (GPU if available, otherwise CPU)

dev = 'cuda' if torch.cuda.is_available() else 'cpu'

from transformers import pipeline

# specify the path to the pre-trained FLAN-T5-small model for text-to-text generation

model_path = 'google/flan-t5-small'

# load the pre-trained text-to-text generation model into a pipeline for easy inference

pipe = pipeline(

'text2text-generation',

model=model_path,

device=dev,

)

# prepare our data by creating a prompt and combining it with the text

prompt = 'Is the following sentence positive or negative? '

# apply the prompt to each example in the dataset's 'text' column to create a new 't5' column

data = data.map(lambda example: {'t5': prompt + example['text']})

# data # uncomment to inspect the modified dataset

from tqdm import tqdm # for progress bar during inference

from transformers.pipelines.pt_utils import (

KeyDataset,

) # utility to feed data to the pipeline

# Run inference

y_pred = []

# iterate through the test dataset using the pipeline for text generation

for output in tqdm(

pipe(KeyDataset(data['test'], 't5')), total=len(data['test'])

):

# extract the generated text from the pipeline's output

text = output[0]['generated_text']

# classify the generated text as 0 (negative) if it equals 'negative', otherwise 1 (positive)

y_pred.append(0 if text == 'negative' else 1)

from sklearn.metrics import classification_report

def evaluate_performance(y_true, y_pred):

'''Create and print the classification report comparing true and predicted labels'''

performance = classification_report(

y_true, y_pred, target_names=['Negative Review', 'Positive Review']

)

print(performance)

# evaluate the performance of the model by comparing the true labels with the predicted labels

evaluate_performance(data['test']['label'], y_pred) precision recall f1-score support

Negative Review 0.83 0.85 0.84 533

Positive Review 0.85 0.83 0.84 533

accuracy 0.84 1066

macro avg 0.84 0.84 0.84 1066

weighted avg 0.84 0.84 0.84 10664.2.2. ChatGPT for Classification

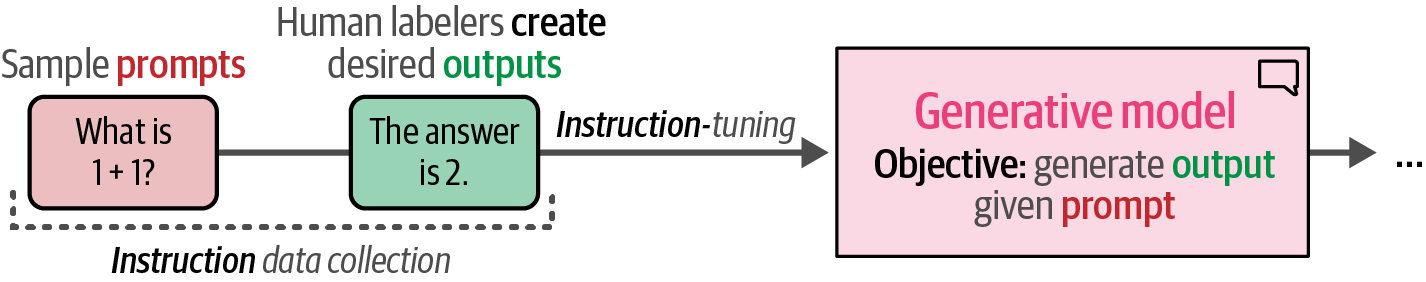

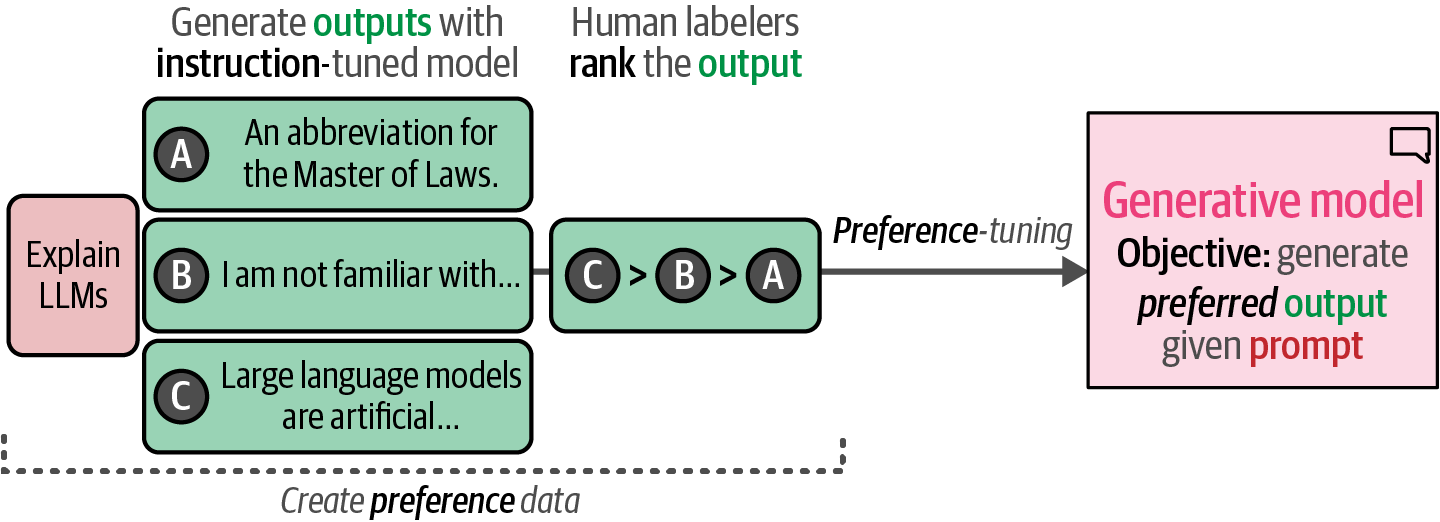

OpenAI shared an overview of the training procedure that involved an important component, namely preference tuning.

-

OpenAI first manually created the desired output to an input prompt (instruction data) and used that data to create a first variant of its model.

Figure 44. Manually labeled data consisting of an instruction (prompt) and output was used to perform fine-tuning (instruction-tuning).

Figure 44. Manually labeled data consisting of an instruction (prompt) and output was used to perform fine-tuning (instruction-tuning). -

OpenAI used the resulting model to generate multiple outputs that were manually ranked from best to worst.

Figure 45. Manually ranked preference data was used to generate the final model, ChatGPT.

Figure 45. Manually ranked preference data was used to generate the final model, ChatGPT.

import openai

# create client for interacting with OpenAI API

client = openai.OpenAI(api_key='YOUR_KEY_HERE')

def chatgpt_generation(prompt, document, model='gpt-3.5-turbo-0125'):

'''Generate an output based on a prompt and an input document using ChatGPT.'''

# define the message structure for the OpenAI API

messages = [

{'role': 'system', 'content': 'You are a helpful assistant.'},

{'role': 'user', 'content': prompt.replace('[DOCUMENT]', document)},

]

# call the OpenAI Chat Completions API to get a response

chat_completion = client.chat.completions.create(

messages=messages, model=model, temperature=0 # temperature=0 for deterministic output

)

# return the content of the first choice's message

return chat_completion.choices[0].message.content

# define a prompt template as a base for sentiment classification

prompt = '''Predict whether the following document is a positive or negative

movie review:

[DOCUMENT]

If it is positive return 1 and if it is negative return 0. Do not give any

other answers.

'''

# predict the target for a single document using GPT

document = 'unpretentious , charming , quirky , original'

chatgpt_generation(prompt, document)

from datasets import load_dataset

# load the well-known 'rotten_tomatoes' dataset for sentiment analysis

data = load_dataset('rotten_tomatoes')

from tqdm import tqdm

# generate predictions for all documents in the test set

predictions = [

chatgpt_generation(prompt, doc) for doc in tqdm(data['test']['text'])

]

# convert the string predictions ('0' or '1') to integers

y_pred = [int(pred) for pred in predictions]

from sklearn.metrics import classification_report

def evaluate_performance(y_true, y_pred):

'''Create and print the classification report comparing true and predicted labels'''

performance = classification_report(

y_true, y_pred, target_names=['Negative Review', 'Positive Review']

)

print(performance)

# evaluate the performance of ChatGPT on the test set

evaluate_performance(data['test']['label'], y_pred)5. Text Clustering and Topic Modeling

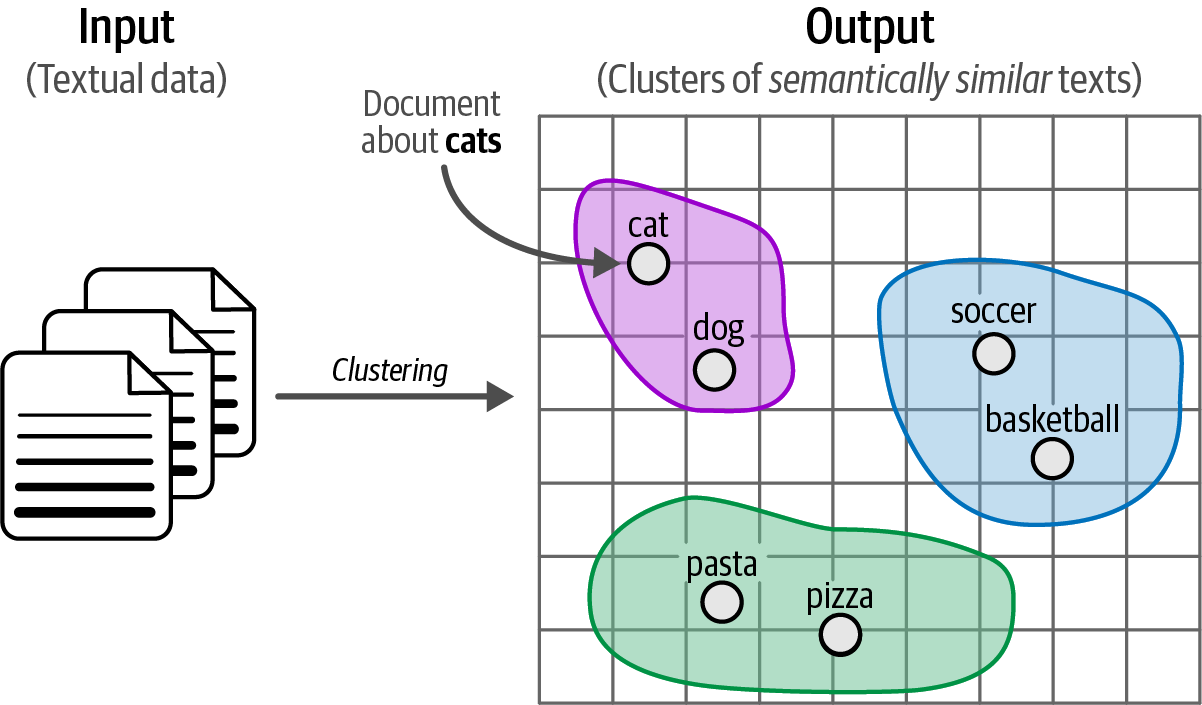

Although supervised techniques, such as classification, have reigned supreme over the last few years in the industry, the potential of unsupervised techniques such as text clustering cannot be understated.

-

Text clustering aims to group similar texts based on their semantic content, meaning, and relationships.

Figure 46. Clustering unstructured textual data.

Figure 46. Clustering unstructured textual data. -

Text clustering is also applied in topic modeling to uncover abstract topics within large textual datasets.

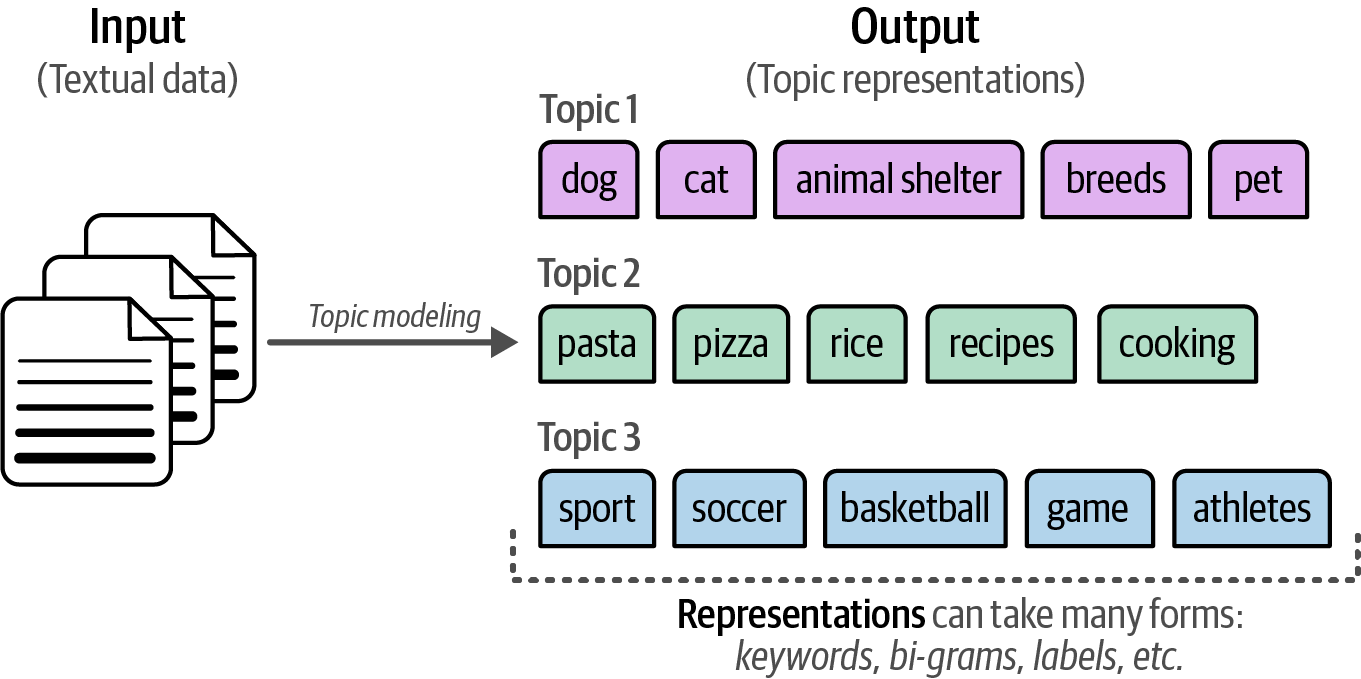

Figure 47. Topic modeling is a way to give meaning to clusters of textual documents.

Figure 47. Topic modeling is a way to give meaning to clusters of textual documents.

5.1. ArXiv’s Articles: Computation and Language

ArXiv is an open-access platform for scholarly articles, mostly in the fields of computer science, mathematics, and physics.

from datasets import load_dataset

# load the 'arxiv_nlp' dataset from Hugging Face Datasets library

dataset = load_dataset("maartengr/arxiv_nlp")["train"]

# extract metadata

abstracts = dataset["Abstracts"]

titles = dataset["Titles"]5.2. A Common Pipeline for Text Clustering

Text clustering enables the discovery of both known and unknown data patterns, providing an intuitive understanding of tasks like classification and their complexity, making it valuable beyond just exploratory data analysis.

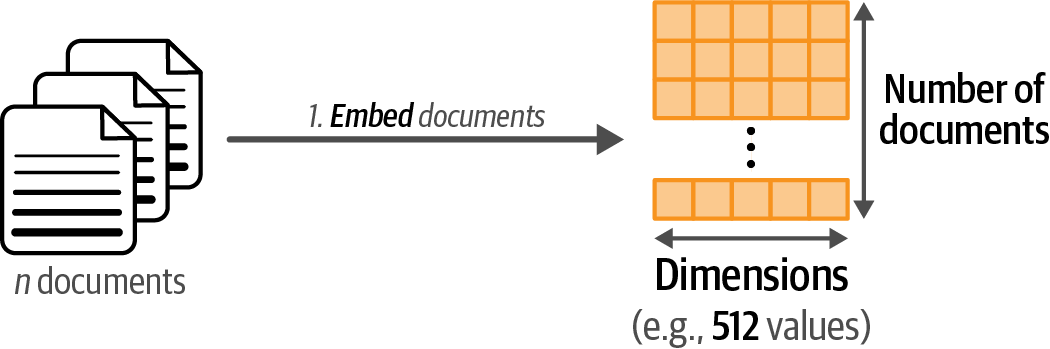

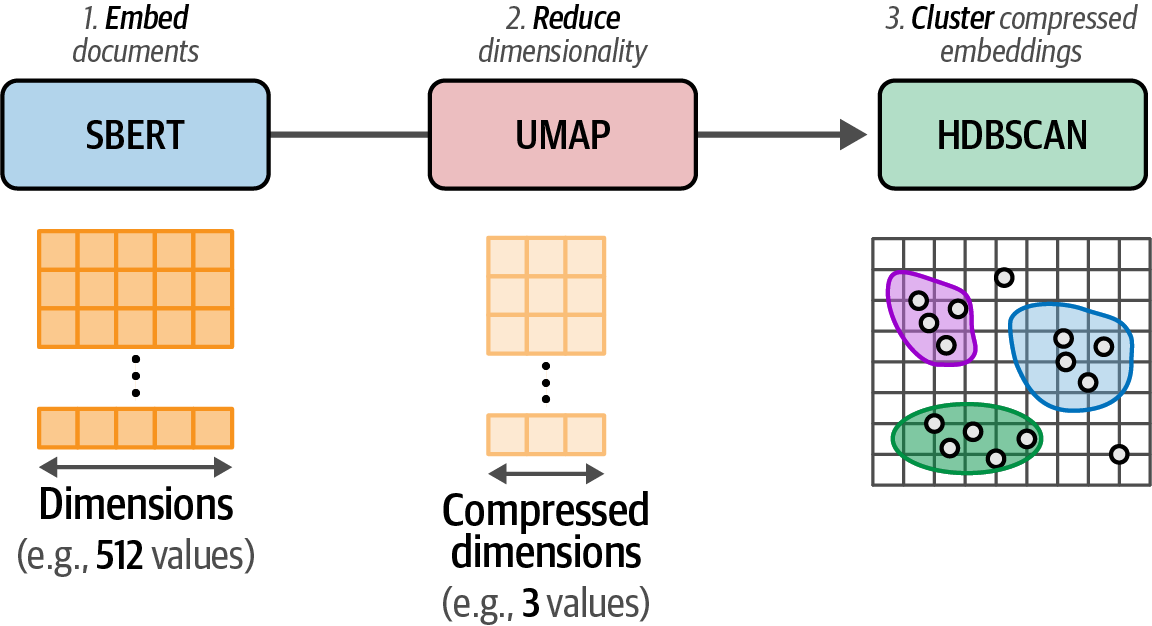

Although there are many methods for text clustering, from graph-based neural networks to centroid-based clustering techniques, a common pipeline that has gained popularity involves three steps and algorithms:

-

Convert the input documents to embeddings with an embedding model.

Figure 48. Step 1: We convert documents to embeddings using an embedding model.

Figure 48. Step 1: We convert documents to embeddings using an embedding model. -

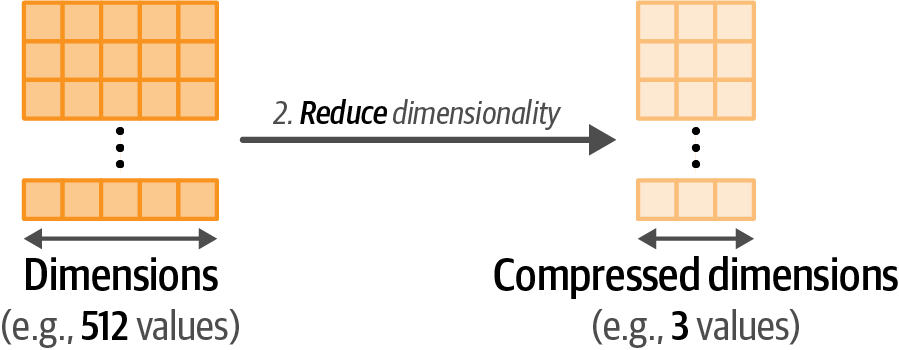

Reduce the dimensionality of embeddings with a dimensionality reduction model.

Figure 49. Step 2: The embeddings are reduced to a lower-dimensional space using dimensionality reduction.

Figure 49. Step 2: The embeddings are reduced to a lower-dimensional space using dimensionality reduction. -

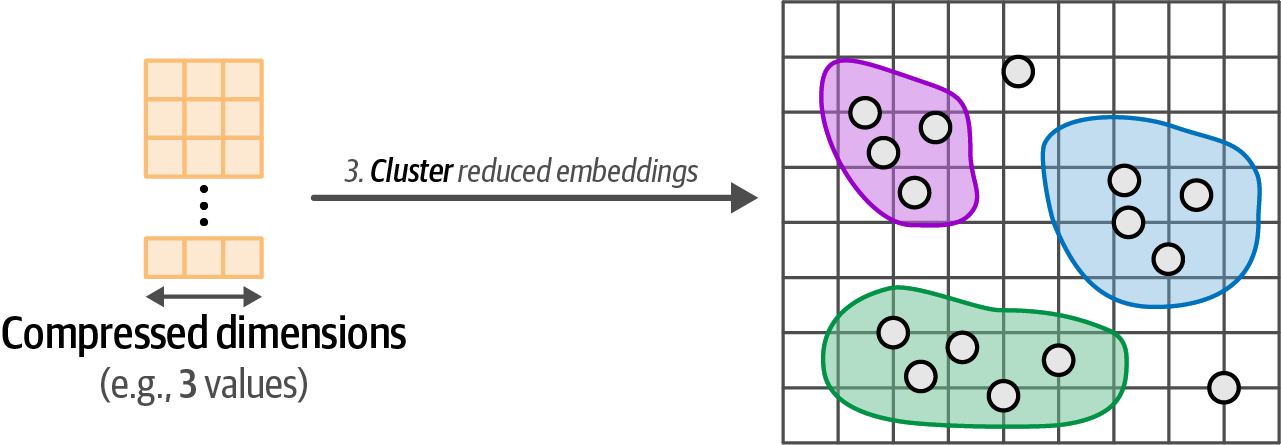

Find groups of semantically similar documents with a cluster model.

Figure 50. Step 3: We cluster the documents using the embeddings with reduced dimensionality.

Figure 50. Step 3: We cluster the documents using the embeddings with reduced dimensionality.

5.2.1. Embedding Documents

from sentence_transformers import SentenceTransformer

# create an embedding model using a pre-trained Sentence Transformer model

embedding_model = SentenceTransformer('thenlper/gte-small') (1)

# generate embeddings for each abstract in the 'abstracts' list

embeddings = embedding_model.encode(abstracts, show_progress_bar=True)

# check the dimensions (shape) of the resulting embeddings

embeddings.shape # (44949, 384) (2)

| 1 | The thenlper/gte-small model is a more recent model that outperforms the previous model on clustering tasks and due to its small size is even faster for inference. |

| 2 | The embeddings.shape of (44949, 384) shows that there are 44,949 abstract embeddings, each with a dimensionality of 384. |

5.2.2. Reducing the Dimensionality of Embeddings

-

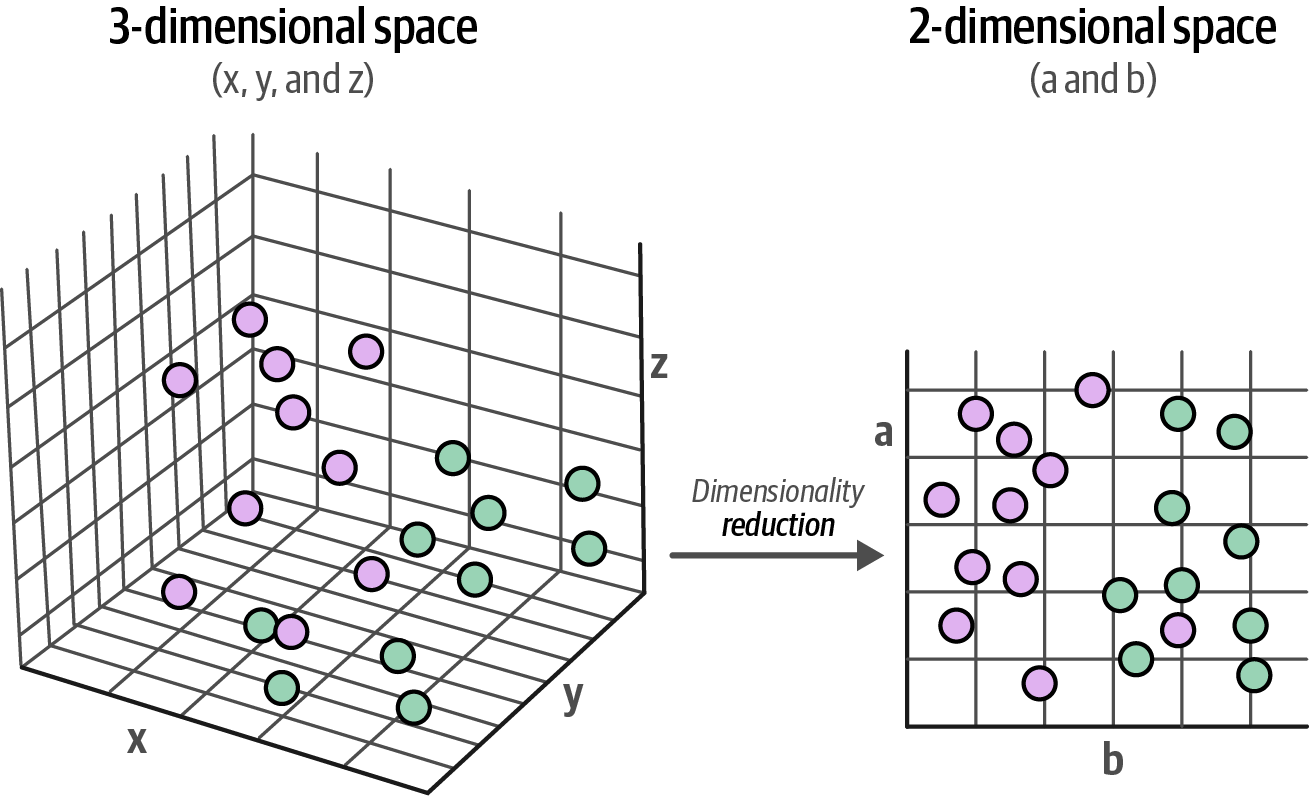

Reducing the dimensionality of embeddings is essential before clustering high-dimensional data to simplify the representation and enhance clustering effectiveness.

-

Dimensionality reduction is a compression technique and that the underlying algorithm is not arbitrarily removing dimensions.

Figure 51. Dimensionality reduction allows data in high-dimensional space to be compressed to a lower-dimensional representation.

Figure 51. Dimensionality reduction allows data in high-dimensional space to be compressed to a lower-dimensional representation. -

Well-known methods for dimensionality reduction are Principal Component Analysis (PCA) and Uniform Manifold Approximation and Projection (UMAP).

from umap import UMAP # reduce the input embeddings from 384 dimensions to 5 dimensions using UMAP umap_model = UMAP( # generally, values between 5 and 10 work well to capture high-dimensional global structures. n_components=5, # the number of dimensions to reduce to min_dist=0.0, # the effective minimum distance between embedded points metric='cosine', # the metric to use to compute distances in high dimensional space random_state=42, # for reproducibility of the embedding ) # fit and then transform the embeddings to the lower-dimensional space reduced_embeddings = umap_model.fit_transform(embeddings)

5.2.3. Cluster the Reduced Embeddings

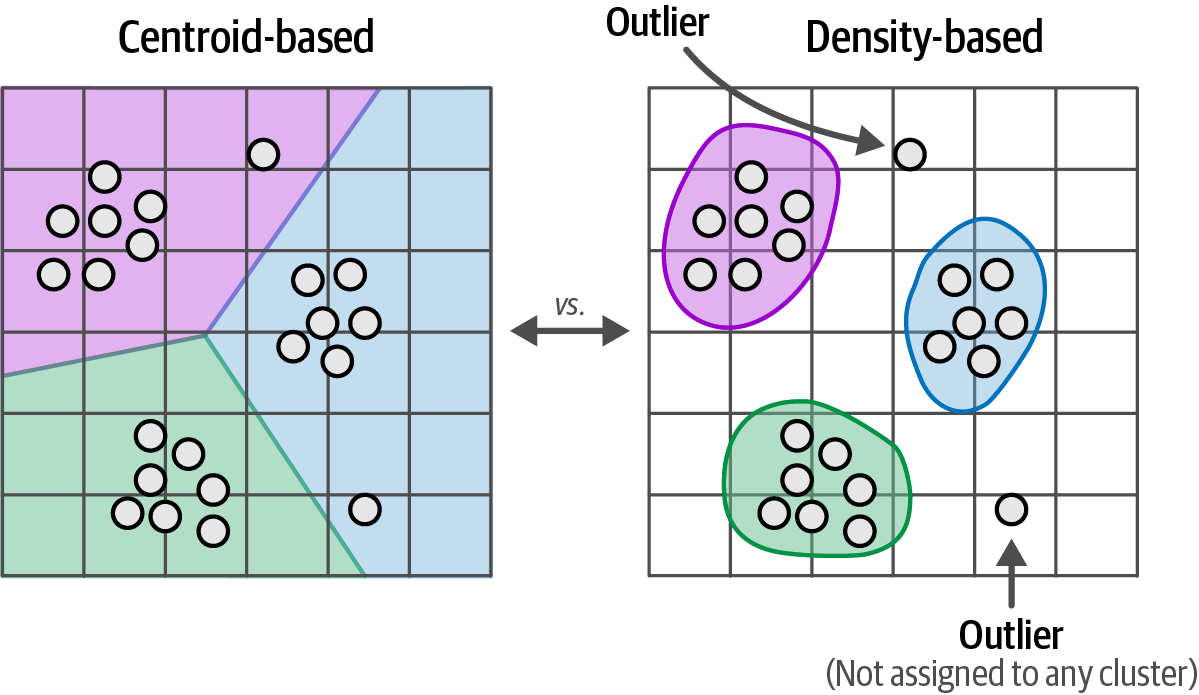

-

While k-means, a centroid-based algorithm needing a predefined number of clusters, is common, density-based algorithms are preferable when the number of clusters is unknown as they automatically determine the clusters and don’t require all data points to belong to one.

Figure 52. The clustering algorithm not only impacts how clusters are generated but also how they are viewed.

Figure 52. The clustering algorithm not only impacts how clusters are generated but also how they are viewed. -

A common density-based model is Hierarchical Density-Based Spatial Clustering of Applications with Noise (HDBSCAN).

from hdbscan import HDBSCAN # initialize and fit the HDBSCAN clustering model hdbscan_model = HDBSCAN( # the minimum number of samples in a group for it to be considered a cluster min_cluster_size=50, # the metric to use when calculating pairwise distances between data points metric='euclidean', # the method used to select clusters from the hierarchy ('eom' stands for Excess of Mass) cluster_selection_method='eom' ).fit(reduced_embeddings) # fit the HDBSCAN model to the reduced dimensionality embeddings # extract the cluster labels assigned to each data point (-1 indicates noise) clusters = hdbscan_model.labels_ # How many clusters did we generate? (excluding the noise cluster labeled -1) num_clusters = len(set(clusters)) - (1 if -1 in clusters else 0)

5.2.4. Inspecting the Clusters

-

To inspect each cluster manually and explore the assigned documents to get an understanding of its content.

import numpy as np # print first three documents in cluster 0 cluster = 0 for index in np.where(clusters == cluster)[0][:3]: print(abstracts[index][:300] + "... \n") -

To visualize clustering approximation results without manual review, further reduce document embeddings to two dimensions for plotting on an 2D plane.

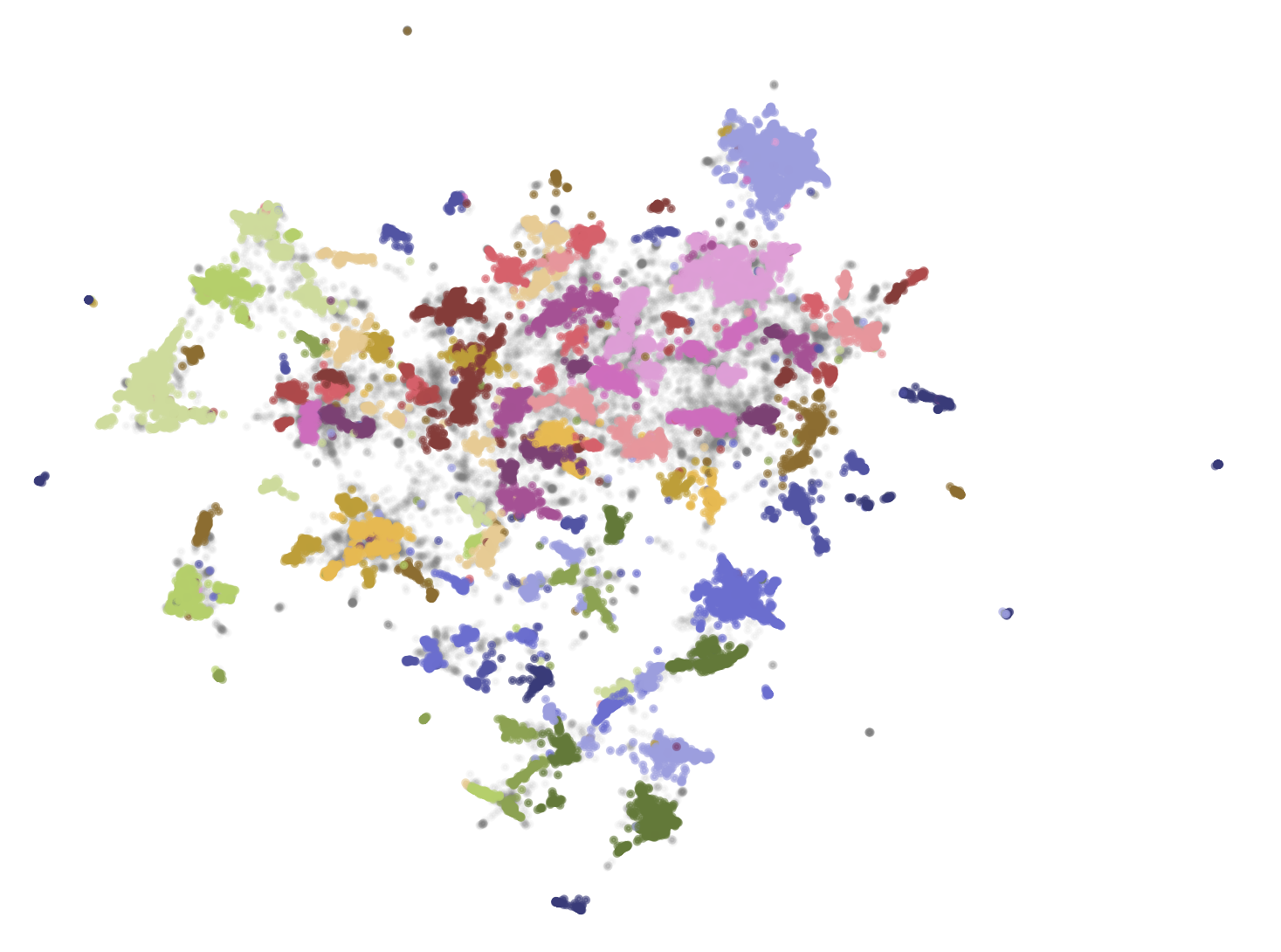

import pandas as pd from umap import UMAP import matplotlib.pyplot as plt # reduce 384-dimensional embeddings to two dimensions for easier visualization reduced_embeddings = UMAP( n_components=2, min_dist=0.0, metric="cosine", random_state=42, ).fit_transform(embeddings) # create dataframe df = pd.DataFrame(reduced_embeddings, columns=["x", "y"]) df["title"] = titles df["cluster"] = [str(c) for c in clusters] # select outliers (cluster -1) and non-outliers (clusters) to_plot = df.loc[df.cluster != "-1", :] outliers = df.loc[df.cluster == "-1", :] # plot outliers and non-outliers separately plt.scatter(outliers.x, outliers.y, alpha=0.05, s=2, c="grey", label="Outliers") plt.scatter( to_plot.x, to_plot.y, c=to_plot.cluster.astype(int), alpha=0.6, s=2, cmap="tab20b", label="Clusters", ) plt.axis("off") plt.legend() # Add a legend to distinguish outliers and clusters plt.title("Visualization of Clustered Abstracts") # Add a title for context plt.show() Figure 53. The generated clusters (colored) and outliers (gray) are represented as a 2D visualization.

Figure 53. The generated clusters (colored) and outliers (gray) are represented as a 2D visualization.

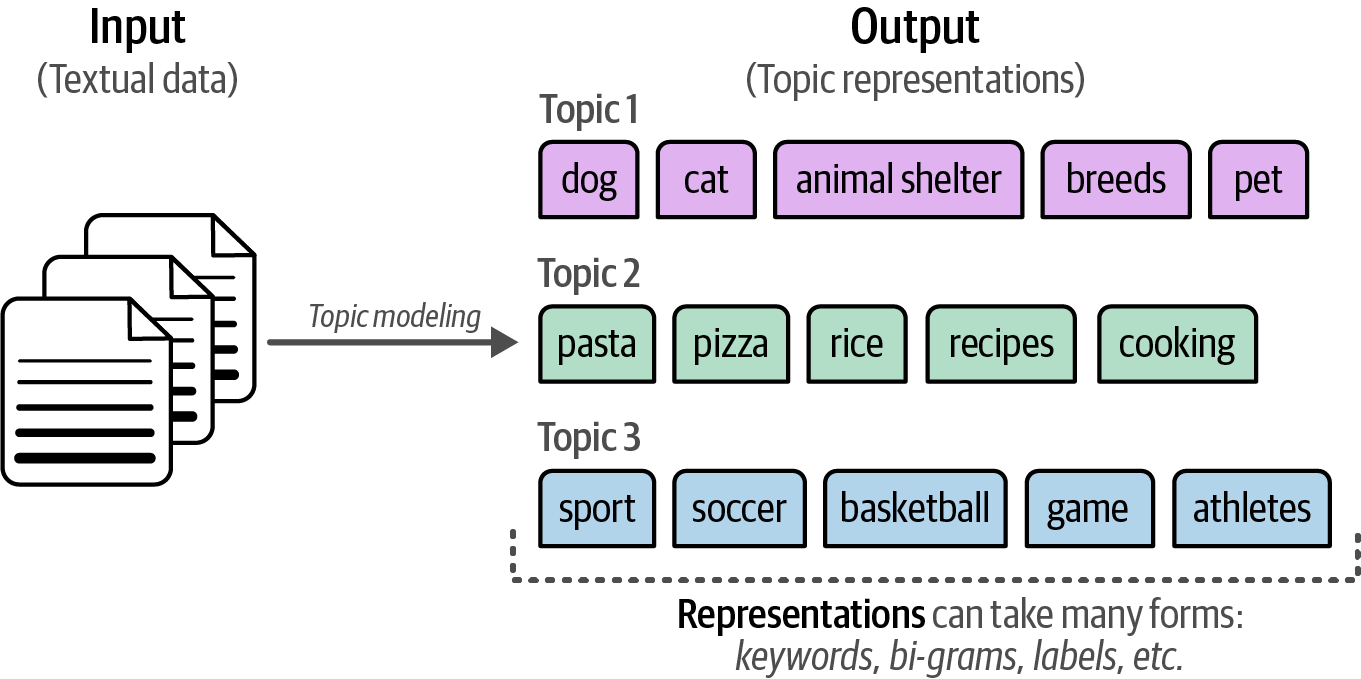

5.3. From Text Clustering to Topic Modeling

Text clustering is a powerful tool for finding structure among large collections of documents, whereas topic modeling is the process of discovering underlying themes or latent topics within a collection of textual data, which typically involves finding a set of keywords or phrases that best represent and capture the meaning of the topic.

5.3.1. BERTopic: A Modular Topic Modeling Framework

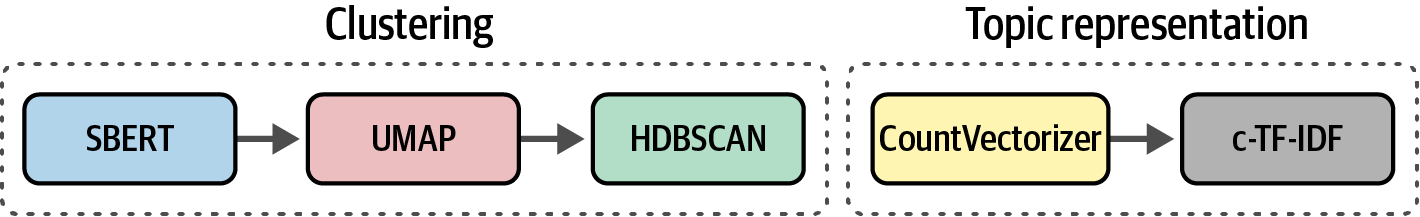

BERTopic is a topic modeling technique that leverages clusters of semantically similar texts to extract various types of topic representations.

-

First, similar to text clustering, it embeds documents, reduces their dimensionality, and then clusters these embeddings to group semantically similar texts. .The first part of BERTopic’s pipeline is to create clusters of semantically similar documents.

-

Second, it models word distributions using a bag-of-words approach, counting word frequencies within documents to help extract the most frequent terms.

The bag-of-words approach does exactly what its name implies: it counts the number of times each word appears in a document, which can then be used to extract the most frequent words within that document.

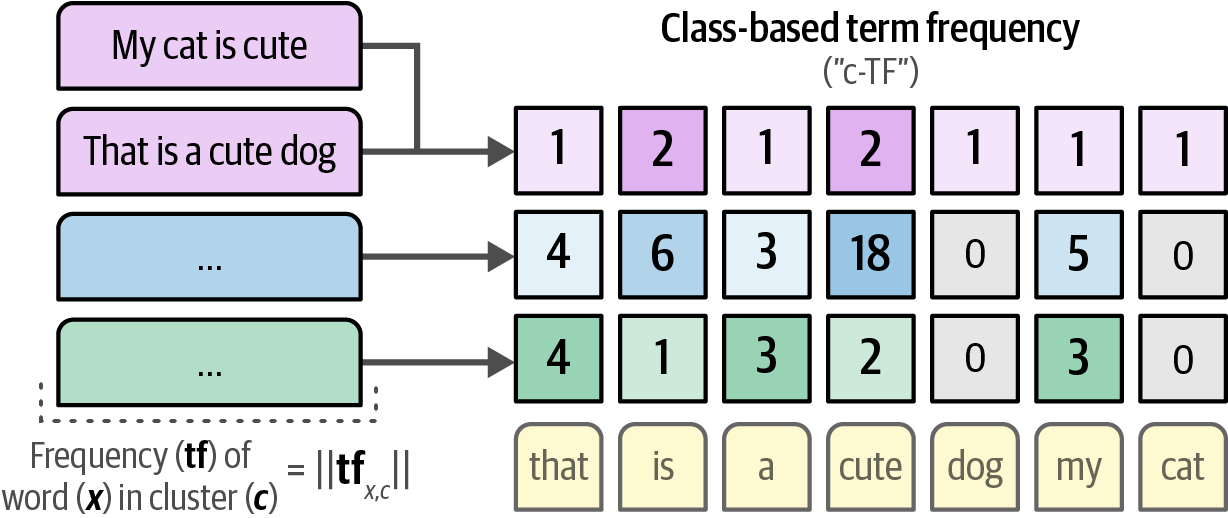

Figure 56. A bag-of-words counts the number of times each word appears inside a document.

Figure 56. A bag-of-words counts the number of times each word appears inside a document. Figure 57. Generating c-TF by counting the frequency of words per cluster instead of per document.

Figure 57. Generating c-TF by counting the frequency of words per cluster instead of per document.

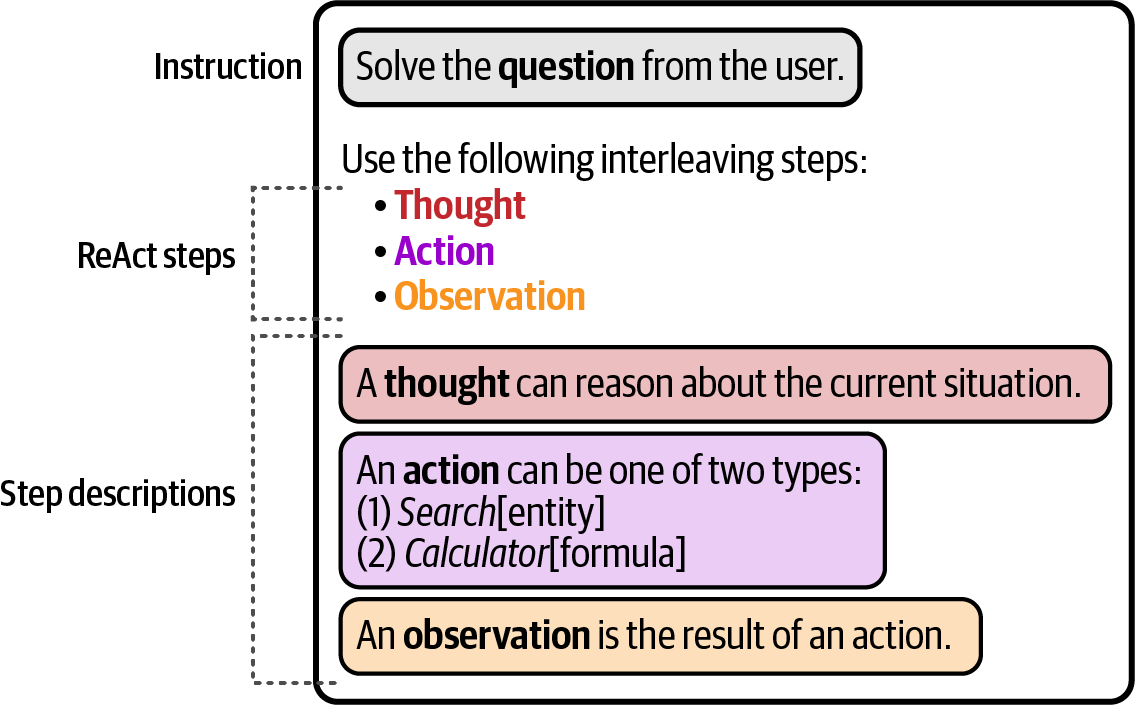

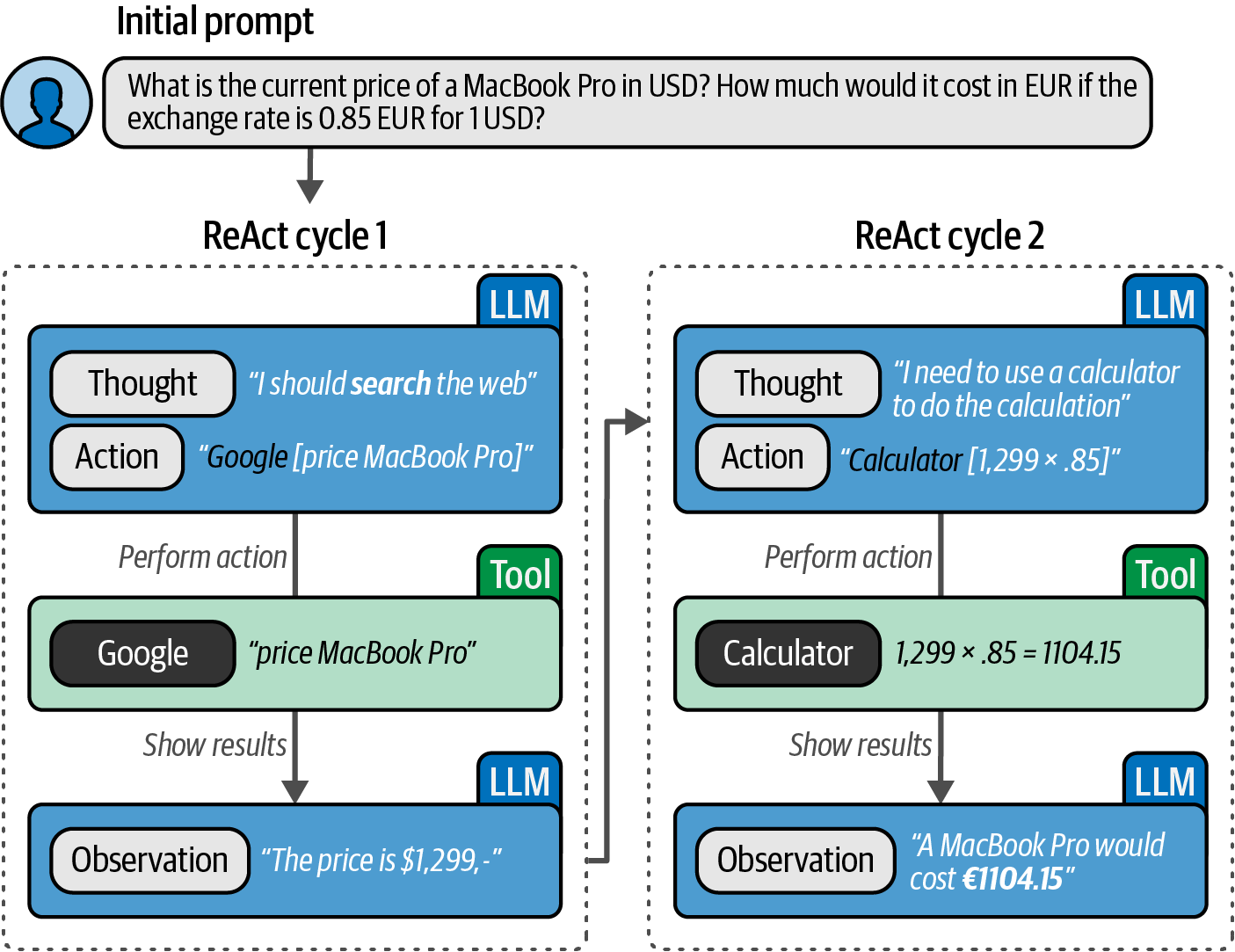

6. Prompt Engineering

Prompt engineering is the art and science of crafting effective prompts to guide large language models (LLMs) and other generative AI systems to produce desired and high-quality outputs. It involves understanding how these models interpret and respond to different phrasings, instructions, and contexts within a prompt to achieve specific goals, such as generating creative text, answering questions accurately, or performing tasks effectively.

6.1. Using Text Generation Models

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

# determine the device

dev = 'cuda' if torch.cuda.is_available() else 'cpu'

# load model and tokenizer

model_path = 'microsoft/Phi-4-mini-instruct'

model = AutoModelForCausalLM.from_pretrained(

model_path,

device_map=dev,

torch_dtype='auto',

trust_remote_code=True,

)

tokenizer = AutoTokenizer.from_pretrained(model_path)

# create a pipeline

pipe = pipeline(

'text-generation',

model=model,

tokenizer=tokenizer,

return_full_text=False,

max_new_tokens=500,

do_sample=False,

)

# prompt

messages = [{'role': 'user', 'content': 'Create a funny joke about chickens.'}]

# generate the output

output = pipe(messages)

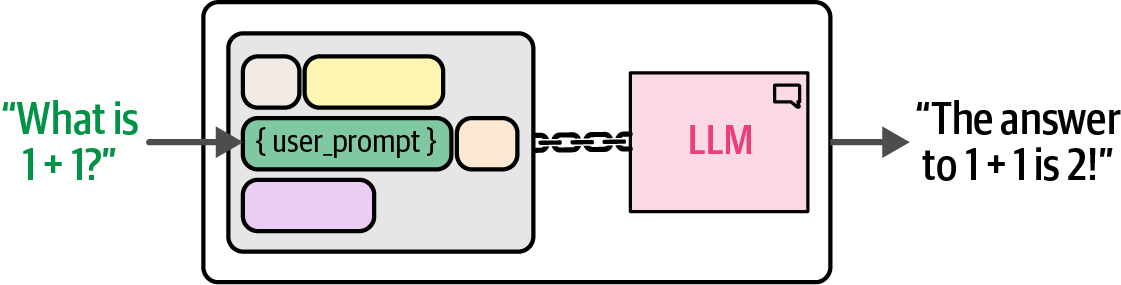

print(output[0]['generated_text'])6.1.1. Prompt Template

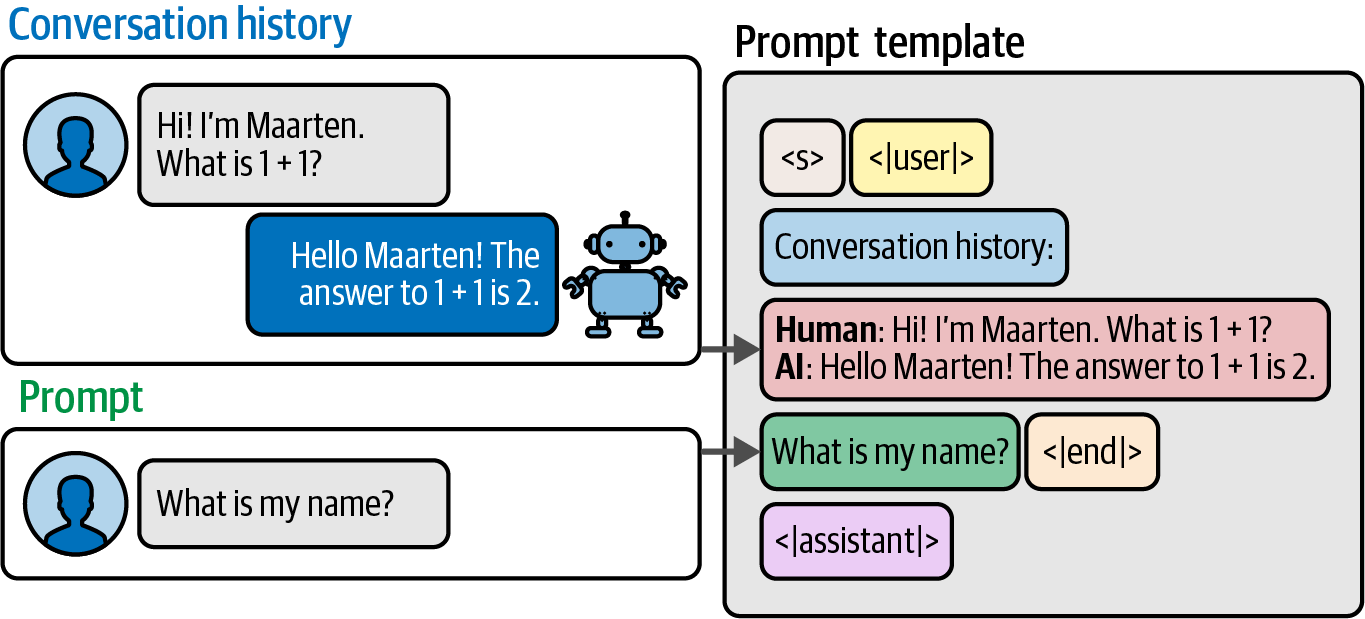

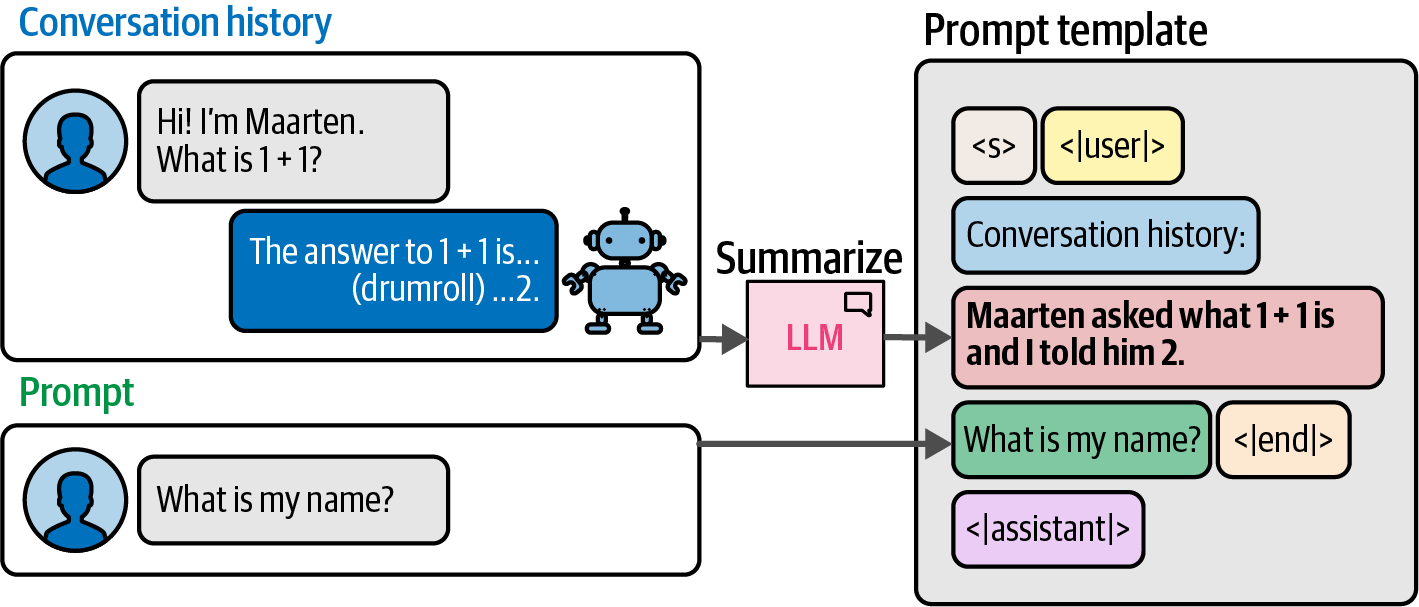

-

Under the hood,

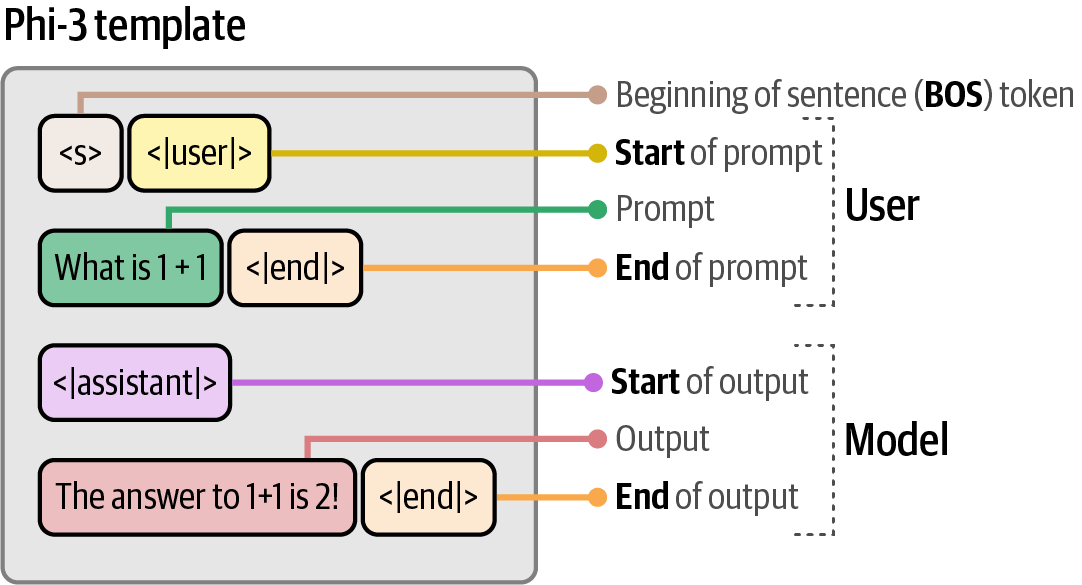

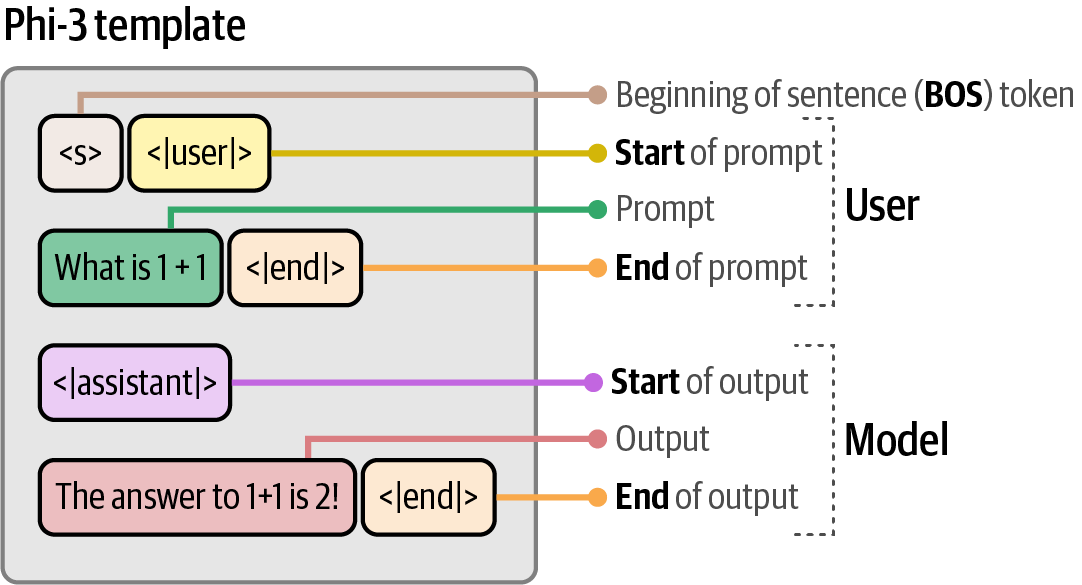

transformers.pipelinefirst converts the messages into a specific prompt template which was used during the training of the model.# apply prompt template prompt = pipe.tokenizer.apply_chat_template(messages, tokenize=False) print(prompt)<s><|user|> Create a funny joke about chickens.<|end|> <|assistant|> Figure 58. The template Phi-3 expects when interacting with the model.

Figure 58. The template Phi-3 expects when interacting with the model.

6.1.2. Controlling Model Output

-

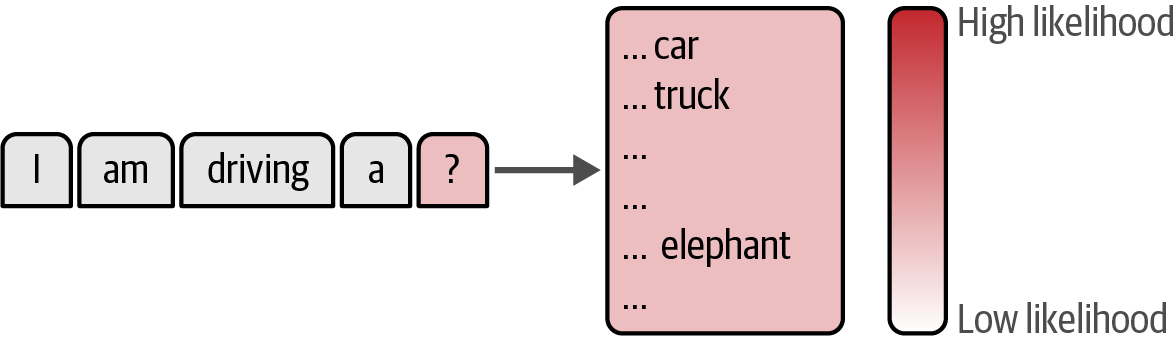

Each time an LLM needs to generate a token, it assigns a likelihood number to each possible token to generate different responses for the exact same prompt.

Figure 59. The model chooses the next token to generate based on their likelihood scores.

Figure 59. The model chooses the next token to generate based on their likelihood scores. -

The

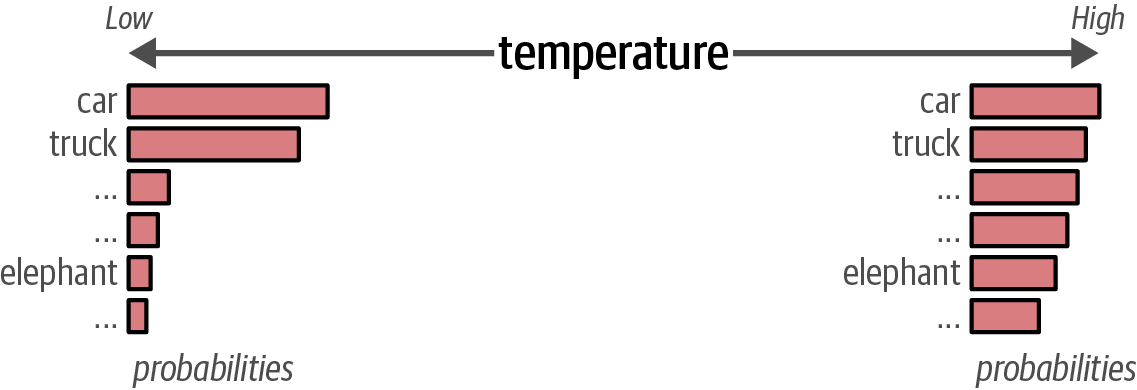

temperaturecontrols the randomness or creativity of the text generated; a higher temperature increases creativity by making less probable tokens more likely, while a temperature of0results in deterministic output by always selecting the most probable token.# using a high temperature output = pipe(messages, do_sample=True, temperature=1) print(output[0]["generated_text"]) Figure 60. A higher temperature increases the likelihood that less probable tokens are generated and vice versa.

Figure 60. A higher temperature increases the likelihood that less probable tokens are generated and vice versa. -

The

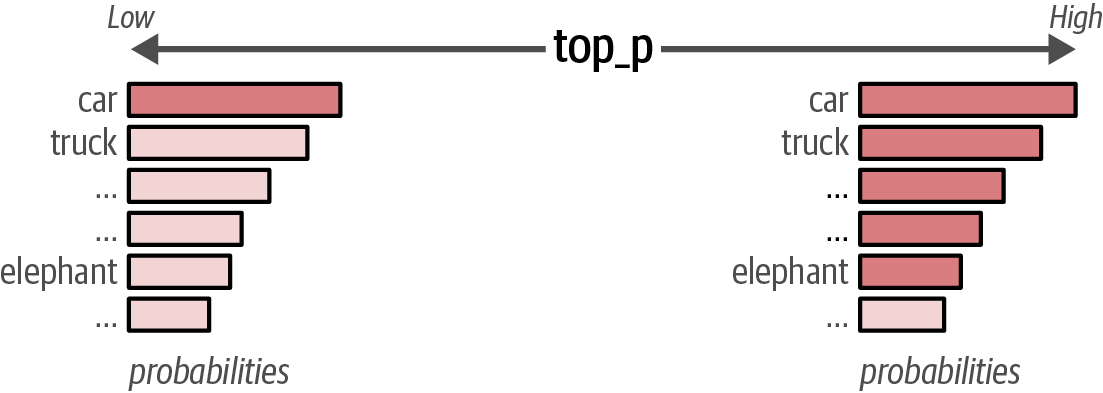

top-p, or nucleus sampling, is a technique that controls the subset of tokens (the nucleus) an LLM considers for generation by including tokens until their cumulative probability reaches a specified threshold.For instance, if

top_pis set to0.1, the model will consider tokens until their cumulative probability reaches 10%, and iftop_pis set to1, all tokens will be considered.# using a high top_p output = pipe(messages, do_sample=True, top_p=1) print(output[0]["generated_text"]) Figure 61. A higher top_p increases the number of tokens that can be selected to generate and vice versa.

Figure 61. A higher top_p increases the number of tokens that can be selected to generate and vice versa. -

The

top_kparameter directly limits the number of most probable tokens an LLM considers; setting it to 100 restricts the selection to only the top 100 tokens.Table 1. Use case examples when selecting values for temperature and top_p. Example use case temperature top_p Description Brainstorming session

High

High

High randomness with large pool of potential tokens. The results will be highly diverse, often leading to very creative and unexpected results.

Email generation

Low

Low

Deterministic output with high probable predicted tokens. This results in predictable, focused, and conservative outputs.

Creative writing

High

Low

High randomness with a small pool of potential tokens. This combination produces creative outputs but still remains coherent.

Translation

Low

High

Deterministic output with high probable predicted tokens. Produces coherent output with a wider range of vocabulary, leading to outputs with linguistic variety.

6.2. Prompt Engineering

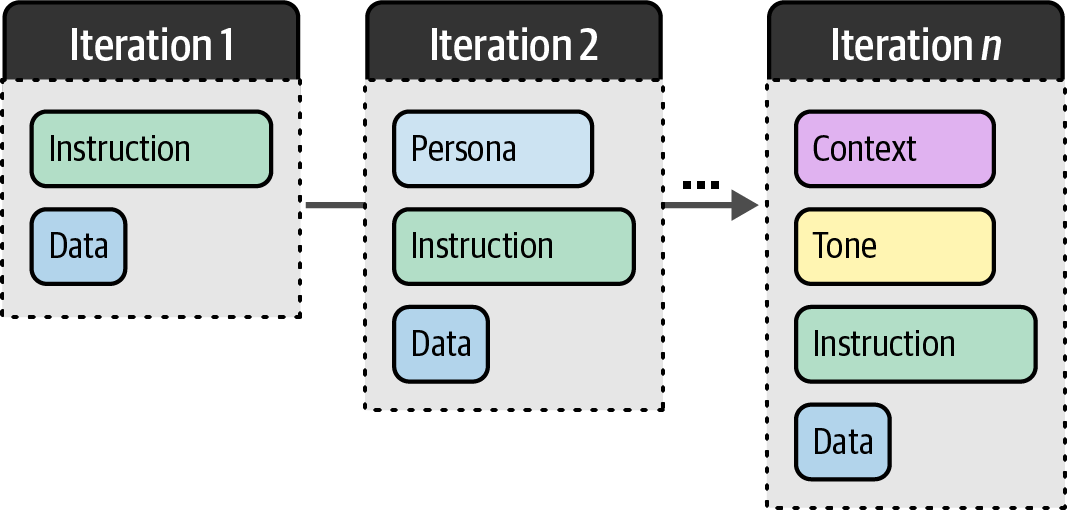

Prompt engineering is the iterative process of designing effective prompts, including questions, statements, or instructions, to elicit useful and relevant outputs from LLMs through experimentation and optimization.

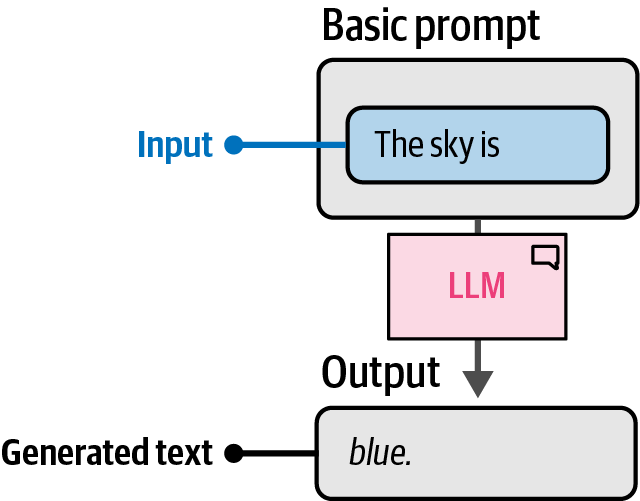

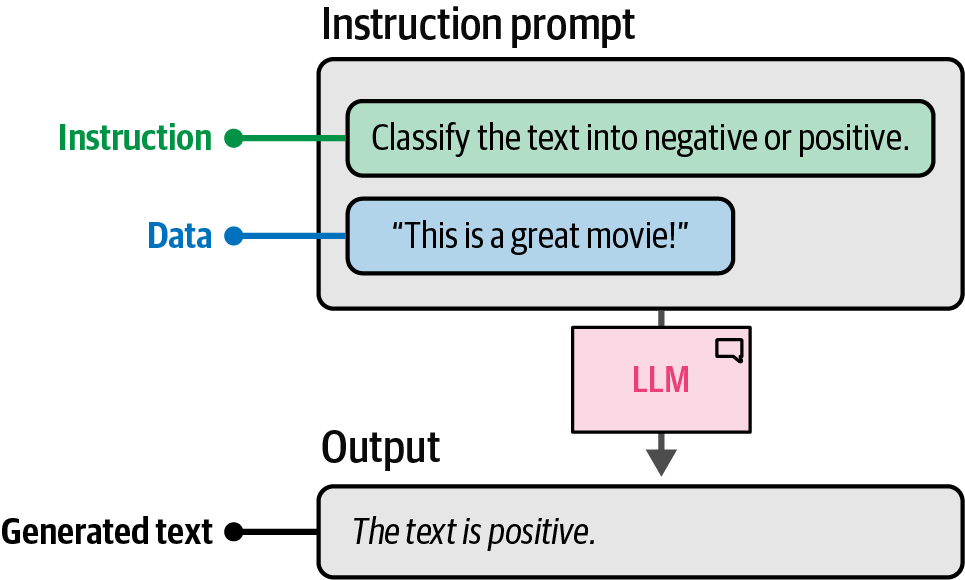

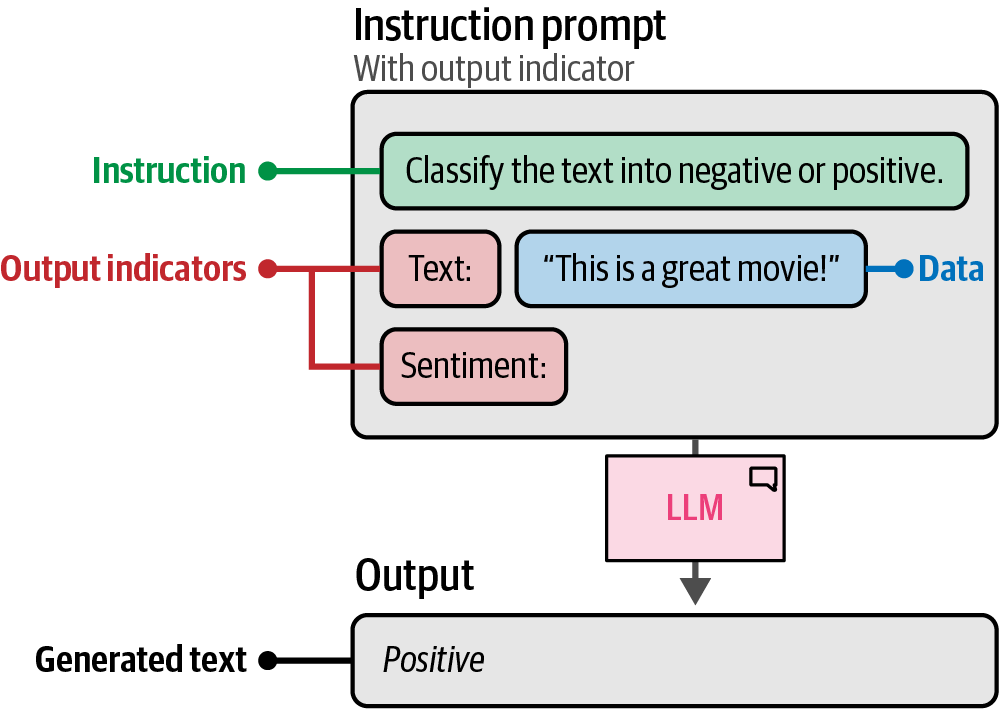

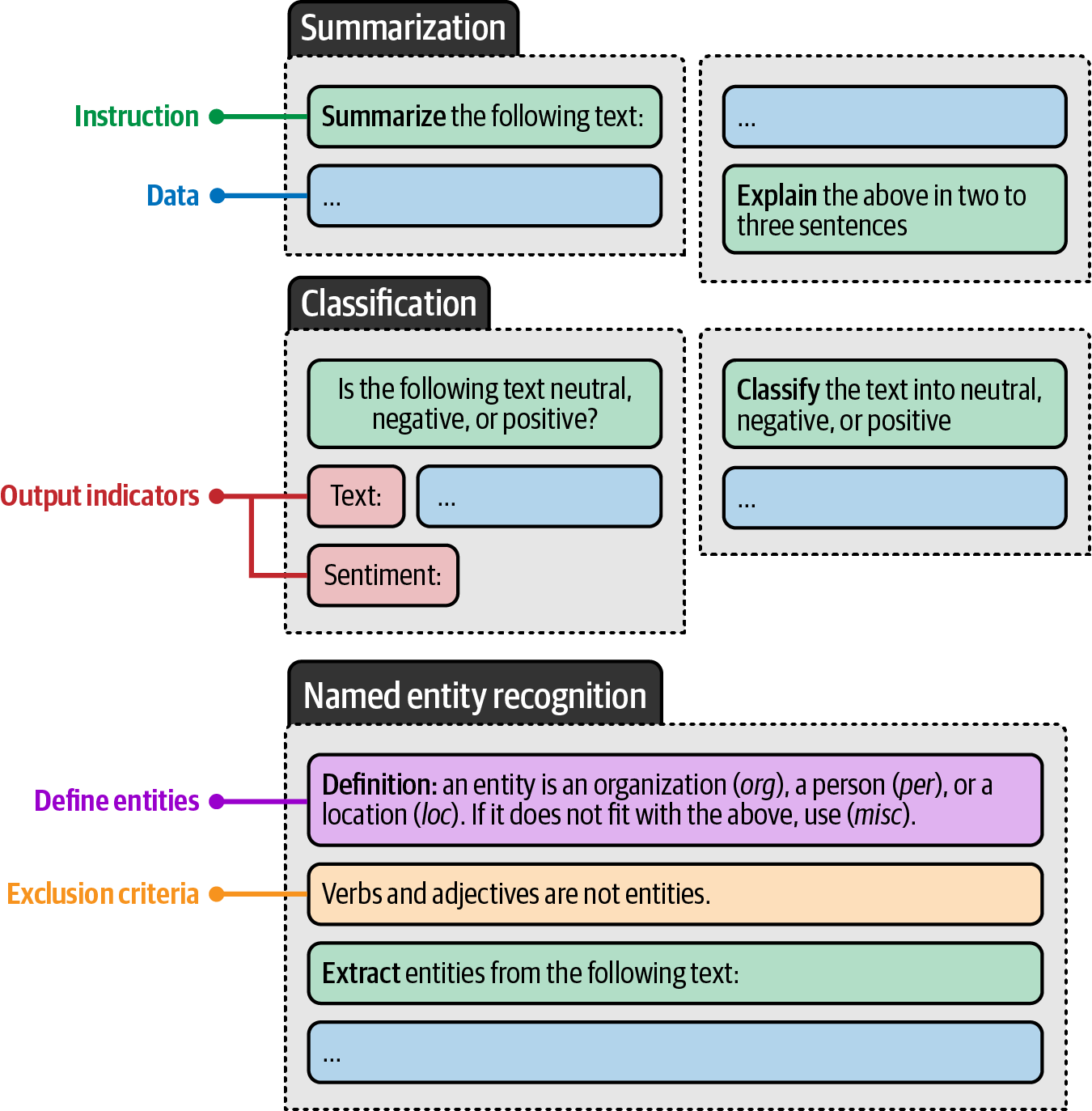

A prompt is the input provided to a large language model to elicit a desired response, which generally consists of multiple components such as instructions, data, and output indicators, and can be as complex as needed.

6.3. Instruction-Based Prompting

Instruction-based prompting is a method of prompting where the primary goal is to have the LLM answer a specific question or resolve a certain task by providing it with specific instructions.

Each of these tasks requires different prompting formats and more specifically, asking different questions of the LLM. A non-exhaustive list of the prompting techniques includes:

-

Specificity

Accurately describe the desired output, for example, instead of "Write a product description," ask "Write a product description in under two sentences using a formal tone."

Specificity is arguably the most important aspect; by restricting and specifying what the model should generate, there is a smaller chance of it generating something unrelated to a use case.

-

Hallucination

LLMs may generate incorrect information confidently, which is referred to as hallucination.

To reduce its impact, ask the LLM to only generate an answer if it knows the answer, and to respond with "I don’t know" if it does not know the answer.

-

Order

Either begin or end the prompt with the instruction.

Especially with long prompts, information in the middle is often forgotten.

LLMs tend to focus on information either at the beginning of a prompt (primacy effect) or the end of a prompt (recency effect).

6.4. Advanced Prompt Engineering

While creating a good prompt might initially seem straightforward—just ask a specific question, be accurate, and add examples—prompting can quickly become complex and is often an underestimated aspect of effectively using LLMs.

6.4.1. Prompt Components

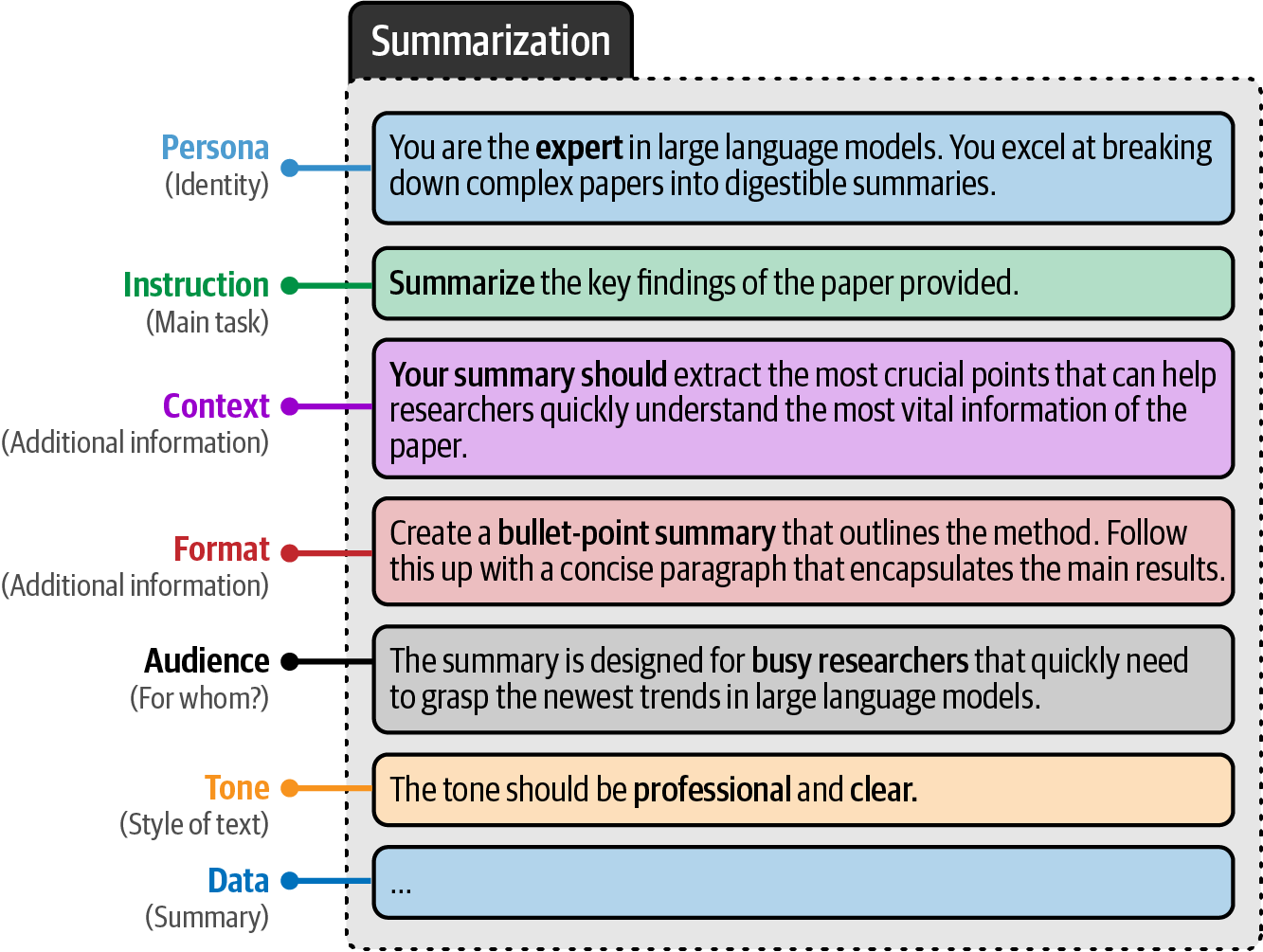

A prompt generally consists of multiple components, such as instruction, data, and output indicators, and other advanced components that can quickly make a prompt quite complex.

# prompt components

persona = 'You are an expert in Large Language models. You excel at breaking down complex papers into digestible summaries.\n'

instruction = 'Summarize the key findings of the paper provided.\n'

context = 'Your summary should extract the most crucial points that can help researchers quickly understand the most vital information of the paper.\n'

data_format = 'Create a bullet-point summary that outlines the method. Follow this up with a concise paragraph that encapsulates the main results.\n'

audience = 'The summary is designed for busy researchers that quickly need to grasp the newest trends in Large Language Models.\n'

tone = 'The tone should be professional and clear.\n'

text = 'MY TEXT TO SUMMARIZE'

data = f'Text to summarize: {text}'

# the full prompt - remove and add pieces to view its impact on the generated output

query = persona + instruction + context + data_format + audience + tone + data6.4.2. In-Context Learning: Providing Examples

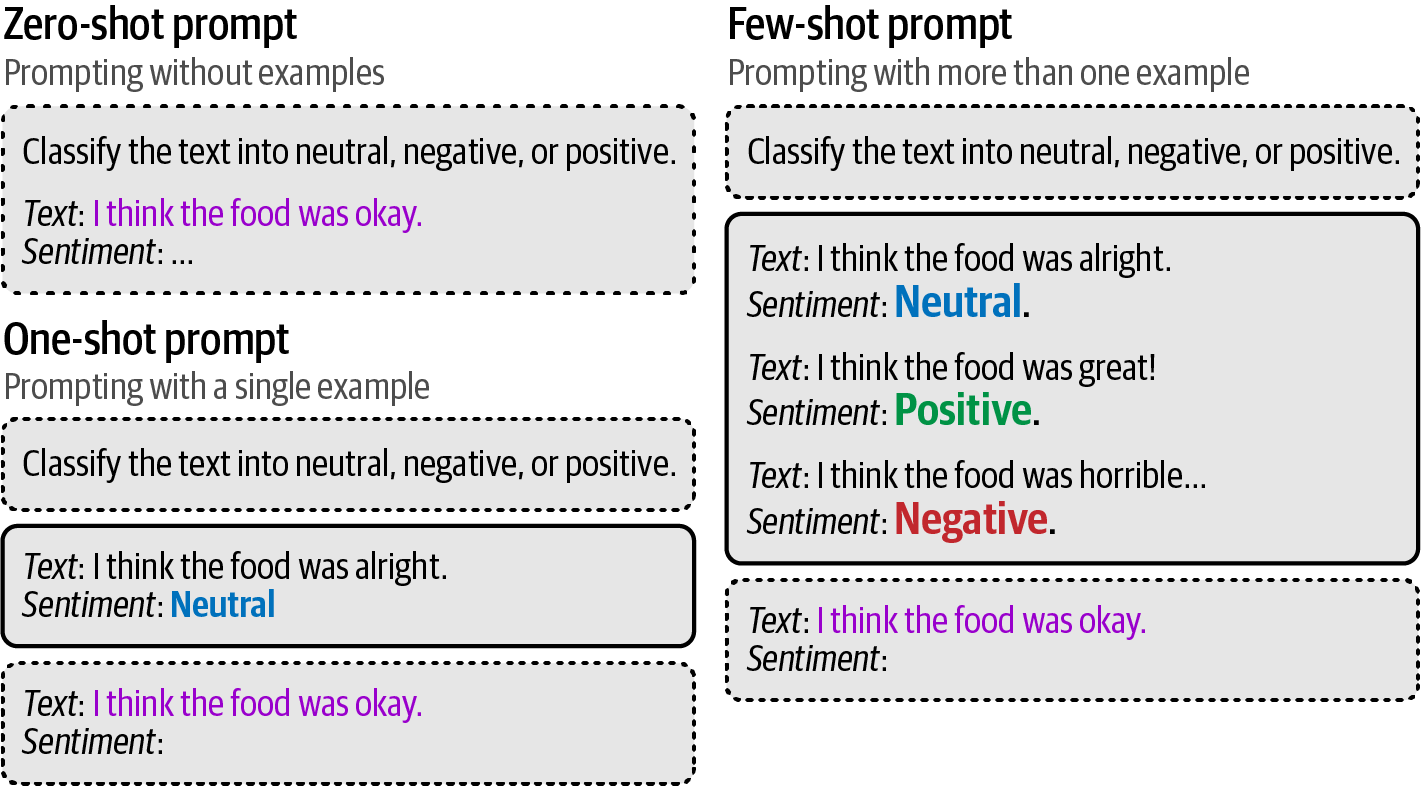

In-context learning (ICL) is a prompting technique that demonstrates the desired task to an LLM through direct examples, rather than solely describing it to provide the model with context to learn from within the prompt.

Zero-shot prompting does not leverage examples, one-shot prompts use a single example, and few-shot prompts use two or more examples.

# use a single example of using the made-up word in a sentence

one_shot_prompt = [

{

'role': 'user',

'content': 'A \'Gigamuru\' is a type of Japanese musical instrument. An example of a sentence that uses the word Gigamuru is:',

},

{

'role': 'assistant',

'content': 'I have a Gigamuru that my uncle gave me as a gift. I love to play it at home.',

},

{

'role': 'user',

'content': 'To \'screeg\' something is to swing a sword at it. An example of a sentence that uses the word screeg is:',

},

]

print(tokenizer.apply_chat_template(one_shot_prompt, tokenize=False))<|user|>A 'Gigamuru' is a type of Japanese musical instrument. An example of a sentence that uses the word Gigamuru is:<|end|><|assistant|>I have a Gigamuru that my uncle gave me as a gift. I love to play it at home.<|end|><|user|>To 'screeg' something is to swing a sword at it. An example of a sentence that uses the word screeg is:<|end|><|endoftext|># generate the output

outputs = pipe(one_shot_prompt)

print(outputs[0]["generated_text"])In the medieval fantasy novel, the knight would screeg his enemies with his gleaming sword.6.4.3. Chain Prompting: Breaking up the Problem

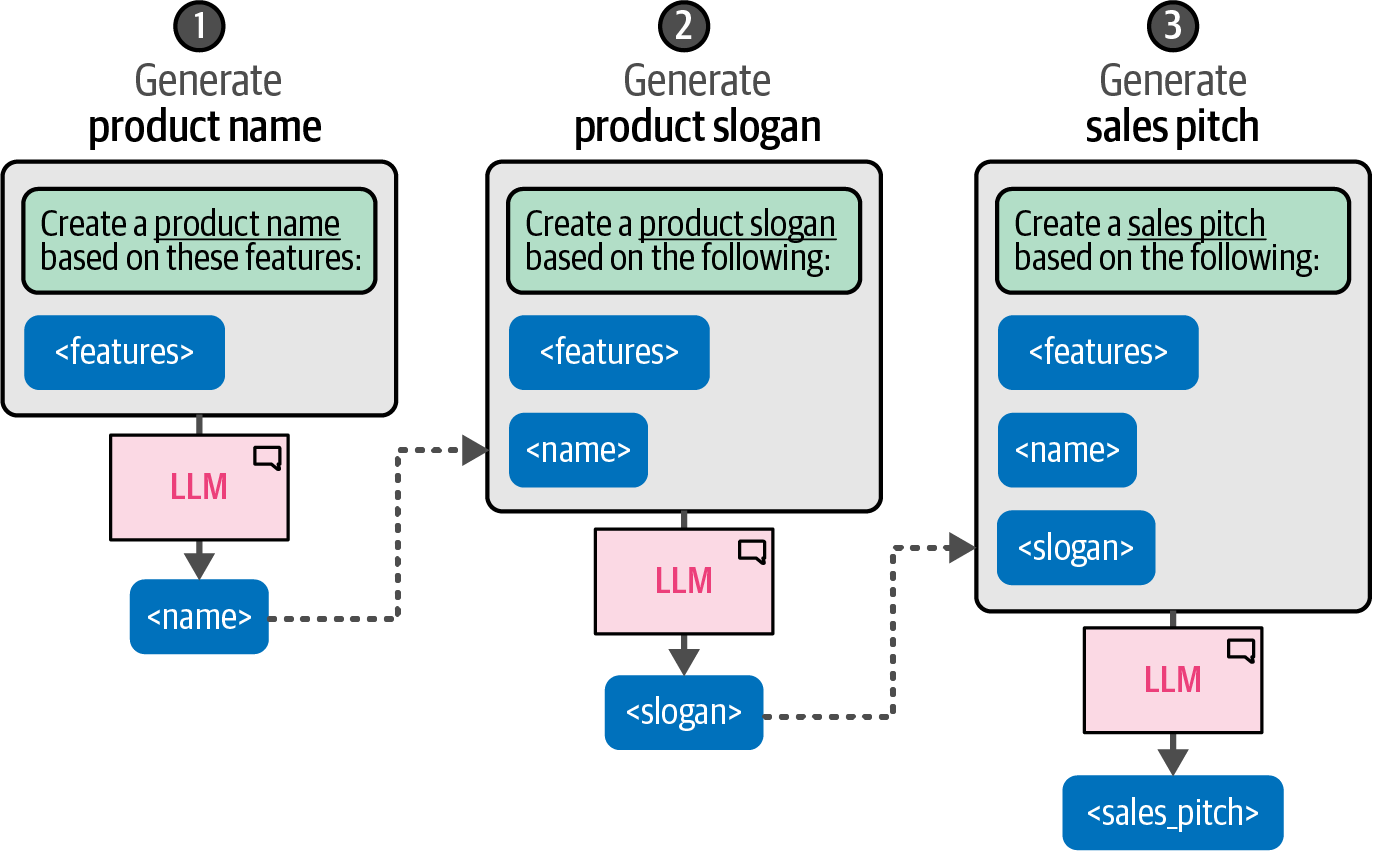

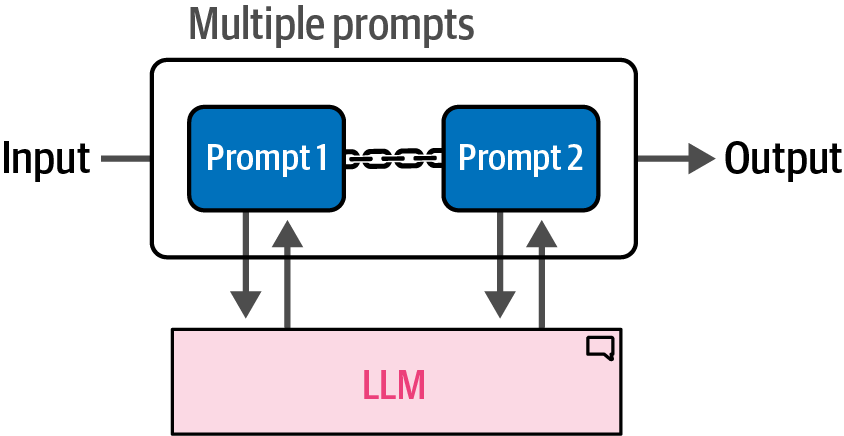

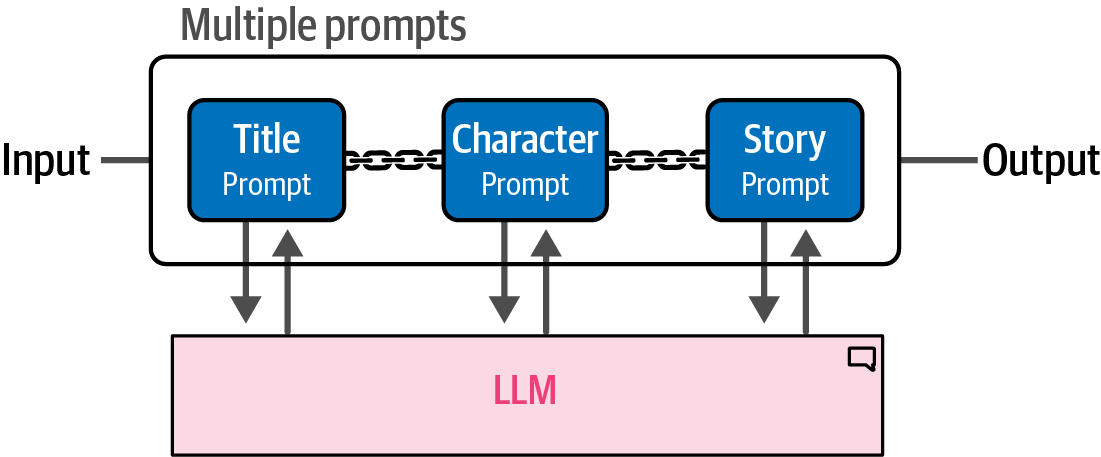

Prompt chaining is a technique that addresses complex tasks by breaking them down across multiple prompts, where the output of one prompt serves as the input for the subsequent prompt, creating a sequence of interactions that collectively solve the problem.

# create name and slogan for a product

product_prompt = [

{

"role": "user",

"content": "Create a name and slogan for a chatbot that leverages LLMs.",

}

]

outputs = pipe(product_prompt)

product_description = outputs[0]["generated_text"]

print(product_description)

# based on a name and slogan for a product, generate a sales pitch

sales_prompt = [

{

"role": "user",

"content": f"Generate a very short sales pitch for the following product: '{product_description}'",

}

]

outputs = pipe(sales_prompt)

sales_pitch = outputs[0]["generated_text"]

print(sales_pitch)Name: LexiBot

Slogan: "Unlock the Power of Language with LexiBot – Your AI Conversation Partner!"

Discover the future of communication with LexiBot – your AI conversation partner. Say goodbye to language barriers and hello to seamless, intelligent interactions. LexiBot is here to unlock the power of language, making every conversation more engaging and productive. Embrace the power of AI with LexiBot today!6.5. Reasoning with Generative Models

Reasoning is a core component of human intelligence and is often compared to the emergent behavior of LLMs that often resembles reasoning (through memorization of training data and pattern matching, rather than true reasoning).

Human reasoning can be broadly categorized into two systems.

-

System 1 thinking represents an automatic, intuitive, and near-instantaneous process, which shares similarities with generative models that automatically generate tokens without any self-reflective behavior.

-

System 2 thinking, in contrast, is a conscious, slow, and logical process, akin to brainstorming and self-reflection.

The system 2 way of thinking, which tends to produce more thoughtful responses than system 1 thinking, would be emulated by giving a generative model the ability to mimic a form of self-reflection.

6.5.1. Chain-of-Thought: Think Before Answering

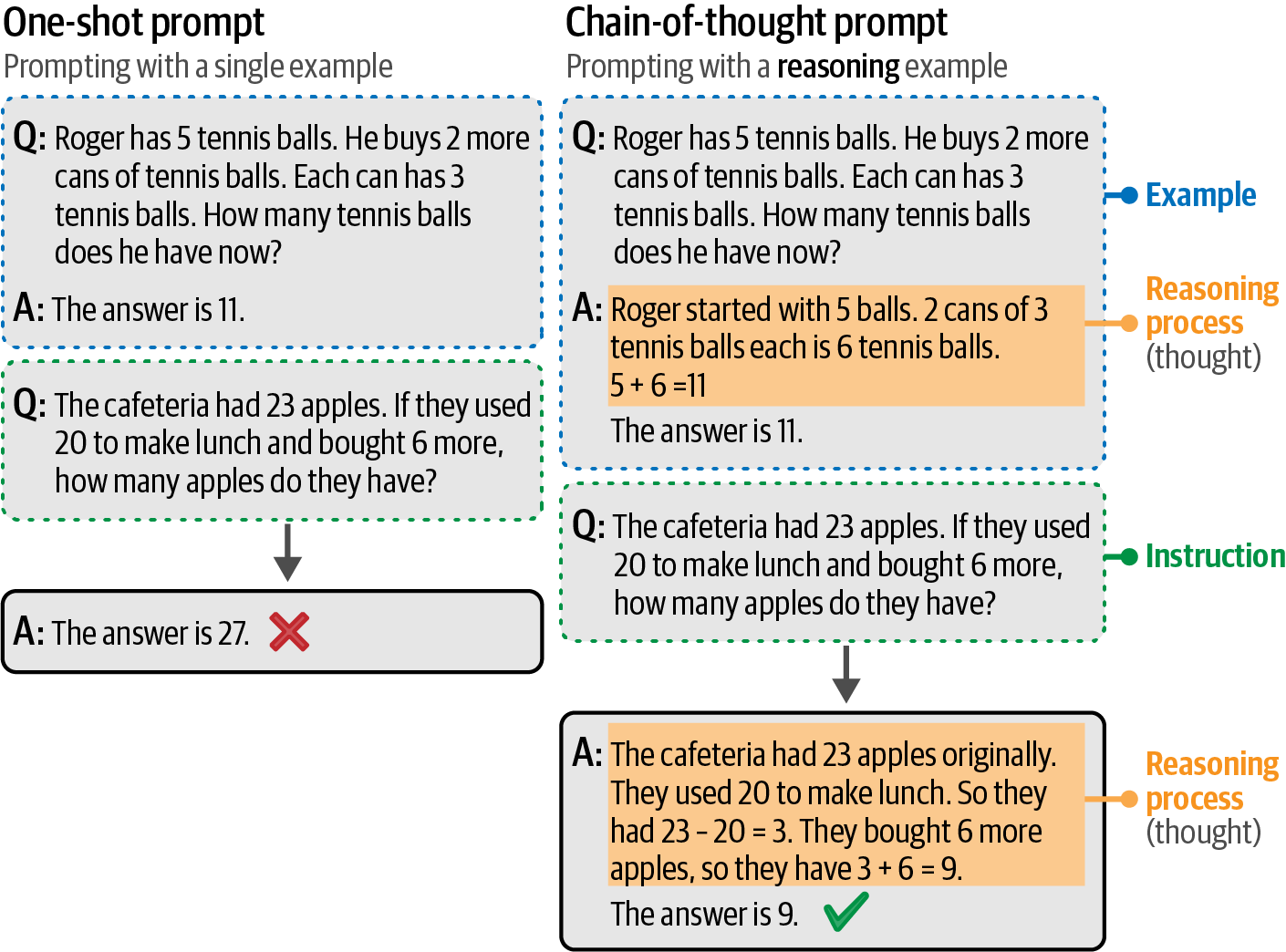

Chain-of-thought (CoT) prompting is a technique that allows large language models (LLMs) to solve a problem as a series of intermediate steps ("thoughts") before giving a final answer.

-

Although chain-of-thought is a great method for enhancing the output of a generative model, it does require one or more examples of reasoning in the prompt, which the user might not have access to.

Figure 70. Chain-of-thought prompting uses reasoning examples to persuade the generative model to use reasoning in its answer.

Figure 70. Chain-of-thought prompting uses reasoning examples to persuade the generative model to use reasoning in its answer.# answering with chain-of-thought cot_prompt = [ { "role": "user", "content": "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has 3 tennis balls. How many tennis balls does he have now?", }, { "role": "assistant", "content": "Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. 5 + 6 = 11. The answer is 11.", }, { "role": "user", "content": "The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, how many apples do they have?", }, ] # generate the output outputs = pipe(cot_prompt) print(outputs[0]["generated_text"])The cafeteria started with 23 apples. They used 20, so they had 23 - 20 = 3 apples left. Then they bought 6 more, so they now have 3 + 6 = 9 apples. The answer is 9. -

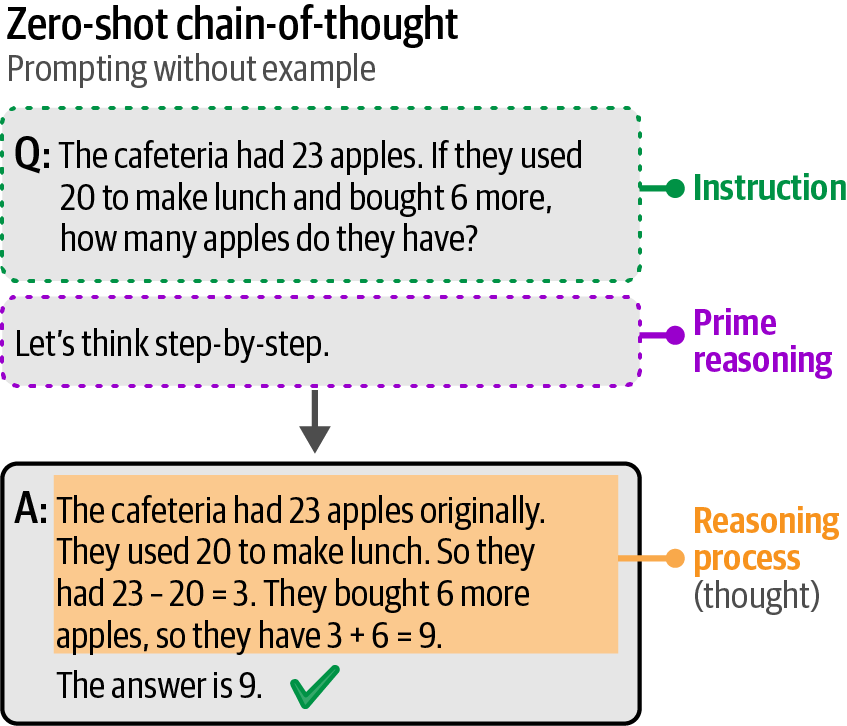

Instead of providing examples, zero-shot chain-of-thought allows a generative model to provide reasoning without explicit examples by directly prompting it for its thought process.

Although the prompt “Let’s think step by step” can improve the output, you are not constrained by this exact formulation. Alterna‐ tives exist like “Take a deep breath and think step-by-step” and “Let’s work through this problem step-by-step.”

Figure 71. Chain-of-thought prompting without using examples. Instead, it uses the phrase “Let’s think step-by-step” to prime reasoning in its answer.

Figure 71. Chain-of-thought prompting without using examples. Instead, it uses the phrase “Let’s think step-by-step” to prime reasoning in its answer.# zero-shot chain-of-thought prompt zeroshot_cot_prompt = [ { "role": "user", "content": "The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, how many apples do they have? Let's think step-by-step.", } ] # generate the output outputs = pipe(zeroshot_cot_prompt) print(outputs[0]["generated_text"])Sure, let's break it down step-by-step: 1. The cafeteria starts with 23 apples. 2. They use 20 apples to make lunch. 3. After using 20 apples, they have: 23 apples - 20 apples = 3 apples left. 4. They then buy 6 more apples. 5. Adding the 6 new apples to the 3 apples they have left: 3 apples + 6 apples = 9 apples. So, the cafeteria now has 9 apples.

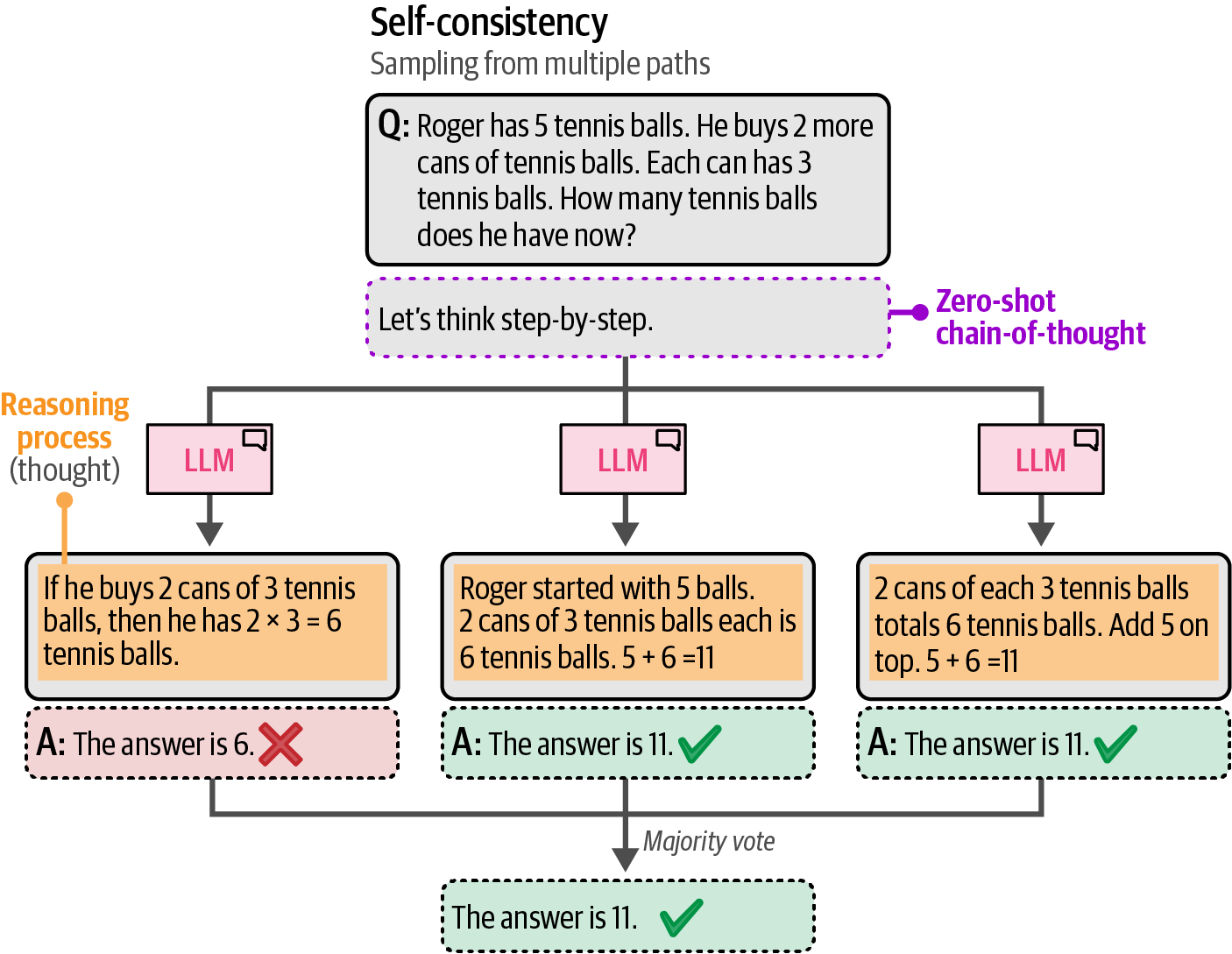

6.5.2. Self-Consistency: Sampling Outputs

Self-consistency is a technique that reduces randomness in generative models by prompting them multiple times with the same input, using varied sampling parameters like temperature and top_p to enhance diversity, and selecting the majority result as the final answer for robustness.

# zero-shot chain-of-thought prompt

zeroshot_cot_prompt = [

{

"role": "user",

"content": "The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, how many apples do they have? Let's think step-by-step.",

}

]

# self-consistency settings

num_samples = 3

temperature = [0.3, 0.5, 0.7]

top_p = [0.8, 0.85, 0.9]

# extract final numerical answers

def extract_answer(text):

numbers = re.findall(r"\d+", text) # find all numbers in the output

return (

numbers[-1] if numbers else None

) # take the last number as the final answer

# generate multiple answers

answers = []

for i in range(num_samples):

outputs = pipe(

zeroshot_cot_prompt,

do_sample=True,

temperature=temperature[i % len(temperature)],

top_p=top_p[i % len(top_p)],

)

response = outputs[0]["generated_text"].strip()

print(f'\n{response}'

final_answer = extract_answer(response)

if final_answer:

answers.append(final_answer)

# perform majority voting on numerical answers

most_common_answer, count = Counter(answers).most_common(1)[0]

print("\ngenerated answers:")

for i, ans in enumerate(answers, 1):

print(f"{i}. {ans}")

print(f"\nfinal answer (majority vote): {most_common_answer}")Sure, let's break it down step-by-step:

1. The cafeteria starts with 23 apples.

2. They use 20 apples to make lunch.

3. After using 20 apples, they have:

23 apples - 20 apples = 3 apples left.

4. They then buy 6 more apples.

5. Adding the 6 apples to the 3 apples they have left gives:

3 apples + 6 apples = 9 apples.

So, the cafeteria

Sure, let's break it down step-by-step:

1. The cafeteria starts with 23 apples.

2. They use 20 apples to make lunch.

3. After using 20 apples, they have:

23 apples - 20 apples = 3 apples left.

4. They then buy 6 more apples.

5. Adding the 6 new apples to the 3 apples they have left, they now have:

3 apples + 6 apples = 9 apples.

Sure, let's break it down step by step:

1. The cafeteria starts with 23 apples.

2. They use 20 apples to make lunch.

- 23 apples - 20 apples = 3 apples remaining.

3. They then buy 6 more apples.

- 3 apples + 6 apples = 9 apples.

So, after these transactions, the cafeteria has 9 apples.

generated answers:

1. 9

2. 9

3. 9

final answer (majority vote): 96.5.3. Tree-of-Thought: Exploring Intermediate Steps

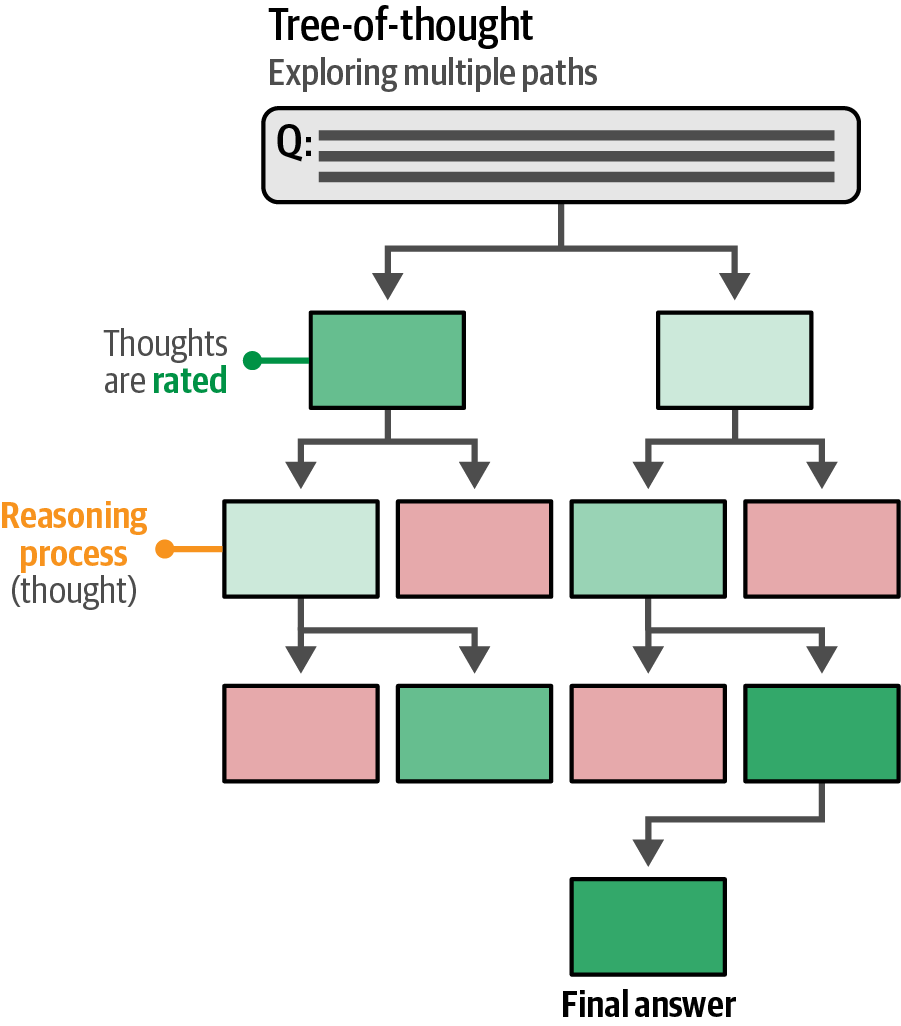

Tree-of-Thought (ToT) is a problem-solving technique structuring reasoning as a decision tree that explores multiple potential solutions at each step, evaluates them, and branches forward with the most promising, similar to brainstorming, to enhance the final outcome.

Tree-of-Thought excels at tasks requiring exploration of multiple paths, such as creative writing, but its reliance on numerous generative model calls can be slow.

A more efficient approach involves prompting the model to simulate a multi-expert discussion to reach a consensus, mimicking the ToT framework with a single call.

# zero-shot tree-of-thought prompt

zeroshot_tot_prompt = [

{

'role': 'user',

'content': "Imagine three different experts are answering this question. All experts will write down 1 step of their thinking, then share it with the group. Then all experts will go on to the next step, etc. If any expert realizes they're wrong at any point then they leave. The question is 'The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, how many apples do they have?' Make sure to discuss the results.",

}

]

# generate the output

outputs = pipe(zeroshot_tot_prompt)

print(outputs[0]['generated_text'])**Expert 1:**

Step 1: Start with the initial number of apples, which is 23.

**Expert 2:**

Step 1: Subtract the apples used for lunch, which is 20, from the initial 23 apples. This leaves 3 apples.

**Expert 3:**

Step 1: Add the 6 apples that were bought to the remaining 3 apples. This results in 9 apples.

**Discussion:**

All three experts agree on the final result. The cafeteria started with 23 apples, used 20 for lunch, leaving them with 3 apples. Then, they bought 6 more apples, bringing the total to 9 apples. Therefore, the cafeteria now has 9 apples.6.6. Output Verification

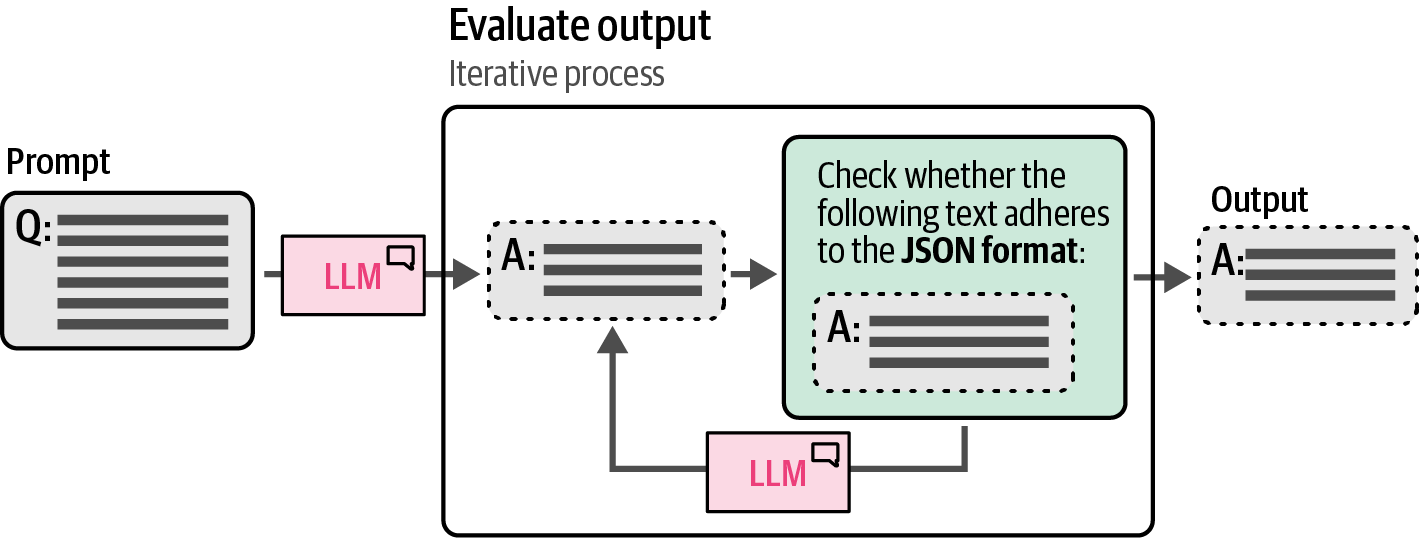

Systems and applications built with generative models might eventually end up in production. When that happens, it is important to verify and control the output of the model to prevent breaking the application and to create a robust generative AI application.

-

By default, most generative models create free-form text without adhering to specific structures other than those defined by natural language.

Some use cases require their output to be structured in certain formats, like JSON.

-

Even allowing the model to generate structured output, it still has the capability to freely generate its content.

For instance, when a model is asked to output either one of two choices, it should not come up with a third.

-

Some open source generative models have no guardrails and will generate outputs that do not consider safety or ethical considerations.

For instance, use cases might require the output to be free of profanity, personally identifiable information (PII), bias, cultural stereotypes, etc.

-

Many use cases require the output to adhere to certain standards or performance.

The aim is to double-check whether the generated information is factually accurate, coherent, or free from hallucination.

Generally, there are three ways of controlling the output of a generative model:

-

Examples: Provide a number of examples of the expected output.

-

Grammar: Control the token selection process.

-

Fine-tuning: Tune a model on data that contains the expected output.

6.6.1. Providing Examples

A simple and straightforward method to fix the output is to provide the generative model with examples of what the output should look like.

The few-shot learning is a helpful technique that guides the output of the generative model, which can be generalized to guide the structure of the output as well.

| An important note here is that it is still up to the model whether it will adhere to your suggested format or not. Some models are better than others at following instructions. |

# zero-shot learning: providing no in-context examples

zeroshot_prompt = [

{

'role': 'user',

'content': 'Create a character profile for an RPG game in JSON format.',

}

]

# generate the output

outputs = pipe(zeroshot_prompt)

print(outputs[0]['generated_text'])

# one-shot learning: providing a single in-context example of the desired output structure

one_shot_template = '''Create a short character profile for an RPG game. Make

sure to only use this format:

{

"description": "A SHORT DESCRIPTION",

"name": "THE CHARACTER'S NAME",

"armor": "ONE PIECE OF ARMOR",

"weapon": "ONE OR MORE WEAPONS"

}

'''

one_shot_prompt = [{'role': 'user', 'content': one_shot_template}]

# generate the output

outputs = pipe(one_shot_prompt)

print(outputs[0]['generated_text']){

"name": "Eldrin Shadowbane",

"class": "Rogue",

"level": 10,

"race": "Elf",

"background": "Eldrin was born into a noble family in the elven city of Luminara. He was trained in the arts of stealth and combat from a young age. However, Eldrin always felt a deep connection to the shadows and the mysteries of the night. He left his family to become a rogue

{

"description": "A skilled archer with a mysterious past, known for their agility and precision.",

"name": "Lyra Swiftarrow",

"armor": "Leather bracers and a lightweight leather tunic",

"weapon": "Longbow, throwing knives"

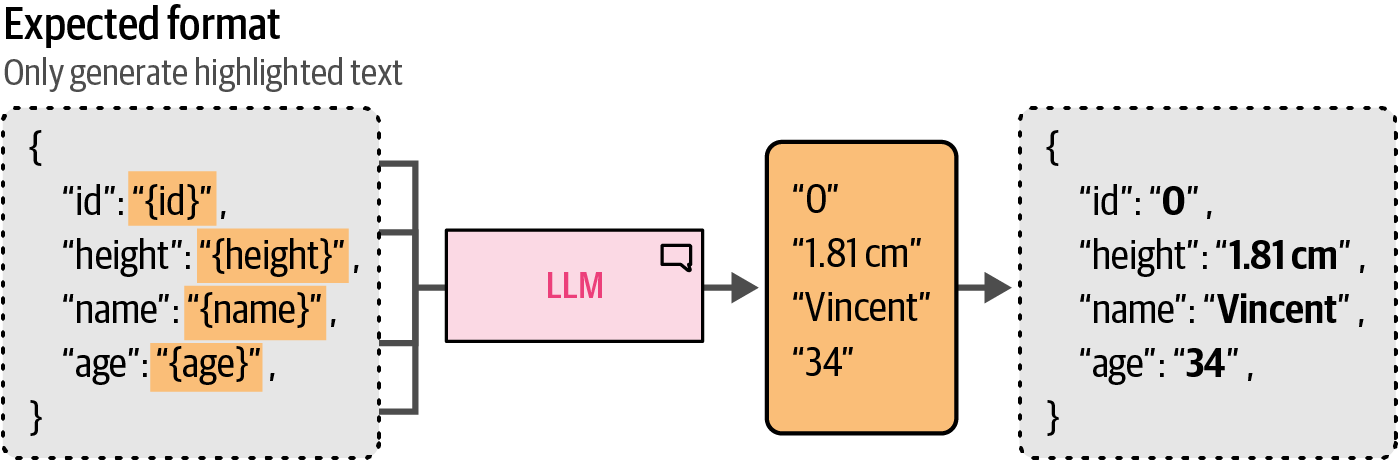

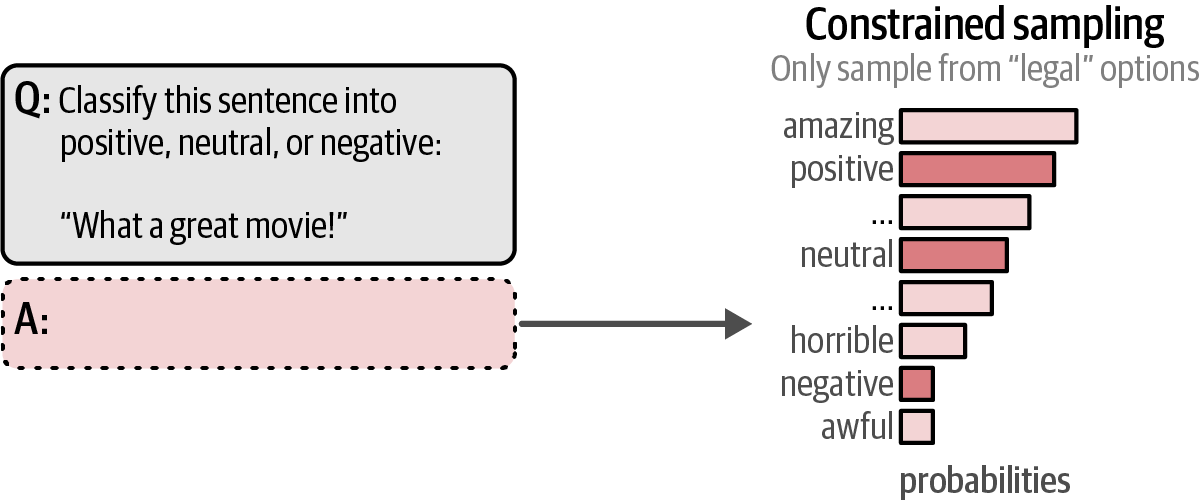

}6.6.2. Grammar: Constrained Sampling

Few-shot learning has a significant disadvantage: explicitly preventing certain output is not possible. Although the model is guided and given instructions, it might still not follow them completely.

Grammar-constrained sampling is a technique used during the token generation process of a Large Language Model (LLM) that enforces adherence to predefined grammars or rules when selecting the next token.

Instead, packages have been rapidly developed to constrain and validate the output of generative models, like Guidance, Guardrails, and LMQL, which leverage generative models to validate their own output.

Like transformers, llama-cpp-python is a library, generally used to efficiently load and use compressed models (quantization) in the GGUF format but can also be used to apply a JSON grammar.

from llama_cpp.llama import Llama

# load the Phi-3 language model using the llama-cpp-python library

llm = Llama.from_pretrained(

repo_id="microsoft/Phi-3-mini-4k-instruct-gguf",

filename="*fp16.gguf",

n_gpu_layers=-1,

n_ctx=2048,

verbose=False,

)

# generate output using the loaded language model for a chat completion task

output = llm.create_chat_completion(

messages=[

{

"role": "user",

"content": "Create a warrior for an RPG in JSON for mat.",

},

],

response_format={"type": "json_object"}, # specify the response_format as a JSON

temperature=0,

)['choices'][0]['message']["content"]

import json

# check whether the output actually is JSON

json_output = json.dumps(json.loads(output), indent=4)

print(json_output){

"warrior": {

"name": "Aldarion the Brave",

"class": "Warrior",

"level": 10,

"attributes": {

"strength": 18,

"dexterity": 10,

"constitution": 16,

"intelligence": 8,

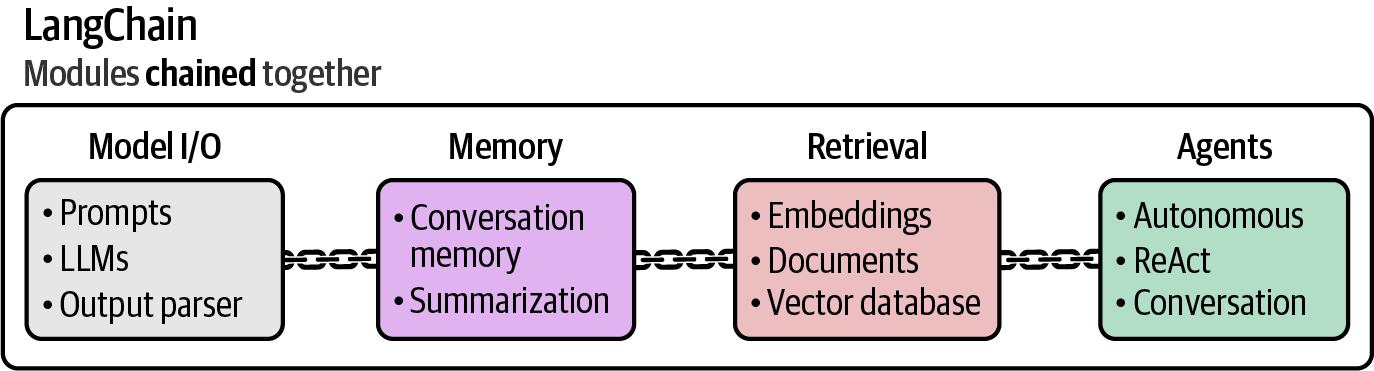

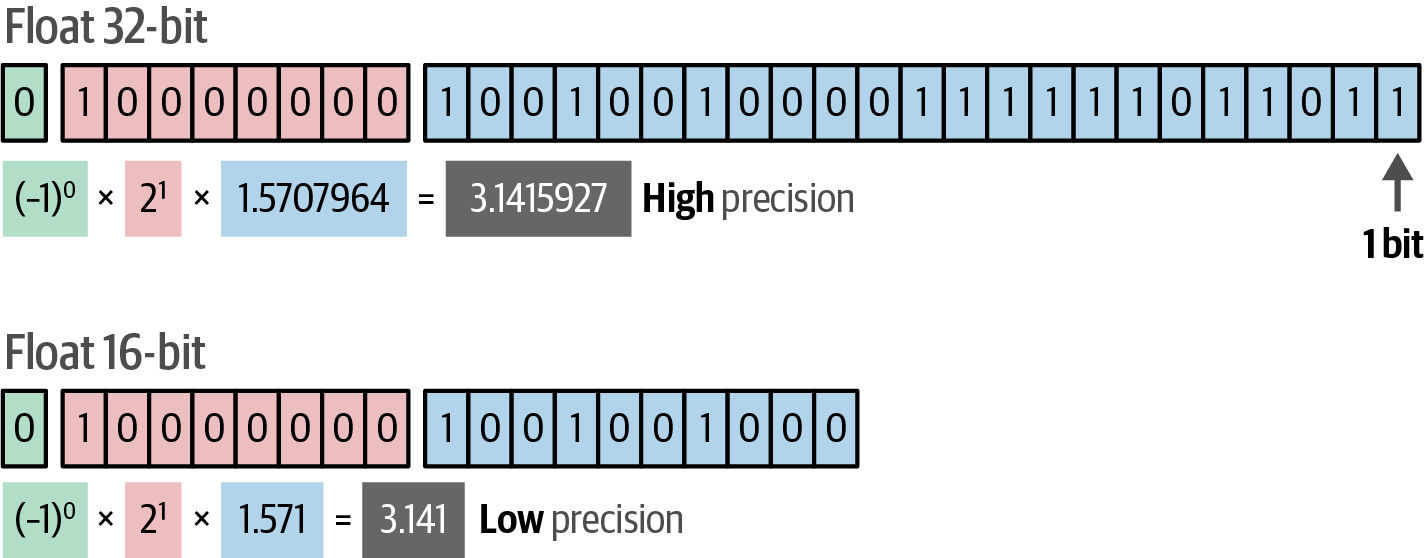

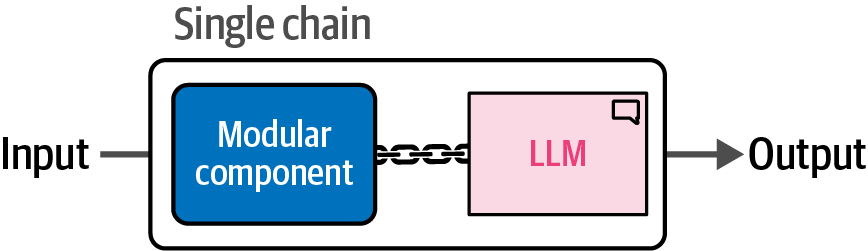

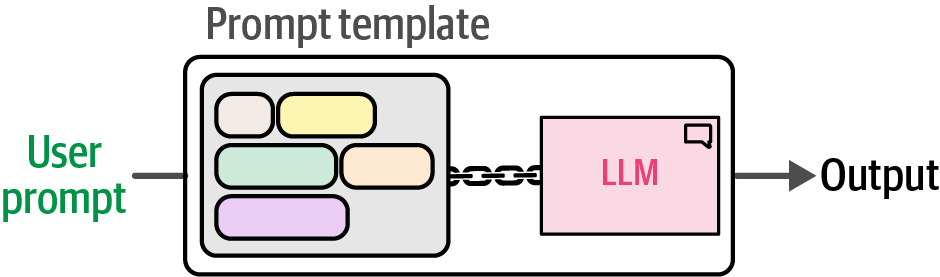

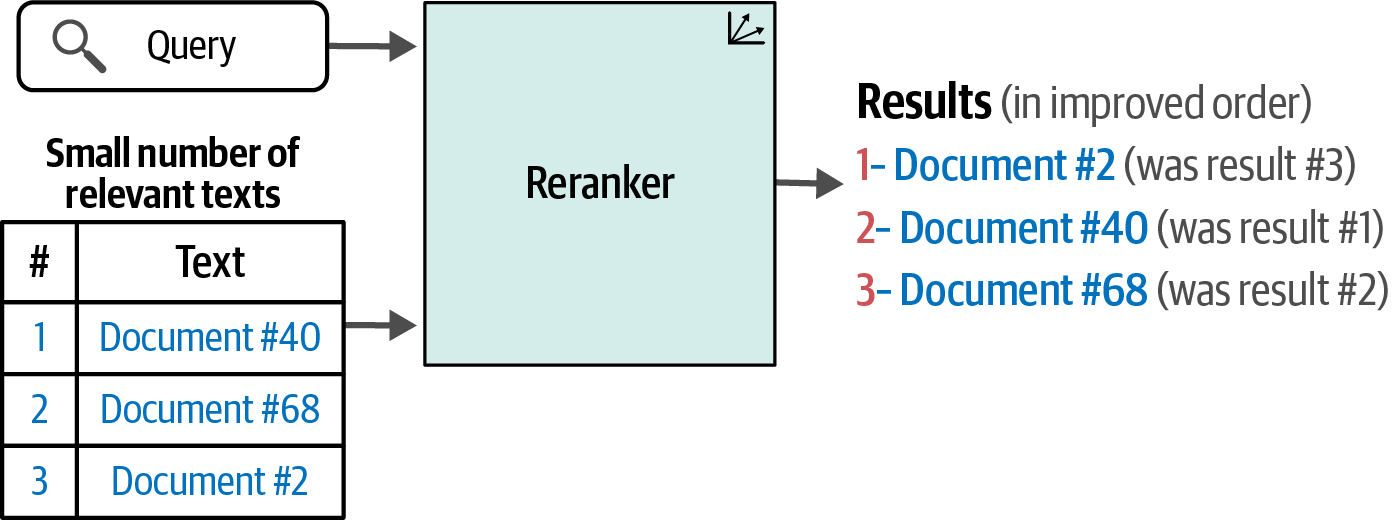

"wisdom": 10,